916 Repositories

Python Non-Local-Sparse-Attention Libraries

Drone-based Joint Density Map Estimation, Localization and Tracking with Space-Time Multi-Scale Attention Network

DroneCrowd Paper Detection, Tracking, and Counting Meets Drones in Crowds: A Benchmark. Introduction This paper proposes a space-time multi-scale atte

External Attention Network

Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks paper : https://arxiv.org/abs/2105.02358 Jittor code will come soon

.png)

Share your files on local network just by one click.

Share Your Folder This script helps you to share any folder anywhere on your local network. it's possible to use the script on both: Windows (Click he

Implementation of Kaneko et al.'s MaskCycleGAN-VC model for non-parallel voice conversion.

MaskCycleGAN-VC Unofficial PyTorch implementation of Kaneko et al.'s MaskCycleGAN-VC (2021) for non-parallel voice conversion. MaskCycleGAN-VC is the

Block Sparse movement pruning

Movement Pruning: Adaptive Sparsity by Fine-Tuning Magnitude pruning is a widely used strategy for reducing model size in pure supervised learning; ho

Exploring whether attention is necessary for vision transformers

Do You Even Need Attention? A Stack of Feed-Forward Layers Does Surprisingly Well on ImageNet Paper/Report TL;DR We replace the attention layer in a v

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

Source codes for the paper "Local Additivity Based Data Augmentation for Semi-supervised NER"

LADA This repo contains codes for the following paper: Jiaao Chen*, Zhenghui Wang*, Ran Tian, Zichao Yang, Diyi Yang: Local Additivity Based Data Augm

Twins: Revisiting the Design of Spatial Attention in Vision Transformers

Twins: Revisiting the Design of Spatial Attention in Vision Transformers Very recently, a variety of vision transformer architectures for dense predic

Research shows Google collects 20x more data from Android than Apple collects from iOS. Block this non-consensual telemetry using pihole blocklists.

pihole-antitelemetry Research shows Google collects 20x more data from Android than Apple collects from iOS. Block both using these pihole lists. Proj

Reimplementation of the paper `Human Attention Maps for Text Classification: Do Humans and Neural Networks Focus on the Same Words? (ACL2020)`

Human Attention for Text Classification Re-implementation of the paper Human Attention Maps for Text Classification: Do Humans and Neural Networks Foc

TalkNet 2: Non-Autoregressive Depth-Wise Separable Convolutional Model for Speech Synthesis with Explicit Pitch and Duration Prediction.

TalkNet 2 [WIP] TalkNet 2: Non-Autoregressive Depth-Wise Separable Convolutional Model for Speech Synthesis with Explicit Pitch and Duration Predictio

OCTIS: Comparing Topic Models is Simple! A python package to optimize and evaluate topic models (accepted at EACL2021 demo track)

OCTIS : Optimizing and Comparing Topic Models is Simple! OCTIS (Optimizing and Comparing Topic models Is Simple) aims at training, analyzing and compa

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Implementation of Convolutional enhanced image Transformer

CeiT : Convolutional enhanced image Transformer This is an unofficial PyTorch implementation of Incorporating Convolution Designs into Visual Transfor

Implementation of Perceiver, General Perception with Iterative Attention in TensorFlow

Perceiver This Python package implements Perceiver: General Perception with Iterative Attention by Andrew Jaegle in TensorFlow. This model builds on t

The official pytorch implementation of our paper "Is Space-Time Attention All You Need for Video Understanding?"

TimeSformer This is an official pytorch implementation of Is Space-Time Attention All You Need for Video Understanding?. In this repository, we provid

NoPdb: Non-interactive Python Debugger

NoPdb: Non-interactive Python Debugger Installation: pip install nopdb Docs: https://nopdb.readthedocs.io/ NoPdb is a programmatic (non-interactive) d

OpenMMLab's Next Generation Video Understanding Toolbox and Benchmark

Introduction English | 简体中文 MMAction2 is an open-source toolbox for video understanding based on PyTorch. It is a part of the OpenMMLab project. The m

Submanifold sparse convolutional networks

Submanifold Sparse Convolutional Networks This is the PyTorch library for training Submanifold Sparse Convolutional Networks. Spatial sparsity This li

Code for the paper "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021)

MASTER-PyTorch PyTorch reimplementation of "MASTER: Multi-Aspect Non-local Network for Scene Text Recognition" (Pattern Recognition 2021). This projec

Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch

Cross Transformers - Pytorch (wip) Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch Install $ pip install cross-t

CondenseNet V2: Sparse Feature Reactivation for Deep Networks

CondenseNetV2 This repository is the official Pytorch implementation for "CondenseNet V2: Sparse Feature Reactivation for Deep Networks" paper by Le Y

QueryDet: Cascaded Sparse Query for Accelerating High-Resolution SmallObject Detection

QueryDet-PyTorch This repository is the official implementation of our paper: QueryDet: Cascaded Sparse Query for Accelerating High-Resolution Small O

Newt - a Gaussian process library in JAX.

Newt __ \/_ (' \`\ _\, \ \\/ /`\/\ \\ \ \\

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

![[WWW 2021 GLB] New Benchmarks for Learning on Non-Homophilous Graphs](https://user-images.githubusercontent.com/58995473/113487776-f3665d80-9487-11eb-8035-fbf5126f85df.png)

[WWW 2021 GLB] New Benchmarks for Learning on Non-Homophilous Graphs

New Benchmarks for Learning on Non-Homophilous Graphs Here are the codes and datasets accompanying the paper: New Benchmarks for Learning on Non-Homop

Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GanFormer and TransGan paper

TransGanFormer (wip) Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GansFormer and TransGan paper. I

monolish: MONOlithic Liner equation Solvers for Highly-parallel architecture

monolish is a linear equation solver library that monolithically fuses variable data type, matrix structures, matrix data format, vendor specific data transfer APIs, and vendor specific numerical algebra libraries.

Official PyTorch Code of GrooMeD-NMS: Grouped Mathematically Differentiable NMS for Monocular 3D Object Detection (CVPR 2021)

GrooMeD-NMS: Grouped Mathematically Differentiable NMS for Monocular 3D Object Detection GrooMeD-NMS: Grouped Mathematically Differentiable NMS for Mo

Code for "LoFTR: Detector-Free Local Feature Matching with Transformers", CVPR 2021

LoFTR: Detector-Free Local Feature Matching with Transformers Project Page | Paper LoFTR: Detector-Free Local Feature Matching with Transformers Jiami

💻 A fully functional local AWS cloud stack. Develop and test your cloud & Serverless apps offline!

LocalStack - A fully functional local AWS cloud stack LocalStack provides an easy-to-use test/mocking framework for developing Cloud applications. Cur

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Code for the paper "Graph Attention Tracking". (CVPR2021)

SiamGAT 1. Environment setup This code has been tested on Ubuntu 16.04, Python 3.5, Pytorch 1.2.0, CUDA 9.0. Please install related libraries before r

Implementation of the Swin Transformer in PyTorch.

Swin Transformer - PyTorch Implementation of the Swin Transformer architecture. This paper presents a new vision Transformer, called Swin Transformer,

Implementation of STAM (Space Time Attention Model), a pure and simple attention model that reaches SOTA for video classification

STAM - Pytorch Implementation of STAM (Space Time Attention Model), yet another pure and simple SOTA attention model that bests all previous models in

![Deep Occlusion-Aware Instance Segmentation with Overlapping BiLayers [CVPR 2021]](https://github.com/lkeab/BCNet/raw/main/figures/fig_vis2_new.png)

Deep Occlusion-Aware Instance Segmentation with Overlapping BiLayers [CVPR 2021]

Deep Occlusion-Aware Instance Segmentation with Overlapping BiLayers [BCNet, CVPR 2021] This is the official pytorch implementation of BCNet built on

Fast solver for L1-type problems: Lasso, sparse Logisitic regression, Group Lasso, weighted Lasso, Multitask Lasso, etc.

celer Fast algorithm to solve Lasso-like problems with dual extrapolation. Currently, the package handles the following problems: Lasso weighted Lasso

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Lambda Networks - Pytorch Implementation of λ Networks, a new approach to image recognition that reaches SOTA on ImageNet. The new method utilizes λ l

An implementation of Performer, a linear attention-based transformer, in Pytorch

Performer - Pytorch An implementation of Performer, a linear attention-based transformer variant with a Fast Attention Via positive Orthogonal Random

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

PyTorch Extension Library of Optimized Autograd Sparse Matrix Operations

PyTorch Sparse This package consists of a small extension library of optimized sparse matrix operations with autograd support. This package currently

Library for faster pinned CPU - GPU transfer in Pytorch

SpeedTorch Faster pinned CPU tensor - GPU Pytorch variabe transfer and GPU tensor - GPU Pytorch variable transfer, in certain cases. Update 9-29-1

git《Self-Attention Attribution: Interpreting Information Interactions Inside Transformer》(AAAI 2021) GitHub:

Self-Attention Attribution This repository contains the implementation for AAAI-2021 paper Self-Attention Attribution: Interpreting Information Intera

The open source code of SA-UNet: Spatial Attention U-Net for Retinal Vessel Segmentation.

SA-UNet: Spatial Attention U-Net for Retinal Vessel Segmentation(ICPR 2020) Overview This code is for the paper: Spatial Attention U-Net for Retinal V

Implementation of the 😇 Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones

HaloNet - Pytorch Implementation of the Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones. This re

![[ICLR'21] FedBN: Federated Learning on Non-IID Features via Local Batch Normalization](https://github.com/med-air/FedBN/raw/master/assets/illustration.png)

[ICLR'21] FedBN: Federated Learning on Non-IID Features via Local Batch Normalization

FedBN: Federated Learning on Non-IID Features via Local Batch Normalization This is the PyTorch implemention of our paper FedBN: Federated Learning on

Visual Attention based OCR

Attention-OCR Authours: Qi Guo and Yuntian Deng Visual Attention based OCR. The model first runs a sliding CNN on the image (images are resized to hei

A Tensorflow model for text recognition (CNN + seq2seq with visual attention) available as a Python package and compatible with Google Cloud ML Engine.

Attention-based OCR Visual attention-based OCR model for image recognition with additional tools for creating TFRecords datasets and exporting the tra

🖺 OCR using tensorflow with attention

tensorflow-ocr 🖺 OCR using tensorflow with attention, batteries included Installation git clone --recursive http://github.com/pannous/tensorflow-ocr

Single Shot Text Detector with Regional Attention

Single Shot Text Detector with Regional Attention Introduction SSTD is initially described in our ICCV 2017 spotlight paper. A third-party implementat

Scene text detection and recognition based on Extremal Region(ER)

Scene text recognition A real-time scene text recognition algorithm. Our system is able to recognize text in unconstrain background. This algorithm is

Implement 'Single Shot Text Detector with Regional Attention, ICCV 2017 Spotlight'

SSTDNet Implement 'Single Shot Text Detector with Regional Attention, ICCV 2017 Spotlight' using pytorch. This code is work for general object detecti

textspotter - An End-to-End TextSpotter with Explicit Alignment and Attention

An End-to-End TextSpotter with Explicit Alignment and Attention This is initially described in our CVPR 2018 paper. Getting Started Installation Clone

Pytorch implementation of PSEnet with Pyramid Attention Network as feature extractor

Scene Text-Spotting based on PSEnet+CRNN Pytorch implementation of an end to end Text-Spotter with a PSEnet text detector and CRNN text recognizer. We

MORAN: A Multi-Object Rectified Attention Network for Scene Text Recognition

MORAN: A Multi-Object Rectified Attention Network for Scene Text Recognition Python 2.7 Python 3.6 MORAN is a network with rectification mechanism for

Adaptive Attention Span for Reinforcement Learning

Adaptive Transformers in RL Official implementation of Adaptive Transformers in RL In this work we replicate several results from Stabilizing Transfor

CVPR 2021: "Generating Diverse Structure for Image Inpainting With Hierarchical VQ-VAE"

Diverse Structure Inpainting ArXiv | Papar | Supplementary Material | BibTex This repository is for the CVPR 2021 paper, "Generating Diverse Structure

![[ICLR 2021] Is Attention Better Than Matrix Decomposition?](https://github.com/Gsunshine/Enjoy-Hamburger/raw/main/assets/Hamburger.jpg)

[ICLR 2021] Is Attention Better Than Matrix Decomposition?

Enjoy-Hamburger 🍔 Official implementation of Hamburger, Is Attention Better Than Matrix Decomposition? (ICLR 2021) Under construction. Introduction T

Open source home automation that puts local control and privacy first

Home Assistant Open source home automation that puts local control and privacy first. Powered by a worldwide community of tinkerers and DIY enthusiast

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Pytorch implementation of Each Part Matters: Local Patterns Facilitate Cross-view Geo-localization https://arxiv.org/abs/2008.11646

[TCSVT] Each Part Matters: Local Patterns Facilitate Cross-view Geo-localization LPN [Paper] NEWs Prerequisites Python 3.6 GPU Memory = 8G Numpy 1.

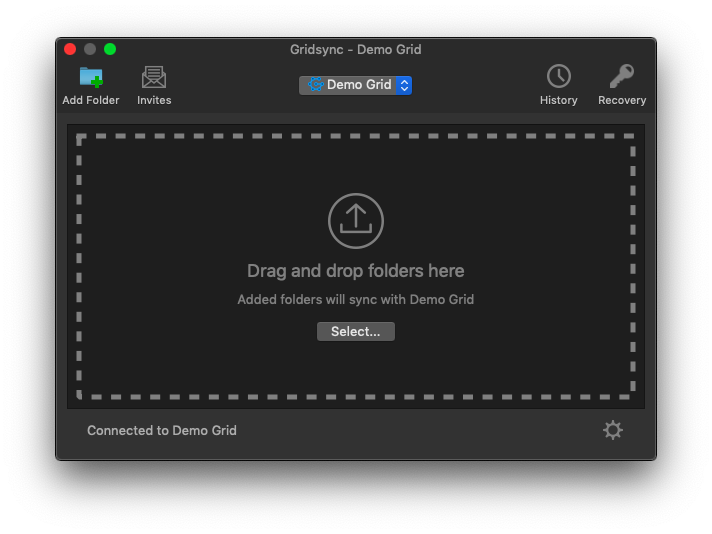

Synchronize local directories with Tahoe-LAFS storage grids

Gridsync Gridsync aims to provide a cross-platform, graphical user interface for Tahoe-LAFS, the Least Authority File Store. It is intended to simplif

Official implementation of Self-supervised Graph Attention Networks (SuperGAT), ICLR 2021.

SuperGAT Official implementation of Self-supervised Graph Attention Networks (SuperGAT). This model is presented at How to Find Your Friendly Neighbor

Official PyTorch Implementation of Unsupervised Learning of Scene Flow Estimation Fusing with Local Rigidity

UnRigidFlow This is the official PyTorch implementation of UnRigidFlow (IJCAI2019). Here are two sample results (~10MB gif for each) of our unsupervis

Object-Centric Learning with Slot Attention

Slot Attention This is a re-implementation of "Object-Centric Learning with Slot Attention" in PyTorch (https://arxiv.org/abs/2006.15055). Requirement

Code for our CVPR2021 paper coordinate attention

Coordinate Attention for Efficient Mobile Network Design (preprint) This repository is a PyTorch implementation of our coordinate attention (will appe

Implementation of Perceiver, General Perception with Iterative Attention, in Pytorch

Perceiver - Pytorch Implementation of Perceiver, General Perception with Iterative Attention, in Pytorch Install $ pip install perceiver-pytorch Usage

library for nonlinear optimization, wrapping many algorithms for global and local, constrained or unconstrained, optimization

NLopt is a library for nonlinear local and global optimization, for functions with and without gradient information. It is designed as a simple, unifi

Tool for producing high quality forecasts for time series data that has multiple seasonality with linear or non-linear growth.

Prophet: Automatic Forecasting Procedure Prophet is a procedure for forecasting time series data based on an additive model where non-linear trends ar

Implementation of OmniNet, Omnidirectional Representations from Transformers, in Pytorch

Omninet - Pytorch Implementation of OmniNet, Omnidirectional Representations from Transformers, in Pytorch. The authors propose that we should be atte

Local community telegram bot

Бот на районе Телеграм-бот для поиска адресов и заведений в вашем районе города или в небольшом городке. Требует недели прогулок по району д

GANsformer: Generative Adversarial Transformers Drew A

GANsformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick *I wish to thank Christopher D. Manning for the fruitf

Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image classification, in Pytorch

Transformer in Transformer Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image c

An attempt at the implementation of Glom, Geoffrey Hinton's new idea that integrates neural fields, predictive coding, top-down-bottom-up, and attention (consensus between columns)

GLOM - Pytorch (wip) An attempt at the implementation of Glom, Geoffrey Hinton's new idea that integrates neural fields, predictive coding,

Python script for Linear, Non-Linear Convection, Burger’s & Poisson Equation in 1D & 2D, 1D Diffusion Equation using Standard Wall Function, 2D Heat Conduction Convection equation with Dirichlet & Neumann BC, full Navier-Stokes Equation coupled with Poisson equation for Cavity and Channel flow in 2D using Finite Difference Method & Finite Volume Method.

Navier-Stokes-numerical-solution-using-Python- Python script for Linear, Non-Linear Convection, Burger’s & Poisson Equation in 1D & 2D, 1D D

Implementation of E(n)-Transformer, which extends the ideas of Welling's E(n)-Equivariant Graph Neural Network to attention

E(n)-Equivariant Transformer (wip) Implementation of E(n)-Equivariant Transformer, which extends the ideas from Welling's E(n)-Equivariant G

Pytorch Code for "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation"

Medical-Transformer Pytorch Code for the paper "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation" About this repo: This repo

A Robust Non-IoU Alternative to Non-Maxima Suppression in Object Detection

Confluence: A Robust Non-IoU Alternative to Non-Maxima Suppression in Object Detection 1. 介绍 用以替代 NMS,在所有 bbox 中挑选出最优的集合。 NMS 仅考虑了 bbox 的得分,然后根据 IOU 来

Implementation of TimeSformer, a pure attention-based solution for video classification

TimeSformer - Pytorch Implementation of TimeSformer, a pure and simple attention-based solution for reaching SOTA on video classification.

Implementation of Nyström Self-attention, from the paper Nyströmformer

Nyström Attention Implementation of Nyström Self-attention, from the paper Nyströmformer. Yannic Kilcher video Install $ pip install nystrom-attention

Implementation of self-attention mechanisms for general purpose. Focused on computer vision modules. Ongoing repository.

Self-attention building blocks for computer vision applications in PyTorch Implementation of self attention mechanisms for computer vision in PyTorch

计算机视觉中用到的注意力模块和其他即插即用模块PyTorch Implementation Collection of Attention Module and Plug&Play Module

PyTorch实现多种计算机视觉中网络设计中用到的Attention机制,还收集了一些即插即用模块。由于能力有限精力有限,可能很多模块并没有包括进来,有任何的建议或者改进,可以提交issue或者进行PR。

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Sparse Beta-Divergence Tensor Factorization Library

NTFLib Sparse Beta-Divergence Tensor Factorization Library Based off of this beta-NTF project this library is specially-built to handle tensors where

text_recognition_toolbox: The reimplementation of a series of classical scene text recognition papers with Pytorch in a uniform way.

text recognition toolbox 1. 项目介绍 该项目是基于pytorch深度学习框架,以统一的改写方式实现了以下6篇经典的文字识别论文,论文的详情如下。该项目会持续进行更新,欢迎大家提出问题以及对代码进行贡献。 模型 论文标题 发表年份 模型方法划分 CRNN 《An End-t

Open source repository for the code accompanying the paper 'Non-Rigid Neural Radiance Fields Reconstruction and Novel View Synthesis of a Deforming Scene from Monocular Video'.

Non-Rigid Neural Radiance Fields This is the official repository for the project "Non-Rigid Neural Radiance Fields: Reconstruction and Novel View Synt

Implementation of TabTransformer, attention network for tabular data, in Pytorch

Tab Transformer Implementation of Tab Transformer, attention network for tabular data, in Pytorch. This simple architecture came within a hair's bread

CPU inference engine that delivers unprecedented performance for sparse models

The DeepSparse Engine is a CPU runtime that delivers unprecedented performance by taking advantage of natural sparsity within neural networks to reduce compute required as well as accelerate memory bound workloads. It is focused on model deployment and scaling machine learning pipelines, fitting seamlessly into your existing deployments as an inference backend.

Code for our ICASSP 2021 paper: SA-Net: Shuffle Attention for Deep Convolutional Neural Networks

SA-Net: Shuffle Attention for Deep Convolutional Neural Networks (paper) By Qing-Long Zhang and Yu-Bin Yang [State Key Laboratory for Novel Software T

Authors implementation of LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant self-at

Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series Forecasting.

Non-AR Spatial-Temporal Transformer Introduction Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series For

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Local continuous test runner with pytest and watchdog.

pytest-watch -- Continuous pytest runner pytest-watch a zero-config CLI tool that runs pytest, and re-runs it when a file in your project changes. It

Asynchronous parallel SSH client library.

parallel-ssh Asynchronous parallel SSH client library. Run SSH commands over many - hundreds/hundreds of thousands - number of servers asynchronously

A Python module that tries to figure out what your local timezone is

tzlocal This Python module returns a tzinfo object with the local timezone information under Unix and Windows. It requires either Python 3.9+ or the b

Implementation of Feedback Transformer in Pytorch

Feedback Transformer - Pytorch Simple implementation of Feedback Transformer in Pytorch. They improve on Transformer-XL by having each token have acce

FcaNet: Frequency Channel Attention Networks

FcaNet: Frequency Channel Attention Networks PyTorch implementation of the paper "FcaNet: Frequency Channel Attention Networks". Simplest usage Models

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

DALL-E in Pytorch Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch. It will also contain CLIP for ranking the ge

Learning Continuous Image Representation with Local Implicit Image Function

LIIF This repository contains the official implementation for LIIF introduced in the following paper: Learning Continuous Image Representation with Lo