916 Repositories

Python Non-Local-Sparse-Attention Libraries

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

An implementation of DeepMind's Relational Recurrent Neural Networks in PyTorch.

relational-rnn-pytorch An implementation of DeepMind's Relational Recurrent Neural Networks (Santoro et al. 2018) in PyTorch. Relational Memory Core (

Pytorch implementation of face attention network

Face Attention Network Pytorch implementation of face attention network as described in Face Attention Network: An Effective Face Detector for the Occ

Adversarial Framework for (non-) Parametric Image Stylisation Mosaics

Fully Adversarial Mosaics (FAMOS) Pytorch implementation of the paper "Copy the Old or Paint Anew? An Adversarial Framework for (non-) Parametric Imag

A PyTorch Implementation of "Watch Your Step: Learning Node Embeddings via Graph Attention" (NeurIPS 2018).

Attention Walk ⠀⠀ A PyTorch Implementation of Watch Your Step: Learning Node Embeddings via Graph Attention (NIPS 2018). Abstract Graph embedding meth

A PyTorch implementation of "Signed Graph Convolutional Network" (ICDM 2018).

SGCN ⠀ A PyTorch implementation of Signed Graph Convolutional Network (ICDM 2018). Abstract Due to the fact much of today's data can be represented as

A PyTorch implementation of "Graph Classification Using Structural Attention" (KDD 2018).

GAM ⠀⠀ A PyTorch implementation of Graph Classification Using Structural Attention (KDD 2018). Abstract Graph classification is a problem with practic

A PyTorch implementation of "SimGNN: A Neural Network Approach to Fast Graph Similarity Computation" (WSDM 2019).

SimGNN ⠀⠀⠀ A PyTorch implementation of SimGNN: A Neural Network Approach to Fast Graph Similarity Computation (WSDM 2019). Abstract Graph similarity s

A PyTorch implementation of "Predict then Propagate: Graph Neural Networks meet Personalized PageRank" (ICLR 2019).

APPNP ⠀ A PyTorch implementation of Predict then Propagate: Graph Neural Networks meet Personalized PageRank (ICLR 2019). Abstract Neural message pass

An implementation of "MixHop: Higher-Order Graph Convolutional Architectures via Sparsified Neighborhood Mixing" (ICML 2019).

MixHop and N-GCN ⠀ A PyTorch implementation of "MixHop: Higher-Order Graph Convolutional Architectures via Sparsified Neighborhood Mixing" (ICML 2019)

A PyTorch implementation of "Capsule Graph Neural Network" (ICLR 2019).

CapsGNN ⠀⠀ A PyTorch implementation of Capsule Graph Neural Network (ICLR 2019). Abstract The high-quality node embeddings learned from the Graph Neur

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

Implementation of Invariant Point Attention, used for coordinate refinement in the structure module of Alphafold2, as a standalone Pytorch module

Invariant Point Attention - Pytorch Implementation of Invariant Point Attention as a standalone module, which was used in the structure module of Alph

Deep RGB-D Saliency Detection with Depth-Sensitive Attention and Automatic Multi-Modal Fusion (CVPR'2021, Oral)

DSA^2 F: Deep RGB-D Saliency Detection with Depth-Sensitive Attention and Automatic Multi-Modal Fusion (CVPR'2021, Oral) This repo is the official imp

PyTorch code for our ECCV 2020 paper "Single Image Super-Resolution via a Holistic Attention Network"

HAN PyTorch code for our ECCV 2020 paper "Single Image Super-Resolution via a Holistic Attention Network" This repository is for HAN introduced in the

A Planar RGB-D SLAM which utilizes Manhattan World structure to provide optimal camera pose trajectory while also providing a sparse reconstruction containing points, lines and planes, and a dense surfel-based reconstruction.

ManhattanSLAM Authors: Raza Yunus, Yanyan Li and Federico Tombari ManhattanSLAM is a real-time SLAM library for RGB-D cameras that computes the camera

Implementation of "Glancing Transformer for Non-Autoregressive Neural Machine Translation"

GLAT Implementation for the ACL2021 paper "Glancing Transformer for Non-Autoregressive Neural Machine Translation" Requirements Python = 3.7 Pytorch

Plug and play transformer you can find network structure and official complete code by clicking List

Plug-and-play Module Plug and play transformer you can find network structure and official complete code by clicking List The following is to quickly

A collection of 100 Deep Learning images and visualizations

A collection of Deep Learning images and visualizations. The project has been developed by the AI Summer team and currently contains almost 100 images.

PyTorch code for our paper "Image Super-Resolution with Non-Local Sparse Attention" (CVPR2021).

Image Super-Resolution with Non-Local Sparse Attention This repository is for NLSN introduced in the following paper "Image Super-Resolution with Non-

A collection of 100 Deep Learning images and visualizations

A collection of Deep Learning images and visualizations. The project has been developed by the AI Summer team and currently contains almost 100 images.

This is an differentiable pytorch implementation of SIFT patch descriptor.

This is an differentiable pytorch implementation of SIFT patch descriptor. It is very slow for describing one patch, but quite fast for batch. It can

Official PyTorch implementation of UACANet: Uncertainty Aware Context Attention for Polyp Segmentation

UACANet: Uncertainty Aware Context Attention for Polyp Segmentation Official pytorch implementation of UACANet: Uncertainty Aware Context Attention fo

2021-MICCAI-Progressively Normalized Self-Attention Network for Video Polyp Segmentation

2021-MICCAI-Progressively Normalized Self-Attention Network for Video Polyp Segmentation Authors: Ge-Peng Ji*, Yu-Cheng Chou*, Deng-Ping Fan, Geng Che

The official implementation of VAENAR-TTS, a VAE based non-autoregressive TTS model.

VAENAR-TTS This repo contains code accompanying the paper "VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis". Sa

Implementation of Self-supervised Graph-level Representation Learning with Local and Global Structure (ICML 2021).

Self-supervised Graph-level Representation Learning with Local and Global Structure Introduction This project is an implementation of ``Self-supervise

Codes for TS-CAM: Token Semantic Coupled Attention Map for Weakly Supervised Object Localization.

TS-CAM: Token Semantic Coupled Attention Map for Weakly SupervisedObject Localization This is the official implementaion of paper TS-CAM: Token Semant

Fast sparse deep learning on CPUs

SPARSEDNN **If you want to use this repo, please send me an email: zihengw@stanford.edu, or raise a Github issue. ** Fast sparse deep learning on CPUs

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

The official implementation of VAENAR-TTS, a VAE based non-autoregressive TTS model.

VAENAR-TTS This repo contains code accompanying the paper "VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis". Sa

Selective Wavelet Attention Learning for Single Image Deraining

SWAL Code for Paper "Selective Wavelet Attention Learning for Single Image Deraining" Prerequisites Python 3 PyTorch Models We provide the models trai

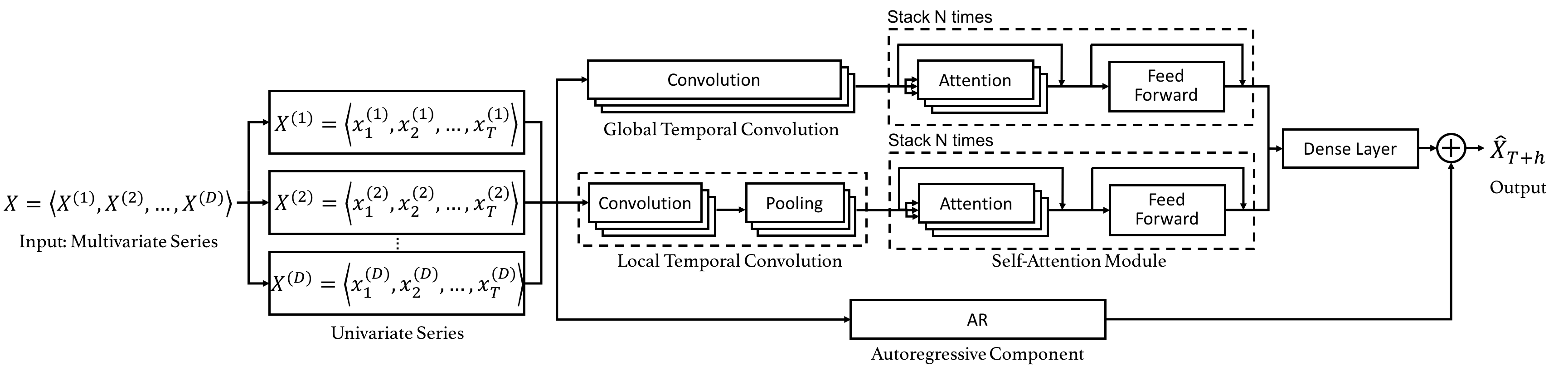

Code for the CIKM 2019 paper "DSANet: Dual Self-Attention Network for Multivariate Time Series Forecasting".

Dual Self-Attention Network for Multivariate Time Series Forecasting 20.10.26 Update: Due to the difficulty of installation and code maintenance cause

Multi-Task Temporal Shift Attention Networks for On-Device Contactless Vitals Measurement (NeurIPS 2020)

MTTS-CAN: Multi-Task Temporal Shift Attention Networks for On-Device Contactless Vitals Measurement Paper Xin Liu, Josh Fromm, Shwetak Patel, Daniel M

PyTorch Implementation of NCSOFT's FastPitchFormant: Source-filter based Decomposed Modeling for Speech Synthesis

FastPitchFormant - PyTorch Implementation PyTorch Implementation of FastPitchFormant: Source-filter based Decomposed Modeling for Speech Synthesis. Qu

Pytorch implementation of “Recursive Non-Autoregressive Graph-to-Graph Transformer for Dependency Parsing with Iterative Refinement”

Graph-to-Graph Transformers Self-attention models, such as Transformer, have been hugely successful in a wide range of natural language processing (NL

💥 Share files easily over your local network from the terminal!

Fileshare 📨 Share files easily over your local network from the terminal! 📨 Installation # clone the repo $ git clone https://github.com/dopevog/fil

PyTorch implementation of ARM-Net: Adaptive Relation Modeling Network for Structured Data.

A ready-to-use framework of latest models for structured (tabular) data learning with PyTorch. Applications include recommendation, CRT prediction, healthcare analytics, and etc.

This is a template for the Non-autoregressive Deep Learning-Based TTS model (in PyTorch).

Non-autoregressive Deep Learning-Based TTS Template This is a template for the Non-autoregressive TTS model. It contains Data Preprocessing Pipeline D

Attention mechanism with MNIST dataset

[TensorFlow] Attention mechanism with MNIST dataset Usage $ python run.py Result Training Loss graph. Test Each figure shows input digit, attention ma

Shared Attention for Multi-label Zero-shot Learning

Shared Attention for Multi-label Zero-shot Learning Overview This repository contains the implementation of Shared Attention for Multi-label Zero-shot

LoFTR:Detector-Free Local Feature Matching with Transformers CVPR 2021

LoFTR-with-train-script LoFTR:Detector-Free Local Feature Matching with Transformers CVPR 2021 (with train script --- unofficial ---). About Megadepth

Craxk is a SINGLE AND NON-REPLICABLE Hash that uses data from the hardware where it is executed to form a hash that can only be reproduced by a single machine.

What is Craxk ? Craxk is a UNIQUE AND NON-REPLICABLE Hash that uses data from the hardware where it is executed to form a hash that can only be reprod

codes for paper Combining Dynamic Local Context Focus and Dependency Cluster Attention for Aspect-level sentiment classification

DLCF-DCA codes for paper Combining Dynamic Local Context Focus and Dependency Cluster Attention for Aspect-level sentiment classification. submitted t

Polkit - Local Privilege Escalation (CVE-2021-3560)

CVE-2021-3560 Polkit - Local Privilege Escalation Original discovery by kevin_backhouse from GitHub Security Lab References https://github.blog/2021-0

PyTorch Implementation of Google Brain's WaveGrad 2: Iterative Refinement for Text-to-Speech Synthesis

WaveGrad2 - PyTorch Implementation PyTorch Implementation of Google Brain's WaveGrad 2: Iterative Refinement for Text-to-Speech Synthesis. Status (202

Learning to Reconstruct 3D Non-Cuboid Room Layout from a Single RGB Image

NonCuboidRoom Paper Learning to Reconstruct 3D Non-Cuboid Room Layout from a Single RGB Image Cheng Yang*, Jia Zheng*, Xili Dai, Rui Tang, Yi Ma, Xiao

FinGAT: A Financial Graph Attention Networkto Recommend Top-K Profitable Stocks

FinGAT: A Financial Graph Attention Networkto Recommend Top-K Profitable Stocks This is our implementation for the paper: FinGAT: A Financial Graph At

Code for the CVPR2021 paper "Patch-NetVLAD: Multi-Scale Fusion of Locally-Global Descriptors for Place Recognition"

Patch-NetVLAD: Multi-Scale Fusion of Locally-Global Descriptors for Place Recognition This repository contains code for the CVPR2021 paper "Patch-NetV

SCALE: Modeling Clothed Humans with a Surface Codec of Articulated Local Elements (CVPR 2021)

SCALE: Modeling Clothed Humans with a Surface Codec of Articulated Local Elements (CVPR 2021) This repository contains the official PyTorch implementa

Stereo Radiance Fields (SRF): Learning View Synthesis for Sparse Views of Novel Scenes

Stereo Radiance Fields (SRF): Learning View Synthesis for Sparse Views of Novel Scenes

Make nixos usable for non-technical users through a settings / package management GUI.

Nix-Gui Make nixos usable for non-technical users through a settings / package management GUI. Motives The declarative nature of ni

RGB-D Local Implicit Function for Depth Completion of Transparent Objects

RGB-D Local Implicit Function for Depth Completion of Transparent Objects [Project Page] [Paper] Overview This repository maintains the official imple

Lightweight tool to perform MITM attack on local network

ARPSpy - A lightweight tool to perform MITM attack Using many library to perform ARP Spoof and auto-sniffing HTTP packet containing credential. (Never

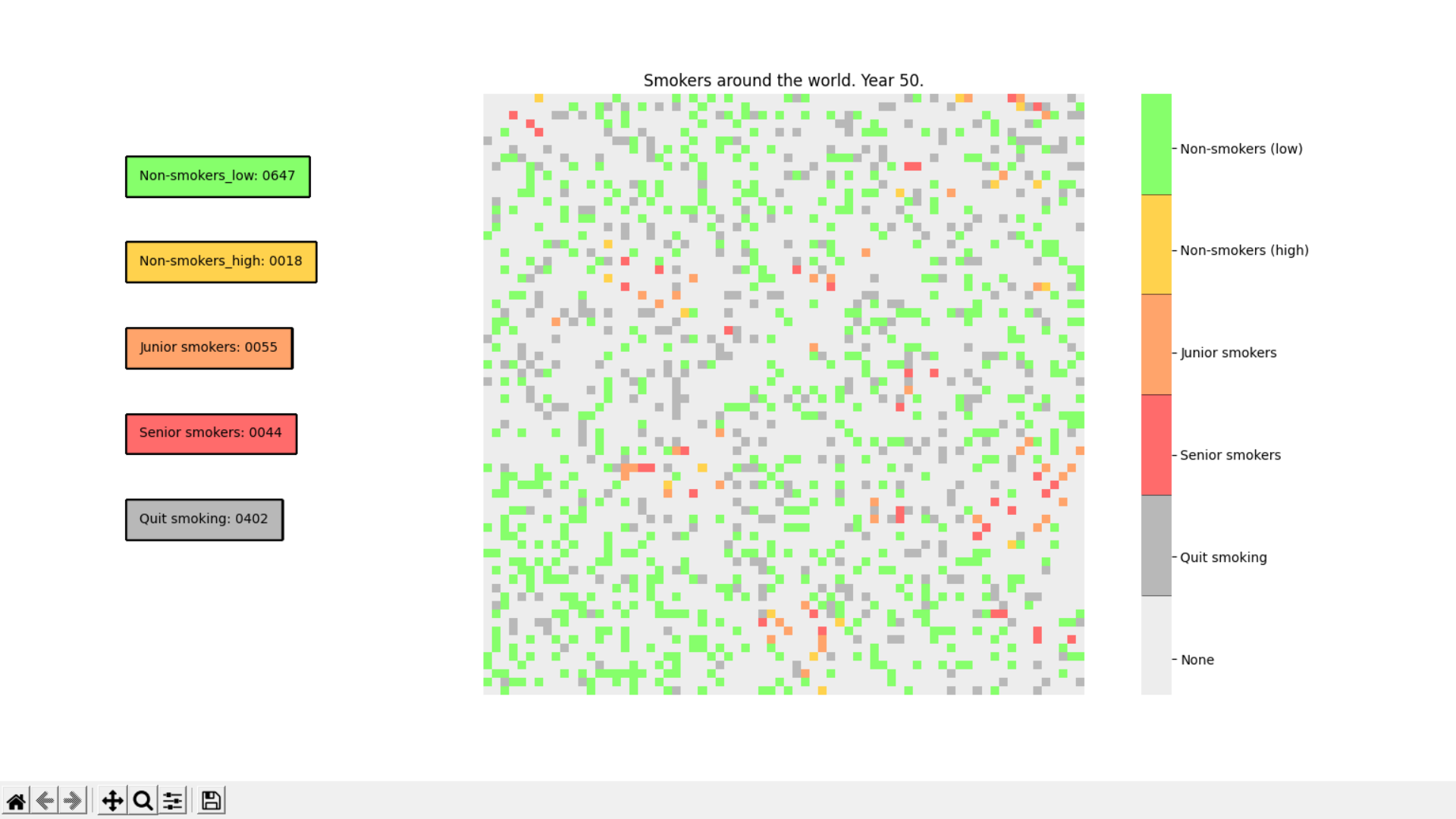

Smoking Simulation is an app to simulate the spreading of smokers and non-smokers, their interactions and population during certain amount of time.

Smoking Simulation is an app to simulate the spreading of smokers and non-smokers, their interactions and population during certain

Episodic Transformer (E.T.) is a novel attention-based architecture for vision-and-language navigation. E.T. is based on a multimodal transformer that encodes language inputs and the full episode history of visual observations and actions.

Episodic Transformers (E.T.) Episodic Transformer for Vision-and-Language Navigation Alexander Pashevich, Cordelia Schmid, Chen Sun Episodic Transform

The code for the CVPR 2021 paper Neural Deformation Graphs, a novel approach for globally-consistent deformation tracking and 3D reconstruction of non-rigid objects.

Neural Deformation Graphs Project Page | Paper | Video Neural Deformation Graphs for Globally-consistent Non-rigid Reconstruction Aljaž Božič, Pablo P

LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant Self-At

A gesture recognition system powered by OpenPose, k-nearest neighbours, and local outlier factor.

OpenHands OpenHands is a gesture recognition system powered by OpenPose, k-nearest neighbours, and local outlier factor. Currently the system can iden

Implementation of Uformer, Attention-based Unet, in Pytorch

Uformer - Pytorch Implementation of Uformer, Attention-based Unet, in Pytorch. It will only offer the concat-cross-skip connection. This repository wi

Shuffle Attention for MobileNetV3

SA-MobileNetV3 Shuffle Attention for MobileNetV3 Train Run the following command for train model on your own dataset: python train.py --dataset mnist

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network This repo contains the official Pytorch implementaion code and conf

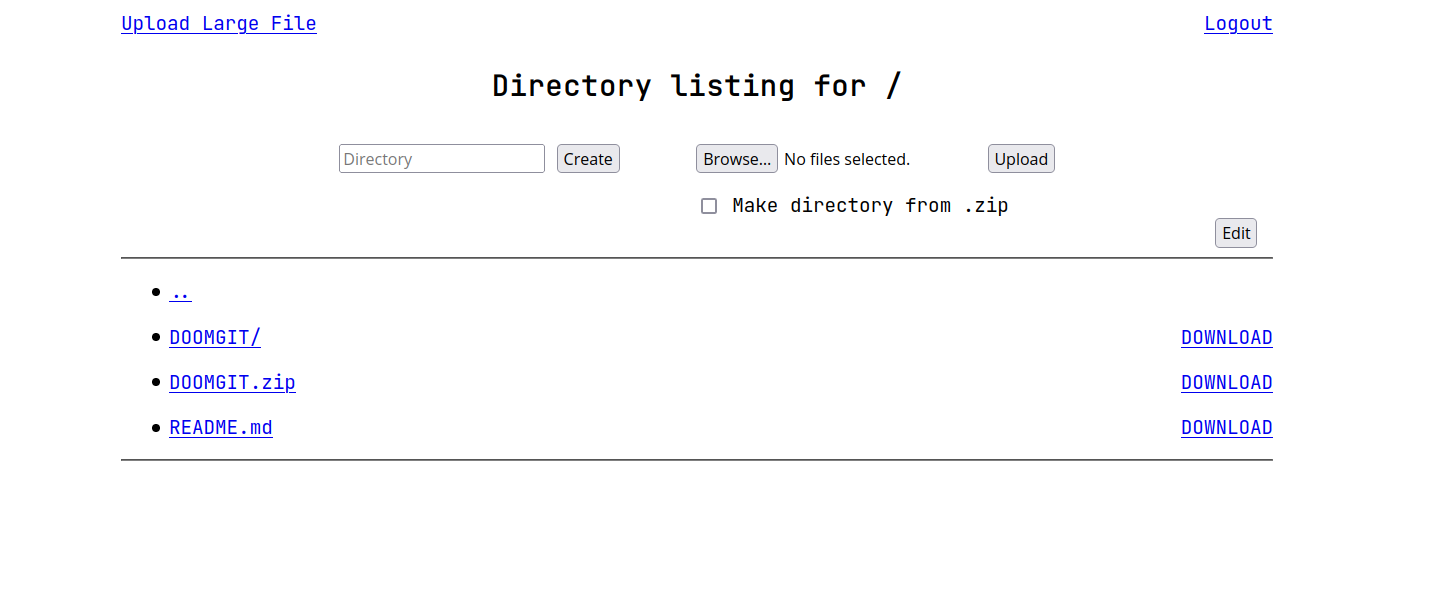

Nerd-Storage is a simple web server for sharing files on the local network.

Nerd-Storage is a simple web server for sharing files on the local network. It supports the download of files and directories, the upload of multiple files at once, making a directory, updates and deletions.

Code + pre-trained models for the paper Keeping Your Eye on the Ball Trajectory Attention in Video Transformers

Motionformer This is an official pytorch implementation of paper Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers. In this rep

FANet - Real-time Semantic Segmentation with Fast Attention

FANet Real-time Semantic Segmentation with Fast Attention Ping Hu, Federico Perazzi, Fabian Caba Heilbron, Oliver Wang, Zhe Lin, Kate Saenko , Stan Sc

Official implement of "CAT: Cross Attention in Vision Transformer".

CAT: Cross Attention in Vision Transformer This is official implement of "CAT: Cross Attention in Vision Transformer". Abstract Since Transformer has

PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation

StyleSpeech - PyTorch Implementation PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation. Status (2021.06.13

Implementation of Segformer, Attention + MLP neural network for segmentation, in Pytorch

Segformer - Pytorch Implementation of Segformer, Attention + MLP neural network for segmentation, in Pytorch. Install $ pip install segformer-pytorch

Official PyTorch implementation of Less is More: Pay Less Attention in Vision Transformers.

Less is More: Pay Less Attention in Vision Transformers Official PyTorch implementation of Less is More: Pay Less Attention in Vision Transformers. By

The official PyTorch implementation of recent paper - SAINT: Improved Neural Networks for Tabular Data via Row Attention and Contrastive Pre-Training

This repository is the official PyTorch implementation of SAINT. Find the paper on arxiv SAINT: Improved Neural Networks for Tabular Data via Row Atte

BuddyPress is an open source WordPress plugin to build a community site. In releases of BuddyPress from 5.0.0 before 7.2.1 it's possible for a non-privileged, regular user to obtain administrator rights by exploiting an issue in the REST API members endpoint. The vulnerability has been fixed in BuddyPress 7.2.1. Existing installations of the plugin should be updated to this version to mitigate the issue.

CVE-2021-21389 BuddyPress 7.2.1 - REST API Privilege Escalation to RCE PoC (Full) Affected version: 5.0.0 to 7.2.0 User requirement: Subscriber user

PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation

StyleSpeech - PyTorch Implementation PyTorch Implementation of Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation. Status (2021.06.09

Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning

We challenge a common assumption underlying most supervised deep learning: that a model makes a prediction depending only on its parameters and the features of a single input. To this end, we introduce a general-purpose deep learning architecture that takes as input the entire dataset instead of processing one datapoint at a time.

Unofficial PyTorch implementation of Attention Free Transformer (AFT) layers by Apple Inc.

aft-pytorch Unofficial PyTorch implementation of Attention Free Transformer's layers by Zhai, et al. [abs, pdf] from Apple Inc. Installation You can i

PyTorch implementation of Pay Attention to MLPs

gMLP PyTorch implementation of Pay Attention to MLPs. Quickstart Clone this repository. git clone https://github.com/jaketae/g-mlp.git Navigate to th

glip is a module for retrieve ip address like local-ip, global-ip, external-ip as string.

gle_ip_info glip is a module for retrieve ip address like local-ip, global-ip, external-ip as string.

PyTorch Code of "Memory In Memory: A Predictive Neural Network for Learning Higher-Order Non-Stationarity from Spatiotemporal Dynamics"

Memory In Memory Networks It is based on the paper Memory In Memory: A Predictive Neural Network for Learning Higher-Order Non-Stationarity from Spati

Pytorch Implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension)

DiffSinger - PyTorch Implementation PyTorch implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension). Status

External Attention Network

Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks paper : https://arxiv.org/abs/2105.02358 EAMLP will come soon Jitto

Code for the paper "How Attentive are Graph Attention Networks?"

How Attentive are Graph Attention Networks? This repository is the official implementation of How Attentive are Graph Attention Networks?. The PyTorch

MSG-Transformer: Exchanging Local Spatial Information by Manipulating Messenger Tokens

MSG-Transformer Official implementation of the paper MSG-Transformer: Exchanging Local Spatial Information by Manipulating Messenger Tokens, by Jiemin

Attention-driven Robot Manipulation (ARM) which includes Q-attention

Attention-driven Robotic Manipulation (ARM) This codebase is home to: Q-attention: Enabling Efficient Learning for Vision-based Robotic Manipulation I

PyTorch implementation of: Michieli U. and Zanuttigh P., "Continual Semantic Segmentation via Repulsion-Attraction of Sparse and Disentangled Latent Representations", CVPR 2021.

Continual Semantic Segmentation via Repulsion-Attraction of Sparse and Disentangled Latent Representations This is the official PyTorch implementation

Implements MLP-Mixer: An all-MLP Architecture for Vision.

MLP-Mixer-CIFAR10 This repository implements MLP-Mixer as proposed in MLP-Mixer: An all-MLP Architecture for Vision. The paper introduces an all MLP (

Implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT : Cross-Attention Multi-Scale Vision Transformer for Image Classification This is an unofficial PyTorch implementation of CrossViT: Cross-Att

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Github project for Attention-guided Temporal Coherent Video Object Matting.

Attention-guided Temporal Coherent Video Object Matting This is the Github project for our paper Attention-guided Temporal Coherent Video Object Matti

Conformer: Local Features Coupling Global Representations for Visual Recognition

Conformer: Local Features Coupling Global Representations for Visual Recognition (arxiv) This repository is built upon DeiT and timm Usage First, inst

Official implementation for (Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching, AAAI-2021)

Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching Official pytorch implementation of "Show, Attend and Distill: Kn

Local Attention - Flax module for Jax

Local Attention - Flax Autoregressive Local Attention - Flax module for Jax Install $ pip install local-attention-flax Usage from jax import random fr

Implementation of Bidirectional Recurrent Independent Mechanisms (Learning to Combine Top-Down and Bottom-Up Signals in Recurrent Neural Networks with Attention over Modules)

BRIMs Bidirectional Recurrent Independent Mechanisms Implementation of the paper Learning to Combine Top-Down and Bottom-Up Signals in Recurrent Neura

Non-Official Pytorch implementation of "Face Identity Disentanglement via Latent Space Mapping" https://arxiv.org/abs/2005.07728 Using StyleGAN2 instead of StyleGAN

Face Identity Disentanglement via Latent Space Mapping - Implement in pytorch with StyleGAN 2 Description Pytorch implementation of the paper Face Ide

PyTorch code for our paper "Attention in Attention Network for Image Super-Resolution"

Under construction... Attention in Attention Network for Image Super-Resolution (A2N) This repository is an PyTorch implementation of the paper "Atten

Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Parallel Tacotron2 Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Improving Deep Network Debuggability via Sparse Decision Layers

Improving Deep Network Debuggability via Sparse Decision Layers This repository contains the code for our paper: Leveraging Sparse Linear Layers for D

Contains code for the paper "Vision Transformers are Robust Learners".

Vision Transformers are Robust Learners This repository contains the code for the paper Vision Transformers are Robust Learners by Sayak Paul* and Pin

A simple demonstration of how a django-based website can be set up for local development with microk8s

Django with MicroK8s Start Building Your Project This project provides a Django web app running as a single node Kubernetes cluster in microk8s. It is

Visualize the bitcoin blockchain from your local node

Project Overview A new feature in Bitcoin Core 0.20 allows users to dump the state of the blockchain (the UTXO set) using the command dumptxoutset. I'

A Udemy downloader that can download DRM protected videos and non-DRM protected videos.

Udemy Downloader with DRM support NOTE This program is WIP, the code is provided as-is and i am not held resposible for any legal repercussions result

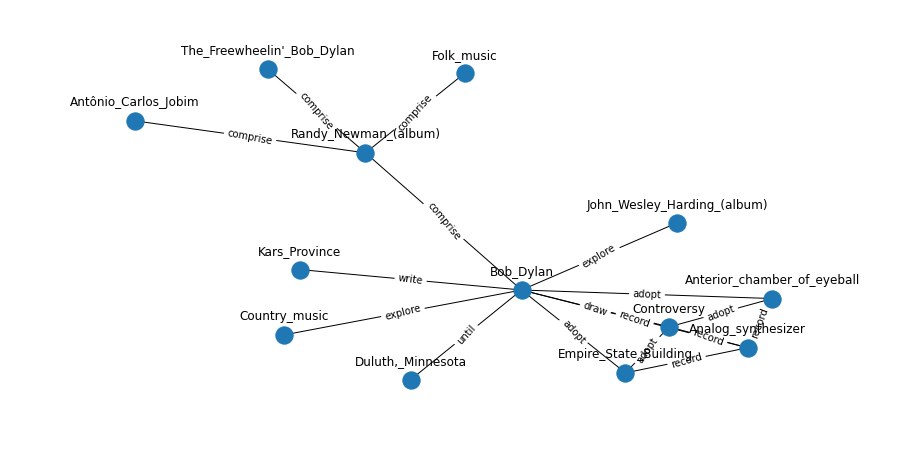

Language models are open knowledge graphs ( non official implementation )

language-models-are-knowledge-graphs-pytorch Language models are open knowledge graphs ( work in progress ) A non official reimplementation of Languag