757 Repositories

Python Swin-Transformer-TensorRT Libraries

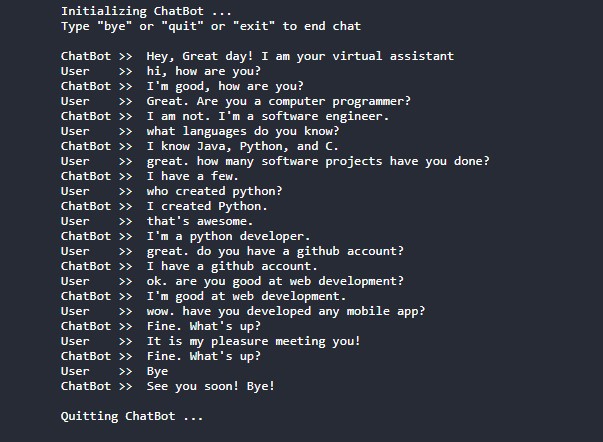

Conversational-AI-ChatBot - Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users!

Conversational AI ChatBot Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users! In this project? Thi

SPT_LSA_ViT - Implementation for Visual Transformer for Small-size Datasets

Vision Transformer for Small-Size Datasets Seung Hoon Lee and Seunghyun Lee and Byung Cheol Song | Paper Inha University Abstract Recently, the Vision

Image-popularity-score - A novel deep regression method for image scoring.

Image-popularity-score - A novel deep regression method for image scoring.

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyTorch Implementation of SSTNs for hyperspectral image classifications from the IEEE T-GRS paper "Spectral-Spatial Transformer Network for Hyperspectral Image Classification: A FAS Framework."

PyTorch Implementation of SSTN for Hyperspectral Image Classification Paper links: SSTN published on IEEE T-GRS. Also, you can directly find the imple

The official pytorch implementation of ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias

ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias Introduction | Updates | Usage | Results&Pretrained Models | Statement | Intr

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation Where we are ? 12.27 目前和原论文仍有1%左右得差距,但已经力压很多SOTA了 ckpt__448_epoch_25.pth mIoU

Variable Transformer Calculator

✠ VASCO - VAriable tranSformer CalculatOr Software que calcula informações de transformadores feita para a matéria de "Conversão Eletromecânica de Ene

The modify PyTorch version of Siam-trackers which are speed-up by TensorRT.

SiamTracker-with-TensorRT The modify PyTorch version of Siam-trackers which are speed-up by TensorRT or ONNX. [Updating...] Examples demonstrating how

Official release of MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis of Pancreatic Cancer axriv: http://arxiv.org/abs/2112.13513

MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis This is the official page of the MSHT with its experimental script and records. We de

Unofficial TensorFlow implementation of the Keyword Spotting Transformer model

Keyword Spotting Transformer This is the unofficial TensorFlow implementation of the Keyword Spotting Transformer model. This model is used to train o

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

OpenMMLab Model Deployment Toolset

Introduction English | 简体中文 MMDeploy is an open-source deep learning model deployment toolset. It is a part of the OpenMMLab project. Major features F

Machine Learning Course Project, IMDB movie review sentiment analysis by lstm, cnn, and transformer

IMDB Sentiment Analysis This is the final project of Machine Learning Courses in Huazhong University of Science and Technology, School of Artificial I

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

A website which allows you to play with the GPT-2 transformer

transformers A website which allows you to play with the GPT-2 model Built with ❤️ by raphtlw Table of contents Model Setup About Contributors Model T

Tensorflow implementation for "Improved Transformer for High-Resolution GANs" (NeurIPS 2021).

HiT-GAN Official TensorFlow Implementation HiT-GAN presents a Transformer-based generator that is trained based on Generative Adversarial Networks (GA

Group Activity Recognition with Clustered Spatial Temporal Transformer

GroupFormer Group Activity Recognition with Clustered Spatial-TemporalTransformer Backbone Style Action Acc Activity Acc Config Download Inv3+flow+pos

Source code of SIGIR2021 Paper 'One Chatbot Per Person: Creating Personalized Chatbots based on Implicit Profiles'

DHAP Source code of SIGIR2021 Long Paper: One Chatbot Per Person: Creating Personalized Chatbots based on Implicit User Profiles . Preinstallation Fir

LoveDA: A Remote Sensing Land-Cover Dataset for Domain Adaptive Semantic Segmentation

LoveDA: A Remote Sensing Land-Cover Dataset for Domain Adaptive Semantic Segmentation by Junjue Wang, Zhuo Zheng, Ailong Ma, Xiaoyan Lu, and Yanfei Zh

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

This repository contains a CBIR system that uses swin transformer to extract image's feature.

Swin-transformer based CBIR This repository contains a CBIR(content-based image retrieval) system. Here we use Swin-transformer to extract query image

StyleSwin: Transformer-based GAN for High-resolution Image Generation

StyleSwin This repo is the official implementation of "StyleSwin: Transformer-based GAN for High-resolution Image Generation". By Bowen Zhang, Shuyang

Official Pytorch implementation of the paper: "Locally Shifted Attention With Early Global Integration"

Locally-Shifted-Attention-With-Early-Global-Integration Pretrained models You can download all the models from here. Training Imagenet python -m torch

Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021).

STAR-pytorch Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021). CVF (pdf) STAR-DC

This project aims to explore the deployment of Swin-Transformer based on TensorRT, including the test results of FP16 and INT8.

Swin Transformer This project aims to explore the deployment of SwinTransformer based on TensorRT, including the test results of FP16 and INT8. Introd

OSLO: Open Source framework for Large-scale transformer Optimization

O S L O Open Source framework for Large-scale transformer Optimization What's New: December 21, 2021 Released OSLO 1.0. What is OSLO about? OSLO is a

TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning

TransZero++ This repository contains the testing code for the paper "TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning" submitted

MISSFormer: An Effective Medical Image Segmentation Transformer

MISSFormer Code for paper "MISSFormer: An Effective Medical Image Segmentation Transformer". Please read our preprint at the following link: paper_add

TensorFlow implementation of "TokenLearner: What Can 8 Learned Tokens Do for Images and Videos?"

TokenLearner: What Can 8 Learned Tokens Do for Images and Videos? Source: Improving Vision Transformer Efficiency and Accuracy by Learning to Tokenize

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Mixed Transformer UNet for Medical Image Segmentation

MT-UNet Update 2021/11/19 Thank you for your interest in our work. We have uploaded the code of our MTUNet to help peers conduct further research on i

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

[ICCV2021] TransReID: Transformer-based Object Re-Identification [pdf] The official repository for TransReID: Transformer-based Object Re-Identificati

This repository builds a basic vision transformer from scratch so that one beginner can understand the theory of vision transformer.

vision-transformer-from-scratch This repository includes several kinds of vision transformers from scratch so that one beginner can understand the the

A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization

University1652-Baseline [Paper] [Slide] [Explore Drone-view Data] [Explore Satellite-view Data] [Explore Street-view Data] [Video Sample] [中文介绍] This

Official PyTorch implementation of SegFormer

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

FastFormers - highly efficient transformer models for NLU

FastFormers FastFormers provides a set of recipes and methods to achieve highly efficient inference of Transformer models for Natural Language Underst

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

The code for two papers: Feedback Transformer and Expire-Span.

transformer-sequential This repo contains the code for two papers: Feedback Transformer Expire-Span The training code is structured for long sequentia

Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing

Introduction Funnel-Transformer is a new self-attention model that gradually compresses the sequence of hidden states to a shorter one and hence reduc

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

An implementation of the Pay Attention when Required transformer

Pay Attention when Required (PAR) Transformer-XL An implementation of the Pay Attention when Required transformer from the paper: https://arxiv.org/pd

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

Reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer: Self-Attention with Linear Complexity)

Linear Multihead Attention (Linformer) PyTorch Implementation of reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer:

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Longformer: The Long-Document Transformer

Longformer Longformer and LongformerEncoderDecoder (LED) are pretrained transformer models for long documents. ***** New December 1st, 2020: Longforme

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Source code of paper "BP-Transformer: Modelling Long-Range Context via Binary Partitioning"

BP-Transformer This repo contains the code for our paper BP-Transformer: Modeling Long-Range Context via Binary Partition Zihao Ye, Qipeng Guo, Quan G

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Using VapourSynth with super resolution models and speeding them up with TensorRT.

VSGAN-tensorrt-docker Using image super resolution models with vapoursynth and speeding them up with TensorRT. Using NVIDIA/Torch-TensorRT combined wi

[ACM MM 2021] Yes, "Attention is All You Need", for Exemplar based Colorization

Transformer for Image Colorization This is an implemention for Yes, "Attention Is All You Need", for Exemplar based Colorization, and the current soft

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2 Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexan

Paper and Codes for “Embracing Single Stride 3D Object Detector with Sparse Transformer”

SST: Single-stride Sparse Transformer This is the official implementation of paper: Embracing Single Stride 3D Object Detector with Sparse Transformer

This is the official source code of "BiCAT: Bi-Chronological Augmentation of Transformer for Sequential Recommendation".

BiCAT This is our TensorFlow implementation for the paper: "BiCAT: Sequential Recommendation with Bidirectional Chronological Augmentation of Transfor

The code of "Dependency Learning for Legal Judgment Prediction with a Unified Text-to-Text Transformer".

Code data_preprocess.py: preprocess data for Dependent-T5. parameters.py: define parameters of Dependent-T5. train_tools.py: traning and evaluation co

Using image super resolution models with vapoursynth and speeding them up with TensorRT

vs-RealEsrganAnime-tensorrt-docker Using image super resolution models with vapoursynth and speeding them up with TensorRT. Also a docker image since

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts

t5-japanese Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts. The following is a list of models that

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation [arxiv] This is the official repository for CDTrans: Cross-domain Transformer for

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Continuous Augmented Positional Embeddings (CAPE) implementation for PyTorch

PyTorch implementation of Continuous Augmented Positional Embeddings (CAPE), by Likhomanenko et al. Enhance your Transformer positional embeddings with easy-to-use augmentations!

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

Hardware-accelerated DNN model inference ROS2 packages using NVIDIA Triton/TensorRT for both Jetson and x86_64 with CUDA-capable GPU

Isaac ROS DNN Inference Overview This repository provides two NVIDIA GPU-accelerated ROS2 nodes that perform deep learning inference using custom mode

Embracing Single Stride 3D Object Detector with Sparse Transformer

SST: Single-stride Sparse Transformer This is the official implementation of paper: Embracing Single Stride 3D Object Detector with Sparse Transformer

Implementation of the paper Recurrent Glimpse-based Decoder for Detection with Transformer.

REGO-Deformable DETR By Zhe Chen, Jing Zhang, and Dacheng Tao. This repository is the implementation of the paper Recurrent Glimpse-based Decoder for

A new video text spotting framework with Transformer

TransVTSpotter: End-to-end Video Text Spotter with Transformer Introduction A Multilingual, Open World Video Text Dataset and End-to-end Video Text Sp

Convert openmmlab (not only mmdetection) series model to tensorrt

MMDet to TensorRT This project aims to convert the mmdetection model to TensorRT model end2end. Focus on object detection for now. Mask support is exp

SOLOv2 on onnx & tensorRT

SOLOv2.tensorRT: NOTE: code based on WXinlong/SOLO add support to TensorRT inference onnxruntime tensorRT full_dims and dynamic shape postprocess with

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce (EMNLP Founding 2021)

Introduction K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce. Installation PyTor

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

SimMIM: A Simple Framework for Masked Image Modeling

SimMIM By Zhenda Xie*, Zheng Zhang*, Yue Cao*, Yutong Lin, Jianmin Bao, Zhuliang Yao, Qi Dai and Han Hu*. This repo is the official implementation of

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

RaceBERT -- A transformer based model to predict race and ethnicty from names

RaceBERT -- A transformer based model to predict race and ethnicty from names Installation pip install racebert Using a virtual environment is highly

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

Spatial Transformer Nets in TensorFlow/ TensorLayer

MOVED TO HERE Spatial Transformer Networks Spatial Transformer Networks (STN) is a dynamic mechanism that produces transformations of input images (or

This is Official implementation for "Pose-guided Feature Disentangling for Occluded Person Re-Identification Based on Transformer" in AAAI2022

PFD:Pose-guided Feature Disentangling for Occluded Person Re-identification based on Transformer This repo is the official implementation of "Pose-gui

Learning Tracking Representations via Dual-Branch Fully Transformer Networks

Learning Tracking Representations via Dual-Branch Fully Transformer Networks DualTFR ⭐ We achieves the runner-ups for both VOT2021ST (short-term) and

Codes for the AAAI'22 paper "TransZero: Attribute-guided Transformer for Zero-Shot Learning"

TransZero [arXiv] This repository contains the testing code for the paper "TransZero: Attribute-guided Transformer for Zero-Shot Learning" accepted to

Reproduction of Vision Transformer in Tensorflow2. Train from scratch and Finetune.

Vision Transformer(ViT) in Tensorflow2 Tensorflow2 implementation of the Vision Transformer(ViT). This repository is for An image is worth 16x16 words

Official pytorch code for SSAT: A Symmetric Semantic-Aware Transformer Network for Makeup Transfer and Removal

SSAT: A Symmetric Semantic-Aware Transformer Network for Makeup Transfer and Removal This is the official pytorch code for SSAT: A Symmetric Semantic-

Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fusion Framework via Self-Supervised Multi-Task Learning. Code will be available soon.

Official-PyTorch-Implementation-of-TransMEF Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fu

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Code for the ACL 2021 paper "Structural Guidance for Transformer Language Models"

Structural Guidance for Transformer Language Models This repository accompanies the paper, Structural Guidance for Transformer Language Models, publis

SwinTrack: A Simple and Strong Baseline for Transformer Tracking

SwinTrack This is the official repo for SwinTrack. A Simple and Strong Baseline Prerequisites Environment conda (recommended) conda create -y -n SwinT

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

The official repository for "Score Transformer: Generating Musical Scores from Note-level Representation" (MMAsia '21)

Score Transformer This is the official repository for "Score Transformer": Score Transformer: Generating Musical Scores from Note-level Representation

Code for A Volumetric Transformer for Accurate 3D Tumor Segmentation

VT-UNet This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmentaion results of VT-UNet. Environmen

Implementation of the paper 'Sentence Bottleneck Autoencoders from Transformer Language Models'

Introduction This repository contains the code for the paper Sentence Bottleneck Autoencoders from Transformer Language Models by Ivan Montero, Nikola

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network)

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network) BERTAC is a framework that combines a