237 Repositories

Python airflow-pipeline Libraries

Repositório para estudo do airflow

airflow-101 Repositório para estudo do airflow Docker criado baseado no tutorial Exemplo de API da pokeapi Para executar clone o repo execute as confi

Crypto Stats and Tweets Data Pipeline using Airflow

Crypto Stats and Tweets Data Pipeline using Airflow Introduction Project Overview This project was brought upon through Udacity's nanodegree program.

Datashader is a data rasterization pipeline for automating the process of creating meaningful representations of large amounts of data.

Datashader is a data rasterization pipeline for automating the process of creating meaningful representations of large amounts of data.

BookNLP, a natural language processing pipeline for books

BookNLP BookNLP is a natural language processing pipeline that scales to books and other long documents (in English), including: Part-of-speech taggin

Predicting the usefulness of reviews given the review text and metadata surrounding the reviews.

Predicting Yelp Review Quality Table of Contents Introduction Motivation Goal and Central Questions The Data Data Storage and ETL EDA Data Pipeline Da

Backtesting an algorithmic trading strategy using Machine Learning and Sentiment Analysis.

Trading Tesla with Machine Learning and Sentiment Analysis An interactive program to train a Random Forest Classifier to predict Tesla daily prices us

Full automated data pipeline using docker images

Create postgres tables from CSV files This first section is only relate to creating tables from CSV files using postgres container alone. Just one of

Building house price data pipelines with Apache Beam and Spark on GCP

This project contains the process from building a web crawler to extract the raw data of house price to create ETL pipelines using Google Could Platform services.

Automated Machine Learning Pipeline for tabular data. Designed for predictive maintenance applications, failure identification, failure prediction, condition monitoring, etc.

Automated Machine Learning Pipeline for tabular data. Designed for predictive maintenance applications, failure identification, failure prediction, condition monitoring, etc.

A simple python application for running a CI pipeline locally

A simple python application for running a CI pipeline locally This app currently supports GitLab CI scripts

Two phase pipeline + StreamlitTwo phase pipeline + Streamlit

Two phase pipeline + Streamlit This is an example project that demonstrates how to create a pipeline that consists of two phases of execution. In betw

A data preprocessing and feature engineering script for a machine learning pipeline is prepared.

FEATURE ENGINEERING Business Problem: A data preprocessing and feature engineering script for a machine learning pipeline needs to be prepared. It is

Required for a machine learning pipeline data preprocessing and variable engineering script needs to be prepared

Feature-Engineering Required for a machine learning pipeline data preprocessing and variable engineering script needs to be prepared. When the dataset

A simple python application for running a CI pipeline locally This app currently supports GitLab CI scripts

🏃 Simple Local CI Runner 🏃 A simple python application for running a CI pipeline locally This app currently supports GitLab CI scripts ⚙️ Setup Inst

A deep-learning pipeline for segmentation of ambiguous microscopic images.

Welcome to Official repository of deepflash2 - a deep-learning pipeline for segmentation of ambiguous microscopic images. Quick Start in 30 seconds se

Identifies the faulty wafer before it can be used for the fabrication of integrated circuits and, in photovoltaics, to manufacture solar cells.

Identifies the faulty wafer before it can be used for the fabrication of integrated circuits and, in photovoltaics, to manufacture solar cells. The project retrains itself after every prediction, making it more robust and generalized over time.

Created covid data pipeline using PySpark and MySQL that collected data stream from API and do some processing and store it into MYSQL database.

Created covid data pipeline using PySpark and MySQL that collected data stream from API and do some processing and store it into MYSQL database.

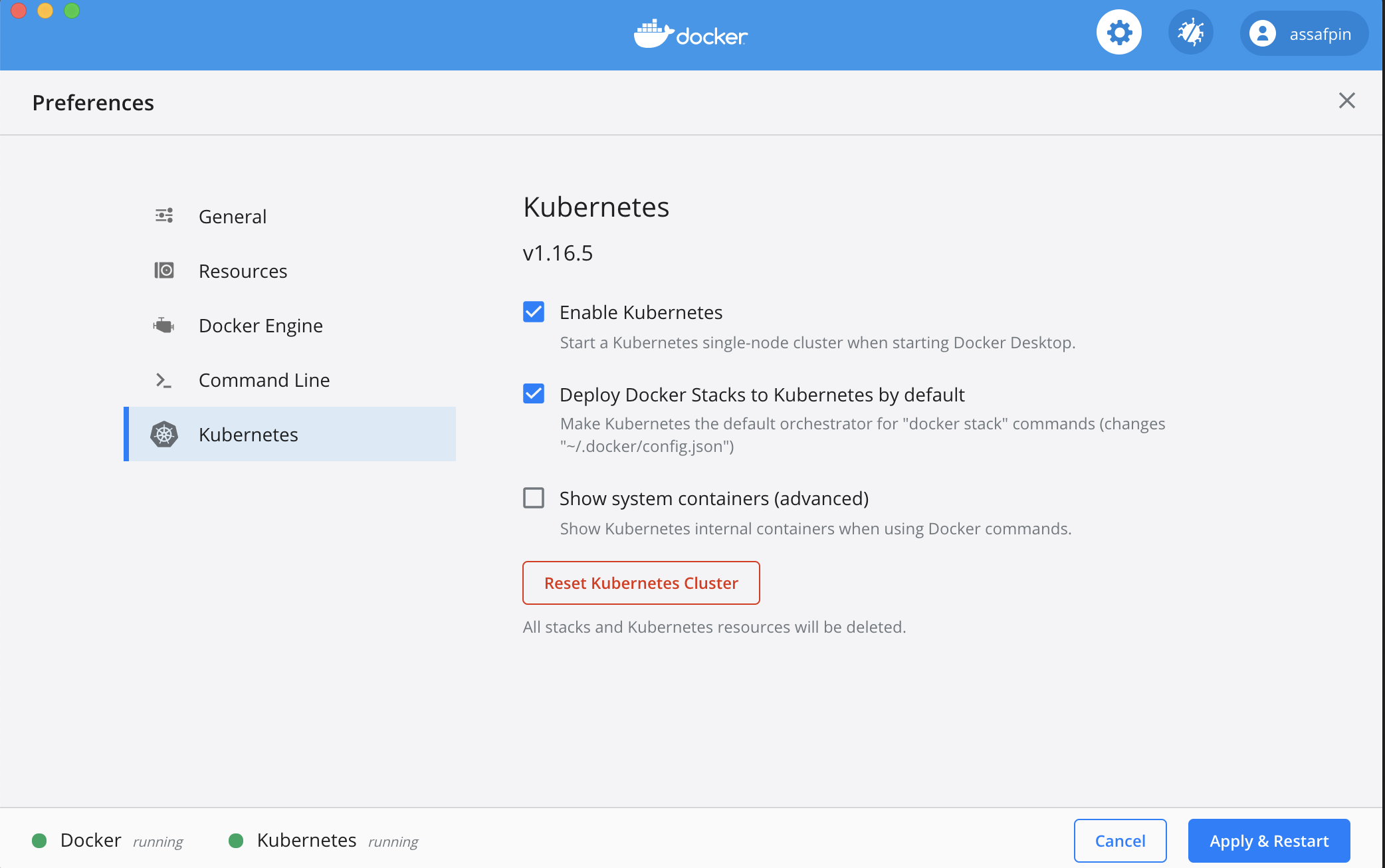

Bodywork deploys machine learning projects developed in Python, to Kubernetes.

Bodywork deploys machine learning projects developed in Python, to Kubernetes. It helps you to: serve models as microservices execute batch jobs run r

MHtyper is an end-to-end pipeline for recognized the Forensic microhaplotypes in Nanopore sequencing data.

MHtyper is an end-to-end pipeline for recognized the Forensic microhaplotypes in Nanopore sequencing data. It is implemented using Python.

A pipeline that creates consensus sequences from a Nanopore reads. I

A pipeline that creates consensus sequences from a Nanopore reads. It clusters reads that are similar to each other and creates a consensus that is then identified using BLAST.

Sample code for Harry's Airflow online trainng course

Sample code for Harry's Airflow online trainng course You can find the videos on youtube or bilibili. I am working on adding below things: the slide p

Inference pipeline for our participation in the FeTA challenge 2021.

feta-inference Inference pipeline for our participation in the FeTA challenge 2021. Team name: TRABIT Installation Download the two folders in https:/

This tool parses log data and allows to define analysis pipelines for anomaly detection.

logdata-anomaly-miner This tool parses log data and allows to define analysis pipelines for anomaly detection. It was designed to run the analysis wit

AQP is a modular pipeline built to enable the comparison and testing of different quality metric configurations.

Audio Quality Platform - AQP An Open Modular Python Platform for Objective Speech and Audio Quality Metrics AQP is a highly modular pipeline designed

A simple pytorch pipeline for semantic segmentation.

SegmentationPipeline -- Pytorch A simple pytorch pipeline for semantic segmentation. Requirements : torch=1.9.0 tqdm albumentations=1.0.3 opencv-pyt

Pipeline and Dataset helpers for complex algorithm evaluation.

tpcp - Tiny Pipelines for Complex Problems A generic way to build object-oriented datasets and algorithm pipelines and tools to evaluate them pip inst

SNV calling pipeline developed explicitly to process individual or trio vcf files obtained from Illumina based pipeline (grch37/grch38).

SNV Pipeline SNV calling pipeline developed explicitly to process individual or trio vcf files obtained from Illumina based pipeline (grch37/grch38).

In this project, ETL pipeline is build on data warehouse hosted on AWS Redshift.

ETL Pipeline for AWS Project Description In this project, ETL pipeline is build on data warehouse hosted on AWS Redshift. The data is loaded from S3 t

MLOps will help you to understand how to build a Continuous Integration and Continuous Delivery pipeline for an ML/AI project.

page_type languages products description sample python azure azure-machine-learning-service azure-devops Code which demonstrates how to set up and ope

fMRIprep Pipeline To Machine Learning

fMRIprep Pipeline To Machine Learning(Demo) 所有配置均在config.py文件下定义 前置环境(lilab) 各个节点均安装docker,并有fmripre的镜像 可以使用conda中的base环境(相应的第三份包之后更新) 1. fmriprep scr

PdpCLI is a pandas DataFrame processing CLI tool which enables you to build a pandas pipeline from a configuration file.

PdpCLI Quick Links Introduction Installation Tutorial Basic Usage Data Reader / Writer Plugins Introduction PdpCLI is a pandas DataFrame processing CL

Colossal-AI: A Unified Deep Learning System for Large-Scale Parallel Training

ColossalAI An integrated large-scale model training system with efficient parallelization techniques. arXiv: Colossal-AI: A Unified Deep Learning Syst

DWIPrep is a robust and easy-to-use pipeline for preprocessing of diverse dMRI data.

DWIPrep: A Robust Preprocessing Pipeline for dMRI Data DWIPrep is a robust and easy-to-use pipeline for preprocessing of diverse dMRI data. The transp

An Airflow operator to call the main function from the dbt-core Python package

airflow-dbt-python An Airflow operator to call the main function from the dbt-core Python package Motivation Airflow running in a managed environment

Colossal-AI: A Unified Deep Learning System for Large-Scale Parallel Training

ColossalAI An integrated large-scale model training system with efficient parallelization techniques Installation PyPI pip install colossalai Install

TrackTech: Real-time tracking of subjects and objects on multiple cameras

TrackTech: Real-time tracking of subjects and objects on multiple cameras This project is part of the 2021 spring bachelor final project of the Bachel

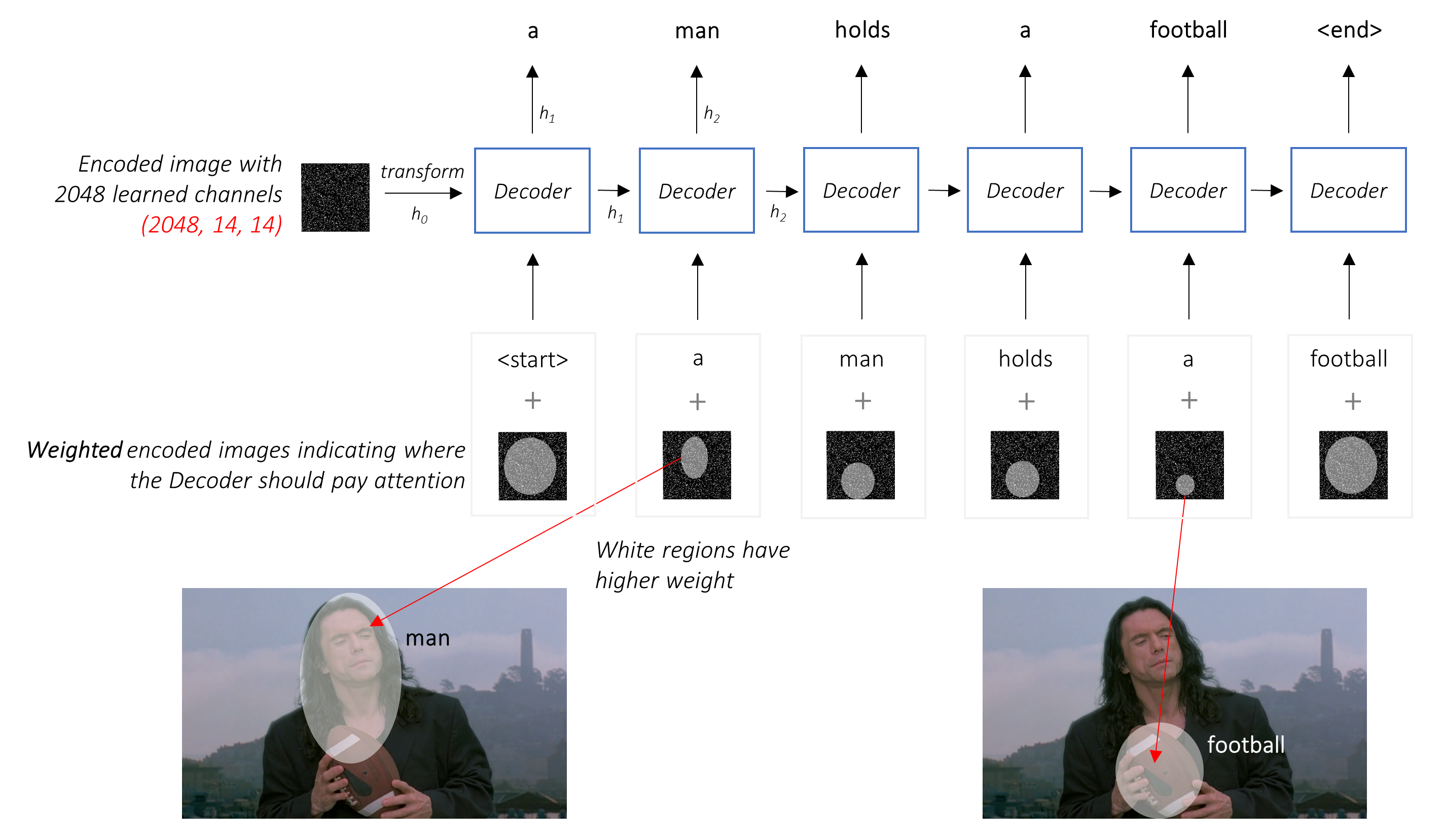

A Context-aware Visual Attention-based training pipeline for Object Detection from a Webpage screenshot!

CoVA: Context-aware Visual Attention for Webpage Information Extraction Abstract Webpage information extraction (WIE) is an important step to create k

A novel pipeline framework for multi-hop complex KGQA task. About the paper title: Improving Multi-hop Embedded Knowledge Graph Question Answering by Introducing Relational Chain Reasoning

Rce-KGQA A novel pipeline framework for multi-hop complex KGQA task. This framework mainly contains two modules, answering_filtering_module and relati

Python ML pipeline that showcases mltrace functionality.

mltrace tutorial Date: October 2021 This tutorial builds a training and testing pipeline for a toy ML prediction problem: to predict whether a passeng

PyTorch framework for Deep Learning research and development.

Accelerated DL & RL PyTorch framework for Deep Learning research and development. It was developed with a focus on reproducibility, fast experimentati

Tracking Pipeline helps you to solve the tracking problem more easily

Tracking_Pipeline Tracking_Pipeline helps you to solve the tracking problem more easily I integrate detection algorithms like: Yolov5, Yolov4, YoloX,

pipeline for migrating lichess data into postgresql

How Long Does It Take Ordinary People To "Get Good" At Chess? TL;DR: According to 5.5 years of data from 2.3 million players and 450 million games, mo

This is a practice on Airflow, which is building virtual env, installing Airflow and constructing data pipeline (DAGs)

airflow-test This is a practice on Airflow, which is Builing virtualbox env and setting Airflow on that env Installing Airflow using python virtual en

Complete portable pipeline for masking of Aadhaar Number adhering to Govt. Privacy Guidelines.

Aadhaar Number Masking Pipeline Implementation of a complete pipeline that masks the Aadhaar Number in given images to adhere to Govt. of India's Priv

Open source Python implementation of the HDR+ photography pipeline

hdrplus-python Open source Python implementation of the HDR+ photography pipeline, originally developped by Google and presented in a 2016 article. Th

Multiparametric Image Analysis

Documentation The documentation is available on populse_mia's website here Installation From PyPI, for users By cloning the package, for developers Fr

Lale is a Python library for semi-automated data science.

Lale is a Python library for semi-automated data science. Lale makes it easy to automatically select algorithms and tune hyperparameters of pipelines that are compatible with scikit-learn, in a type-safe fashion.

Framework for the Complete Gaze Tracking Pipeline

Framework for the Complete Gaze Tracking Pipeline The figure below shows a general representation of the camera-to-screen gaze tracking pipeline [1].

A tutorial presents several practical examples of how to build DAGs in Apache Airflow

Apache Airflow - Python Brasil 2021 Este tutorial apresenta vários exemplos práticos de como construir DAGs no Apache Airflow. Background Apache Airfl

MONAI Deploy App SDK offers a framework and associated tools to design, develop and verify AI-driven applications in the healthcare imaging domain.

MONAI Deploy App SDK offers a framework and associated tools to design, develop and verify AI-driven applications in the healthcare imaging domain.

Show you how to integrate Zeppelin with Airflow

Introduction This repository is to show you how to integrate Zeppelin with Airflow. The philosophy behind the ingtegration is to make the transition f

Simple and flexible ML workflow engine.

This is a simple and flexible ML workflow engine. It helps to orchestrate events across a set of microservices and create executable flow to handle requests. Engine is designed to be configurable with any microservices. Enjoy!

The easy way to combine mlflow, hydra and optuna into one machine learning pipeline.

mlflow_hydra_optuna_the_easy_way The easy way to combine mlflow, hydra and optuna into one machine learning pipeline. Objective TODO Usage 1. build do

A web scraping pipeline project that retrieves TV and movie data from two sources, then transforms and stores data in a MySQL database.

New to Streaming Scraper An in-progress web scraping project built with Python, R, and SQL. The scraped data are movie and TV show information. The go

A brand new hub for Scene Graph Generation methods based on MMdetection (2021). The pipeline of from detection, scene graph generation to downstream tasks (e.g., image cpationing) is supported. Pytorch version implementation of HetH (ECCV 2020) and TopicSG (ICCV 2021) is included.

MMSceneGraph Introduction MMSceneneGraph is an open source code hub for scene graph generation as well as supporting downstream tasks based on the sce

Automatizando a criação de DAGs usando Jinja e YAML

Automatizando a criação de DAGs no Airflow usando Jinja e YAML Arquitetura do Repo: Pastas por contexto de negócio (ex: Marketing, Analytics, HR, etc)

A geometric deep learning pipeline for predicting protein interface contacts.

A geometric deep learning pipeline for predicting protein interface contacts.

Multi-Branch CI/CD Pipeline using CDK Pipelines.

Using AWS CDK Pipelines and AWS Lambda for multi-branch pipeline management and infrastructure deployment. This project shows how to use the AWS CDK P

Write maintainable, production-ready pipelines using Jupyter or your favorite text editor. Develop locally, deploy to the cloud. ☁️

Write maintainable, production-ready pipelines using Jupyter or your favorite text editor. Develop locally, deploy to the cloud. ☁️

Pipelines de datos, 2021.

Este repo ilustra un proceso sencillo de automatización de transformación y modelado de datos, a través de un pipeline utilizando Luigi. Stack princip

Elementary is an open-source data reliability framework for modern data teams. The first module of the framework is data lineage.

Data lineage made simple, reliable, and automated. Effortlessly track the flow of data, understand dependencies and analyze impact. Features Visualiza

Shotgrid Toolkit Engine for Gaffer

Shotgun toolkit engine for Gaffer Contact : Diego Garcia Huerta Overview Implementation of a shotgun engine for Gaffer. It supports the classic bootst

Know your customer pipeline in apache air flow

KYC_pipline Know your customer pipeline in apache air flow For a successful pipeline run take these steps: Run you Airflow server Admin - connection

An adaptable Snakemake workflow which uses GATKs best practice recommendations to perform germline mutation calling starting with BAM files

Germline Mutation Calling This Snakemake workflow follows the GATK best-practice recommandations to call small germline variants. The pipeline require

A Multilingual Latent Dirichlet Allocation (LDA) Pipeline with Stop Words Removal, n-gram features, and Inverse Stemming, in Python.

Multilingual Latent Dirichlet Allocation (LDA) Pipeline This project is for text clustering using the Latent Dirichlet Allocation (LDA) algorithm. It

A production-ready pipeline for text mining and subject indexing

A production-ready pipeline for text mining and subject indexing

Project repository of Apache Airflow, deployed on Docker in Amazon EC2 via GitLab.

Airflow on Docker in EC2 + GitLab's CI/CD Personal project for simple data pipeline using Airflow. Airflow will be installed inside Docker container,

Pipeline is an asset packaging library for Django.

Pipeline Pipeline is an asset packaging library for Django, providing both CSS and JavaScript concatenation and compression, built-in JavaScript templ

A spaCy wrapper of OpenTapioca for named entity linking on Wikidata

spaCyOpenTapioca A spaCy wrapper of OpenTapioca for named entity linking on Wikidata. Table of contents Installation How to use Local OpenTapioca Vizu

Pokemon catch events project to demonstrate data pipeline on AWS

Pokemon Catches Data Pipeline This is a sample project to practice end-to-end data project; Terraform is used to deploy infrastructure; Kafka is the t

Kedro is an open-source Python framework for creating reproducible, maintainable and modular data science code

A Python framework for creating reproducible, maintainable and modular data science code.

A scalable implementation of WobblyStitcher for 3D microscopy images

WobblyStitcher Introduction A scalable implementation of WobblyStitcher Dependencies $ python -m pip install numpy scikit-image Visualization ImageJ

A data annotation pipeline to generate high-quality, large-scale speech datasets with machine pre-labeling and fully manual auditing.

About This repository provides data and code for the paper: Scalable Data Annotation Pipeline for High-Quality Large Speech Datasets Development (subm

Pipeline for fast building text classification TF-IDF + LogReg baselines.

Text Classification Baseline Pipeline for fast building text classification TF-IDF + LogReg baselines. Usage Instead of writing custom code for specif

CPOST is a CLI tool to assist with the proper sizing of Clara Deploy pipelines

CPOST (Clara Pipeline Operator Sizing Tool) Tool to measure resource usage of Clara Platform pipeline operators Cpost is a tool that will help you run

This repository contains a streaming Dataflow pipeline written in Python with Apache Beam, reading data from PubSub.

Sample streaming Dataflow pipeline written in Python This repository contains a streaming Dataflow pipeline written in Python with Apache Beam, readin

A robust pointcloud registration pipeline based on correlation.

PHASER: A Robust and Correspondence-Free Global Pointcloud Registration Ubuntu 18.04+ROS Melodic: Overview Pointcloud registration using correspondenc

Procedural 3D data generation pipeline for architecture

Synthetic Dataset Generator Authors: Stanislava Fedorova Alberto Tono Meher Shashwat Nigam Jiayao Zhang Amirhossein Ahmadnia Cecilia bolognesi Dominik

Airflow Operator for running Soda SQL scans

Airflow Operator for running Soda SQL scans

Finetuning Pipeline

KLUE Baseline Korean(한국어) KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark. See our paper fo

This demo showcase the use of onnxruntime-rs with a GPU on CUDA 11 to run Bert in a data pipeline with Rust.

Demo BERT ONNX pipeline written in rust This demo showcase the use of onnxruntime-rs with a GPU on CUDA 11 to run Bert in a data pipeline with Rust. R

Distributed DataLoader For Pytorch Based On Ray

Dpex——用户无感知分布式数据预处理组件 一、前言 随着GPU与CPU的算力差距越来越大以及模型训练时的预处理Pipeline变得越来越复杂,CPU部分的数据预处理已经逐渐成为了模型训练的瓶颈所在,这导致单机的GPU配置的提升并不能带来期望的线性加速。预处理性能瓶颈的本质在于每个GPU能够使用的C

Simplified diarization pipeline using some pretrained models - audio file to diarized segments in a few lines of code

simple_diarizer Simplified diarization pipeline using some pretrained models. Made to be a simple as possible to go from an input audio file to diariz

TorchX is a library containing standard DSLs for authoring and running PyTorch related components for an E2E production ML pipeline.

TorchX is a library containing standard DSLs for authoring and running PyTorch related components for an E2E production ML pipeline

The elegance of Airflow + the power of AWS

Orkestra The elegance of Airflow + the power of AWS

This repository contains the code for our fast polygonal building extraction from overhead images pipeline.

Polygonal Building Segmentation by Frame Field Learning We add a frame field output to an image segmentation neural network to improve segmentation qu

This repository contains all the source code that is needed for the project : An Efficient Pipeline For Bloom’s Taxonomy Using Natural Language Processing and Deep Learning

Pipeline For NLP with Bloom's Taxonomy Using Improved Question Classification and Question Generation using Deep Learning This repository contains all

Pipeline for chemical image-to-text competition

BMS-Molecular-Translation Introduction This is a pipeline for Bristol-Myers Squibb – Molecular Translation by Vadim Timakin and Maksim Zhdanov. We got

Evaluation Pipeline for our ECCV2020: Journey Towards Tiny Perceptual Super-Resolution.

Journey Towards Tiny Perceptual Super-Resolution Test code for our ECCV2020 paper: https://arxiv.org/abs/2007.04356 Our x4 upscaling pre-trained model

Clairvoyance: a Unified, End-to-End AutoML Pipeline for Medical Time Series

Clairvoyance: A Pipeline Toolkit for Medical Time Series Authors: van der Schaar Lab This repository contains implementations of Clairvoyance: A Pipel

Guide & Examples to create deeplearning gstreamer plugins and use them in your pipeline

upai-gst-dl-plugins Guide & Examples to create deeplearning gstreamer plugins and use them in your pipeline Introduction Thanks to the work done by @j

A declarative Kubeflow Management Tool inspired by Terraform

🍭 KRSH is Alpha version, so many bugs can be reported. If you find a bug, please write an Issue and grow the project together! A declarative Kubeflow

Procedural 3D data generation pipeline for architecture

Synthetic Dataset Generator Authors: Stanislava Fedorova Alberto Tono Meher Shashwat Nigam Jiayao Zhang Amirhossein Ahmadnia Cecilia bolognesi Dominik

![[CVPR-2021] UnrealPerson: An adaptive pipeline for costless person re-identification](https://github.com/FlyHighest/UnrealPerson/raw/main/imgs/unrealperson.jpg)

[CVPR-2021] UnrealPerson: An adaptive pipeline for costless person re-identification

UnrealPerson: An Adaptive Pipeline for Costless Person Re-identification In our paper (arxiv), we propose a novel pipeline, UnrealPerson, that decreas

Apache Liminal is an end-to-end platform for data engineers & scientists, allowing them to build, train and deploy machine learning models in a robust and agile way

Apache Liminals goal is to operationalise the machine learning process, allowing data scientists to quickly transition from a successful experiment to an automated pipeline of model training, validation, deployment and inference in production. Liminal provides a Domain Specific Language to build ML workflows on top of Apache Airflow.

Cloud-native, data onboarding architecture for the Google Cloud Public Datasets program

Public Datasets Pipelines Cloud-native, data pipeline architecture for onboarding datasets to the Google Cloud Public Datasets Program. Overview Requi

This is a repository for the Duke University Cloud Computing course project on Serveless Data Engineering Pipeline. For this project, I recreated the below pipeline.

AWS Data Engineering Pipeline This is a repository for the Duke University Cloud Computing course project on Serverless Data Engineering Pipeline. For

Focus on Algorithm Design, Not on Data Wrangling

The dataTap Python library is the primary interface for using dataTap's rich data management tools. Create datasets, stream annotations, and analyze model performance all with one library.

Educational project on how to build an ETL (Extract, Transform, Load) data pipeline, orchestrated with Airflow.

ETL Pipeline with Airflow, Spark, s3, MongoDB and Amazon Redshift

Socorro is the Mozilla crash ingestion pipeline. It accepts and processes Breakpad-style crash reports. It provides analysis tools.

Socorro Socorro is a Mozilla-centric ingestion pipeline and analysis tools for crash reports using the Breakpad libraries. Support This is a Mozilla-s