901 Repositories

Python behavior-sequence-transformer Libraries

Trax — Deep Learning with Clear Code and Speed

Trax — Deep Learning with Clear Code and Speed Trax is an end-to-end library for deep learning that focuses on clear code and speed. It is actively us

RuDOLPH: One Hyper-Modal Transformer can be creative as DALL-E and smart as CLIP

[Paper] [Хабр] [Model Card] [Colab] [Kaggle] RuDOLPH 🦌 🎄 ☃️ One Hyper-Modal Tr

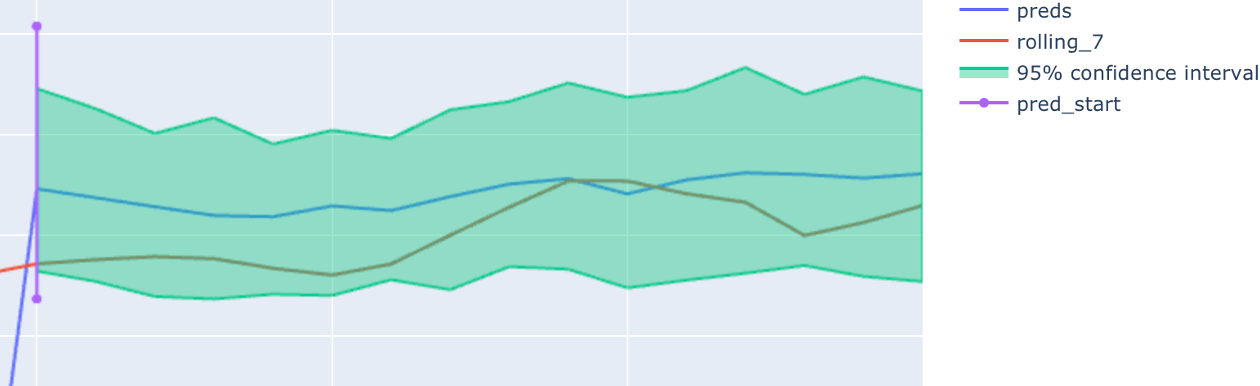

Research into Forex price prediction from price history using Deep Sequence Modeling with Stacked LSTMs.

Forex Data Prediction via Recurrent Neural Network Deep Sequence Modeling Research Paper Our research paper can be viewed here Installation Clone the

A Transformer-Based Siamese Network for Change Detection

ChangeFormer: A Transformer-Based Siamese Network for Change Detection (Under review at IGARSS-2022) Wele Gedara Chaminda Bandara, Vishal M. Patel Her

![End-to-end Temporal Action Detection with Transformer. [Under review]](https://github.com/xlliu7/TadTR/raw/master/arch.png)

End-to-end Temporal Action Detection with Transformer. [Under review]

TadTR: End-to-end Temporal Action Detection with Transformer By Xiaolong Liu, Qimeng Wang, Yao Hu, Xu Tang, Song Bai, Xiang Bai. This repo holds the c

This is a super simple visualization toolbox (script) for transformer attention visualization ✌

Trans_attention_vis This is a super simple visualization toolbox (script) for transformer attention visualization ✌ 1. How to prepare your attention m

Trained T5 and T5-large model for creating keywords from text

text to keywords Trained T5-base and T5-large model for creating keywords from text. Supported languages: ru Pretraining Large version | Pretraining B

Adversarial Robustness Comparison of Vision Transformer and MLP-Mixer to CNNs

Adversarial Robustness Comparison of Vision Transformer and MLP-Mixer to CNNs ArXiv Abstract Convolutional Neural Networks (CNNs) have become the de f

Deep learning PyTorch library for time series forecasting, classification, and anomaly detection

Deep learning for time series forecasting Flow forecast is an open-source deep learning for time series forecasting framework. It provides all the lat

PASSL包含 SimCLR,MoCo,BYOL,CLIP等基于对比学习的图像自监督算法以及 Vision-Transformer,Swin-Transformer,BEiT,CVT,T2T,MLP_Mixer等视觉Transformer算法

PASSL Introduction PASSL is a Paddle based vision library for state-of-the-art Self-Supervised Learning research with PaddlePaddle. PASSL aims to acce

Jupyter Notebook tutorials on solving real-world problems with Machine Learning & Deep Learning using PyTorch

Jupyter Notebook tutorials on solving real-world problems with Machine Learning & Deep Learning using PyTorch. Topics: Face detection with Detectron 2, Time Series anomaly detection with LSTM Autoencoders, Object Detection with YOLO v5, Build your first Neural Network, Time Series forecasting for Coronavirus daily cases, Sentiment Analysis with BERT.

Simulate genealogical trees and genomic sequence data using population genetic models

msprime msprime is a population genetics simulator based on tskit. Msprime can simulate random ancestral histories for a sample of individuals (consis

Code for the paper "Jukebox: A Generative Model for Music"

Status: Archive (code is provided as-is, no updates expected) Jukebox Code for "Jukebox: A Generative Model for Music" Paper Blog Explorer Colab Insta

Chinese version of GPT2 training code, using BERT tokenizer.

GPT2-Chinese Description Chinese version of GPT2 training code, using BERT tokenizer or BPE tokenizer. It is based on the extremely awesome repository

Repository for Project Insight: NLP as a Service

Project Insight NLP as a Service Contents Introduction Features Installation Setup and Documentation Project Details Demonstration Directory Details H

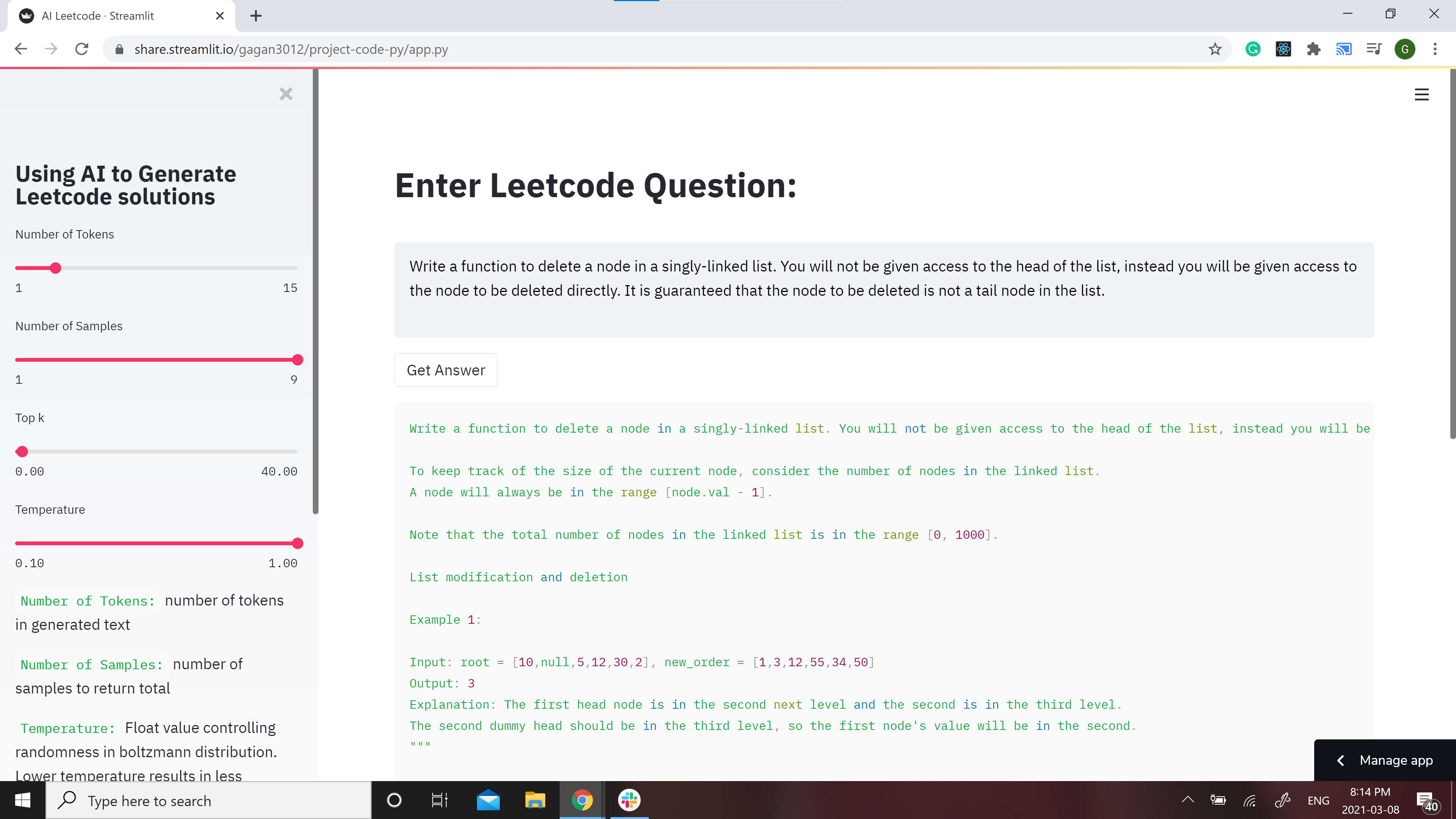

GPT-2 Model for Leetcode Questions in python

Leetcode using AI 🤖 GPT-2 Model for Leetcode Questions in python New demo here: https://huggingface.co/spaces/gagan3012/project-code-py Note: the Ans

CV backbones including GhostNet, TinyNet and TNT, developed by Huawei Noah's Ark Lab.

CV Backbones including GhostNet, TinyNet, TNT (Transformer in Transformer) developed by Huawei Noah's Ark Lab. GhostNet Code TinyNet Code TNT Code Pyr

A Transformer-Based Siamese Network for Change Detection

ChangeFormer: A Transformer-Based Siamese Network for Change Detection (Under review at IGARSS-2022) Wele Gedara Chaminda Bandara, Vishal M. Patel Her

Repository accompanying the "Sign Pose-based Transformer for Word-level Sign Language Recognition" paper

by Matyáš Boháček and Marek Hrúz, University of West Bohemia Should you have any questions or inquiries, feel free to contact us here. Repository acco

Official Pytorch Implementation for Splicing ViT Features for Semantic Appearance Transfer presenting Splice

Splicing ViT Features for Semantic Appearance Transfer [Project Page] Splice is a method for semantic appearance transfer, as described in Splicing Vi

Understanding and Overcoming the Challenges of Efficient Transformer Quantization

Transformer Quantization This repository contains the implementation and experiments for the paper presented in Yelysei Bondarenko1, Markus Nagel1, Ti

Transformer - Transformer in PyTorch

Transformer 完成进度 Embeddings and PositionalEncoding with example. MultiHeadAttent

Code for the paper: Fusformer: A Transformer-based Fusion Approach for Hyperspectral Image Super-resolution

Fusformer Code for the paper: "Fusformer: A Transformer-based Fusion Approach for Hyperspectral Image Super-resolution" Plateform Python 3.8.5 + Pytor

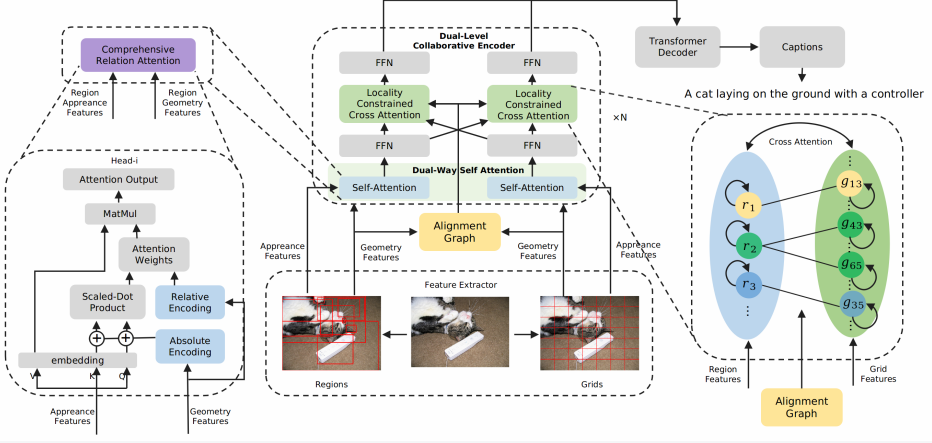

Official pytorch implementation of paper Dual-Level Collaborative Transformer for Image Captioning (AAAI 2021).

Dual-Level Collaborative Transformer for Image Captioning This repository contains the reference code for the paper Dual-Level Collaborative Transform

Meshed-Memory Transformer for Image Captioning. CVPR 2020

M²: Meshed-Memory Transformer This repository contains the reference code for the paper Meshed-Memory Transformer for Image Captioning (CVPR 2020). Pl

PyTorch code for MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning

MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning PyTorch code for our ACL 2020 paper "MART: Memory-Augmented Recur

Implementation of the Object Relation Transformer for Image Captioning

Object Relation Transformer This is a PyTorch implementation of the Object Relation Transformer published in NeurIPS 2019. You can find the paper here

Seq2seq - Sequence to Sequence Learning with Keras

Seq2seq Sequence to Sequence Learning with Keras Hi! You have just found Seq2Seq. Seq2Seq is a sequence to sequence learning add-on for the python dee

DeepSpeech - Easy-to-use Speech Toolkit including SOTA ASR pipeline, influential TTS with text frontend and End-to-End Speech Simultaneous Translation.

(简体中文|English) Quick Start | Documents | Models List PaddleSpeech is an open-source toolkit on PaddlePaddle platform for a variety of critical tasks i

Computer-Vision-Paper-Reviews - Computer Vision Paper Reviews with Key Summary along Papers & Codes

Computer-Vision-Paper-Reviews Computer Vision Paper Reviews with Key Summary along Papers & Codes. Jonathan Choi 2021 50+ Papers across Computer Visio

Keras Realtime Multi-Person Pose Estimation - Keras version of Realtime Multi-Person Pose Estimation project

This repository has become incompatible with the latest and recommended version of Tensorflow 2.0 Instead of refactoring this code painfully, I create

Transformer - A TensorFlow Implementation of the Transformer: Attention Is All You Need

[UPDATED] A TensorFlow Implementation of Attention Is All You Need When I opened this repository in 2017, there was no official code yet. I tried to i

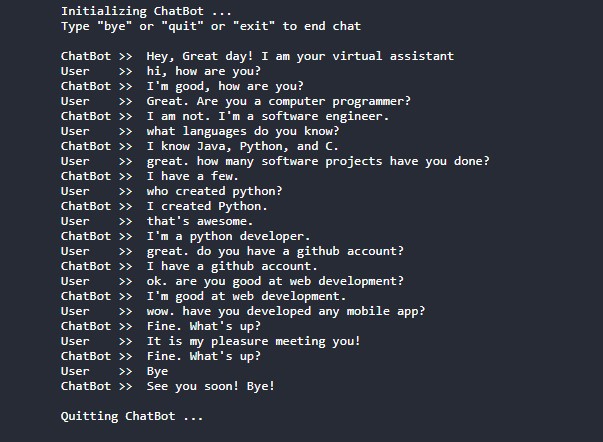

Conversational-AI-ChatBot - Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users!

Conversational AI ChatBot Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users! In this project? Thi

SPT_LSA_ViT - Implementation for Visual Transformer for Small-size Datasets

Vision Transformer for Small-Size Datasets Seung Hoon Lee and Seunghyun Lee and Byung Cheol Song | Paper Inha University Abstract Recently, the Vision

Image-popularity-score - A novel deep regression method for image scoring.

Image-popularity-score - A novel deep regression method for image scoring.

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyTorch Implementation of SSTNs for hyperspectral image classifications from the IEEE T-GRS paper "Spectral-Spatial Transformer Network for Hyperspectral Image Classification: A FAS Framework."

PyTorch Implementation of SSTN for Hyperspectral Image Classification Paper links: SSTN published on IEEE T-GRS. Also, you can directly find the imple

Implementation of ICLR 2020 paper "Revisiting Self-Training for Neural Sequence Generation"

Self-Training for Neural Sequence Generation This repo includes instructions for running noisy self-training algorithms from the following paper: Revi

Neural network sequence labeling model

Sequence labeler This is a neural network sequence labeling system. Given a sequence of tokens, it will learn to assign labels to each token. Can be u

The official pytorch implementation of ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias

ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias Introduction | Updates | Usage | Results&Pretrained Models | Statement | Intr

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation Where we are ? 12.27 目前和原论文仍有1%左右得差距,但已经力压很多SOTA了 ckpt__448_epoch_25.pth mIoU

Variable Transformer Calculator

✠ VASCO - VAriable tranSformer CalculatOr Software que calcula informações de transformadores feita para a matéria de "Conversão Eletromecânica de Ene

Official release of MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis of Pancreatic Cancer axriv: http://arxiv.org/abs/2112.13513

MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis This is the official page of the MSHT with its experimental script and records. We de

Bidirectionally transformed strings

bistring The bistring library provides non-destructive versions of common string processing operations like normalization, case folding, and find/repl

Unofficial TensorFlow implementation of the Keyword Spotting Transformer model

Keyword Spotting Transformer This is the unofficial TensorFlow implementation of the Keyword Spotting Transformer model. This model is used to train o

This program analyzes a DNA sequence and outputs snippets of DNA that are likely to be protein-coding genes.

This program analyzes a DNA sequence and outputs snippets of DNA that are likely to be protein-coding genes.

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

Retrieve annotated intron sequences and classify them as minor (U12-type) or major (U2-type)

(intron I nterrogator and C lassifier) intronIC is a program that can be used to classify intron sequences as minor (U12-type) or major (U2-type), usi

Machine Learning Course Project, IMDB movie review sentiment analysis by lstm, cnn, and transformer

IMDB Sentiment Analysis This is the final project of Machine Learning Courses in Huazhong University of Science and Technology, School of Artificial I

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

A website which allows you to play with the GPT-2 transformer

transformers A website which allows you to play with the GPT-2 model Built with ❤️ by raphtlw Table of contents Model Setup About Contributors Model T

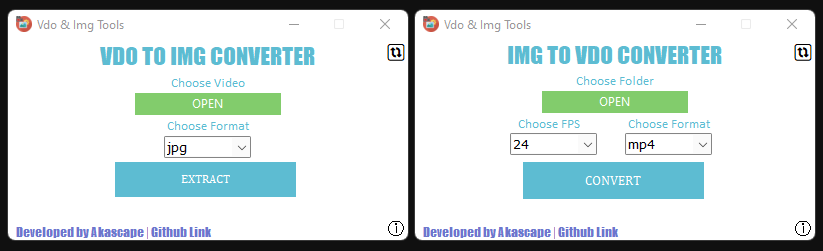

An easy to use GUI based video to image sequence converter (and vice versa).

Vdo & Img Conversion Tools This is a quick conversion tool made with python that can save you a lot of time. With this tool you can extract image sequ

Tensorflow implementation for "Improved Transformer for High-Resolution GANs" (NeurIPS 2021).

HiT-GAN Official TensorFlow Implementation HiT-GAN presents a Transformer-based generator that is trained based on Generative Adversarial Networks (GA

Sequence lineage information extracted from RKI sequence data repo

Pango lineage information for German SARS-CoV-2 sequences This repository contains a join of the metadata and pango lineage tables of all German SARS-

Python package for analyzing behavioral data for Brain Observatory: Visual Behavior

Allen Institute Visual Behavior Analysis package This repository contains code for analyzing behavioral data from the Allen Brain Observatory: Visual

Group Activity Recognition with Clustered Spatial Temporal Transformer

GroupFormer Group Activity Recognition with Clustered Spatial-TemporalTransformer Backbone Style Action Acc Activity Acc Config Download Inv3+flow+pos

A toolkit to generate MR sequence diagrams

mrsd: a toolkit to generate MR sequence diagrams mrsd is a Python toolkit to generate MR sequence diagrams, as shown below for the basic FLASH sequenc

Source code of SIGIR2021 Paper 'One Chatbot Per Person: Creating Personalized Chatbots based on Implicit Profiles'

DHAP Source code of SIGIR2021 Long Paper: One Chatbot Per Person: Creating Personalized Chatbots based on Implicit User Profiles . Preinstallation Fir

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

These are the scripts used for the project of ‘Assembly of a pan-genome for global cattle reveals missing sequence and novel structural variation, providing new insights into their diversity and evolution history’

script-SV-genotyping These are the scripts used for the project of ‘Assembly of a pan-genome for global cattle reveals missing sequence and novel stru

This repository contains a CBIR system that uses swin transformer to extract image's feature.

Swin-transformer based CBIR This repository contains a CBIR(content-based image retrieval) system. Here we use Swin-transformer to extract query image

StyleSwin: Transformer-based GAN for High-resolution Image Generation

StyleSwin This repo is the official implementation of "StyleSwin: Transformer-based GAN for High-resolution Image Generation". By Bowen Zhang, Shuyang

Official Pytorch implementation of the paper: "Locally Shifted Attention With Early Global Integration"

Locally-Shifted-Attention-With-Early-Global-Integration Pretrained models You can download all the models from here. Training Imagenet python -m torch

A Japanese tokenizer based on recurrent neural networks

Nagisa is a python module for Japanese word segmentation/POS-tagging. It is designed to be a simple and easy-to-use tool. This tool has the following

Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021).

STAR-pytorch Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021). CVF (pdf) STAR-DC

This project aims to explore the deployment of Swin-Transformer based on TensorRT, including the test results of FP16 and INT8.

Swin Transformer This project aims to explore the deployment of SwinTransformer based on TensorRT, including the test results of FP16 and INT8. Introd

OSLO: Open Source framework for Large-scale transformer Optimization

O S L O Open Source framework for Large-scale transformer Optimization What's New: December 21, 2021 Released OSLO 1.0. What is OSLO about? OSLO is a

TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning

TransZero++ This repository contains the testing code for the paper "TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning" submitted

MISSFormer: An Effective Medical Image Segmentation Transformer

MISSFormer Code for paper "MISSFormer: An Effective Medical Image Segmentation Transformer". Please read our preprint at the following link: paper_add

TensorFlow implementation of "TokenLearner: What Can 8 Learned Tokens Do for Images and Videos?"

TokenLearner: What Can 8 Learned Tokens Do for Images and Videos? Source: Improving Vision Transformer Efficiency and Accuracy by Learning to Tokenize

BARTpho: Pre-trained Sequence-to-Sequence Models for Vietnamese

Table of contents Introduction Using BARTpho with fairseq Using BARTpho with transformers Notes BARTpho: Pre-trained Sequence-to-Sequence Models for V

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Tensorflow Implementation of ECCV'18 paper: Multimodal Human Motion Synthesis

MT-VAE for Multimodal Human Motion Synthesis This is the code for ECCV 2018 paper MT-VAE: Learning Motion Transformations to Generate Multimodal Human

"Learning and Analyzing Generation Order for Undirected Sequence Models" in Findings of EMNLP, 2021

undirected-generation-dev This repo contains the source code of the models described in the following paper "Learning and Analyzing Generation Order f

Mixed Transformer UNet for Medical Image Segmentation

MT-UNet Update 2021/11/19 Thank you for your interest in our work. We have uploaded the code of our MTUNet to help peers conduct further research on i

This was initially the repo for the project of PSYC626@USC of Asaf Mazar, Millad Kassaie and Georgios Chochlakis named "Powered by the Will? Exploring Lay Theories of Behavior Change through Social Media"

Subreddit Analysis This repo includes tools for Subreddit analysis, originally developed for our class project of PSYC 626 in USC, titled "Powered by

SeqLike - flexible biological sequence objects in Python

SeqLike - flexible biological sequence objects in Python Introduction A single object API that makes working with biological sequences in Python more

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

[ICCV2021] TransReID: Transformer-based Object Re-Identification [pdf] The official repository for TransReID: Transformer-based Object Re-Identificati

This is a Boids Simulation, written in Python with Pygame.

PyNBoids A Python Boids Simulation This is a Boids simulation, written in Python3, with Pygame2 and NumPy. To use: Save the pynboids_sp.py file (and n

This repository builds a basic vision transformer from scratch so that one beginner can understand the theory of vision transformer.

vision-transformer-from-scratch This repository includes several kinds of vision transformers from scratch so that one beginner can understand the the

A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization

University1652-Baseline [Paper] [Slide] [Explore Drone-view Data] [Explore Satellite-view Data] [Explore Street-view Data] [Video Sample] [中文介绍] This

Official PyTorch implementation of SegFormer

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

FastFormers - highly efficient transformer models for NLU

FastFormers FastFormers provides a set of recipes and methods to achieve highly efficient inference of Transformer models for Natural Language Underst

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models

PEGASUS library Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models, or PEGASUS, uses self-supervised

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

The code for two papers: Feedback Transformer and Expire-Span.

transformer-sequential This repo contains the code for two papers: Feedback Transformer Expire-Span The training code is structured for long sequentia

Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing

Introduction Funnel-Transformer is a new self-attention model that gradually compresses the sequence of hidden states to a shorter one and hence reduc

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

An implementation of the Pay Attention when Required transformer

Pay Attention when Required (PAR) Transformer-XL An implementation of the Pay Attention when Required transformer from the paper: https://arxiv.org/pd

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

Code for EMNLP20 paper: "ProphetNet: Predicting Future N-gram for Sequence-to-Sequence Pre-training"

ProphetNet-X This repo provides the code for reproducing the experiments in ProphetNet. In the paper, we propose a new pre-trained language model call