901 Repositories

Python behavior-sequence-transformer Libraries

A graph-to-sequence model for one-step retrosynthesis and reaction outcome prediction.

Graph2SMILES A graph-to-sequence model for one-step retrosynthesis and reaction outcome prediction. 1. Environmental setup System requirements Ubuntu:

Implementation of the paper "Generating Symbolic Reasoning Problems with Transformer GANs"

Generating Symbolic Reasoning Problems with Transformer GANs This is the implementation of the paper Generating Symbolic Reasoning Problems with Trans

Video Swin Transformer - PyTorch

Video-Swin-Transformer-Pytorch This repo is a simple usage of the official implementation "Video Swin Transformer". Introduction Video Swin Transforme

Source code for "Taming Visually Guided Sound Generation" (Oral at the BMVC 2021)

Taming Visually Guided Sound Generation • [Project Page] • [ArXiv] • [Poster] • • Listen for the samples on our project page. Overview We propose to t

xFormers is a modular and field agnostic library to flexibly generate transformer architectures by interoperable and optimized building blocks.

Description xFormers is a modular and field agnostic library to flexibly generate transformer architectures by interoperable and optimized building bl

Transformer Based Korean Sentence Spacing Corrector

TKOrrector Transformer Based Korean Sentence Spacing Corrector License Summary This solution is made available under Apache 2 license. See the LICENSE

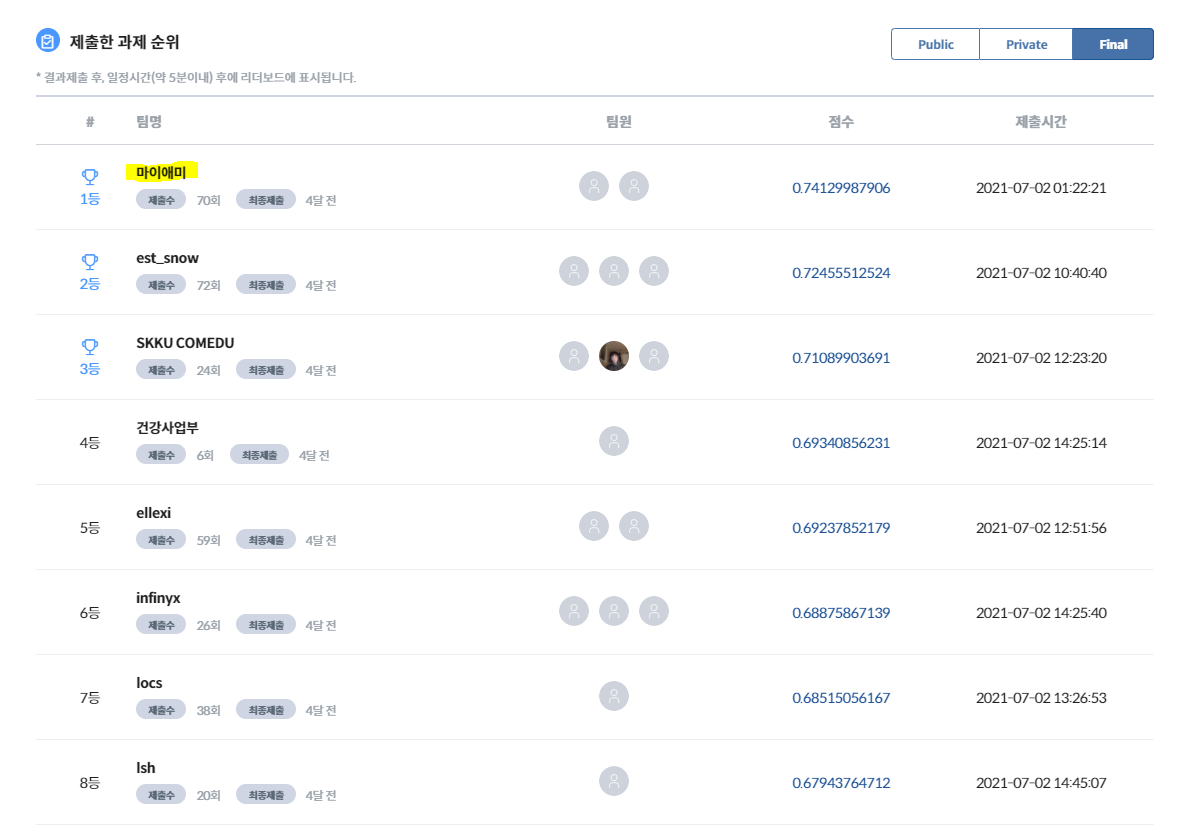

1st ranked 'driver careless behavior detection' for AI Online Competition 2021, hosted by MSIT Korea.

2021AICompetition-03 본 repo 는 mAy-I Inc. 팀으로 참가한 2021 인공지능 온라인 경진대회 중 [이미지] 운전 사고 예방을 위한 운전자 부주의 행동 검출 모델] 태스크 수행을 위한 레포지토리입니다. mAy-I 는 과학기술정보통신부가 주최하

This is our implementation of GHCF: Graph Heterogeneous Collaborative Filtering (AAAI 2021)

GHCF This is our implementation of the paper: Chong Chen, Weizhi Ma, Min Zhang, Zhaowei Wang, Xiuqiang He, Chenyang Wang, Yiqun Liu and Shaoping Ma. 2

Beyond Clicks: Modeling Multi-Relational Item Graph for Session-Based Target Behavior Prediction

MGNN-SPred This is our Tensorflow implementation for the paper: WenWang,Wei Zhang, Shukai Liu, Qi Liu, Bo Zhang, Leyu Lin, and Hongyuan Zha. 2020. Bey

Continuous-Time Sequential Recommendation with Temporal Graph Collaborative Transformer

Introduction This is the repository of our accepted CIKM 2021 paper "Continuous-Time Sequential Recommendation with Temporal Graph Collaborative Trans

High-Fidelity Pluralistic Image Completion with Transformers (ICCV 2021)

Image Completion Transformer (ICT) Project Page | Paper (ArXiv) | Pre-trained Models | Supplemental Material This repository is the official pytorch i

Official implementation for paper: A Latent Transformer for Disentangled Face Editing in Images and Videos.

A Latent Transformer for Disentangled Face Editing in Images and Videos Official implementation for paper: A Latent Transformer for Disentangled Face

Officially unofficial re-implementation of paper: Paint Transformer: Feed Forward Neural Painting with Stroke Prediction, ICCV 2021.

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction [Paper] [Official Paddle Implementation] [Huggingface Gradio Demo] [Unofficial

Styled Handwritten Text Generation with Transformers (ICCV 21)

⚡ Handwriting Transformers [PDF] Ankan Kumar Bhunia, Salman Khan, Hisham Cholakkal, Rao Muhammad Anwer, Fahad Shahbaz Khan & Mubarak Shah Abstract: We

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Source code for CAST - Crisis Domain Adaptation Using Sequence-to-sequence Transformers (Accepted to ISCRAM 2021, CorePaper).

Source code for CAST: Crisis Domain Adaptation UsingSequence-to-sequenceTransformers (Paper, BibTeX, Accepted to ISCRAM 2021, CorePaper) Quick start D

Source code for GNN-LSPE (Graph Neural Networks with Learnable Structural and Positional Representations)

Graph Neural Networks with Learnable Structural and Positional Representations Source code for the paper "Graph Neural Networks with Learnable Structu

Source code for Transformer-based Multi-task Learning for Disaster Tweet Categorisation (UCD's participation in TREC-IS 2020A, 2020B and 2021A).

Source code for "UCD participation in TREC-IS 2020A, 2020B and 2021A". *** update at: 2021/05/25 This repo so far relates to the following work: Trans

Official implementation for paper: A Latent Transformer for Disentangled Face Editing in Images and Videos.

A Latent Transformer for Disentangled Face Editing in Images and Videos Official implementation for paper: A Latent Transformer for Disentangled Face

Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer

MT5_paddle Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer English | 简体中文 mT5: A Massively

Pytorch reimplementation of the Vision Transformer (An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale)

Vision Transformer Pytorch reimplementation of Google's repository for the ViT model that was released with the paper An Image is Worth 16x16 Words: T

Implementation of ConvMixer in TensorFlow and Keras

ConvMixer ConvMixer, an extremely simple model that is similar in spirit to the ViT and the even-more-basic MLP-Mixer in that it operates directly on

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision Training Efficiency We show the training efficiency of our DSLP model b

A curated list of awesome resources combining Transformers with Neural Architecture Search

A curated list of awesome resources combining Transformers with Neural Architecture Search

Official repo for BMVC2021 paper ASFormer: Transformer for Action Segmentation

ASFormer: Transformer for Action Segmentation This repo provides training & inference code for BMVC 2021 paper: ASFormer: Transformer for Action Segme

Rethinking Transformer-based Set Prediction for Object Detection

Rethinking Transformer-based Set Prediction for Object Detection Here are the code for the ICCV paper. The code is adapted from Detectron2 and AdelaiD

A collection of papers about Transformer in the field of medical image analysis.

A collection of papers about Transformer in the field of medical image analysis.

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision Training Efficiency We show the training efficiency of our DSLP model b

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation We propose a novel approach to translate unpaired contrast computed

FG-transformer-TTS Fine-grained style control in transformer-based text-to-speech synthesis

LST-TTS Official implementation for the paper Fine-grained style control in transformer-based text-to-speech synthesis. Submitted to ICASSP 2022. Audi

Code for the preprint "Well-classified Examples are Underestimated in Classification with Deep Neural Networks"

This is a repository for the paper of "Well-classified Examples are Underestimated in Classification with Deep Neural Networks" The implementation and

Quantum-enhanced transformer neural network

Example of a Quantum-enhanced transformer neural network Get the code: git clone https://github.com/rdisipio/qtransformer.git cd qtransformer Create

Repository sharing code and the model for the paper "Rescoring Sequence-to-Sequence Models for Text Line Recognition with CTC-Prefixes"

Rescoring Sequence-to-Sequence Models for Text Line Recognition with CTC-Prefixes Setup virtualenv -p python3 venv source venv/bin/activate pip instal

This is an official implementation for "Swin Transformer: Hierarchical Vision Transformer using Shifted Windows" on Semantic Segmentation.

Swin Transformer for Semantic Segmentation of satellite images This repo contains the supported code and configuration files to reproduce semantic seg

This package implements THOR: Transformer with Stochastic Experts.

THOR: Transformer with Stochastic Experts This PyTorch package implements Taming Sparsely Activated Transformer with Stochastic Experts. Installation

PyTorch implementation for paper StARformer: Transformer with State-Action-Reward Representations.

StARformer This repository contains the PyTorch implementation for our paper titled StARformer: Transformer with State-Action-Reward Representations.

Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation.

Unified-EPT Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation. Installation Linux, CUDA=10.0,

![[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer](https://github.com/AllenXiangX/SnowflakeNet/raw/main/pics/network.png)

[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer

This repository contains the source code for the paper SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer (ICCV 2021 Oral). The project page is here.

Pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering".

TRAnsformer Routing Networks (TRAR) This is an official implementation for ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visu

Unofficial PyTorch implementation of MobileViT based on paper "MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer".

MobileViT RegNet Unofficial PyTorch implementation of MobileViT based on paper MOBILEVIT: LIGHT-WEIGHT, GENERAL-PURPOSE, AND MOBILE-FRIENDLY VISION TR

Google's Meena transformer chatbot implementation

Here's my attempt at recreating Meena, a state of the art chatbot developed by Google Research and described in the paper Towards a Human-like Open-Domain Chatbot.

Mesh Graphormer is a new transformer-based method for human pose and mesh reconsruction from an input image

MeshGraphormer ✨ ✨ This is our research code of Mesh Graphormer. Mesh Graphormer is a new transformer-based method for human pose and mesh reconsructi

3D-Transformer: Molecular Representation with Transformer in 3D Space

3D-Transformer: Molecular Representation with Transformer in 3D Space

ULMFiT for Genomic Sequence Data

Genomic ULMFiT This is an implementation of ULMFiT for genomics classification using Pytorch and Fastai. The model architecture used is based on the A

![[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax](https://github.com/scaomath/galerkin-transformer/raw/main/data/simple_ft.png)

[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax

[NeurIPS 2021] Galerkin Transformer: linear attention without softmax Summary A non-numerical analyst oriented explanation on Toward Data Science abou

Improving 3D Object Detection with Channel-wise Transformer

"Improving 3D Object Detection with Channel-wise Transformer" Thanks for the OpenPCDet, this implementation of the CT3D is mainly based on the pcdet v

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

An official repository for Paper "Uformer: A General U-Shaped Transformer for Image Restoration".

Uformer: A General U-Shaped Transformer for Image Restoration Zhendong Wang, Xiaodong Cun, Jianmin Bao and Jianzhuang Liu Paper: https://arxiv.org/abs

A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You can find two approaches for achieving this in this repo.

multitask-learning-transformers A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You

VSR-Transformer - This paper proposes a new Transformer for video super-resolution (called VSR-Transformer).

VSR-Transformer By Jiezhang Cao, Yawei Li, Kai Zhang, Luc Van Gool This paper proposes a new Transformer for video super-resolution (called VSR-Transf

Code release for ICCV 2021 paper "Anticipative Video Transformer"

Anticipative Video Transformer Ranked first in the Action Anticipation task of the CVPR 2021 EPIC-Kitchens Challenge! (entry: AVT-FB-UT) [project page

A simple but complete full-attention transformer with a set of promising experimental features from various papers

x-transformers A concise but fully-featured transformer, complete with a set of promising experimental features from various papers. Install $ pip ins

Implementation of Neural Distance Embeddings for Biological Sequences (NeuroSEED) in PyTorch

Neural Distance Embeddings for Biological Sequences Official implementation of Neural Distance Embeddings for Biological Sequences (NeuroSEED) in PyTo

WRENCH: Weak supeRvision bENCHmark

🔧 What is it? Wrench is a benchmark platform containing diverse weak supervision tasks. It also provides a common and easy framework for development

Unofficial Implementation of Zero-Shot Text-to-Speech for Text-Based Insertion in Audio Narration

Zero-Shot Text-to-Speech for Text-Based Insertion in Audio Narration This repo contains only model Implementation of Zero-Shot Text-to-Speech for Text

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

This repository contains an overview of important follow-up works based on the original Vision Transformer (ViT) by Google.

This repository contains an overview of important follow-up works based on the original Vision Transformer (ViT) by Google.

GluonMM is a library of transformer models for computer vision and multi-modality research

GluonMM is a library of transformer models for computer vision and multi-modality research. It contains reference implementations of widely adopted baseline models and also research work from Amazon Research.

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

[ICLR'19] Trellis Networks for Sequence Modeling

TrellisNet for Sequence Modeling This repository contains the experiments done in paper Trellis Networks for Sequence Modeling by Shaojie Bai, J. Zico

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

A Fast Sequence Transducer Implementation with PyTorch Bindings

transducer A Fast Sequence Transducer Implementation with PyTorch Bindings. The corresponding publication is Sequence Transduction with Recurrent Neur

Sequence modeling benchmarks and temporal convolutional networks

Sequence Modeling Benchmarks and Temporal Convolutional Networks (TCN) This repository contains the experiments done in the work An Empirical Evaluati

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

The GitHub repository for the paper: “Time Series is a Special Sequence: Forecasting with Sample Convolution and Interaction“.

SCINet This is the original PyTorch implementation of the following work: Time Series is a Special Sequence: Forecasting with Sample Convolution and I

Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models.

Tevatron Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models. The toolkit has a modularized

Sequence-to-Sequence learning using PyTorch

Seq2Seq in PyTorch This is a complete suite for training sequence-to-sequence models in PyTorch. It consists of several models and code to both train

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Reinforcement learning models in ViZDoom environment

DoomNet DoomNet is a ViZDoom agent trained by reinforcement learning. The agent is a neural network that outputs a probability of actions given only p

Sequence to Sequence Models with PyTorch

Sequence to Sequence models with PyTorch This repository contains implementations of Sequence to Sequence (Seq2Seq) models in PyTorch At present it ha

Code repo for realtime multi-person pose estimation in CVPR'17 (Oral)

Realtime Multi-Person Pose Estimation By Zhe Cao, Tomas Simon, Shih-En Wei, Yaser Sheikh. Introduction Code repo for winning 2016 MSCOCO Keypoints Cha

Official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT This repository is the official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification. ArXiv If

Official implement of Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer

Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer This repository contains the PyTorch code for Evo-ViT. This work proposes a slow-fas

Supervised Contrastive Learning for Downstream Optimized Sequence Representations

SupCL-Seq 📖 Supervised Contrastive Learning for Downstream Optimized Sequence representations (SupCS-Seq) accepted to be published in EMNLP 2021, ext

Learning based AI for playing multi-round Koi-Koi hanafuda card games. Have fun.

Koi-Koi AI Learning based AI for playing multi-round Koi-Koi hanafuda card games. Platform Python PyTorch PySimpleGUI (for the interface playing vs AI

Natural Language Processing with transformers

we want to create a repo to illustrate usage of transformers in chinese

A collection of SOTA Image Classification Models in PyTorch

A collection of SOTA Image Classification Models in PyTorch

This is the official code for the paper "Tracker Meets Night: A Transformer Enhancer for UAV Tracking".

SCT This is the official code for the paper "Tracker Meets Night: A Transformer Enhancer for UAV Tracking" The spatial-channel Transformer (SCT) enhan

Implementation of a Transformer, but completely in Triton

Transformer in Triton (wip) Implementation of a Transformer, but completely in Triton. I'm completely new to lower-level neural net code, so this repo

Code & Models for 3DETR - an End-to-end transformer model for 3D object detection

3DETR: An End-to-End Transformer Model for 3D Object Detection PyTorch implementation and models for 3DETR. 3DETR (3D DEtection TRansformer) is a simp

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Implementation of Hierarchical Transformer Memory (HTM) for Pytorch

Hierarchical Transformer Memory (HTM) - Pytorch Implementation of Hierarchical Transformer Memory (HTM) for Pytorch. This Deepmind paper proposes a si

Selene is a Python library and command line interface for training deep neural networks from biological sequence data such as genomes.

Selene is a Python library and command line interface for training deep neural networks from biological sequence data such as genomes.

Code for the paper: Sequence-to-Sequence Learning with Latent Neural Grammars

Code for the paper: Sequence-to-Sequence Learning with Latent Neural Grammars

Optimized code based on M2 for faster image captioning training

Transformer Captioning This repository contains the code for Transformer-based image captioning. Based on meshed-memory-transformer, we further optimi

Tutorial to pretrain & fine-tune a 🤗 Flax T5 model on a TPUv3-8 with GCP

Pretrain and Fine-tune a T5 model with Flax on GCP This tutorial details how pretrain and fine-tune a FlaxT5 model from HuggingFace using a TPU VM ava

Code and checkpoints for training the transformer-based Table QA models introduced in the paper TAPAS: Weakly Supervised Table Parsing via Pre-training.

End-to-end neural table-text understanding models.

CTRL-C: Camera calibration TRansformer with Line-Classification

CTRL-C: Camera calibration TRansformer with Line-Classification This repository contains the official code and pretrained models for CTRL-C (Camera ca

nnFormer: Interleaved Transformer for Volumetric Segmentation Code for paper "nnFormer: Interleaved Transformer for Volumetric Segmentation "

nnFormer: Interleaved Transformer for Volumetric Segmentation Code for paper "nnFormer: Interleaved Transformer for Volumetric Segmentation ". Please

Code for the paper "Reinforcement Learning as One Big Sequence Modeling Problem"

Trajectory Transformer Code release for Reinforcement Learning as One Big Sequence Modeling Problem. Installation All python dependencies are in envir

Voxel Transformer for 3D object detection

Voxel Transformer This is a reproduced repo of Voxel Transformer for 3D object detection. The code is mainly based on OpenPCDet. Introduction We provi

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019)

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019) Introduction Official implementation of Adaptive Pyramid Context Network

ZSL-KG is a general-purpose zero-shot learning framework with a novel transformer graph convolutional network (TrGCN) to learn class representation from common sense knowledge graphs.

ZSL-KG is a general-purpose zero-shot learning framework with a novel transformer graph convolutional network (TrGCN) to learn class representa

Image Captioning using CNN and Transformers

Image-Captioning Keras/Tensorflow Image Captioning application using CNN and Transformer as encoder/decoder. In particulary, the architecture consists

MWPToolkit is a PyTorch-based toolkit for Math Word Problem (MWP) solving.

MWPToolkit is a PyTorch-based toolkit for Math Word Problem (MWP) solving. It is a comprehensive framework for research purpose that integrates popular MWP benchmark datasets and typical deep learning-based MWP algorithms.

Train GPT-3 model on V100(16GB Mem) Using improved Transformer.

GPT-X using transformer pytorch