223 Repositories

Python context-encoder Libraries

![[CVPR 2016] Unsupervised Feature Learning by Image Inpainting using GANs](https://github.com/pathak22/context-encoder/raw/master/images/teaser.jpg)

[CVPR 2016] Unsupervised Feature Learning by Image Inpainting using GANs

Context Encoders: Feature Learning by Inpainting CVPR 2016 [Project Website] [Imagenet Results] Sample results on held-out images: This is the trainin

An implementation of a sequence to sequence neural network using an encoder-decoder

Keras implementation of a sequence to sequence model for time series prediction using an encoder-decoder architecture. I created this post to share a

DeepSTD: Mining Spatio-temporal Disturbances of Multiple Context Factors for Citywide Traffic Flow Prediction

DeepSTD: Mining Spatio-temporal Disturbances of Multiple Context Factors for Citywide Traffic Flow Prediction This is the implementation of DeepSTD in

Code for our paper "Multi-scale Guided Attention for Medical Image Segmentation"

Medical Image Segmentation with Guided Attention This repository contains the code of our paper: "'Multi-scale self-guided attention for medical image

High-resolution networks and Segmentation Transformer for Semantic Segmentation

High-resolution networks and Segmentation Transformer for Semantic Segmentation Branches This is the implementation for HRNet + OCR. The PyTroch 1.1 v

DeepLab is a state-of-art deep learning system for semantic image segmentation built on top of Caffe.

DeepLab Introduction DeepLab is a state-of-art deep learning system for semantic image segmentation built on top of Caffe. It combines densely-compute

A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation

Segnet is deep fully convolutional neural network architecture for semantic pixel-wise segmentation. This is implementation of http://arxiv.org/pdf/15

Implementation of SegNet: A Deep Convolutional Encoder-Decoder Architecture for Semantic Pixel-Wise Labelling

Caffe SegNet This is a modified version of Caffe which supports the SegNet architecture As described in SegNet: A Deep Convolutional Encoder-Decoder A

UNet model with VGG11 encoder pre-trained on Kaggle Carvana dataset

TernausNet: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation By Vladimir Iglovikov and Alexey Shvets Introduction TernausNet is

Very simple encoding scheme that will encode data as a series of OwOs or UwUs.

OwO Encoder Very simple encoding scheme that will encode data as a series of OwOs or UwUs. The encoder is a simple state machine. Still needs a decode

Encode stuff with ducks!

Duckify Encoder Usage Download main.py and run it. main.py has an encoded version in encoded_main.py.txt. As A Module Download the duckify folder (or

This is a package that allows you to create a key-value vault for storing variables in a global context

This is a package that allows you to create a key-value vault for storing variables in a global context. It allows you to set up a keyring with pre-defined constants which act as keys for the vault. These constants are then what is stored inside the vault. A key is just a string, but the value that the key is mapped to can be assigned to any type of object in Python. If the object is serializable (like a list or a dict), it can also be writen to a JSON file You can then use a decorator to annotate functions that you want to have use this vault to either store return variables in or to extract variables to be used as input for the function.

BaseCrack is a tool written in Python that can decode all alphanumeric base encoding schemes.

BaseCrack Decoder For Base Encoding Schemes BaseCrack is a tool written in Python that can decode all alphanumeric base encoding schemes. This tool ca

Demo of using Auto Encoder for Image Denoising

Demo of using Auto Encoder for Image Denoising

Data Intelligence Applications - Online Product Advertising and Pricing with Context Generation

Data Intelligence Applications - Online Product Advertising and Pricing with Context Generation Overview Consider the scenario in which advertisement

python scripts and other files to generate induction encoder PCBs in Kicad

induction_encoder python scripts and other files to generate induction encoder PCBs in Kicad Targeting the Renesas IPS2200 encoder chips.

Python package to parse and generate C/C++ code as context aware preprocessor.

Devana Devana is a python tool that make it easy to parsing, format, transform and generate C++ (or C) code. This tool uses libclang to parse the code

Experiments and code to generate the GINC small-scale in-context learning dataset from "An Explanation for In-context Learning as Implicit Bayesian Inference"

GINC small-scale in-context learning dataset GINC (Generative In-Context learning Dataset) is a small-scale synthetic dataset for studying in-context

STS Benchmark comprises a selection of the English datasets used in the STS tasks organized in the context of SemEval between 2012 and 2017. The selection of datasets include text from image captions, news headlines and user forums.

stsb_multi_mt_en STS Benchmark comprises a selection of the English datasets used in the STS tasks organized in the context of SemEval between 2012 an

Multimodal Temporal Context Network (MTCN)

Multimodal Temporal Context Network (MTCN) This repository implements the model proposed in the paper: Evangelos Kazakos, Jaesung Huh, Arsha Nagrani,

Trajectory Prediction with Graph-based Dual-scale Context Fusion

DSP: Trajectory Prediction with Graph-based Dual-scale Context Fusion Introduction This is the project page of the paper Lu Zhang, Peiliang Li, Jing C

Context-free grammar to Sublime-syntax file

Generate a sublime-syntax file from a non-left-recursive, follow-determined, context-free grammar

An original implementation of "MetaICL Learning to Learn In Context" by Sewon Min, Mike Lewis, Luke Zettlemoyer and Hannaneh Hajishirzi

MetaICL: Learning to Learn In Context This includes an original implementation of "MetaICL: Learning to Learn In Context" by Sewon Min, Mike Lewis, Lu

An original implementation of "MetaICL Learning to Learn In Context" by Sewon Min, Mike Lewis, Luke Zettlemoyer and Hannaneh Hajishirzi

MetaICL: Learning to Learn In Context This includes an original implementation of "MetaICL: Learning to Learn In Context" by Sewon Min, Mike Lewis, Lu

Unified MultiWOZ evaluation scripts for the context-to-response task.

MultiWOZ Context-to-Response Evaluation Standardized and easy to use Inform, Success, BLEU ~ See the paper ~ Easy-to-use scripts for standardized eval

Unofficial pytorch implementation of the paper "Context Reasoning Attention Network for Image Super-Resolution (ICCV 2021)"

CRAN Unofficial pytorch implementation of the paper "Context Reasoning Attention Network for Image Super-Resolution (ICCV 2021)" This code doesn't exa

jel - Japanese Entity Linker - is Bi-encoder based entity linker for japanese.

jel: Japanese Entity Linker jel - Japanese Entity Linker - is Bi-encoder based entity linker for japanese. Usage Currently, link and question methods

A Context-aware Visual Attention-based training pipeline for Object Detection from a Webpage screenshot!

CoVA: Context-aware Visual Attention for Webpage Information Extraction Abstract Webpage information extraction (WIE) is an important step to create k

Official Code Release for Container : Context Aggregation Network

Container: Context Aggregation Network Official Code Release for Container : Context Aggregation Network Comparion between CNN, MLP-Mixer and Transfor

PyTorch implementation of SQN based on CloserLook3D's encoder

SQN_pytorch This repo is an implementation of Semantic Query Network (SQN) using CloserLook3D's encoder in Pytorch. For TensorFlow implementation, che

LSTC: Boosting Atomic Action Detection with Long-Short-Term Context

LSTC: Boosting Atomic Action Detection with Long-Short-Term Context This Repository contains the code on AVA of our ACM MM 2021 paper: LSTC: Boosting

The source code for "Global Context Enhanced Graph Neural Network for Session-based Recommendation".

GCE-GNN Code This is the source code for SIGIR 2020 Paper: Global Context Enhanced Graph Neural Networks for Session-based Recommendation. Requirement

Global Context Enhanced Social Recommendation with Hierarchical Graph Neural Networks

SR-HGNN ICDM-2020 《Global Context Enhanced Social Recommendation with Hierarchical Graph Neural Networks》 Environments python 3.8 pytorch-1.6 DGL 0.5.

Cross-platform command-line AV1 / VP9 / HEVC / H264 encoding framework with per scene quality encoding

Av1an A cross-platform framework to streamline encoding Easy, Fast, Efficient and Feature Rich An easy way to start using AV1 / HEVC / H264 / VP9 / VP

This repository implements variational graph auto encoder by Thomas Kipf.

Variational Graph Auto-encoder in Pytorch This repository implements variational graph auto-encoder by Thomas Kipf. For details of the model, refer to

Mix3D: Out-of-Context Data Augmentation for 3D Scenes (3DV 2021)

Mix3D: Out-of-Context Data Augmentation for 3D Scenes (3DV 2021) Alexey Nekrasov*, Jonas Schult*, Or Litany, Bastian Leibe, Francis Engelmann Mix3D is

Code for CodeT5: a new code-aware pre-trained encoder-decoder model.

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation This is the official PyTorch implementation

FrostedGlass is a translucent frosted glass effect widget, that creates a context with the background behind it.

FrostedGlass FrostedGlass is a translucent frosted glass effect widget, that creates a context with the background behind it. The effect is drawn on t

ICLR 2021: Pre-Training for Context Representation in Conversational Semantic Parsing

SCoRe: Pre-Training for Context Representation in Conversational Semantic Parsing This repository contains code for the ICLR 2021 paper "SCoRE: Pre-Tr

Physics-informed convolutional-recurrent neural networks for solving spatiotemporal PDEs

PhyCRNet Physics-informed convolutional-recurrent neural networks for solving spatiotemporal PDEs Paper link: [ArXiv] By: Pu Ren, Chengping Rao, Yang

Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

RNN-for-Joint-NLU Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

![Exploring Relational Context for Multi-Task Dense Prediction [ICCV 2021]](https://github.com/brdav/atrc/raw/main/docs/teaser.png)

Exploring Relational Context for Multi-Task Dense Prediction [ICCV 2021]

Adaptive Task-Relational Context (ATRC) This repository provides source code for the ICCV 2021 paper Exploring Relational Context for Multi-Task Dense

A lightweight wrapper for PyTorch that provides a simple declarative API for context switching between devices, distributed modes, mixed-precision, and PyTorch extensions.

A lightweight wrapper for PyTorch that provides a simple declarative API for context switching between devices, distributed modes, mixed-precision, and PyTorch extensions.

This is the source code for: Context-aware Entity Typing in Knowledge Graphs.

This is the source code for: Context-aware Entity Typing in Knowledge Graphs.

Container : Context Aggregation Network

Container : Context Aggregation Network If you use this code for a paper please cite: @article{gao2021container, title={Container: Context Aggregati

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

Code for the paper "Adversarial Generator-Encoder Networks"

This repository contains code for the paper "Adversarial Generator-Encoder Networks" (AAAI'18) by Dmitry Ulyanov, Andrea Vedaldi, Victor Lempitsky. Pr

Official PyTorch code of DeepPanoContext: Panoramic 3D Scene Understanding with Holistic Scene Context Graph and Relation-based Optimization (ICCV 2021 Oral).

DeepPanoContext (DPC) [Project Page (with interactive results)][Paper] DeepPanoContext: Panoramic 3D Scene Understanding with Holistic Scene Context G

Line as a Visual Sentence: Context-aware Line Descriptor for Visual Localization

Line as a Visual Sentence with LineTR This repository contains the inference code, pretrained model, and demo scripts of the following paper. It suppo

DeepLabv3+:Encoder-Decoder with Atrous Separable Convolution语义分割模型在tensorflow2当中的实现

DeepLabv3+:Encoder-Decoder with Atrous Separable Convolution语义分割模型在tensorflow2当中的实现 目录 性能情况 Performance 所需环境 Environment 注意事项 Attention 文件下载 Download

![[ICCV 2021] Encoder-decoder with Multi-level Attention for 3D Human Shape and Pose Estimation](https://github.com/ziniuwan/maed/raw/master/doc/figs/net_arch.png)

[ICCV 2021] Encoder-decoder with Multi-level Attention for 3D Human Shape and Pose Estimation

MAED: Encoder-decoder with Multi-level Attention for 3D Human Shape and Pose Estimation Getting Started Our codes are implemented and tested with pyth

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019)

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019) Introduction Official implementation of Adaptive Pyramid Context Network

CAPRI: Context-Aware Interpretable Point-of-Interest Recommendation Framework

CAPRI: Context-Aware Interpretable Point-of-Interest Recommendation Framework This repository contains a framework for Recommender Systems (RecSys), a

PaddleViT: State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 2.0+

PaddlePaddle Vision Transformers State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 🤖 PaddlePaddle Visual Transformers (PaddleViT or

PyTorch Implement of Context Encoders: Feature Learning by Inpainting

Context Encoders: Feature Learning by Inpainting This is the Pytorch implement of CVPR 2016 paper on Context Encoders 1) Semantic Inpainting Demo Inst

GAN encoders in PyTorch that could match PGGAN, StyleGAN v1/v2, and BigGAN. Code also integrates the implementation of these GANs.

MTV-TSA: Adaptable GAN Encoders for Image Reconstruction via Multi-type Latent Vectors with Two-scale Attentions. This is the official code release fo

Context Axial Reverse Attention Network for Small Medical Objects Segmentation

CaraNet: Context Axial Reverse Attention Network for Small Medical Objects Segmentation This repository contains the implementation of a novel attenti

This repository is the offical Pytorch implementation of ContextPose: Context Modeling in 3D Human Pose Estimation: A Unified Perspective (CVPR 2021).

Context Modeling in 3D Human Pose Estimation: A Unified Perspective (CVPR 2021) Introduction This repository is the offical Pytorch implementation of

Video Contrastive Learning with Global Context

Video Contrastive Learning with Global Context (VCLR) This is the official PyTorch implementation of our VCLR paper. Install dependencies environments

[ACL-IJCNLP 2021] Improving Named Entity Recognition by External Context Retrieving and Cooperative Learning

CLNER The code is for our ACL-IJCNLP 2021 paper: Improving Named Entity Recognition by External Context Retrieving and Cooperative Learning CLNER is a

An application that maps an image of a LaTeX math equation to LaTeX code.

Convert images of LaTex math equations into LaTex code.

REST API for sentence tokenization and embedding using Multilingual Universal Sentence Encoder.

MUSE stands for Multilingual Universal Sentence Encoder - multilingual extension (supports 16 languages) of Universal Sentence Encoder (USE).

CVPR2021: Temporal Context Aggregation Network for Temporal Action Proposal Refinement

Temporal Context Aggregation Network - Pytorch This repo holds the pytorch-version codes of paper: "Temporal Context Aggregation Network for Temporal

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

Locally Enhanced Self-Attention: Rethinking Self-Attention as Local and Context Terms

LESA Introduction This repository contains the official implementation of Locally Enhanced Self-Attention: Rethinking Self-Attention as Local and Cont

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

A Joint Video and Image Encoder for End-to-End Retrieval

Frozen️ in Time ❄️ ️️️️ ⏳ A Joint Video and Image Encoder for End-to-End Retrieval project page | arXiv | webvid-data Repository containing the code,

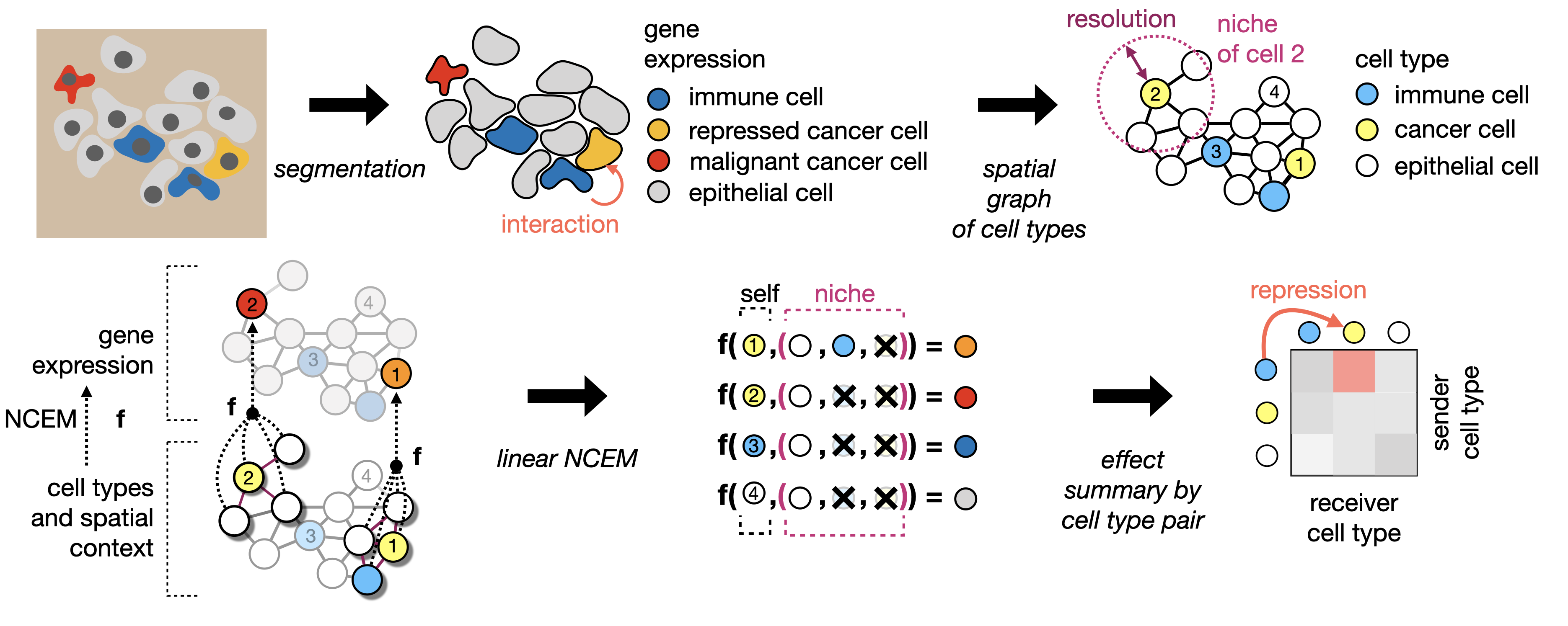

Learning cell communication from spatial graphs of cells

ncem Features Repository for the manuscript Fischer, D. S., Schaar, A. C. and Theis, F. Learning cell communication from spatial graphs of cells. 2021

Official PyTorch implementation of UACANet: Uncertainty Aware Context Attention for Polyp Segmentation

UACANet: Uncertainty Aware Context Attention for Polyp Segmentation Official pytorch implementation of UACANet: Uncertainty Aware Context Attention fo

codes for paper Combining Dynamic Local Context Focus and Dependency Cluster Attention for Aspect-level sentiment classification

DLCF-DCA codes for paper Combining Dynamic Local Context Focus and Dependency Cluster Attention for Aspect-level sentiment classification. submitted t

PyTorch implementation of "ContextNet: Improving Convolutional Neural Networks for Automatic Speech Recognition with Global Context" (INTERSPEECH 2020)

ContextNet ContextNet has CNN-RNN-transducer architecture and features a fully convolutional encoder that incorporates global context information into

REST API for sentence tokenization and embedding using Multilingual Universal Sentence Encoder.

What is MUSE? MUSE stands for Multilingual Universal Sentence Encoder - multilingual extension (16 languages) of Universal Sentence Encoder (USE). MUS

Using context-free grammar formalism to parse English sentences to determine their structure to help computer to better understand the meaning of the sentence.

Sentance Parser Executing the Program Make sure Python 3.6+ is installed. Install requirements $ pip install requirements.txt Run the program:

Source code for paper "Document-Level Relation Extraction with Adaptive Thresholding and Localized Context Pooling", AAAI 2021

ATLOP Code for AAAI 2021 paper Document-Level Relation Extraction with Adaptive Thresholding and Localized Context Pooling. If you make use of this co

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Uncertainty-aware Semantic Segmentation of LiDAR Point Clouds for Autonomous Driving

SalsaNext: Fast, Uncertainty-aware Semantic Segmentation of LiDAR Point Clouds for Autonomous Driving Abstract In this paper, we introduce SalsaNext f

IAUnet: Global Context-Aware Feature Learning for Person Re-Identification

IAUnet This repository contains the code for the paper: IAUnet: Global Context-Aware Feature Learning for Person Re-Identification Ruibing Hou, Bingpe

Non-Official Pytorch implementation of "Face Identity Disentanglement via Latent Space Mapping" https://arxiv.org/abs/2005.07728 Using StyleGAN2 instead of StyleGAN

Face Identity Disentanglement via Latent Space Mapping - Implement in pytorch with StyleGAN 2 Description Pytorch implementation of the paper Face Ide

Semi-supervised Semantic Segmentation with Directional Context-aware Consistency (CVPR 2021)

Semi-supervised Semantic Segmentation with Directional Context-aware Consistency (CAC) Xin Lai*, Zhuotao Tian*, Li Jiang, Shu Liu, Hengshuang Zhao, Li

A Joint Video and Image Encoder for End-to-End Retrieval

Frozen️ in Time ❄️ ️️️️ ⏳ A Joint Video and Image Encoder for End-to-End Retrieval (arXiv) Repository to contain the code, models, data for end-to-end

A Telegram bot to convert videos into x265/x264 format via ffmpeg.

Video Encoder Bot A Telegram bot to convert videos into x265/x264 format via ffmpeg. Configuration Add values in environment variables or add them in

Collaborative variational bandwidth auto-encoder (VBAE) for recommender systems.

Collaborative Variational Bandwidth Auto-encoder The codes are associated with the following paper: Collaborative Variational Bandwidth Auto-encoder f

Simple GUI menu for micropython using a rotary encoder and basic display.

Micropython encoder based menu This is a simple menu system written in micropython. It uses a switch, a rotary encoder and an OLED display.

:hot_pepper: R²SQL: "Dynamic Hybrid Relation Network for Cross-Domain Context-Dependent Semantic Parsing." (AAAI 2021)

R²SQL The PyTorch implementation of paper Dynamic Hybrid Relation Network for Cross-Domain Context-Dependent Semantic Parsing. (AAAI 2021) Requirement

simplejson is a simple, fast, extensible JSON encoder/decoder for Python

simplejson simplejson is a simple, fast, complete, correct and extensible JSON http://json.org encoder and decoder for Python 3.3+ with legacy suppo

Visual Tracking by TridenAlign and Context Embedding

Visual Tracking by TridentAlign and Context Embedding (TACT) Test code for "Visual Tracking by TridentAlign and Context Embedding" Janghoon Choi, Juns

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Context Decoupling Augmentation for Weakly Supervised Semantic Segmentation

Context Decoupling Augmentation for Weakly Supervised Semantic Segmentation The code of: Context Decoupling Augmentation for Weakly Supervised Semanti

TTS is a library for advanced Text-to-Speech generation.

TTS is a library for advanced Text-to-Speech generation. It's built on the latest research, was designed to achieve the best trade-off among ease-of-training, speed and quality. TTS comes with pretrained models, tools for measuring dataset quality and already used in 20+ languages for products and research projects.

Semi-supervised Semantic Segmentation with Directional Context-aware Consistency (CVPR 2021)

Semi-supervised Semantic Segmentation with Directional Context-aware Consistency (CAC) Xin Lai*, Zhuotao Tian*, Li Jiang, Shu Liu, Hengshuang Zhao, Li

Trading Gym is an open source project for the development of reinforcement learning algorithms in the context of trading.

Trading Gym Trading Gym is an open-source project for the development of reinforcement learning algorithms in the context of trading. It is currently

Code for the Active Speakers in Context Paper (CVPR2020)

Active Speakers in Context This repo contains the official code and models for the "Active Speakers in Context" CVPR 2020 paper. Before Training The c

Official Implementation for "ReStyle: A Residual-Based StyleGAN Encoder via Iterative Refinement" https://arxiv.org/abs/2104.02699

ReStyle: A Residual-Based StyleGAN Encoder via Iterative Refinement Recently, the power of unconditional image synthesis has significantly advanced th

Official implementation for Likelihood Regret: An Out-of-Distribution Detection Score For Variational Auto-encoder at NeurIPS 2020

Likelihood-Regret Official implementation of Likelihood Regret: An Out-of-Distribution Detection Score For Variational Auto-encoder at NeurIPS 2020. T

Top2Vec is an algorithm for topic modeling and semantic search.

Top2Vec is an algorithm for topic modeling and semantic search. It automatically detects topics present in text and generates jointly embedded topic, document and word vectors.

《LXMERT: Learning Cross-Modality Encoder Representations from Transformers》(EMNLP 2020)

The Most Important Thing. Our code is developed based on: LXMERT: Learning Cross-Modality Encoder Representations from Transformers

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Implementation of the paper "Language-agnostic representation learning of source code from structure and context".

Code Transformer This is an official PyTorch implementation of the CodeTransformer model proposed in: D. Zügner, T. Kirschstein, M. Catasta, J. Leskov