304 Repositories

Python datasets-preprocessing Libraries

Create Fast and easy image datasets using reddit

Reddit-Image-Scraper Reddit Reddit is an American Social news aggregation, web content rating, and discussion website. Reddit has been devided by topi

The tool to make NLP datasets ready to use

chazutsu photo from Kaikado, traditional Japanese chazutsu maker chazutsu is the dataset downloader for NLP. import chazutsu r = chazutsu.data

TorchGeo is a PyTorch domain library, similar to torchvision, that provides datasets, transforms, samplers, and pre-trained models specific to geospatial data.

TorchGeo is a PyTorch domain library, similar to torchvision, that provides datasets, transforms, samplers, and pre-trained models specific to geospatial data.

S3-plugin is a high performance PyTorch dataset library to efficiently access datasets stored in S3 buckets.

S3-plugin is a high performance PyTorch dataset library to efficiently access datasets stored in S3 buckets.

Roboflow makes managing, preprocessing, augmenting, and versioning datasets for computer vision seamless.

Roboflow makes managing, preprocessing, augmenting, and versioning datasets for computer vision seamless. This is the official Roboflow python package that interfaces with the Roboflow API.

This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced in the paper titled "BanglaBERT: Combating Embedding Barrier in Multilingual Models for Low-Resource Language Understanding".

BanglaBERT This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced i

This python module is an easy-to-use port of the text normalization used in the paper "Not low-resource anymore: Aligner ensembling, batch filtering, and new datasets for Bengali-English machine translation". It is intended to be used for normalizing / cleaning Bengali and English text.

normalizer This python module is an easy-to-use port of the text normalization used in the paper "Not low-resource anymore: Aligner ensembling, batch

An easy way to build PyTorch datasets. Modularly build datasets and automatically cache processed results

EasyDatas An easy way to build PyTorch datasets. Modularly build datasets and automatically cache processed results Installation pip install git+https

TorchIO is a Medical image preprocessing and augmentation toolkit for deep learning. Part of the PyTorch Ecosystem.

Medical image preprocessing and augmentation toolkit for deep learning. Part of the PyTorch Ecosystem.

OpenCVのGrabCut()を利用したセマンティックセグメンテーション向けアノテーションツール(Annotation tool using GrabCut() of OpenCV. It can be used to create datasets for semantic segmentation.)

[Japanese/English] GrabCut-Annotation-Tool GrabCut-Annotation-Tool.mp4 OpenCVのGrabCut()を利用したアノテーションツールです。 セマンティックセグメンテーション向けのデータセット作成にご使用いただけます。 ※Grab

A data annotation pipeline to generate high-quality, large-scale speech datasets with machine pre-labeling and fully manual auditing.

About This repository provides data and code for the paper: Scalable Data Annotation Pipeline for High-Quality Large Speech Datasets Development (subm

Collection of NLP model explanations and accompanying analysis tools

Thermostat is a large collection of NLP model explanations and accompanying analysis tools. Combines explainability methods from the captum library wi

Ray-based parallel data preprocessing for NLP and ML.

Wrangl Ray-based parallel data preprocessing for NLP and ML. pip install wrangl # for latest pip install git+https://github.com/vzhong/wrangl See exa

praudio provides audio preprocessing framework for Deep Learning audio applications

praudio provides objects and a script for performing complex preprocessing operations on entire audio datasets with one command.

The official repository for our paper "The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers". We significantly improve the systematic generalization of transformer models on a variety of datasets using simple tricks and careful considerations.

Codebase for training transformers on systematic generalization datasets. The official repository for our EMNLP 2021 paper The Devil is in the Detail:

GraphGT: Machine Learning Datasets for Graph Generation and Transformation

GraphGT: Machine Learning Datasets for Graph Generation and Transformation Dataset Website | Paper Installation Using pip To install the core environm

Base pretrained models and datasets in pytorch (MNIST, SVHN, CIFAR10, CIFAR100, STL10, AlexNet, VGG16, VGG19, ResNet, Inception, SqueezeNet)

This is a playground for pytorch beginners, which contains predefined models on popular dataset. Currently we support mnist, svhn cifar10, cifar100 st

Minimal But Practical Image Classifier Pipline Using Pytorch, Finetune on ResNet18, Got 99% Accuracy on Own Small Datasets.

PyTorch Image Classifier Updates As for many users request, I released a new version of standared pytorch immage classification example at here: http:

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics. Jury offers a smooth and easy-to-use interface. It uses datasets for underlying metric computation, and hence adding custom metric is easy as adopting datasets.Metric.

We evaluate our method on different datasets (including ShapeNet, CUB-200-2011, and Pascal3D+) and achieve state-of-the-art results, outperforming all the other supervised and unsupervised methods and 3D representations, all in terms of performance, accuracy, and training time.

An Effective Loss Function for Generating 3D Models from Single 2D Image without Rendering Papers with code | Paper Nikola Zubić Pietro Lio University

Codes for processing meeting summarization datasets AMI and ICSI.

Meeting Summarization Dataset Meeting plays an essential part in our daily life, which allows us to share information and collaborate with others. Wit

Code Repo for the ACL21 paper "Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning"

Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning This is the Github repository of our paper, "Common S

![[IJCAI'21] Deep Automatic Natural Image Matting](https://github.com/JizhiziLi/AIM/raw/master/demo/network.png)

[IJCAI'21] Deep Automatic Natural Image Matting

Deep Automatic Natural Image Matting [IJCAI-21] This is the official repository of the paper Deep Automatic Natural Image Matting. Introduction | Netw

A curated list of amazingly awesome Cybersecurity datasets

A curated list of amazingly awesome Cybersecurity datasets

PyTorch implementation of popular datasets and models in remote sensing

PyTorch Remote Sensing (torchrs) (WIP) PyTorch implementation of popular datasets and models in remote sensing tasks (Change Detection, Image Super Re

Deduplicating Training Data Makes Language Models Better

Deduplicating Training Data Makes Language Models Better This repository contains code to deduplicate language model datasets as descrbed in the paper

Official PyTorch implementation of "Rapid Neural Architecture Search by Learning to Generate Graphs from Datasets" (ICLR 2021)

Rapid Neural Architecture Search by Learning to Generate Graphs from Datasets This is the official PyTorch implementation for the paper Rapid Neural A

Project page for the paper Semi-Supervised Raw-to-Raw Mapping 2021.

Project page for the paper Semi-Supervised Raw-to-Raw Mapping 2021.

A Pytorch implementation of CVPR 2021 paper "RSG: A Simple but Effective Module for Learning Imbalanced Datasets"

RSG: A Simple but Effective Module for Learning Imbalanced Datasets (CVPR 2021) A Pytorch implementation of our CVPR 2021 paper "RSG: A Simple but Eff

A data preprocessing package for time series data. Design for machine learning and deep learning.

A data preprocessing package for time series data. Design for machine learning and deep learning.

Meerkat provides fast and flexible data structures for working with complex machine learning datasets.

Meerkat makes it easier for ML practitioners to interact with high-dimensional, multi-modal data. It provides simple abstractions for data inspection, model evaluation and model training supported by efficient and robust IO under the hood.

This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard evaluation metric to measure the accuracy and robustness of 3D face reconstruction methods from a single image under variations in viewing angle, lighting, and common occlusions.

NoW Evaluation This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard e

Preprocessed Datasets for our Multimodal NER paper

Unified Multimodal Transformer (UMT) for Multimodal Named Entity Recognition (MNER) Two MNER Datasets and Codes for our ACL'2020 paper: Improving Mult

Code and datasets for our paper "PTR: Prompt Tuning with Rules for Text Classification"

PTR Code and datasets for our paper "PTR: Prompt Tuning with Rules for Text Classification" If you use the code, please cite the following paper: @art

Official PyTorch code for CVPR 2020 paper "Deep Active Learning for Biased Datasets via Fisher Kernel Self-Supervision"

Deep Active Learning for Biased Datasets via Fisher Kernel Self-Supervision https://arxiv.org/abs/2003.00393 Abstract Active learning (AL) aims to min

The toolkit to generate auto labeled datasets

Ozeu Ozeu is the toolkit to autolabal dataset for instance segmentation. You can generate datasets labaled with segmentation mask and bounding box fro

Datasets of Automatic Keyphrase Extraction

This repository contains 20 annotated datasets of Automatic Keyphrase Extraction made available by the research community. Following are the datasets and the original papers that proposed them. If you know more datasets, and want to contribute, please, notify me.

This repository contains the implementations related to the experiments of a set of publicly available datasets that are used in the time series forecasting research space.

TSForecasting This repository contains the implementations related to the experiments of a set of publicly available datasets that are used in the tim

covid question answering datasets and fine tuned models

Covid-QA Fine tuned models for question answering on Covid-19 data. Hosted Inference This model has been contributed to huggingface.Click here to see

Combines Bayesian analyses from many datasets.

PosteriorStacker Combines Bayesian analyses from many datasets. Introduction Method Tutorial Output plot and files Introduction Fitting a model to a d

BOOKSUM: A Collection of Datasets for Long-form Narrative Summarization

BOOKSUM: A Collection of Datasets for Long-form Narrative Summarization Authors: Wojciech Kryściński, Nazneen Rajani, Divyansh Agarwal, Caiming Xiong,

A collection of Korean Text Datasets ready to use using Tensorflow-Datasets.

tfds-korean A collection of Korean Text Datasets ready to use using Tensorflow-Datasets. TensorFlow-Datasets를 이용한 한국어/한글 데이터셋 모음입니다. Dataset Catalog |

Cloud-native, data onboarding architecture for the Google Cloud Public Datasets program

Public Datasets Pipelines Cloud-native, data pipeline architecture for onboarding datasets to the Google Cloud Public Datasets Program. Overview Requi

Ever felt tired after preprocessing the dataset, and not wanting to write any code further to train your model? Ever encountered a situation where you wanted to record the hyperparameters of the trained model and able to retrieve it afterward? Models Playground is here to help you do that. Models playground allows you to train your models right from the browser.

Models Playground 🗂️ Upload a Preprocessed Dataset 🌠 Choose whether to perform Classification or Regression 🦹 Enter the Dependent Variable ?

Awesome Video Datasets

Awesome Video Datasets

Official implementation of "Towards Good Practices for Efficiently Annotating Large-Scale Image Classification Datasets" (CVPR2021)

Towards Good Practices for Efficiently Annotating Large-Scale Image Classification Datasets This is the official implementation of "Towards Good Pract

Task-based datasets, preprocessing, and evaluation for sequence models.

SeqIO: Task-based datasets, preprocessing, and evaluation for sequence models. SeqIO is a library for processing sequential data to be fed into downst

Code and datasets for the paper "Combining Events and Frames using Recurrent Asynchronous Multimodal Networks for Monocular Depth Prediction" (RA-L, 2021)

Combining Events and Frames using Recurrent Asynchronous Multimodal Networks for Monocular Depth Prediction This is the code for the paper Combining E

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

NLPretext packages in a unique library all the text preprocessing functions you need to ease your NLP project.

NLPretext packages in a unique library all the text preprocessing functions you need to ease your NLP project.

Implementation of "Debiasing Item-to-Item Recommendations With Small Annotated Datasets" (RecSys '20)

Debiasing Item-to-Item Recommendations With Small Annotated Datasets This is the code for our RecSys '20 paper. Other materials can be found here: Ful

![[CVPR2021] DoDNet: Learning to segment multi-organ and tumors from multiple partially labeled datasets](https://github.com/jianpengz/DoDNet/raw/main/a_DynConv/dodnet.png)

[CVPR2021] DoDNet: Learning to segment multi-organ and tumors from multiple partially labeled datasets

DoDNet This repo holds the pytorch implementation of DoDNet: DoDNet: Learning to segment multi-organ and tumors from multiple partially labeled datase

This repository contains the code for "Generating Datasets with Pretrained Language Models".

Datasets from Instructions (DINO 🦕 ) This repository contains the code for Generating Datasets with Pretrained Language Models. The paper introduces

Draw datasets from within Jupyter.

drawdata This small python app allows you to draw a dataset in a jupyter notebook. This should be very useful when teaching machine learning algorithm

A Pythonic introduction to methods for scaling your data science and machine learning work to larger datasets and larger models, using the tools and APIs you know and love from the PyData stack (such as numpy, pandas, and scikit-learn).

This tutorial's purpose is to introduce Pythonistas to methods for scaling their data science and machine learning work to larger datasets and larger models, using the tools and APIs they know and love from the PyData stack (such as numpy, pandas, and scikit-learn).

User friendly Rasterio plugin to read raster datasets.

rio-tiler User friendly Rasterio plugin to read raster datasets. Documentation: https://cogeotiff.github.io/rio-tiler/ Source Code: https://github.com

Client library for interfacing with USGS datasets

USGS API USGS is a python module for interfacing with the US Geological Survey's API. It provides submodules to interact with various endpoints, and c

Summary statistics of geospatial raster datasets based on vector geometries.

rasterstats rasterstats is a Python module for summarizing geospatial raster datasets based on vector geometries. It includes functions for zonal stat

A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models

wav2vec-toolkit A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models This repository accompanies the

UA-GEC: Grammatical Error Correction and Fluency Corpus for the Ukrainian Language

UA-GEC: Grammatical Error Correction and Fluency Corpus for the Ukrainian Language This repository contains UA-GEC data and an accompanying Python lib

A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''.

P-tuning A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''. How to use our code We have released the code

A Python Package to Tackle the Curse of Imbalanced Datasets in Machine Learning

imbalanced-learn imbalanced-learn is a python package offering a number of re-sampling techniques commonly used in datasets showing strong between-cla

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

A Python library for detecting patterns and anomalies in massive datasets using the Matrix Profile

matrixprofile-ts matrixprofile-ts is a Python 2 and 3 library for evaluating time series data using the Matrix Profile algorithms developed by the Keo

Technical Analysis Library using Pandas and Numpy

Technical Analysis Library in Python It is a Technical Analysis library useful to do feature engineering from financial time series datasets (Open, Cl

Rasterio reads and writes geospatial raster datasets

Rasterio Rasterio reads and writes geospatial raster data. Geographic information systems use GeoTIFF and other formats to organize and store gridded,

Source code, datasets and trained models for the paper Learning Advanced Mathematical Computations from Examples (ICLR 2021), by François Charton, Amaury Hayat (ENPC-Rutgers) and Guillaume Lample

Maths from examples - Learning advanced mathematical computations from examples This is the source code and data sets relevant to the paper Learning a

A collection of resources (including the papers and datasets) of OCR (Optical Character Recognition).

OCR Resources This repository contains a collection of resources (including the papers and datasets) of OCR (Optical Character Recognition). Contents

Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics

Dataset Cartography Code for the paper Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics at EMNLP 2020. This repository cont

Baselines for TrajNet++

TrajNet++ : The Trajectory Forecasting Framework PyTorch implementation of Human Trajectory Forecasting in Crowds: A Deep Learning Perspective TrajNet

An open source multi-tool for exploring and publishing data

Datasette An open source multi-tool for exploring and publishing data Datasette is a tool for exploring and publishing data. It helps people take data

BatchFlow helps you conveniently work with random or sequential batches of your data and define data processing and machine learning workflows even for datasets that do not fit into memory.

BatchFlow BatchFlow helps you conveniently work with random or sequential batches of your data and define data processing and machine learning workflo

Datasets, Transforms and Models specific to Computer Vision

torchvision The torchvision package consists of popular datasets, model architectures, and common image transformations for computer vision. Installat

A Python Package to Tackle the Curse of Imbalanced Datasets in Machine Learning

imbalanced-learn imbalanced-learn is a python package offering a number of re-sampling techniques commonly used in datasets showing strong between-cla

MLBox is a powerful Automated Machine Learning python library.

MLBox is a powerful Automated Machine Learning python library. It provides the following features: Fast reading and distributed data preprocessing/cle

Allows including an action inside another action (by preprocessing the Yaml file). This is how composite actions should have worked.

actions-includes Allows including an action inside another action (by preprocessing the Yaml file). Instead of using uses or run in your action step,

Object detection on multiple datasets with an automatically learned unified label space.

Simple multi-dataset detection An object detector trained on multiple large-scale datasets with a unified label space; Winning solution of E

Publish Xarray Datasets via a REST API.

Xpublish Publish Xarray Datasets via a REST API. Serverside: Publish a Xarray Dataset through a rest API ds.rest.serve(host="0.0.0.0", port=9000) Clie

xarray: N-D labeled arrays and datasets

xarray is an open source project and Python package that makes working with labelled multi-dimensional arrays simple, efficient, and fun!

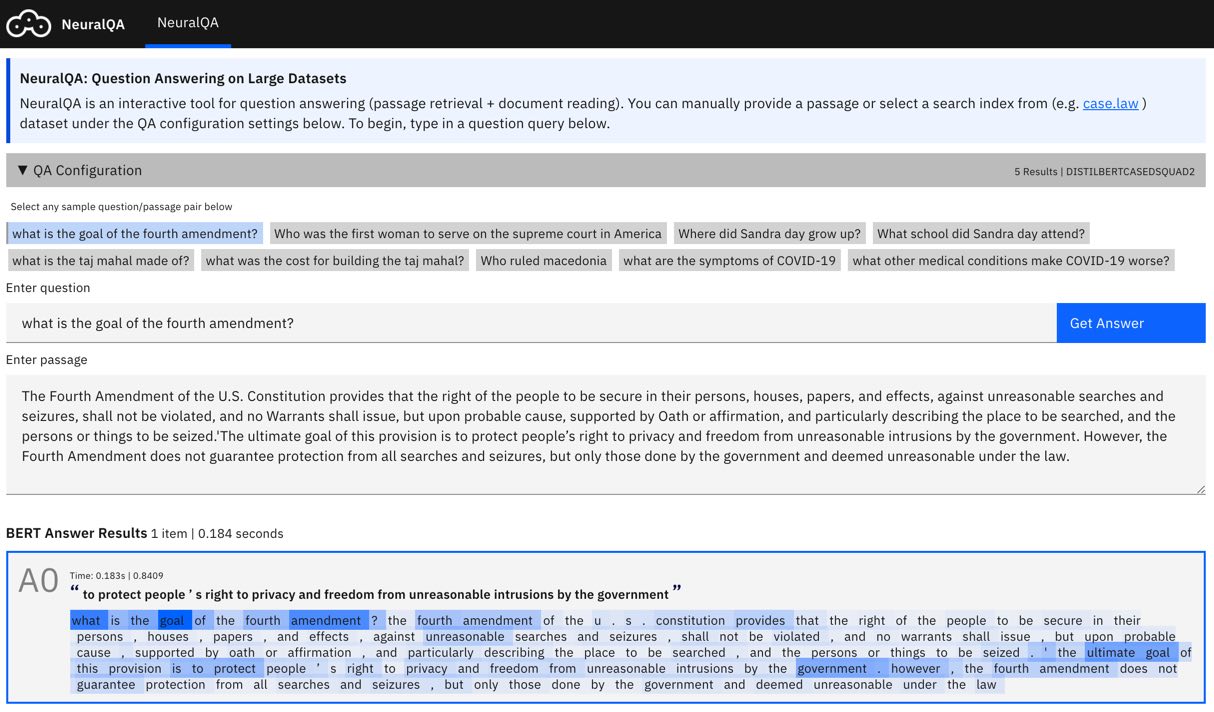

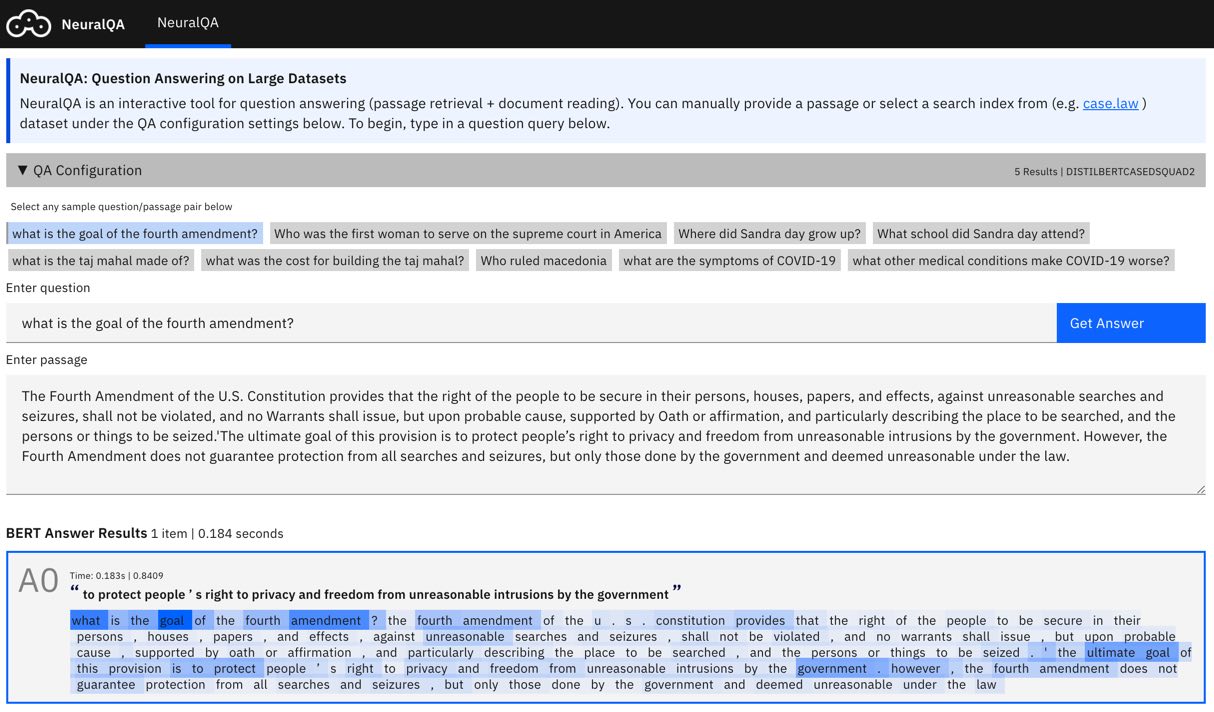

NeuralQA: A Usable Library for Question Answering on Large Datasets with BERT

NeuralQA: A Usable Library for (Extractive) Question Answering on Large Datasets with BERT Still in alpha, lots of changes anticipated. View demo on n

Text preprocessing, representation and visualization from zero to hero.

Text preprocessing, representation and visualization from zero to hero. From zero to hero • Installation • Getting Started • Examples • API • FAQ • Co

A framework for training and evaluating AI models on a variety of openly available dialogue datasets.

ParlAI (pronounced “par-lay”) is a python framework for sharing, training and testing dialogue models, from open-domain chitchat, to task-oriented dia

Dimensionality reduction in very large datasets using Siamese Networks

ivis Implementation of the ivis algorithm as described in the paper Structure-preserving visualisation of high dimensional single-cell datasets. Ivis

The open-source tool for building high-quality datasets and computer vision models

The open-source tool for building high-quality datasets and computer vision models. Website • Docs • Try it Now • Tutorials • Examples • Blog • Commun

Visualize and compare datasets, target values and associations, with one line of code.

In-depth EDA (target analysis, comparison, feature analysis, correlation) in two lines of code! Sweetviz is an open-source Python library that generat

Visualizations for machine learning datasets

Introduction The facets project contains two visualizations for understanding and analyzing machine learning datasets: Facets Overview and Facets Dive

Python library for handling audio datasets.

AUDIOMATE Audiomate is a library for easy access to audio datasets. It provides the datastructures for accessing/loading different datasets in a gener

UA-GEC: Grammatical Error Correction and Fluency Corpus for the Ukrainian Language

UA-GEC: Grammatical Error Correction and Fluency Corpus for the Ukrainian Language This repository contains UA-GEC data and an accompanying Python lib

NeuralQA: A Usable Library for Question Answering on Large Datasets with BERT

NeuralQA: A Usable Library for (Extractive) Question Answering on Large Datasets with BERT Still in alpha, lots of changes anticipated. View demo on n

Text preprocessing, representation and visualization from zero to hero.

Text preprocessing, representation and visualization from zero to hero. From zero to hero • Installation • Getting Started • Examples • API • FAQ • Co

A framework for training and evaluating AI models on a variety of openly available dialogue datasets.

ParlAI (pronounced “par-lay”) is a python framework for sharing, training and testing dialogue models, from open-domain chitchat, to task-oriented dia

Dimensionality reduction in very large datasets using Siamese Networks

ivis Implementation of the ivis algorithm as described in the paper Structure-preserving visualisation of high dimensional single-cell datasets. Ivis

The open-source tool for building high-quality datasets and computer vision models

The open-source tool for building high-quality datasets and computer vision models. Website • Docs • Try it Now • Tutorials • Examples • Blog • Commun

Visualize and compare datasets, target values and associations, with one line of code.

In-depth EDA (target analysis, comparison, feature analysis, correlation) in two lines of code! Sweetviz is an open-source Python library that generat

Visualizations for machine learning datasets

Introduction The facets project contains two visualizations for understanding and analyzing machine learning datasets: Facets Overview and Facets Dive

Library to scrape and clean web pages to create massive datasets.

lazynlp A straightforward library that allows you to crawl, clean up, and deduplicate webpages to create massive monolingual datasets. Using this libr

Web scraping library and command-line tool for text discovery and extraction (main content, metadata, comments)

trafilatura: Web scraping tool for text discovery and retrieval Description Trafilatura is a Python package and command-line tool which seamlessly dow

Code, Models and Datasets for OpenViDial Dataset

OpenViDial This repo contains downloading instructions for the OpenViDial dataset in 《OpenViDial: A Large-Scale, Open-Domain Dialogue Dataset with Vis

Backtest 1000s of minute-by-minute trading algorithms for training AI with automated pricing data from: IEX, Tradier and FinViz. Datasets and trading performance automatically published to S3 for building AI training datasets for teaching DNNs how to trade. Runs on Kubernetes and docker-compose. 150 million trading history rows generated from +5000 algorithms. Heads up: Yahoo's Finance API was disabled on 2019-01-03 https://developer.yahoo.com/yql/

Stock Analysis Engine Build and tune investment algorithms for use with artificial intelligence (deep neural networks) with a distributed stack for ru

Python Module for Tabular Datasets in XLS, CSV, JSON, YAML, &c.

Tablib: format-agnostic tabular dataset library _____ ______ ___________ ______ __ /_______ ____ /_ ___ /___(_)___ /_ _ __/_ __ `/__ _