427 Repositories

Python gradient-optimization Libraries

Source code for CVPR 2021 paper "Riggable 3D Face Reconstruction via In-Network Optimization"

Riggable 3D Face Reconstruction via In-Network Optimization Source code for CVPR 2021 paper "Riggable 3D Face Reconstruction via In-Network Optimizati

This is the pytorch implementation of the paper - Axiomatic Attribution for Deep Networks.

Integrated Gradients This is the pytorch implementation of "Axiomatic Attribution for Deep Networks". The original tensorflow version could be found h

This is an implementation of the proximal policy optimization algorithm for the C++ API of Pytorch

This is an implementation of the proximal policy optimization algorithm for the C++ API of Pytorch. It uses a simple TestEnvironment to test the algorithm

Pyomo is an object-oriented algebraic modeling language in Python for structured optimization problems.

Pyomo is a Python-based open-source software package that supports a diverse set of optimization capabilities for formulating and analyzing optimization models. Pyomo can be used to define symbolic problems, create concrete problem instances, and solve these instances with standard solvers.

Bonsai: Gradient Boosted Trees + Bayesian Optimization

Bonsai is a wrapper for the XGBoost and Catboost model training pipelines that leverages Bayesian optimization for computationally efficient hyperparameter tuning.

Official implementation of paper Gradient Matching for Domain Generalization

Gradient Matching for Domain Generalisation This is the official PyTorch implementation of Gradient Matching for Domain Generalisation. In our paper,

Computationally Efficient Optimization of Plackett-Luce Ranking Models for Relevance and Fairness

Computationally Efficient Optimization of Plackett-Luce Ranking Models for Relevance and Fairness This repository contains the code used for the exper

Hardware accelerated, batchable and differentiable optimizers in JAX.

JAXopt Installation | Examples | References Hardware accelerated (GPU/TPU), batchable and differentiable optimizers in JAX. Installation JAXopt can be

The MLOps platform for innovators 🚀

DS2.ai is an integrated AI operation solution that supports all stages from custom AI development to deployment. It is an AI-specialized platform service that collects data, builds a training dataset through data labeling, and enables automatic development of artificial intelligence and easy deployment and operation.

noisy labels; missing labels; semi-supervised learning; entropy; uncertainty; robustness and generalisation.

ProSelfLC: CVPR 2021 ProSelfLC: Progressive Self Label Correction for Training Robust Deep Neural Networks For any specific discussion or potential fu

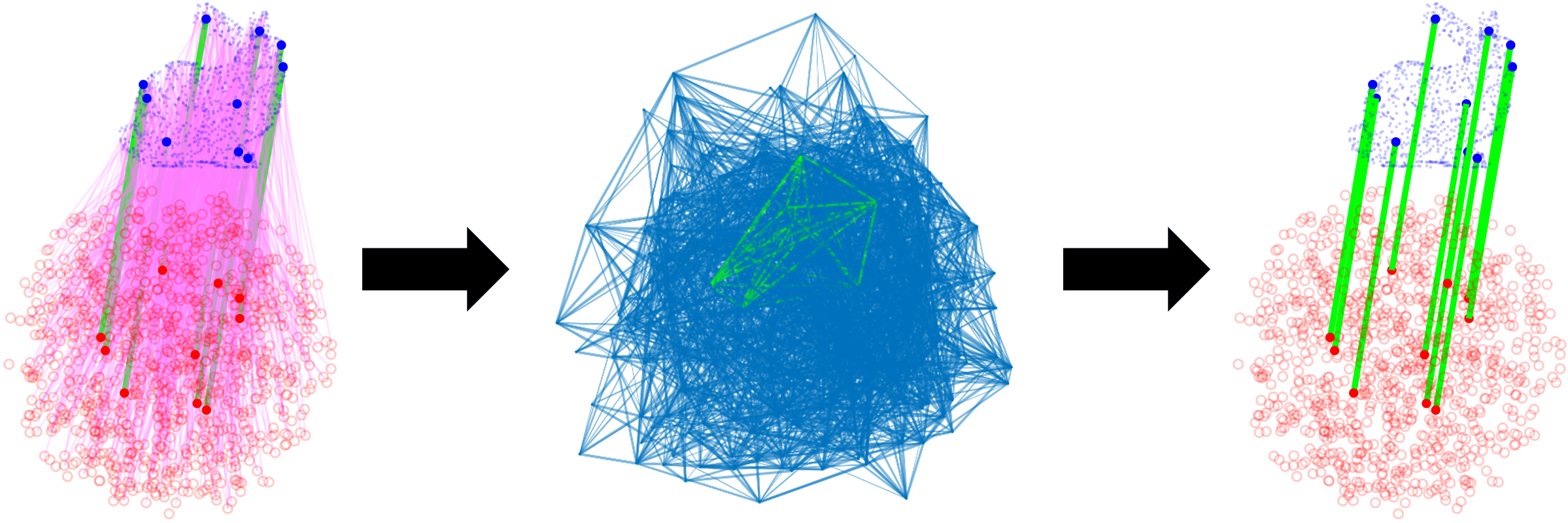

graph-theoretic framework for robust pairwise data association

CLIPPER: A Graph-Theoretic Framework for Robust Data Association Data association is a fundamental problem in robotics and autonomy. CLIPPER provides

Implemented fully documented Particle Swarm Optimization algorithm (basic model with few advanced features) using Python programming language

Implemented fully documented Particle Swarm Optimization (PSO) algorithm in Python which includes a basic model along with few advanced features such as updating inertia weight, cognitive, social learning coefficients and maximum velocity of the particle.

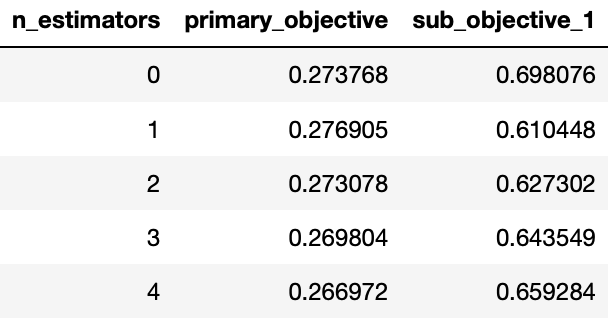

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees.

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees. MooGBT optimizes for multiple objectives by defining constraints on sub-objective(s) along with a primary objective. The constraints are defined as upper bounds on sub-objective loss function. MooGBT uses a Augmented Lagrangian(AL) based constrained optimization framework with Gradient Boosted Trees, to optimize for multiple objectives.

Automated modeling and machine learning framework FEDOT

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML). It can build custom modeling pipelines for different real-world processes in an automated way using an evolutionary approach. FEDOT supports classification (binary and multiclass), regression, clustering, and time series prediction tasks.

CoSA: Scheduling by Constrained Optimization for Spatial Accelerators

CoSA is a scheduler for spatial DNN accelerators that generate high-performance schedules in one shot using mixed integer programming

Implementation of Learning Gradient Fields for Molecular Conformation Generation (ICML 2021).

[PDF] | [Slides] The official implementation of Learning Gradient Fields for Molecular Conformation Generation (ICML 2021 Long talk) Installation Inst

How Do Adam and Training Strategies Help BNNs Optimization? In ICML 2021.

AdamBNN This is the pytorch implementation of our paper "How Do Adam and Training Strategies Help BNNs Optimization?", published in ICML 2021. In this

Custom TensorFlow2 implementations of forward and backward computation of soft-DTW algorithm in batch mode.

Batch Soft-DTW(Dynamic Time Warping) in TensorFlow2 including forward and backward computation Custom TensorFlow2 implementations of forward and backw

ML Optimizers from scratch using JAX

Toy implementations of some popular ML optimizers using Python/JAX

On the model-based stochastic value gradient for continuous reinforcement learning

On the model-based stochastic value gradient for continuous reinforcement learning This repository is by Brandon Amos, Samuel Stanton, Denis Yarats, a

Probabilistic Gradient Boosting Machines

PGBM Probabilistic Gradient Boosting Machines (PGBM) is a probabilistic gradient boosting framework in Python based on PyTorch/Numba, developed by Air

The official implementation of You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient.

You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient (paper) @misc{zhang2021compress,

Fusion-DHL: WiFi, IMU, and Floorplan Fusion for Dense History of Locations in Indoor Environments

Fusion-DHL: WiFi, IMU, and Floorplan Fusion for Dense History of Locations in Indoor Environments Paper: arXiv (ICRA 2021) Video : https://youtu.be/CC

Code release to accompany paper "Geometry-Aware Gradient Algorithms for Neural Architecture Search."

Geometry-Aware Gradient Algorithms for Neural Architecture Search This repository contains the code required to run the experiments for the DARTS sear

This is code to fit per-pixel environment map with spherical Gaussian lobes, using LBFGS optimization

Spherical Gaussian Optimization This is code to fit per-pixel environment map with spherical Gaussian lobes, using LBFGS optimization. This code has b

VOGUE: Try-On by StyleGAN Interpolation Optimization

VOGUE is a StyleGAN interpolation optimization algorithm for photo-realistic try-on. Top: shirt try-on automatically synthesized by our method in two different examples.

jaxfg - Factor graph-based nonlinear optimization library for JAX.

Factor graphs + nonlinear optimization in JAX

Pytorch implementation of AngularGrad: A New Optimization Technique for Angular Convergence of Convolutional Neural Networks

AngularGrad Optimizer This repository contains the oficial implementation for AngularGrad: A New Optimization Technique for Angular Convergence of Con

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

An end-to-end machine learning library to directly optimize AUC loss

LibAUC An end-to-end machine learning library for AUC optimization. Why LibAUC? Deep AUC Maximization (DAM) is a paradigm for learning a deep neural n

![[ECCV 2020] Gradient-Induced Co-Saliency Detection](https://github.com/zzhanghub/gicd/raw/master/img/GICD_LOGO.png)

[ECCV 2020] Gradient-Induced Co-Saliency Detection

Gradient-Induced Co-Saliency Detection Zhao Zhang*, Wenda Jin*, Jun Xu, Ming-Ming Cheng ⭐ Project Home » The official repo of the ECCV 2020 paper Grad

Combining Reinforcement Learning and Constraint Programming for Combinatorial Optimization

Hybrid solving process for combinatorial optimization problems Combinatorial optimization has found applications in numerous fields, from aerospace to

Paddle-RLBooks is a reinforcement learning code study guide based on pure PaddlePaddle.

Paddle-RLBooks Welcome to Paddle-RLBooks which is a reinforcement learning code study guide based on pure PaddlePaddle. 欢迎来到Paddle-RLBooks,该仓库主要是针对强化学

Bayesian optimization in JAX

Bayesian optimization in JAX

A collection of various RL algorithms like policy gradients, DQN and PPO. The goal of this repo will be to make it a go-to resource for learning about RL. How to visualize, debug and solve RL problems. I've additionally included playground.py for learning more about OpenAI gym, etc.

Reinforcement Learning (PyTorch) 🤖 + 🍰 = ❤️ This repo will contain PyTorch implementation of various fundamental RL algorithms. It's aimed at making

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

Ray provides a simple, universal API for building distributed applications.

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

A Closer Look at Invalid Action Masking in Policy Gradient Algorithms

A Closer Look at Invalid Action Masking in Policy Gradient Algorithms This repo contains the source code to reproduce the results in the paper A Close

DC3: A Learning Method for Optimization with Hard Constraints

DC3: A learning method for optimization with hard constraints This repository is by Priya L. Donti, David Rolnick, and J. Zico Kolter and contains the

A tf.keras implementation of Facebook AI's MadGrad optimization algorithm

MADGRAD Optimization Algorithm For Tensorflow This package implements the MadGrad Algorithm proposed in Adaptivity without Compromise: A Momentumized,

Official code for "End-to-End Optimization of Scene Layout" -- including VAE, Diff Render, SPADE for colorization (CVPR 2020 Oral)

End-to-End Optimization of Scene Layout Code release for: End-to-End Optimization of Scene Layout CVPR 2020 (Oral) Project site, Bibtex For help conta

OCTIS: Comparing Topic Models is Simple! A python package to optimize and evaluate topic models (accepted at EACL2021 demo track)

OCTIS : Optimizing and Comparing Topic Models is Simple! OCTIS (Optimizing and Comparing Topic models Is Simple) aims at training, analyzing and compa

![[CVPRW 21]](https://github.com/VITA-Group/BNN_NoBN/raw/main/Figs/res.png)

[CVPRW 21] "BNN - BN = ? Training Binary Neural Networks without Batch Normalization", Tianlong Chen, Zhenyu Zhang, Xu Ouyang, Zechun Liu, Zhiqiang Shen, Zhangyang Wang

BNN - BN = ? Training Binary Neural Networks without Batch Normalization Codes for this paper BNN - BN = ? Training Binary Neural Networks without Bat

PyTorch code for SENTRY: Selective Entropy Optimization via Committee Consistency for Unsupervised DA

PyTorch Code for SENTRY: Selective Entropy Optimization via Committee Consistency for Unsupervised Domain Adaptation Viraj Prabhu, Shivam Khare, Deeks

CS 7301: Spring 2021 Course on Advanced Topics in Optimization in Machine Learning

CS 7301: Spring 2021 Course on Advanced Topics in Optimization in Machine Learning

Exact Pareto Optimal solutions for preference based Multi-Objective Optimization

Exact Pareto Optimal solutions for preference based Multi-Objective Optimization

DABO: Data Augmentation with Bilevel Optimization

DABO: Data Augmentation with Bilevel Optimization [Paper] The goal is to automatically learn an efficient data augmentation regime for image classific

AutoOED: Automated Optimal Experiment Design Platform

AutoOED is an optimal experiment design platform powered with automated machine learning to accelerate the discovery of optimal solutions. Our platform solves multi-objective optimization problems and automatically guides the design of experiment to be evaluated.

POT : Python Optimal Transport

This open source Python library provide several solvers for optimization problems related to Optimal Transport for signal, image processing and machine learning.

PGPortfolio: Policy Gradient Portfolio, the source code of "A Deep Reinforcement Learning Framework for the Financial Portfolio Management Problem"(https://arxiv.org/pdf/1706.10059.pdf).

This is the original implementation of our paper, A Deep Reinforcement Learning Framework for the Financial Portfolio Management Problem (arXiv:1706.1

A resource for learning about deep learning techniques from regression to LSTM and Reinforcement Learning using financial data and the fitness functions of algorithmic trading

A tour through tensorflow with financial data I present several models ranging in complexity from simple regression to LSTM and policy networks. The s

This project provides a stock market environment using OpenGym with Deep Q-learning and Policy Gradient.

Stock Trading Market OpenAI Gym Environment with Deep Reinforcement Learning using Keras Overview This project provides a general environment for stoc

Scalable, event-driven, deep-learning-friendly backtesting library

...Minimizing the mean square error on future experience. - Richard S. Sutton BTGym Scalable event-driven RL-friendly backtesting library. Build on

Transformer related optimization, including BERT, GPT

This repository provides a script and recipe to run the highly optimized transformer-based encoder and decoder component, and it is tested and maintained by NVIDIA.

Automated Machine Learning Pipeline with Feature Engineering and Hyper-Parameters Tuning

The mljar-supervised is an Automated Machine Learning Python package that works with tabular data. I

Genetic Algorithm, Particle Swarm Optimization, Simulated Annealing, Ant Colony Optimization Algorithm,Immune Algorithm, Artificial Fish Swarm Algorithm, Differential Evolution and TSP(Traveling salesman)

scikit-opt Swarm Intelligence in Python (Genetic Algorithm, Particle Swarm Optimization, Simulated Annealing, Ant Colony Algorithm, Immune Algorithm,A

torch-optimizer -- collection of optimizers for Pytorch

torch-optimizer torch-optimizer -- collection of optimizers for PyTorch compatible with optim module. Simple example import torch_optimizer as optim

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective. 10x Larger Models 10x Faster Trainin

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Ray provides a simple, universal API for building distributed applications. Ray is packaged with the following libraries for accelerating machine lear

High-performance TensorFlow library for quantitative finance.

TF Quant Finance: TensorFlow based Quant Finance Library Table of contents Introduction Installation TensorFlow training Development roadmap Examples

Open-L2O: A Comprehensive and Reproducible Benchmark for Learning to Optimize Algorithms

Open-L2O This repository establishes the first comprehensive benchmark efforts of existing learning to optimize (L2O) approaches on a number of proble

Code for: Gradient-based Hierarchical Clustering using Continuous Representations of Trees in Hyperbolic Space. Nicholas Monath, Manzil Zaheer, Daniel Silva, Andrew McCallum, Amr Ahmed. KDD 2019.

gHHC Code for: Gradient-based Hierarchical Clustering using Continuous Representations of Trees in Hyperbolic Space. Nicholas Monath, Manzil Zaheer, D

Optimising chemical reactions using machine learning

Summit Summit is a set of tools for optimising chemical processes. We’ve started by targeting reactions. What is Summit? Currently, reaction optimisat

![[ICLR 2021] Is Attention Better Than Matrix Decomposition?](https://github.com/Gsunshine/Enjoy-Hamburger/raw/main/assets/Hamburger.jpg)

[ICLR 2021] Is Attention Better Than Matrix Decomposition?

Enjoy-Hamburger 🍔 Official implementation of Hamburger, Is Attention Better Than Matrix Decomposition? (ICLR 2021) Under construction. Introduction T

An abstraction layer for mathematical optimization solvers.

MathOptInterface Documentation Build Status Social An abstraction layer for mathematical optimization solvers. Replaces MathProgBase. Citing MathOptIn

Official implementation of "GS-WGAN: A Gradient-Sanitized Approach for Learning Differentially Private Generators" (NeurIPS 2020)

GS-WGAN This repository contains the implementation for GS-WGAN: A Gradient-Sanitized Approach for Learning Differentially Private Generators (NeurIPS

This is the source code of RPG (Reward-Randomized Policy Gradient)

RPG (Reward-Randomized Policy Gradient) Zhenggang Tang*, Chao Yu*, Boyuan Chen, Huazhe Xu, Xiaolong Wang, Fei Fang, Simon Shaolei Du, Yu Wang, Yi Wu (

library for nonlinear optimization, wrapping many algorithms for global and local, constrained or unconstrained, optimization

NLopt is a library for nonlinear local and global optimization, for functions with and without gradient information. It is designed as a simple, unifi

Hyperparameter Optimization for TensorFlow, Keras and PyTorch

Hyperparameter Optimization for Keras Talos • Key Features • Examples • Install • Support • Docs • Issues • License • Download Talos radically changes

POT : Python Optimal Transport

POT: Python Optimal Transport This open source Python library provide several solvers for optimization problems related to Optimal Transport for signa

Bayesian Optimization using GPflow

Note: This package is for use with GPFlow 1. For Bayesian optimization using GPFlow 2 please see Trieste, a joint effort with Secondmind. GPflowOpt GP

A Free and Open Source Python Library for Multiobjective Optimization

Platypus What is Platypus? Platypus is a framework for evolutionary computing in Python with a focus on multiobjective evolutionary algorithms (MOEAs)

A research toolkit for particle swarm optimization in Python

PySwarms is an extensible research toolkit for particle swarm optimization (PSO) in Python. It is intended for swarm intelligence researchers, practit

🎯 A comprehensive gradient-free optimization framework written in Python

Solid is a Python framework for gradient-free optimization. It contains basic versions of many of the most common optimization algorithms that do not

Sequential model-based optimization with a `scipy.optimize` interface

Scikit-Optimize Scikit-Optimize, or skopt, is a simple and efficient library to minimize (very) expensive and noisy black-box functions. It implements

Safe Bayesian Optimization

SafeOpt - Safe Bayesian Optimization This code implements an adapted version of the safe, Bayesian optimization algorithm, SafeOpt [1], [2]. It also p

A Python implementation of global optimization with gaussian processes.

Bayesian Optimization Pure Python implementation of bayesian global optimization with gaussian processes. PyPI (pip): $ pip install bayesian-optimizat

Hyper-parameter optimization for sklearn

hyperopt-sklearn Hyperopt-sklearn is Hyperopt-based model selection among machine learning algorithms in scikit-learn. See how to use hyperopt-sklearn

Distributed Asynchronous Hyperparameter Optimization in Python

Hyperopt: Distributed Hyperparameter Optimization Hyperopt is a Python library for serial and parallel optimization over awkward search spaces, which

optimization routines for hyperparameter tuning

Optunity is a library containing various optimizers for hyperparameter tuning. Hyperparameter tuning is a recurrent problem in many machine learning t

Sequential Model-based Algorithm Configuration

SMAC v3 Project Copyright (C) 2016-2018 AutoML Group Attention: This package is a reimplementation of the original SMAC tool (see reference below). Ho

Bayesian optimization in PyTorch

BoTorch is a library for Bayesian Optimization built on PyTorch. BoTorch is currently in beta and under active development! Why BoTorch ? BoTorch Prov

A strongly-typed genetic programming framework for Python

monkeys "If an army of monkeys were strumming on typewriters they might write all the books in the British Museum." monkeys is a framework designed to

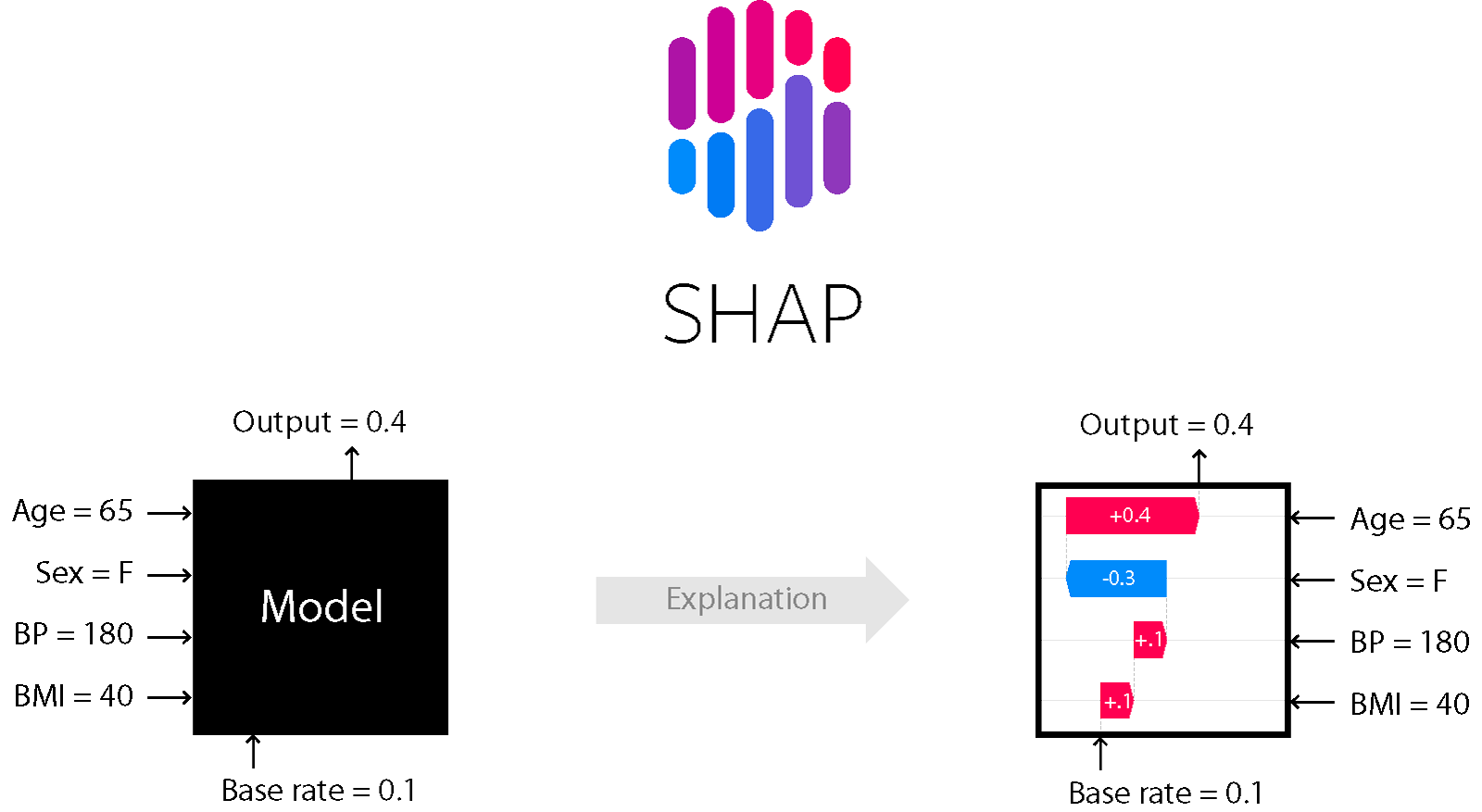

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

Keras + Hyperopt: A very simple wrapper for convenient hyperparameter optimization

This project is now archived. It's been fun working on it, but it's time for me to move on. Thank you for all the support and feedback over the last c

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

MLBox is a powerful Automated Machine Learning python library.

MLBox is a powerful Automated Machine Learning python library. It provides the following features: Fast reading and distributed data preprocessing/cle

Automated Machine Learning with scikit-learn

auto-sklearn auto-sklearn is an automated machine learning toolkit and a drop-in replacement for a scikit-learn estimator. Find the documentation here

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

Module for statistical learning, with a particular emphasis on time-dependent modelling

Operating system Build Status Linux/Mac Windows tick tick is a Python 3 module for statistical learning, with a particular emphasis on time-dependent

50% faster, 50% less RAM Machine Learning. Numba rewritten Sklearn. SVD, NNMF, PCA, LinearReg, RidgeReg, Randomized, Truncated SVD/PCA, CSR Matrices all 50+% faster

[Due to the time taken @ uni, work + hell breaking loose in my life, since things have calmed down a bit, will continue commiting!!!] [By the way, I'm

A modular active learning framework for Python

Modular Active Learning framework for Python3 Page contents Introduction Active learning from bird's-eye view modAL in action From zero to one in a fe

Official code for paper "Optimization for Oriented Object Detection via Representation Invariance Loss".

Optimization for Oriented Object Detection via Representation Invariance Loss By Qi Ming, Zhiqiang Zhou, Lingjuan Miao, Xue Yang, and Yunpeng Dong. Th

Implements Gradient Centralization and allows it to use as a Python package in TensorFlow

Gradient Centralization TensorFlow This Python package implements Gradient Centralization in TensorFlow, a simple and effective optimization technique

MazeRL is an application oriented Deep Reinforcement Learning (RL) framework

MazeRL is an application oriented Deep Reinforcement Learning (RL) framework, addressing real-world decision problems. Our vision is to cover the complete development life cycle of RL applications ranging from simulation engineering up to agent development, training and deployment.

Storchastic is a PyTorch library for stochastic gradient estimation in Deep Learning

Storchastic is a PyTorch library for stochastic gradient estimation in Deep Learning

A tool to convert AWS EC2 instances back and forth between On-Demand and Spot billing models.

ec2-spot-converter This tool converts existing AWS EC2 instances back and forth between On-Demand and 'persistent' Spot billing models while preservin

NFNets and Adaptive Gradient Clipping for SGD implemented in PyTorch

PyTorch implementation of Normalizer-Free Networks and SGD - Adaptive Gradient Clipping Paper: https://arxiv.org/abs/2102.06171.pdf Original code: htt