130 Repositories

Python conjugate-gradient-descent Libraries

TigerLily: Finding drug interactions in silico with the Graph.

Drug Interaction Prediction with Tigerlily Documentation | Example Notebook | Youtube Video | Project Report Tigerlily is a TigerGraph based system de

Machine learning beginner to Kaggle competitor in 30 days. Non-coders welcome. The program starts Monday, August 2, and lasts four weeks. It's designed for people who want to learn machine learning.

30-Days-of-ML-Kaggle 🔥 About the Hands On Program 💻 Machine learning beginner → Kaggle competitor in 30 days. Non-coders welcome The program starts

GLODISMO: Gradient-Based Learning of Discrete Structured Measurement Operators for Signal Recovery

GLODISMO: Gradient-Based Learning of Discrete Structured Measurement Operators for Signal Recovery This is the code to the paper: Gradient-Based Learn

A working (ish) python script to convert text to a gradient.

verticle-horiontal-gradient-script A working (ish) python script to convert text to a gradient. This script is poorly made with the well known python

The Implicit Bias of Gradient Descent on Generalized Gated Linear Networks

The Implicit Bias of Gradient Descent on Generalized Gated Linear Networks This folder contains the code to reproduce the data in "The Implicit Bias o

OMLT: Optimization and Machine Learning Toolkit

OMLT is a Python package for representing machine learning models (neural networks and gradient-boosted trees) within the Pyomo optimization environment.

LightGBM + Optuna: no brainer

AutoLGBM LightGBM + Optuna: no brainer auto train lightgbm directly from CSV files auto tune lightgbm using optuna auto serve best lightgbm model usin

Implementation of linesearch Optimization Algorithms in Python

Nonlinear Optimization Algorithms During my time as Scientific Assistant at the Karlsruhe Institute of Technology (Germany) I implemented various Opti

MetaBalance: Improving Multi-Task Recommendations via Adapting Gradient Magnitudes of Auxiliary Tasks

MetaBalance: Improving Multi-Task Recommendations via Adapting Gradient Magnitudes of Auxiliary Tasks Introduction This repo contains the pytorch impl

Structured Data Gradient Pruning (SDGP)

Structured Data Gradient Pruning (SDGP) Weight pruning is a technique to make Deep Neural Network (DNN) inference more computationally efficient by re

Code for Phase diagram of Stochastic Gradient Descent in high-dimensional two-layer neural networks

Phase diagram of Stochastic Gradient Descent in high-dimensional two-layer neural networks Under construction. Description Code for Phase diagram of S

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc)

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc). Structured a custom ensemble model and a neural network. Found a outperformed model for heart failure prediction accuracy of 88 percent.

hgboost - Hyperoptimized Gradient Boosting

hgboost is short for Hyperoptimized Gradient Boosting and is a python package for hyperparameter optimization for xgboost, catboost and lightboost using cross-validation, and evaluating the results on an independent validation set. hgboost can be applied for classification and regression tasks.

Fibonacci Method Gradient Descent

An implementation of the Fibonacci method for gradient descent, featuring a TKinter GUI for inputting the function / parameters to be examined and a matplotlib plot of the function and results.

Contrastive Loss Gradient Attack (CLGA)

Contrastive Loss Gradient Attack (CLGA) Official implementation of Unsupervised Graph Poisoning Attack via Contrastive Loss Back-propagation, WWW22 Bu

Bag of Tricks for Natural Policy Gradient Reinforcement Learning

Bag of Tricks for Natural Policy Gradient Reinforcement Learning [ArXiv] Setup Python 3.8.0 pip install -r req.txt Mujoco 200 license Main Files main.

Implementation of the federated dual coordinate descent (FedDCD) method.

FedDCD.jl Implementation of the federated dual coordinate descent (FedDCD) method. Installation To install, just call Pkg.add("https://github.com/Zhen

Born-Infeld (BI) for AI: Energy-Conserving Descent (ECD) for Optimization

Born-Infeld (BI) for AI: Energy-Conserving Descent (ECD) for Optimization This repository contains the code for the BBI optimizer, introduced in the p

Gradient - A Python program designed to create a reactive and ambient music listening experience

Gradient is a Python program designed to create a reactive and ambient music listening experience.

Neuron class provides LNU (Linear Neural Unit), QNU (Quadratic Neural Unit), RBF (Radial Basis Function), MLP (Multi Layer Perceptron), MLP-ELM (Multi Layer Perceptron - Extreme Learning Machine) neurons learned with Gradient descent or LeLevenberg–Marquardt algorithm

Neuron class provides LNU (Linear Neural Unit), QNU (Quadratic Neural Unit), RBF (Radial Basis Function), MLP (Multi Layer Perceptron), MLP-ELM (Multi Layer Perceptron - Extreme Learning Machine) neurons learned with Gradient descent or LeLevenberg–Marquardt algorithm

Optimizing synthesizer parameters using gradient approximation

Optimizing synthesizer parameters using gradient approximation NASH 2021 Hackathon! These are some experiments I conducted during NASH 2021, the Neura

Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechanism

Period-alternatives-of-Softmax Experimental Demo for our paper 'Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechani

Implementation for ACProp ( Momentum centering and asynchronous update for adaptive gradient methdos, NeurIPS 2021)

This repository contains code to reproduce results for submission NeurIPS 2021, "Momentum Centering and Asynchronous Update for Adaptive Gradient Meth

Policy Gradient Algorithms (One Step Actor Critic & PPO) from scratch using Numpy

Policy Gradient Algorithms From Scratch (NumPy) This repository showcases two policy gradient algorithms (One Step Actor Critic and Proximal Policy Op

Plotting points that lie on the intersection of the given curves using gradient descent.

Plotting intersection of curves using gradient descent Webapp Link --- What's the app about Why this app Plotting functions and their intersection. A

Python implementation of the Learning Time-Series Shapelets method, that learns a shapelet-based time-series classifier with gradient descent.

shaplets Python implementation of the Learning Time-Series Shapelets method by Josif Grabocka et al., that learns a shapelet-based time-series classif

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control.

Tianshou - An elegant PyTorch deep reinforcement learning library.

Tianshou (天授) is a reinforcement learning platform based on pure PyTorch. Unlike existing reinforcement learning libraries, which are mainly based on

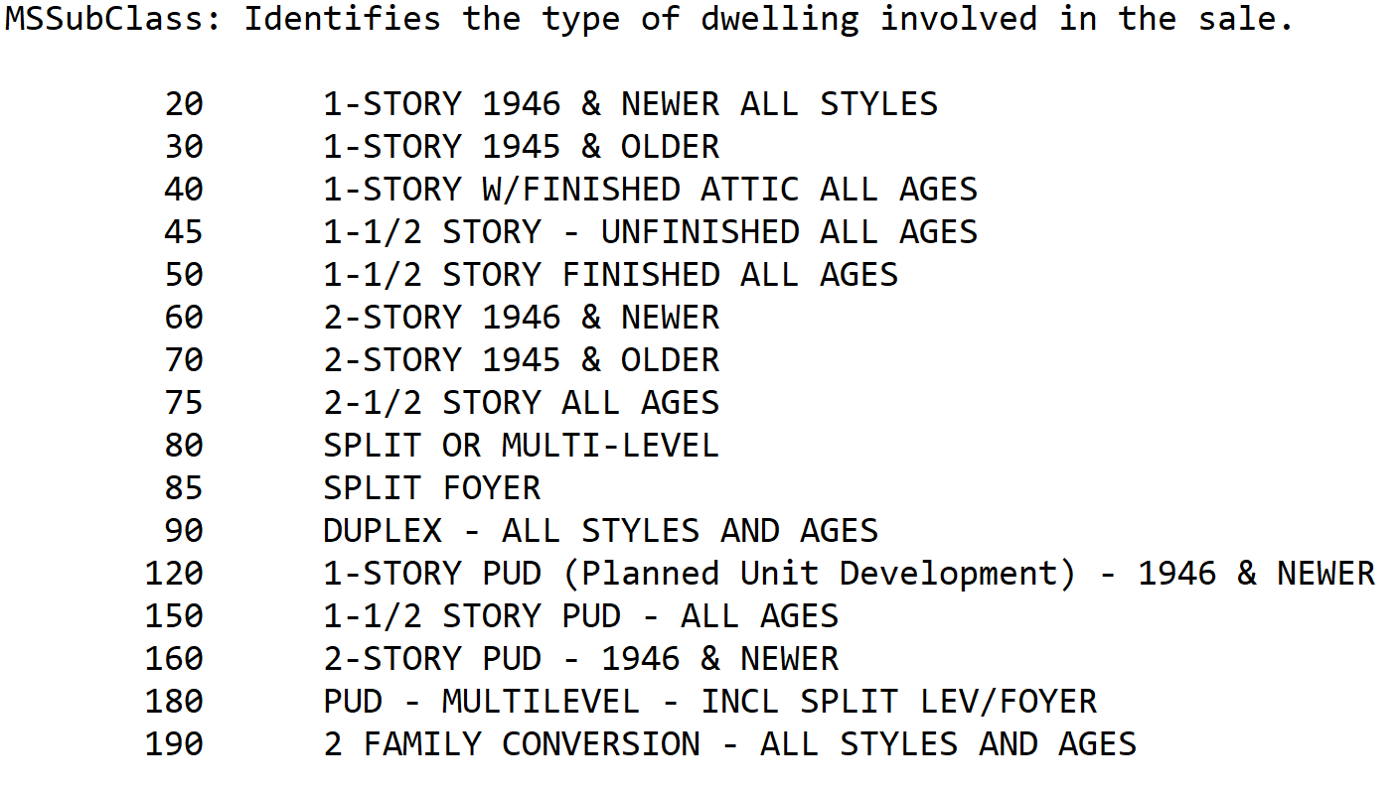

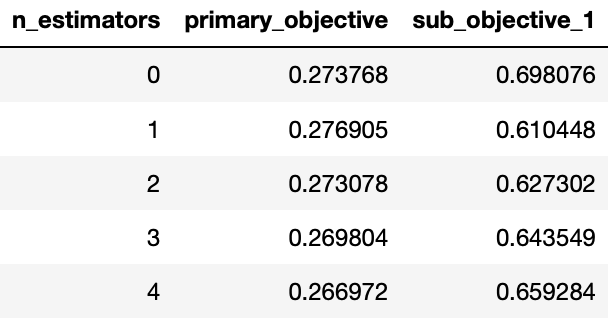

House_prices_kaggle - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

Houseprices - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization

MADGRAD Optimization Method A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization pip install madgrad Try it out! A best

Minimisation of a negative log likelihood fit to extract the lifetime of the D^0 meson (MNLL2ELDM)

Minimisation of a negative log likelihood fit to extract the lifetime of the D^0 meson (MNLL2ELDM) Introduction The average lifetime of the $D^{0}$ me

![[TPDS'21] COSCO: Container Orchestration using Co-Simulation and Gradient Based Optimization for Fog Computing Environments](https://github.com/imperial-qore/COSCO/raw/master/wiki/COSCO.jpg)

[TPDS'21] COSCO: Container Orchestration using Co-Simulation and Gradient Based Optimization for Fog Computing Environments

COSCO Framework COSCO is an AI based coupled-simulation and container orchestration framework for integrated Edge, Fog and Cloud Computing Environment

A TensorFlow implementation of the Mnemonic Descent Method.

MDM A Tensorflow implementation of the Mnemonic Descent Method. Mnemonic Descent Method: A recurrent process applied for end-to-end face alignment G.

Enhancing Twin Delayed Deep Deterministic Policy Gradient with Cross-Entropy Method

Enhancing Twin Delayed Deep Deterministic Policy Gradient with Cross-Entropy Method Hieu Trung Nguyen, Khang Tran and Ngoc Hoang Luong Setup Clone thi

Code repository for "Reducing Underflow in Mixed Precision Training by Gradient Scaling" presented at IJCAI '20

Reducing Underflow in Mixed Precision Training by Gradient Scaling This project implements the gradient scaling method to improve the performance of m

Forecasting with Gradient Boosted Time Series Decomposition

ThymeBoost ThymeBoost combines time series decomposition with gradient boosting to provide a flexible mix-and-match time series framework for spicy fo

Public Code for NIPS submission SimiGrad: Fine-Grained Adaptive Batching for Large ScaleTraining using Gradient Similarity Measurement

Public code for NIPS submission "SimiGrad: Fine-Grained Adaptive Batching for Large Scale Training using Gradient Similarity Measurement" This repo co

Official implementation of the NeurIPS 2021 paper Online Learning Of Neural Computations From Sparse Temporal Feedback

Online Learning Of Neural Computations From Sparse Temporal Feedback This repository is the official implementation of the NeurIPS 2021 paper Online L

LLVM-based compiler for LightGBM gradient-boosted trees. Speeds up prediction by ≥10x.

LLVM-based compiler for LightGBM gradient-boosted trees. Speeds up prediction by ≥10x.

PyNNDescent is a Python nearest neighbor descent for approximate nearest neighbors.

PyNNDescent PyNNDescent is a Python nearest neighbor descent for approximate nearest neighbors. It provides a python implementation of Nearest Neighbo

InfiniteBoost: building infinite ensembles with gradient descent

InfiniteBoost Code for a paper InfiniteBoost: building infinite ensembles with gradient descent (arXiv:1706.01109). A. Rogozhnikov, T. Likhomanenko De

Multiple implementations for abstractive text summurization , using google colab

Text Summarization models if you are able to endorse me on Arxiv, i would be more than glad https://arxiv.org/auth/endorse?x=FRBB89 thanks This repo i

Implementation of Sequence Generative Adversarial Nets with Policy Gradient

SeqGAN Requirements: Tensorflow r1.0.1 Python 2.7 CUDA 7.5+ (For GPU) Introduction Apply Generative Adversarial Nets to generating sequences of discre

Stochastic gradient descent with model building

Stochastic Model Building (SMB) This repository includes a new fast and robust stochastic optimization algorithm for training deep learning models. Th

Responsible Machine Learning with Python

Examples of techniques for training interpretable ML models, explaining ML models, and debugging ML models for accuracy, discrimination, and security.

Fit interpretable models. Explain blackbox machine learning.

InterpretML - Alpha Release In the beginning machines learned in darkness, and data scientists struggled in the void to explain them. Let there be lig

Nevergrad - A gradient-free optimization platform

Nevergrad - A gradient-free optimization platform nevergrad is a Python 3.6+ library. It can be installed with: pip install nevergrad More installati

Code for the paper "There is no Double-Descent in Random Forests"

Code for the paper "There is no Double-Descent in Random Forests" This repository contains the code to run the experiments for our paper called "There

Code for Greedy Gradient Ensemble for Visual Question Answering (ICCV 2021, Oral)

Greedy Gradient Ensemble for De-biased VQA Code release for "Greedy Gradient Ensemble for Robust Visual Question Answering" (ICCV 2021, Oral). GGE can

PyTorch implementation of Spiking Neural Networks trained on surrogate gradient & BPTT using snntorch.

snn-localization repo PyTorch implementation of Spiking Neural Networks trained on surrogate gradient & BPTT using snntorch. Install Dependencies Orig

Trains an agent with stochastic policy gradient ascent to solve the Lunar Lander challenge from OpenAI

Introduction This script trains an agent with stochastic policy gradient ascent to solve the Lunar Lander challenge from OpenAI. In order to run this

Official implementation of Generalized Data Weighting via Class-level Gradient Manipulation (NeurIPS 2021).

Generalized Data Weighting via Class-level Gradient Manipulation This repository is the official implementation of Generalized Data Weighting via Clas

Generalized Data Weighting via Class-level Gradient Manipulation

Generalized Data Weighting via Class-level Gradient Manipulation This repository is the official implementation of Generalized Data Weighting via Clas

The command line interface for Gradient - Gradient is an an end-to-end MLOps platform

Gradient CLI Get started: Create Account • Install CLI • Tutorials • Docs Resources: Website • Blog • Support • Contact Sales Gradient is an an end-to

Gradient representations in ReLU networks as similarity functions

Gradient representations in ReLU networks as similarity functions by Dániel Rácz and Bálint Daróczy. This repo contains the python code related to our

CARMS: Categorical-Antithetic-REINFORCE Multi-Sample Gradient Estimator

CARMS: Categorical-Antithetic-REINFORCE Multi-Sample Gradient Estimator This is the official code repository for NeurIPS 2021 paper: CARMS: Categorica

Learning where to learn - Gradient sparsity in meta and continual learning

Learning where to learn - Gradient sparsity in meta and continual learning In this paper, we investigate gradient sparsity found by MAML in various co

Gradient Inversion with Generative Image Prior

Gradient Inversion with Generative Image Prior This repository is an implementation of "Gradient Inversion with Generative Image Prior", accepted to N

Iterative stochastic gradient descent (SGD) linear regressor with regularization

SGD-Linear-Regressor Iterative stochastic gradient descent (SGD) linear regressor with regularization Dataset: Kaggle “Graduate Admission 2” https://w

Time Discretization-Invariant Safe Action Repetition for Policy Gradient Methods

Time Discretization-Invariant Safe Action Repetition for Policy Gradient Methods This repository is the official implementation of Seohong Park, Jaeky

Stochastic Gradient Trees implementation in Python

Stochastic Gradient Trees - Python Stochastic Gradient Trees1 by Henry Gouk, Bernhard Pfahringer, and Eibe Frank implementation in Python. Based on th

[WACV 2022] Contextual Gradient Scaling for Few-Shot Learning

CxGrad - Official PyTorch Implementation Contextual Gradient Scaling for Few-Shot Learning Sanghyuk Lee, Seunghyun Lee, and Byung Cheol Song In WACV 2

MEND: Model Editing Networks using Gradient Decomposition

MEND: Model Editing Networks using Gradient Decomposition Setup Environment This codebase uses Python 3.7.9. Other versions may work as well. Create a

A new mini-batch framework for optimal transport in deep generative models, deep domain adaptation, approximate Bayesian computation, color transfer, and gradient flow.

BoMb-OT Python3 implementation of the papers On Transportation of Mini-batches: A Hierarchical Approach and Improving Mini-batch Optimal Transport via

Ranger - a synergistic optimizer using RAdam (Rectified Adam), Gradient Centralization and LookAhead in one codebase

Ranger-Deep-Learning-Optimizer Ranger - a synergistic optimizer combining RAdam (Rectified Adam) and LookAhead, and now GC (gradient centralization) i

Official PyTorch implementation of "Edge Rewiring Goes Neural: Boosting Network Resilience via Policy Gradient".

Edge Rewiring Goes Neural: Boosting Network Resilience via Policy Gradient This repository is the official PyTorch implementation of "Edge Rewiring Go

PyTorch implementation of Constrained Policy Optimization

PyTorch implementation of Constrained Policy Optimization (CPO) This repository has a simple to understand and use implementation of CPO in PyTorch. A

Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train format

ttopt Description Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train (TT) format and maximu

BasicRL: easy and fundamental codes for deep reinforcement learning。It is an improvement on rainbow-is-all-you-need and OpenAI Spinning Up.

BasicRL: easy and fundamental codes for deep reinforcement learning BasicRL is an improvement on rainbow-is-all-you-need and OpenAI Spinning Up. It is

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

Gradient Step Denoiser for convergent Plug-and-Play

Source code for the paper "Gradient Step Denoiser for convergent Plug-and-Play"

Training vision models with full-batch gradient descent and regularization

Stochastic Training is Not Necessary for Generalization -- Training competitive vision models without stochasticity This repository implements trainin

Pytorch implementation of convolutional neural network visualization techniques

Convolutional Neural Network Visualizations This repository contains a number of convolutional neural network visualization techniques implemented in

Tutorial for surrogate gradient learning in spiking neural networks

SpyTorch A tutorial on surrogate gradient learning in spiking neural networks Version: 0.4 This repository contains tutorial files to get you started

A PyTorch implementation of Learning to learn by gradient descent by gradient descent

Intro PyTorch implementation of Learning to learn by gradient descent by gradient descent. Run python main.py TODO Initial implementation Toy data LST

A PyTorch implementation of Learning to learn by gradient descent by gradient descent

Intro PyTorch implementation of Learning to learn by gradient descent by gradient descent. Run python main.py TODO Initial implementation Toy data LST

Implementation of algorithms for continuous control (DDPG and NAF).

DEPRECATION This repository is deprecated and is no longer maintaned. Please see a more recent implementation of RL for continuous control at jax-sac.

Code for the paper "TadGAN: Time Series Anomaly Detection Using Generative Adversarial Networks"

TadGAN: Time Series Anomaly Detection Using Generative Adversarial Networks This is a Python3 / Pytorch implementation of TadGAN paper. The associated

Continuum Learning with GEM: Gradient Episodic Memory

Gradient Episodic Memory for Continual Learning Source code for the paper: @inproceedings{GradientEpisodicMemory, title={Gradient Episodic Memory

Pytorch Implementation of paper "Noisy Natural Gradient as Variational Inference"

Noisy Natural Gradient as Variational Inference PyTorch implementation of Noisy Natural Gradient as Variational Inference. Requirements Python 3 Pytor

Proximal Backpropagation - a neural network training algorithm that takes implicit instead of explicit gradient steps

Proximal Backpropagation Proximal Backpropagation (ProxProp) is a neural network training algorithm that takes implicit instead of explicit gradient s

This is the pytorch implementation of the paper - Axiomatic Attribution for Deep Networks.

Integrated Gradients This is the pytorch implementation of "Axiomatic Attribution for Deep Networks". The original tensorflow version could be found h

Bonsai: Gradient Boosted Trees + Bayesian Optimization

Bonsai is a wrapper for the XGBoost and Catboost model training pipelines that leverages Bayesian optimization for computationally efficient hyperparameter tuning.

Official implementation of paper Gradient Matching for Domain Generalization

Gradient Matching for Domain Generalisation This is the official PyTorch implementation of Gradient Matching for Domain Generalisation. In our paper,

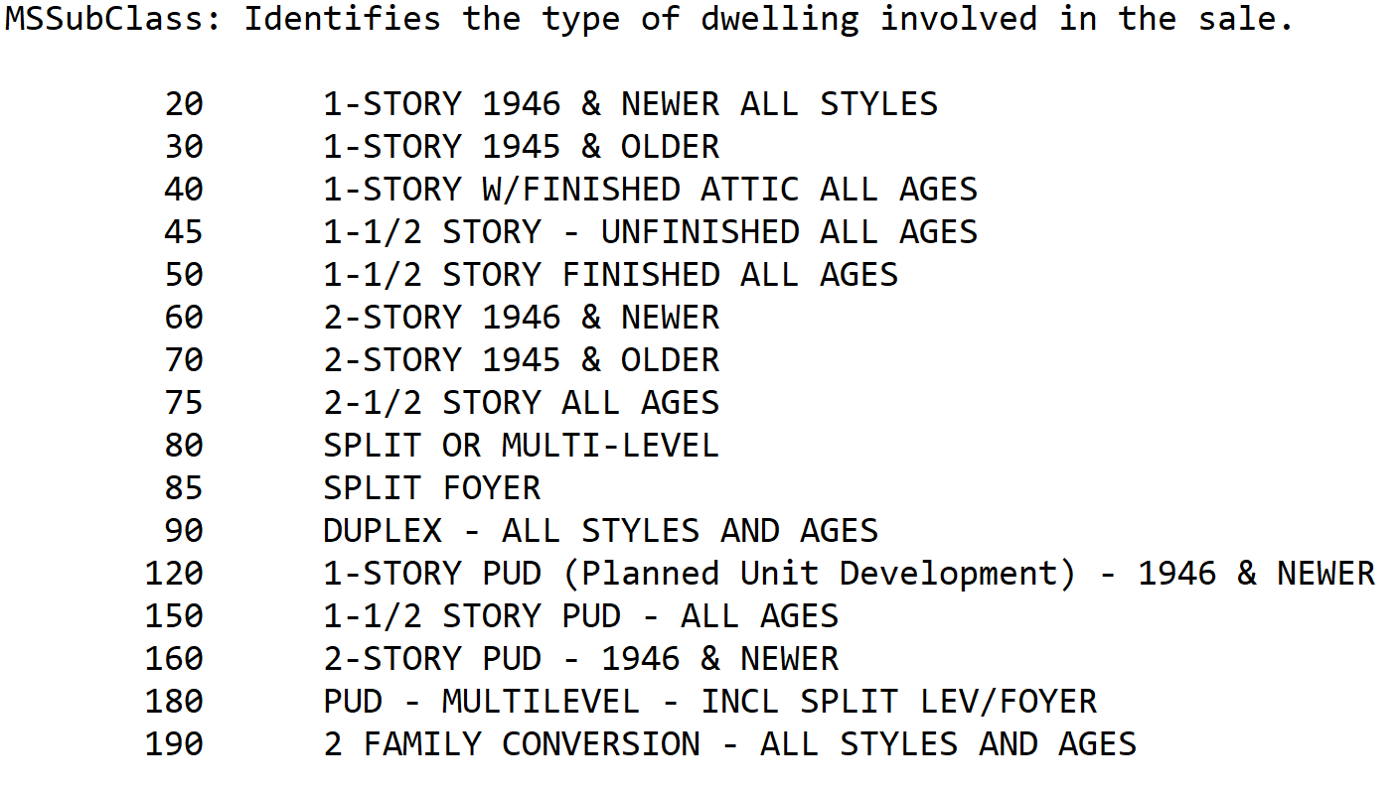

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees.

MooGBT is a library for Multi-objective optimization in Gradient Boosted Trees. MooGBT optimizes for multiple objectives by defining constraints on sub-objective(s) along with a primary objective. The constraints are defined as upper bounds on sub-objective loss function. MooGBT uses a Augmented Lagrangian(AL) based constrained optimization framework with Gradient Boosted Trees, to optimize for multiple objectives.

Implementation of Learning Gradient Fields for Molecular Conformation Generation (ICML 2021).

[PDF] | [Slides] The official implementation of Learning Gradient Fields for Molecular Conformation Generation (ICML 2021 Long talk) Installation Inst

A versatile token stream for handwritten parsers.

Writing recursive-descent parsers by hand can be quite elegant but it's often a bit more verbose than expected, especially when it comes to handling indentation and reporting proper syntax errors. This package provides a powerful general-purpose token stream that addresses these issues and more.

Custom TensorFlow2 implementations of forward and backward computation of soft-DTW algorithm in batch mode.

Batch Soft-DTW(Dynamic Time Warping) in TensorFlow2 including forward and backward computation Custom TensorFlow2 implementations of forward and backw

ML Optimizers from scratch using JAX

Toy implementations of some popular ML optimizers using Python/JAX

On the model-based stochastic value gradient for continuous reinforcement learning

On the model-based stochastic value gradient for continuous reinforcement learning This repository is by Brandon Amos, Samuel Stanton, Denis Yarats, a

Probabilistic Gradient Boosting Machines

PGBM Probabilistic Gradient Boosting Machines (PGBM) is a probabilistic gradient boosting framework in Python based on PyTorch/Numba, developed by Air

The official implementation of You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient.

You Only Compress Once: Towards Effective and Elastic BERT Compression via Exploit-Explore Stochastic Nature Gradient (paper) @misc{zhang2021compress,

Code release to accompany paper "Geometry-Aware Gradient Algorithms for Neural Architecture Search."

Geometry-Aware Gradient Algorithms for Neural Architecture Search This repository contains the code required to run the experiments for the DARTS sear

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

![[ECCV 2020] Gradient-Induced Co-Saliency Detection](https://github.com/zzhanghub/gicd/raw/master/img/GICD_LOGO.png)

[ECCV 2020] Gradient-Induced Co-Saliency Detection

Gradient-Induced Co-Saliency Detection Zhao Zhang*, Wenda Jin*, Jun Xu, Ming-Ming Cheng ⭐ Project Home » The official repo of the ECCV 2020 paper Grad

Paddle-RLBooks is a reinforcement learning code study guide based on pure PaddlePaddle.

Paddle-RLBooks Welcome to Paddle-RLBooks which is a reinforcement learning code study guide based on pure PaddlePaddle. 欢迎来到Paddle-RLBooks,该仓库主要是针对强化学

A collection of various RL algorithms like policy gradients, DQN and PPO. The goal of this repo will be to make it a go-to resource for learning about RL. How to visualize, debug and solve RL problems. I've additionally included playground.py for learning more about OpenAI gym, etc.

Reinforcement Learning (PyTorch) 🤖 + 🍰 = ❤️ This repo will contain PyTorch implementation of various fundamental RL algorithms. It's aimed at making

A Closer Look at Invalid Action Masking in Policy Gradient Algorithms

A Closer Look at Invalid Action Masking in Policy Gradient Algorithms This repo contains the source code to reproduce the results in the paper A Close

![[CVPRW 21]](https://github.com/VITA-Group/BNN_NoBN/raw/main/Figs/res.png)

[CVPRW 21] "BNN - BN = ? Training Binary Neural Networks without Batch Normalization", Tianlong Chen, Zhenyu Zhang, Xu Ouyang, Zechun Liu, Zhiqiang Shen, Zhangyang Wang

BNN - BN = ? Training Binary Neural Networks without Batch Normalization Codes for this paper BNN - BN = ? Training Binary Neural Networks without Bat