177 Repositories

Python stochastic-gradient-descent Libraries

TigerLily: Finding drug interactions in silico with the Graph.

Drug Interaction Prediction with Tigerlily Documentation | Example Notebook | Youtube Video | Project Report Tigerlily is a TigerGraph based system de

![[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).](https://github.com/Graph-COM/GSAT/raw/main/data/arch.png)

[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).

Graph Stochastic Attention (GSAT) The official implementation of GSAT for our paper: Interpretable and Generalizable Graph Learning via Stochastic Att

Machine learning beginner to Kaggle competitor in 30 days. Non-coders welcome. The program starts Monday, August 2, and lasts four weeks. It's designed for people who want to learn machine learning.

30-Days-of-ML-Kaggle 🔥 About the Hands On Program 💻 Machine learning beginner → Kaggle competitor in 30 days. Non-coders welcome The program starts

PyTorch implementation of SCAFFOLD (Stochastic Controlled Averaging for Federated Learning, ICML 2020).

Scaffold-Federated-Learning PyTorch implementation of SCAFFOLD (Stochastic Controlled Averaging for Federated Learning, ICML 2020). Environment numpy=

This is the code repository for LRM Stochastic watershed model.

LRM-Squannacook Input data for generating stochastic streamflows are observed and simulated timeseries of streamflow. their format needs to be CSV wit

GLODISMO: Gradient-Based Learning of Discrete Structured Measurement Operators for Signal Recovery

GLODISMO: Gradient-Based Learning of Discrete Structured Measurement Operators for Signal Recovery This is the code to the paper: Gradient-Based Learn

A working (ish) python script to convert text to a gradient.

verticle-horiontal-gradient-script A working (ish) python script to convert text to a gradient. This script is poorly made with the well known python

The Implicit Bias of Gradient Descent on Generalized Gated Linear Networks

The Implicit Bias of Gradient Descent on Generalized Gated Linear Networks This folder contains the code to reproduce the data in "The Implicit Bias o

Code needed to reproduce the examples found in "The Temporal Robustness of Stochastic Signals"

The Temporal Robustness of Stochastic Signals Code needed to reproduce the examples found in "The Temporal Robustness of Stochastic Signals" Case stud

OMLT: Optimization and Machine Learning Toolkit

OMLT is a Python package for representing machine learning models (neural networks and gradient-boosted trees) within the Pyomo optimization environment.

LightGBM + Optuna: no brainer

AutoLGBM LightGBM + Optuna: no brainer auto train lightgbm directly from CSV files auto tune lightgbm using optuna auto serve best lightgbm model usin

Implementation of linesearch Optimization Algorithms in Python

Nonlinear Optimization Algorithms During my time as Scientific Assistant at the Karlsruhe Institute of Technology (Germany) I implemented various Opti

MetaBalance: Improving Multi-Task Recommendations via Adapting Gradient Magnitudes of Auxiliary Tasks

MetaBalance: Improving Multi-Task Recommendations via Adapting Gradient Magnitudes of Auxiliary Tasks Introduction This repo contains the pytorch impl

Structured Data Gradient Pruning (SDGP)

Structured Data Gradient Pruning (SDGP) Weight pruning is a technique to make Deep Neural Network (DNN) inference more computationally efficient by re

Code for Phase diagram of Stochastic Gradient Descent in high-dimensional two-layer neural networks

Phase diagram of Stochastic Gradient Descent in high-dimensional two-layer neural networks Under construction. Description Code for Phase diagram of S

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc)

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc). Structured a custom ensemble model and a neural network. Found a outperformed model for heart failure prediction accuracy of 88 percent.

hgboost - Hyperoptimized Gradient Boosting

hgboost is short for Hyperoptimized Gradient Boosting and is a python package for hyperparameter optimization for xgboost, catboost and lightboost using cross-validation, and evaluating the results on an independent validation set. hgboost can be applied for classification and regression tasks.

Fibonacci Method Gradient Descent

An implementation of the Fibonacci method for gradient descent, featuring a TKinter GUI for inputting the function / parameters to be examined and a matplotlib plot of the function and results.

Contrastive Loss Gradient Attack (CLGA)

Contrastive Loss Gradient Attack (CLGA) Official implementation of Unsupervised Graph Poisoning Attack via Contrastive Loss Back-propagation, WWW22 Bu

Bag of Tricks for Natural Policy Gradient Reinforcement Learning

Bag of Tricks for Natural Policy Gradient Reinforcement Learning [ArXiv] Setup Python 3.8.0 pip install -r req.txt Mujoco 200 license Main Files main.

Implementation of the federated dual coordinate descent (FedDCD) method.

FedDCD.jl Implementation of the federated dual coordinate descent (FedDCD) method. Installation To install, just call Pkg.add("https://github.com/Zhen

Born-Infeld (BI) for AI: Energy-Conserving Descent (ECD) for Optimization

Born-Infeld (BI) for AI: Energy-Conserving Descent (ECD) for Optimization This repository contains the code for the BBI optimizer, introduced in the p

Our implementation of Gillespie's Stochastic Simulation Algorithm (SSA)

SSA Our implementation of Gillespie's Stochastic Simulation Algorithm (SSA) Requirements python =3.7 numpy pandas matplotlib pyyaml Command line usag

Sinkformers: Transformers with Doubly Stochastic Attention

Code for the paper : "Sinkformers: Transformers with Doubly Stochastic Attention" Paper You will find our paper here. Compat This package has been dev

Gradient - A Python program designed to create a reactive and ambient music listening experience

Gradient is a Python program designed to create a reactive and ambient music listening experience.

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

Neuron class provides LNU (Linear Neural Unit), QNU (Quadratic Neural Unit), RBF (Radial Basis Function), MLP (Multi Layer Perceptron), MLP-ELM (Multi Layer Perceptron - Extreme Learning Machine) neurons learned with Gradient descent or LeLevenberg–Marquardt algorithm

Neuron class provides LNU (Linear Neural Unit), QNU (Quadratic Neural Unit), RBF (Radial Basis Function), MLP (Multi Layer Perceptron), MLP-ELM (Multi Layer Perceptron - Extreme Learning Machine) neurons learned with Gradient descent or LeLevenberg–Marquardt algorithm

Optimizing synthesizer parameters using gradient approximation

Optimizing synthesizer parameters using gradient approximation NASH 2021 Hackathon! These are some experiments I conducted during NASH 2021, the Neura

Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechanism

Period-alternatives-of-Softmax Experimental Demo for our paper 'Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechani

Implementation for ACProp ( Momentum centering and asynchronous update for adaptive gradient methdos, NeurIPS 2021)

This repository contains code to reproduce results for submission NeurIPS 2021, "Momentum Centering and Asynchronous Update for Adaptive Gradient Meth

Policy Gradient Algorithms (One Step Actor Critic & PPO) from scratch using Numpy

Policy Gradient Algorithms From Scratch (NumPy) This repository showcases two policy gradient algorithms (One Step Actor Critic and Proximal Policy Op

Performance Analysis of Multi-user NOMA Wireless-Powered mMTC Networks: A Stochastic Geometry Approach

Performance Analysis of Multi-user NOMA Wireless-Powered mMTC Networks: A Stochastic Geometry Approach Thanh Luan Nguyen, Tri Nhu Do, Georges Kaddoum

Use stochastic processes to generate samples and use them to train a fully-connected neural network based on Keras

Use stochastic processes to generate samples and use them to train a fully-connected neural network based on Keras which will then be used to generate residuals

Plotting points that lie on the intersection of the given curves using gradient descent.

Plotting intersection of curves using gradient descent Webapp Link --- What's the app about Why this app Plotting functions and their intersection. A

Python implementation of the Learning Time-Series Shapelets method, that learns a shapelet-based time-series classifier with gradient descent.

shaplets Python implementation of the Learning Time-Series Shapelets method by Josif Grabocka et al., that learns a shapelet-based time-series classif

Official re-implementation of the Calibrated Adversarial Refinement model described in the paper Calibrated Adversarial Refinement for Stochastic Semantic Segmentation

Official re-implementation of the Calibrated Adversarial Refinement model described in the paper Calibrated Adversarial Refinement for Stochastic Semantic Segmentation

Pytorch implemenation of Stochastic Multi-Label Image-to-image Translation (SMIT)

SMIT: Stochastic Multi-Label Image-to-image Translation This repository provides a PyTorch implementation of SMIT. SMIT can stochastically translate a

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control.

Tianshou - An elegant PyTorch deep reinforcement learning library.

Tianshou (天授) is a reinforcement learning platform based on pure PyTorch. Unlike existing reinforcement learning libraries, which are mainly based on

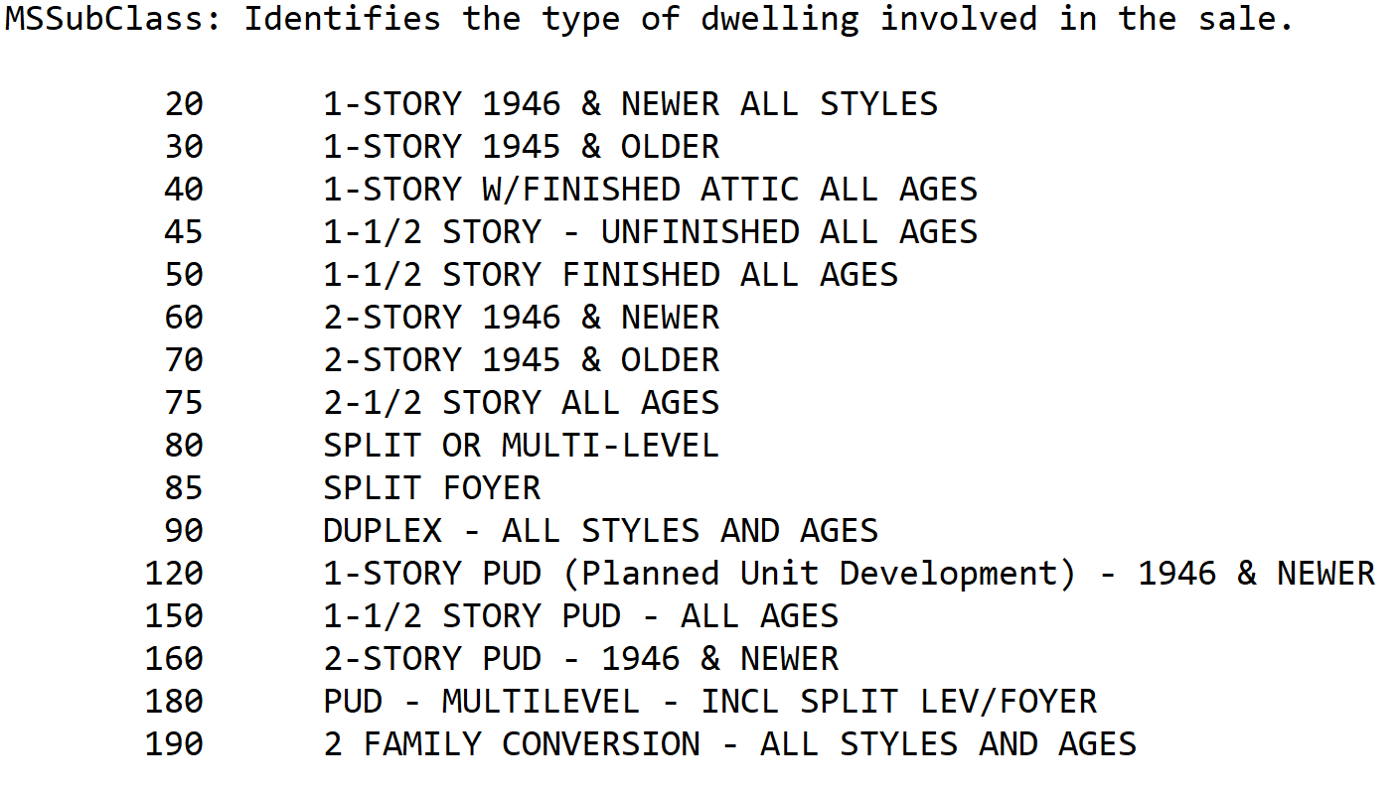

House_prices_kaggle - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

Houseprices - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization

MADGRAD Optimization Method A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization pip install madgrad Try it out! A best

JumpDiff: Non-parametric estimator for Jump-diffusion processes for Python

jumpdiff jumpdiff is a python library with non-parametric Nadaraya─Watson estimators to extract the parameters of jump-diffusion processes. With jumpd

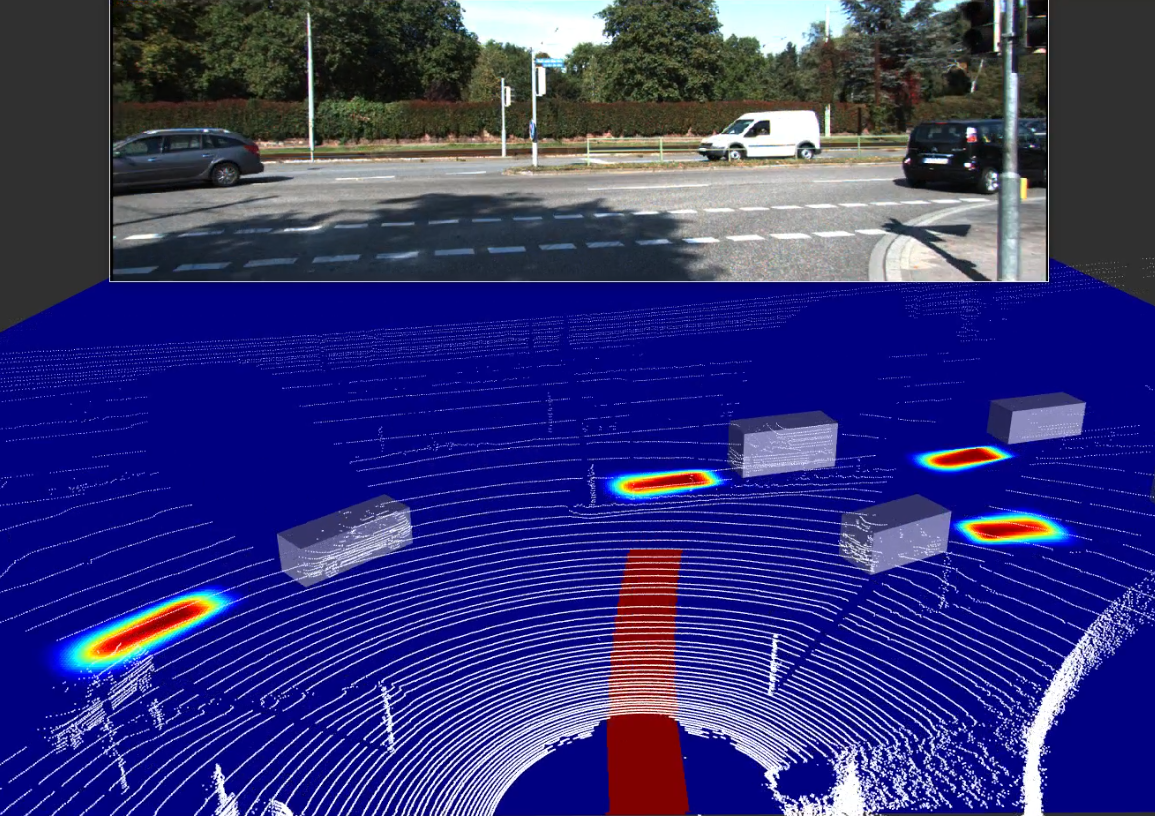

Stochastic Tensor Optimization for Robot Motion - A GPU Robot Motion Toolkit

STORM Stochastic Tensor Optimization for Robot Motion - A GPU Robot Motion Toolkit [Install Instructions] [Paper] [Website] This package contains code

Minimisation of a negative log likelihood fit to extract the lifetime of the D^0 meson (MNLL2ELDM)

Minimisation of a negative log likelihood fit to extract the lifetime of the D^0 meson (MNLL2ELDM) Introduction The average lifetime of the $D^{0}$ me

![[TPDS'21] COSCO: Container Orchestration using Co-Simulation and Gradient Based Optimization for Fog Computing Environments](https://github.com/imperial-qore/COSCO/raw/master/wiki/COSCO.jpg)

[TPDS'21] COSCO: Container Orchestration using Co-Simulation and Gradient Based Optimization for Fog Computing Environments

COSCO Framework COSCO is an AI based coupled-simulation and container orchestration framework for integrated Edge, Fog and Cloud Computing Environment

Python framework for Stochastic Differential Equations modeling

SDElearn: a Python package for SDE modeling This package implements functionalities for working with Stochastic Differential Equations models (SDEs fo

A TensorFlow implementation of the Mnemonic Descent Method.

MDM A Tensorflow implementation of the Mnemonic Descent Method. Mnemonic Descent Method: A recurrent process applied for end-to-end face alignment G.

Enhancing Twin Delayed Deep Deterministic Policy Gradient with Cross-Entropy Method

Enhancing Twin Delayed Deep Deterministic Policy Gradient with Cross-Entropy Method Hieu Trung Nguyen, Khang Tran and Ngoc Hoang Luong Setup Clone thi

SLAMP: Stochastic Latent Appearance and Motion Prediction

SLAMP: Stochastic Latent Appearance and Motion Prediction Official implementation of the paper SLAMP: Stochastic Latent Appearance and Motion Predicti

Code repository for "Reducing Underflow in Mixed Precision Training by Gradient Scaling" presented at IJCAI '20

Reducing Underflow in Mixed Precision Training by Gradient Scaling This project implements the gradient scaling method to improve the performance of m

Forecasting with Gradient Boosted Time Series Decomposition

ThymeBoost ThymeBoost combines time series decomposition with gradient boosting to provide a flexible mix-and-match time series framework for spicy fo

This is the official repository of the paper Stocastic bandits with groups of similar arms (NeurIPS 2021). It contains the code that was used to compute the figures and experiments of the paper.

Experiments How to reproduce experimental results of Stochastic bandits with groups of similar arms submitted paper ? Section 5 of the paper To reprod

Public Code for NIPS submission SimiGrad: Fine-Grained Adaptive Batching for Large ScaleTraining using Gradient Similarity Measurement

Public code for NIPS submission "SimiGrad: Fine-Grained Adaptive Batching for Large Scale Training using Gradient Similarity Measurement" This repo co

Official implementation of the NeurIPS 2021 paper Online Learning Of Neural Computations From Sparse Temporal Feedback

Online Learning Of Neural Computations From Sparse Temporal Feedback This repository is the official implementation of the NeurIPS 2021 paper Online L

LLVM-based compiler for LightGBM gradient-boosted trees. Speeds up prediction by ≥10x.

LLVM-based compiler for LightGBM gradient-boosted trees. Speeds up prediction by ≥10x.

This thesis is mainly concerned with state-space methods for a class of deep Gaussian process (DGP) regression problems

Doctoral dissertation of Zheng Zhao This thesis is mainly concerned with state-space methods for a class of deep Gaussian process (DGP) regression pro

PyNNDescent is a Python nearest neighbor descent for approximate nearest neighbors.

PyNNDescent PyNNDescent is a Python nearest neighbor descent for approximate nearest neighbors. It provides a python implementation of Nearest Neighbo

InfiniteBoost: building infinite ensembles with gradient descent

InfiniteBoost Code for a paper InfiniteBoost: building infinite ensembles with gradient descent (arXiv:1706.01109). A. Rogozhnikov, T. Likhomanenko De

PyTorch implementation for Score-Based Generative Modeling through Stochastic Differential Equations (ICLR 2021, Oral)

Score-Based Generative Modeling through Stochastic Differential Equations This repo contains a PyTorch implementation for the paper Score-Based Genera

Multiple implementations for abstractive text summurization , using google colab

Text Summarization models if you are able to endorse me on Arxiv, i would be more than glad https://arxiv.org/auth/endorse?x=FRBB89 thanks This repo i

Implementation of Sequence Generative Adversarial Nets with Policy Gradient

SeqGAN Requirements: Tensorflow r1.0.1 Python 2.7 CUDA 7.5+ (For GPU) Introduction Apply Generative Adversarial Nets to generating sequences of discre

Stochastic gradient descent with model building

Stochastic Model Building (SMB) This repository includes a new fast and robust stochastic optimization algorithm for training deep learning models. Th

Stochastic Extragradient: General Analysis and Improved Rates

Stochastic Extragradient: General Analysis and Improved Rates This repository is the official implementation of the paper "Stochastic Extragradient: G

Responsible Machine Learning with Python

Examples of techniques for training interpretable ML models, explaining ML models, and debugging ML models for accuracy, discrimination, and security.

Fit interpretable models. Explain blackbox machine learning.

InterpretML - Alpha Release In the beginning machines learned in darkness, and data scientists struggled in the void to explain them. Let there be lig

Nevergrad - A gradient-free optimization platform

Nevergrad - A gradient-free optimization platform nevergrad is a Python 3.6+ library. It can be installed with: pip install nevergrad More installati

A simple Python library for stochastic graphical ecological models

What is Viridicle? Viridicle is a library for simulating stochastic graphical ecological models. It implements the continuous time models described in

Code for the paper "There is no Double-Descent in Random Forests"

Code for the paper "There is no Double-Descent in Random Forests" This repository contains the code to run the experiments for our paper called "There

Collision risk estimation using stochastic motion models

collision_risk_estimation Collision risk estimation using stochastic motion models. This is a new approach, based on stochastic models, to predict the

This package is a python library with tools for the Molecular Simulation - Software Gromos.

This package is a python library with tools for the Molecular Simulation - Software Gromos. It allows you to easily set up, manage and analyze simulations in python.

Code for Greedy Gradient Ensemble for Visual Question Answering (ICCV 2021, Oral)

Greedy Gradient Ensemble for De-biased VQA Code release for "Greedy Gradient Ensemble for Robust Visual Question Answering" (ICCV 2021, Oral). GGE can

PyTorch implementation of Spiking Neural Networks trained on surrogate gradient & BPTT using snntorch.

snn-localization repo PyTorch implementation of Spiking Neural Networks trained on surrogate gradient & BPTT using snntorch. Install Dependencies Orig

Trains an agent with stochastic policy gradient ascent to solve the Lunar Lander challenge from OpenAI

Introduction This script trains an agent with stochastic policy gradient ascent to solve the Lunar Lander challenge from OpenAI. In order to run this

Stochastic Scene-Aware Motion Prediction

Stochastic Scene-Aware Motion Prediction [Project Page] [Paper] Description This repository contains the training code for MotionNet and GoalNet of SA

Official implementation of Generalized Data Weighting via Class-level Gradient Manipulation (NeurIPS 2021).

Generalized Data Weighting via Class-level Gradient Manipulation This repository is the official implementation of Generalized Data Weighting via Clas

Generalized Data Weighting via Class-level Gradient Manipulation

Generalized Data Weighting via Class-level Gradient Manipulation This repository is the official implementation of Generalized Data Weighting via Clas

The command line interface for Gradient - Gradient is an an end-to-end MLOps platform

Gradient CLI Get started: Create Account • Install CLI • Tutorials • Docs Resources: Website • Blog • Support • Contact Sales Gradient is an an end-to

Gradient representations in ReLU networks as similarity functions

Gradient representations in ReLU networks as similarity functions by Dániel Rácz and Bálint Daróczy. This repo contains the python code related to our

CARMS: Categorical-Antithetic-REINFORCE Multi-Sample Gradient Estimator

CARMS: Categorical-Antithetic-REINFORCE Multi-Sample Gradient Estimator This is the official code repository for NeurIPS 2021 paper: CARMS: Categorica

Learning where to learn - Gradient sparsity in meta and continual learning

Learning where to learn - Gradient sparsity in meta and continual learning In this paper, we investigate gradient sparsity found by MAML in various co

Gradient Inversion with Generative Image Prior

Gradient Inversion with Generative Image Prior This repository is an implementation of "Gradient Inversion with Generative Image Prior", accepted to N

Iterative stochastic gradient descent (SGD) linear regressor with regularization

SGD-Linear-Regressor Iterative stochastic gradient descent (SGD) linear regressor with regularization Dataset: Kaggle “Graduate Admission 2” https://w

Code of the paper "Multi-Task Meta-Learning Modification with Stochastic Approximation".

Multi-Task Meta-Learning Modification with Stochastic Approximation This repository contains the code for the paper "Multi-Task Meta-Learning Modifica

Unsupervised Document Expansion for Information Retrieval with Stochastic Text Generation

Unsupervised Document Expansion for Information Retrieval with Stochastic Text Generation Official Code Repository for the paper "Unsupervised Documen

This GitHub repository contains code used for plots in NeurIPS 2021 paper 'Stochastic Multi-Armed Bandits with Control Variates.'

About Repository This repository contains code used for plots in NeurIPS 2021 paper 'Stochastic Multi-Armed Bandits with Control Variates.' About Code

Time Discretization-Invariant Safe Action Repetition for Policy Gradient Methods

Time Discretization-Invariant Safe Action Repetition for Policy Gradient Methods This repository is the official implementation of Seohong Park, Jaeky

Stochastic Gradient Trees implementation in Python

Stochastic Gradient Trees - Python Stochastic Gradient Trees1 by Henry Gouk, Bernhard Pfahringer, and Eibe Frank implementation in Python. Based on th

[WACV 2022] Contextual Gradient Scaling for Few-Shot Learning

CxGrad - Official PyTorch Implementation Contextual Gradient Scaling for Few-Shot Learning Sanghyuk Lee, Seunghyun Lee, and Byung Cheol Song In WACV 2

MEND: Model Editing Networks using Gradient Decomposition

MEND: Model Editing Networks using Gradient Decomposition Setup Environment This codebase uses Python 3.7.9. Other versions may work as well. Create a

A new mini-batch framework for optimal transport in deep generative models, deep domain adaptation, approximate Bayesian computation, color transfer, and gradient flow.

BoMb-OT Python3 implementation of the papers On Transportation of Mini-batches: A Hierarchical Approach and Improving Mini-batch Optimal Transport via

Ranger - a synergistic optimizer using RAdam (Rectified Adam), Gradient Centralization and LookAhead in one codebase

Ranger-Deep-Learning-Optimizer Ranger - a synergistic optimizer combining RAdam (Rectified Adam) and LookAhead, and now GC (gradient centralization) i

Official PyTorch implementation of "Edge Rewiring Goes Neural: Boosting Network Resilience via Policy Gradient".

Edge Rewiring Goes Neural: Boosting Network Resilience via Policy Gradient This repository is the official PyTorch implementation of "Edge Rewiring Go

PyTorch implementation of Constrained Policy Optimization

PyTorch implementation of Constrained Policy Optimization (CPO) This repository has a simple to understand and use implementation of CPO in PyTorch. A

Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train format

ttopt Description Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train (TT) format and maximu

Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE)

OG-SPACE Introduction Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE) is a computational framewo

Imitating Deep Learning Dynamics via Locally Elastic Stochastic Differential Equations

Imitating Deep Learning Dynamics via Locally Elastic Stochastic Differential Equations This repo contains official code for the NeurIPS 2021 paper Imi

BasicRL: easy and fundamental codes for deep reinforcement learning。It is an improvement on rainbow-is-all-you-need and OpenAI Spinning Up.

BasicRL: easy and fundamental codes for deep reinforcement learning BasicRL is an improvement on rainbow-is-all-you-need and OpenAI Spinning Up. It is

This package implements THOR: Transformer with Stochastic Experts.

THOR: Transformer with Stochastic Experts This PyTorch package implements Taming Sparsely Activated Transformer with Stochastic Experts. Installation

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo