1088 Repositories

Python graph-attention Libraries

TorchDrug is a PyTorch-based machine learning toolbox designed for drug discovery

A powerful and flexible machine learning platform for drug discovery

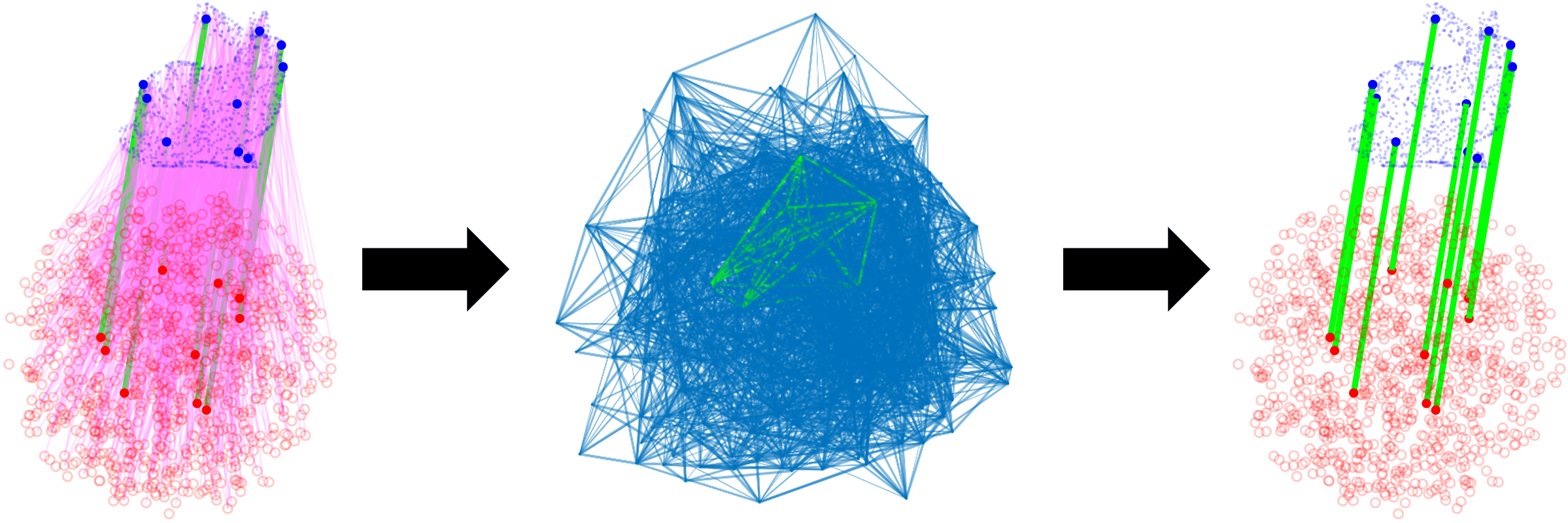

Collective Multi-type Entity Alignment Between Knowledge Graphs (WWW'20)

CG-MuAlign A reference implementation for "Collective Multi-type Entity Alignment Between Knowledge Graphs", published in WWW 2020. If you find our pa

Defending graph neural networks against adversarial attacks (NeurIPS 2020)

GNNGuard: Defending Graph Neural Networks against Adversarial Attacks Authors: Xiang Zhang ([email protected]), Marinka Zitnik (marinka@hms.

PyTorch implementation of paper: AdaAttN: Revisit Attention Mechanism in Arbitrary Neural Style Transfer, ICCV 2021.

AdaAttN: Revisit Attention Mechanism in Arbitrary Neural Style Transfer [Paper] [PyTorch Implementation] [Paddle Implementation] Overview This reposit

PyGCL: Graph Contrastive Learning Library for PyTorch

PyGCL is an open-source library for graph contrastive learning (GCL), which features modularized GCL components from published papers, standardized evaluation, and experiment management.

open-information-extraction-system, build open-knowledge-graph(SPO, subject-predicate-object) by pyltp(version==3.4.0)

中文开放信息抽取系统, open-information-extraction-system, build open-knowledge-graph(SPO, subject-predicate-object) by pyltp(version==3.4.0)

Investigating Attention Mechanism in 3D Point Cloud Object Detection (arXiv 2021)

Investigating Attention Mechanism in 3D Point Cloud Object Detection (arXiv 2021) This repository is for the following paper: "Investigating Attention

PyGCL: Graph Contrastive Learning Library for PyTorch

PyGCL: Graph Contrastive Learning for PyTorch PyGCL is an open-source library for graph contrastive learning (GCL), which features modularized GCL com

Scenic: A Jax Library for Computer Vision and Beyond

Scenic Scenic is a codebase with a focus on research around attention-based models for computer vision. Scenic has been successfully used to develop c

Code for paper "ASAP-Net: Attention and Structure Aware Point Cloud Sequence Segmentation"

ASAP-Net This project implements ASAP-Net of paper ASAP-Net: Attention and Structure Aware Point Cloud Sequence Segmentation (BMVC2020). Overview We i

Code for ICCV 2021 paper "Distilling Holistic Knowledge with Graph Neural Networks"

HKD Code for ICCV 2021 paper "Distilling Holistic Knowledge with Graph Neural Networks" cifia-100 result The implementation of compared methods are ba

The code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention.

CrossFormer This repository is the code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention. Introduction Existin

An efficient PyTorch implementation of the winning entry of the 2017 VQA Challenge.

Bottom-Up and Top-Down Attention for Visual Question Answering An efficient PyTorch implementation of the winning entry of the 2017 VQA Challenge. The

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

Train an RL agent to execute natural language instructions in a 3D Environment (PyTorch)

Gated-Attention Architectures for Task-Oriented Language Grounding This is a PyTorch implementation of the AAAI-18 paper: Gated-Attention Architecture

A PyTorch Implementation of Gated Graph Sequence Neural Networks (GGNN)

A PyTorch Implementation of GGNN This is a PyTorch implementation of the Gated Graph Sequence Neural Networks (GGNN) as described in the paper Gated G

Convolutional 2D Knowledge Graph Embeddings resources

ConvE Convolutional 2D Knowledge Graph Embeddings resources. Paper: Convolutional 2D Knowledge Graph Embeddings Used in the paper, but do not use thes

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

Bilinear attention networks for visual question answering

Bilinear Attention Networks This repository is the implementation of Bilinear Attention Networks for the visual question answering and Flickr30k Entit

A PyTorch Implementation of the Luna: Linear Unified Nested Attention

Unofficial PyTorch implementation of Luna: Linear Unified Nested Attention The quadratic computational and memory complexities of the Transformer’s at

GNN4Traffic - This is the repository for the collection of Graph Neural Network for Traffic Forecasting

GNN4Traffic - This is the repository for the collection of Graph Neural Network for Traffic Forecasting

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning

H-Transformer-1D Implementation of H-Transformer-1D, Transformer using hierarchical Attention for sequence learning with subquadratic costs. For now,

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

This is an official implementation of "Polarized Self-Attention: Towards High-quality Pixel-wise Regression"

Polarized Self-Attention: Towards High-quality Pixel-wise Regression This is an official implementation of: Huajun Liu, Fuqiang Liu, Xinyi Fan and Don

TilinGNN: Learning to Tile with Self-Supervised Graph Neural Network (SIGGRAPH 2020)

TilinGNN: Learning to Tile with Self-Supervised Graph Neural Network (SIGGRAPH 2020) About The goal of our research problem is illustrated below: give

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

Locally Enhanced Self-Attention: Rethinking Self-Attention as Local and Context Terms

LESA Introduction This repository contains the official implementation of Locally Enhanced Self-Attention: Rethinking Self-Attention as Local and Cont

Rubrix is a free and open-source tool for exploring and iterating on data for artificial intelligence projects.

Open-source tool for exploring, labeling, and monitoring data for AI projects

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

An implementation of DeepMind's Relational Recurrent Neural Networks in PyTorch.

relational-rnn-pytorch An implementation of DeepMind's Relational Recurrent Neural Networks (Santoro et al. 2018) in PyTorch. Relational Memory Core (

Pytorch implementation of face attention network

Face Attention Network Pytorch implementation of face attention network as described in Face Attention Network: An Effective Face Detector for the Occ

A PyTorch implementation of "Graph Wavelet Neural Network" (ICLR 2019)

Graph Wavelet Neural Network ⠀⠀ A PyTorch implementation of Graph Wavelet Neural Network (ICLR 2019). Abstract We present graph wavelet neural network

A PyTorch Implementation of "Watch Your Step: Learning Node Embeddings via Graph Attention" (NeurIPS 2018).

Attention Walk ⠀⠀ A PyTorch Implementation of Watch Your Step: Learning Node Embeddings via Graph Attention (NIPS 2018). Abstract Graph embedding meth

A PyTorch implementation of "Signed Graph Convolutional Network" (ICDM 2018).

SGCN ⠀ A PyTorch implementation of Signed Graph Convolutional Network (ICDM 2018). Abstract Due to the fact much of today's data can be represented as

A PyTorch Implementation of "SINE: Scalable Incomplete Network Embedding" (ICDM 2018).

Scalable Incomplete Network Embedding ⠀⠀ A PyTorch implementation of Scalable Incomplete Network Embedding (ICDM 2018). Abstract Attributed network em

A PyTorch implementation of "Graph Classification Using Structural Attention" (KDD 2018).

GAM ⠀⠀ A PyTorch implementation of Graph Classification Using Structural Attention (KDD 2018). Abstract Graph classification is a problem with practic

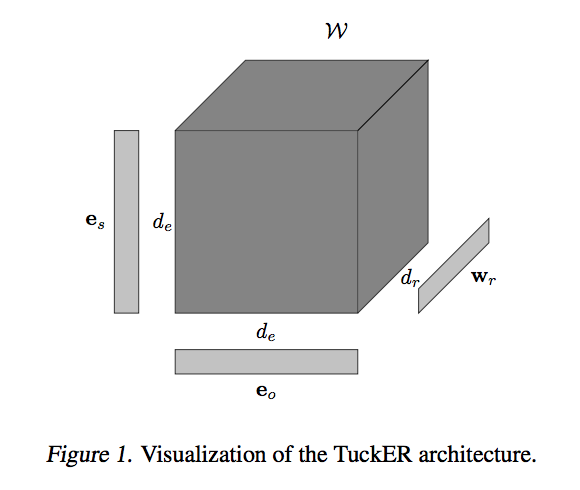

TuckER: Tensor Factorization for Knowledge Graph Completion

TuckER: Tensor Factorization for Knowledge Graph Completion This codebase contains PyTorch implementation of the paper: TuckER: Tensor Factorization f

A PyTorch implementation of "SimGNN: A Neural Network Approach to Fast Graph Similarity Computation" (WSDM 2019).

SimGNN ⠀⠀⠀ A PyTorch implementation of SimGNN: A Neural Network Approach to Fast Graph Similarity Computation (WSDM 2019). Abstract Graph similarity s

A PyTorch implementation of "Predict then Propagate: Graph Neural Networks meet Personalized PageRank" (ICLR 2019).

APPNP ⠀ A PyTorch implementation of Predict then Propagate: Graph Neural Networks meet Personalized PageRank (ICLR 2019). Abstract Neural message pass

An implementation of "MixHop: Higher-Order Graph Convolutional Architectures via Sparsified Neighborhood Mixing" (ICML 2019).

MixHop and N-GCN ⠀ A PyTorch implementation of "MixHop: Higher-Order Graph Convolutional Architectures via Sparsified Neighborhood Mixing" (ICML 2019)

A Pytorch implementation of "Splitter: Learning Node Representations that Capture Multiple Social Contexts" (WWW 2019).

Splitter ⠀⠀ A PyTorch implementation of Splitter: Learning Node Representations that Capture Multiple Social Contexts (WWW 2019). Abstract Recent inte

A PyTorch implementation of "Capsule Graph Neural Network" (ICLR 2019).

CapsGNN ⠀⠀ A PyTorch implementation of Capsule Graph Neural Network (ICLR 2019). Abstract The high-quality node embeddings learned from the Graph Neur

A PyTorch implementation of "Semi-Supervised Graph Classification: A Hierarchical Graph Perspective" (WWW 2019)

SEAL ⠀⠀⠀ A PyTorch implementation of Semi-Supervised Graph Classification: A Hierarchical Graph Perspective (WWW 2019) Abstract Node classification an

A PyTorch implementation of "Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks" (KDD 2019).

ClusterGCN ⠀⠀ A PyTorch implementation of "Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks" (KDD 2019). A

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

Implementation of Invariant Point Attention, used for coordinate refinement in the structure module of Alphafold2, as a standalone Pytorch module

Invariant Point Attention - Pytorch Implementation of Invariant Point Attention as a standalone module, which was used in the structure module of Alph

Code for the paper: "On the Bottleneck of Graph Neural Networks and Its Practical Implications"

On the Bottleneck of Graph Neural Networks and its Practical Implications This is the official implementation of the paper: On the Bottleneck of Graph

Deep RGB-D Saliency Detection with Depth-Sensitive Attention and Automatic Multi-Modal Fusion (CVPR'2021, Oral)

DSA^2 F: Deep RGB-D Saliency Detection with Depth-Sensitive Attention and Automatic Multi-Modal Fusion (CVPR'2021, Oral) This repo is the official imp

PyTorch code for our ECCV 2020 paper "Single Image Super-Resolution via a Holistic Attention Network"

HAN PyTorch code for our ECCV 2020 paper "Single Image Super-Resolution via a Holistic Attention Network" This repository is for HAN introduced in the

A heterogeneous entity-augmented academic language model based on Open Academic Graph (OAG)

Library | Paper | Slack We released two versions of OAG-BERT in CogDL package. OAG-BERT is a heterogeneous entity-augmented academic language model wh

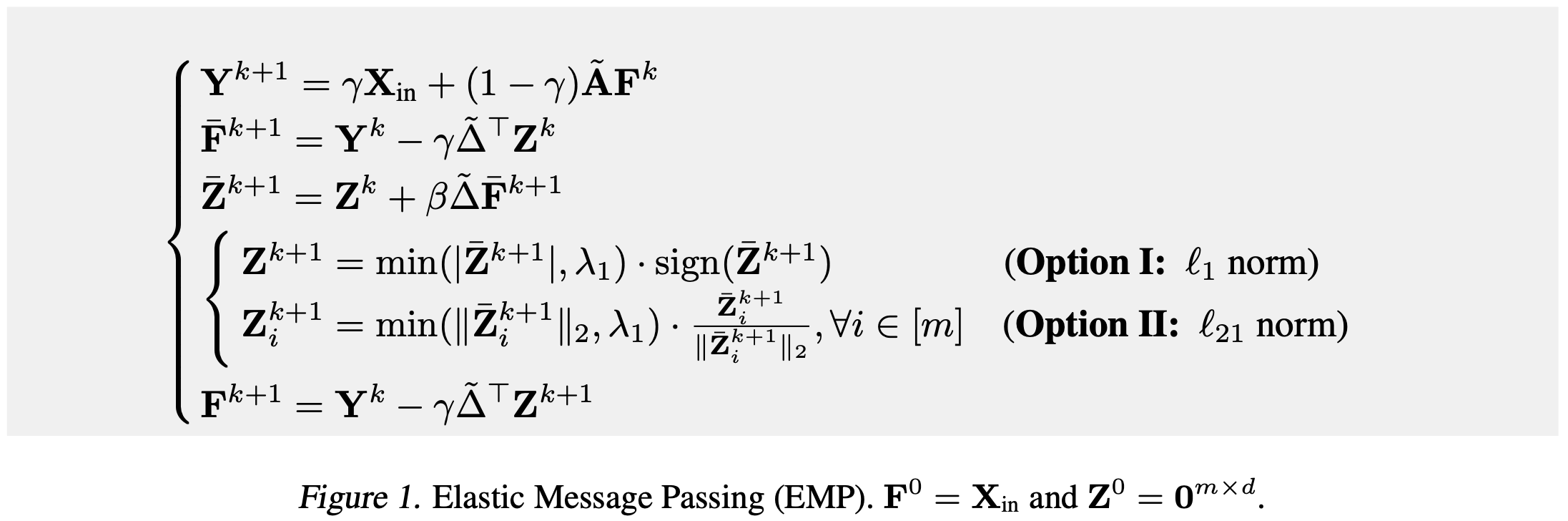

Official implementation of the ICML2021 paper "Elastic Graph Neural Networks"

ElasticGNN This repository includes the official implementation of ElasticGNN in the paper "Elastic Graph Neural Networks" [ICML 2021]. Xiaorui Liu, W

Parameterized Explainer for Graph Neural Network

PGExplainer This is a Tensorflow implementation of the paper: Parameterized Explainer for Graph Neural Network https://arxiv.org/abs/2011.04573 NeurIP

Plug and play transformer you can find network structure and official complete code by clicking List

Plug-and-play Module Plug and play transformer you can find network structure and official complete code by clicking List The following is to quickly

A collection of research papers and software related to explainability in graph machine learning.

A collection of research papers and software related to explainability in graph machine learning.

A collection of 100 Deep Learning images and visualizations

A collection of Deep Learning images and visualizations. The project has been developed by the AI Summer team and currently contains almost 100 images.

PyTorch code for our paper "Image Super-Resolution with Non-Local Sparse Attention" (CVPR2021).

Image Super-Resolution with Non-Local Sparse Attention This repository is for NLSN introduced in the following paper "Image Super-Resolution with Non-

A collection of 100 Deep Learning images and visualizations

A collection of Deep Learning images and visualizations. The project has been developed by the AI Summer team and currently contains almost 100 images.

🤖 A Python library for learning and evaluating knowledge graph embeddings

PyKEEN PyKEEN (Python KnowlEdge EmbeddiNgs) is a Python package designed to train and evaluate knowledge graph embedding models (incorporating multi-m

Official PyTorch implementation of UACANet: Uncertainty Aware Context Attention for Polyp Segmentation

UACANet: Uncertainty Aware Context Attention for Polyp Segmentation Official pytorch implementation of UACANet: Uncertainty Aware Context Attention fo

Monitor your Binance portfolio

Binance Report Bot The intent of this bot is to take a snapshot of your binance wallet, e.g. the current balances and store it for further plotting. I

2021-MICCAI-Progressively Normalized Self-Attention Network for Video Polyp Segmentation

2021-MICCAI-Progressively Normalized Self-Attention Network for Video Polyp Segmentation Authors: Ge-Peng Ji*, Yu-Cheng Chou*, Deng-Ping Fan, Geng Che

graph-theoretic framework for robust pairwise data association

CLIPPER: A Graph-Theoretic Framework for Robust Data Association Data association is a fundamental problem in robotics and autonomy. CLIPPER provides

Implementation of Self-supervised Graph-level Representation Learning with Local and Global Structure (ICML 2021).

Self-supervised Graph-level Representation Learning with Local and Global Structure Introduction This project is an implementation of ``Self-supervise

Code for KDD'20 "An Efficient Neighborhood-based Interaction Model for Recommendation on Heterogeneous Graph"

Heterogeneous INteract and aggreGatE (GraphHINGE) This is a pytorch implementation of GraphHINGE model. This is the experiment code in the following w

Codes for TS-CAM: Token Semantic Coupled Attention Map for Weakly Supervised Object Localization.

TS-CAM: Token Semantic Coupled Attention Map for Weakly SupervisedObject Localization This is the official implementaion of paper TS-CAM: Token Semant

box is a text-based visual programming language inspired by Unreal Engine Blueprint function graphs.

Box is a text-based visual programming language inspired by Unreal Engine blueprint function graphs. $ cat factorial.box ┌─ƒ(Factorial)───┐

Selective Wavelet Attention Learning for Single Image Deraining

SWAL Code for Paper "Selective Wavelet Attention Learning for Single Image Deraining" Prerequisites Python 3 PyTorch Models We provide the models trai

PyTorch implementation of Graph Convolutional Networks in Feature Space for Image Deblurring and Super-resolution, IJCNN 2021.

GCResNet PyTorch implementation of Graph Convolutional Networks in Feature Space for Image Deblurring and Super-resolution, IJCNN 2021. The code will

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

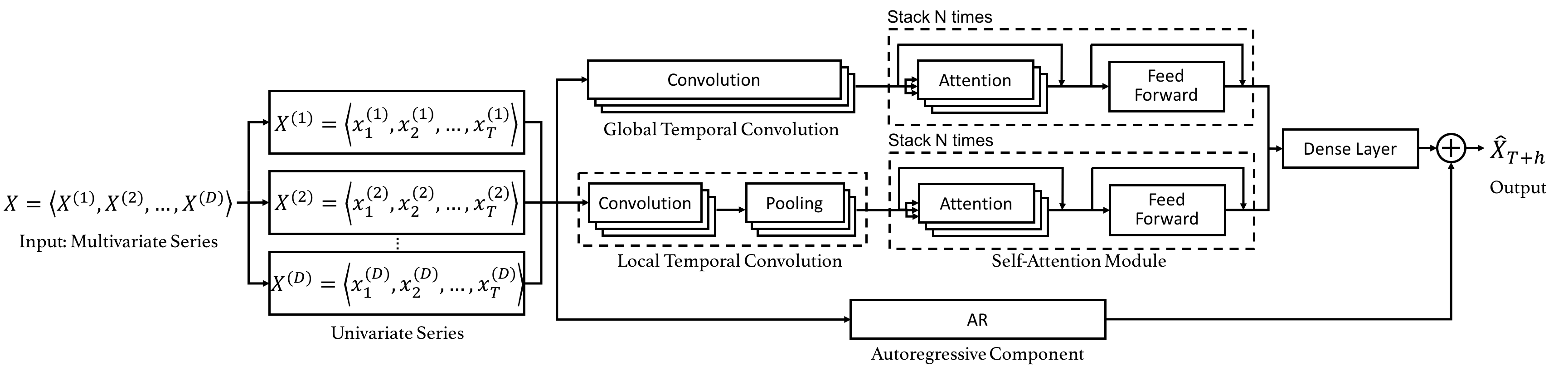

Code for the CIKM 2019 paper "DSANet: Dual Self-Attention Network for Multivariate Time Series Forecasting".

Dual Self-Attention Network for Multivariate Time Series Forecasting 20.10.26 Update: Due to the difficulty of installation and code maintenance cause

This is the source code for our ICLR2021 paper: Adaptive Universal Generalized PageRank Graph Neural Network.

GPRGNN This is the source code for our ICLR2021 paper: Adaptive Universal Generalized PageRank Graph Neural Network. Hidden state feature extraction i

Official PyTorch implementation of "Physics-aware Difference Graph Networks for Sparsely-Observed Dynamics".

Physics-aware Difference Graph Networks for Sparsely-Observed Dynamics This repository is the official PyTorch implementation of "Physics-aware Differ

Code for "Learning Canonical Representations for Scene Graph to Image Generation", Herzig & Bar et al., ECCV2020

Learning Canonical Representations for Scene Graph to Image Generation (ECCV 2020) Roei Herzig*, Amir Bar*, Huijuan Xu, Gal Chechik, Trevor Darrell, A

Multi-Task Temporal Shift Attention Networks for On-Device Contactless Vitals Measurement (NeurIPS 2020)

MTTS-CAN: Multi-Task Temporal Shift Attention Networks for On-Device Contactless Vitals Measurement Paper Xin Liu, Josh Fromm, Shwetak Patel, Daniel M

Pytorch implementation of “Recursive Non-Autoregressive Graph-to-Graph Transformer for Dependency Parsing with Iterative Refinement”

Graph-to-Graph Transformers Self-attention models, such as Transformer, have been hugely successful in a wide range of natural language processing (NL

Graph4nlp is the library for the easy use of Graph Neural Networks for NLP

Graph4NLP Graph4NLP is an easy-to-use library for R&D at the intersection of Deep Learning on Graphs and Natural Language Processing (i.e., DLG4NLP).

Implementation of Graph Transformer in Pytorch, for potential use in replicating Alphafold2

Graph Transformer - Pytorch Implementation of Graph Transformer in Pytorch, for potential use in replicating Alphafold2. This was recently used by bot

PyTorch implementation of ARM-Net: Adaptive Relation Modeling Network for Structured Data.

A ready-to-use framework of latest models for structured (tabular) data learning with PyTorch. Applications include recommendation, CRT prediction, healthcare analytics, and etc.

Attention mechanism with MNIST dataset

[TensorFlow] Attention mechanism with MNIST dataset Usage $ python run.py Result Training Loss graph. Test Each figure shows input digit, attention ma

Shared Attention for Multi-label Zero-shot Learning

Shared Attention for Multi-label Zero-shot Learning Overview This repository contains the implementation of Shared Attention for Multi-label Zero-shot

Cancer Drug Response Prediction via a Hybrid Graph Convolutional Network

DeepCDR Cancer Drug Response Prediction via a Hybrid Graph Convolutional Network This work has been accepted to ECCB2020 and was also published in the

codes for paper Combining Dynamic Local Context Focus and Dependency Cluster Attention for Aspect-level sentiment classification

DLCF-DCA codes for paper Combining Dynamic Local Context Focus and Dependency Cluster Attention for Aspect-level sentiment classification. submitted t

FinGAT: A Financial Graph Attention Networkto Recommend Top-K Profitable Stocks

FinGAT: A Financial Graph Attention Networkto Recommend Top-K Profitable Stocks This is our implementation for the paper: FinGAT: A Financial Graph At

Random Walk Graph Neural Networks

Random Walk Graph Neural Networks This repository is the official implementation of Random Walk Graph Neural Networks. Requirements Code is written in

JittorVis is a deep neural network computational graph visualization library based on Jittor.

JittorVis - Visual understanding of deep learning model.

Create a Neo4J graph of users and roles trust policies within an AWS Organization.

AWS_ORG_MAPPER This tool uses sso-oidc to authenticate to the AWS organization. Once authenticated the tool will attempt to enumerate all users and ro

Continuous Diffusion Graph Neural Network

We present Graph Neural Diffusion (GRAND) that approaches deep learning on graphs as a continuous diffusion process and treats Graph Neural Networks (GNNs) as discretisations of an underlying PDE.

graph learning code for ogb

The final code for OGB Installation Requirements: ogb=1.3.1 torch=1.7.0 torch-geometric=1.7.0 torch-scatter=2.0.6 torch-sparse=0.6.9 Baseline models T

Episodic Transformer (E.T.) is a novel attention-based architecture for vision-and-language navigation. E.T. is based on a multimodal transformer that encodes language inputs and the full episode history of visual observations and actions.

Episodic Transformers (E.T.) Episodic Transformer for Vision-and-Language Navigation Alexander Pashevich, Cordelia Schmid, Chen Sun Episodic Transform

Using pretrained language models for biomedical knowledge graph completion.

LMs for biomedical KG completion This repository contains code to run the experiments described in: Scientific Language Models for Biomedical Knowledg

LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant Self-At

![[ICML 2021]](https://github.com/Shen-Lab/GraphCL_Automated/raw/master/joao.png)

[ICML 2021] "Graph Contrastive Learning Automated" by Yuning You, Tianlong Chen, Yang Shen, Zhangyang Wang

Graph Contrastive Learning Automated PyTorch implementation for Graph Contrastive Learning Automated [talk] [poster] [appendix] Yuning You, Tianlong C

Leveraging Two Types of Global Graph for Sequential Fashion Recommendation, ICMR 2021

This is the repo for the paper: Leveraging Two Types of Global Graph for Sequential Fashion Recommendation Requirements OS: Ubuntu 16.04 or higher ver

Implementation of Uformer, Attention-based Unet, in Pytorch

Uformer - Pytorch Implementation of Uformer, Attention-based Unet, in Pytorch. It will only offer the concat-cross-skip connection. This repository wi

Shuffle Attention for MobileNetV3

SA-MobileNetV3 Shuffle Attention for MobileNetV3 Train Run the following command for train model on your own dataset: python train.py --dataset mnist

This is the official implementation for "Do Transformers Really Perform Bad for Graph Representation?".

Graphormer By Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng*, Guolin Ke, Di He*, Yanming Shen and Tie-Yan Liu. This repo is the official impl

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network

EPSANet:An Efficient Pyramid Split Attention Block on Convolutional Neural Network This repo contains the official Pytorch implementaion code and conf

Code + pre-trained models for the paper Keeping Your Eye on the Ball Trajectory Attention in Video Transformers

Motionformer This is an official pytorch implementation of paper Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers. In this rep

FANet - Real-time Semantic Segmentation with Fast Attention

FANet Real-time Semantic Segmentation with Fast Attention Ping Hu, Federico Perazzi, Fabian Caba Heilbron, Oliver Wang, Zhe Lin, Kate Saenko , Stan Sc

Official implement of "CAT: Cross Attention in Vision Transformer".

CAT: Cross Attention in Vision Transformer This is official implement of "CAT: Cross Attention in Vision Transformer". Abstract Since Transformer has