115 Repositories

Python mnist-vae-scaling Libraries

Official Pytorch and JAX implementation of "Efficient-VDVAE: Less is more"

The Official Pytorch and JAX implementation of "Efficient-VDVAE: Less is more" Arxiv preprint Louay Hazami · Rayhane Mama · Ragavan Thurairatn

How to train a CNN to 99% accuracy on MNIST in less than a second on a laptop

Training a NN to 99% accuracy on MNIST in 0.76 seconds A quick study on how fast you can reach 99% accuracy on MNIST with a single laptop. Our answer

The official implementation of Autoregressive Image Generation using Residual Quantization (CVPR '22)

Autoregressive Image Generation using Residual Quantization (CVPR 2022) The official implementation of "Autoregressive Image Generation using Residual

As-ViT: Auto-scaling Vision Transformers without Training

As-ViT: Auto-scaling Vision Transformers without Training [PDF] Wuyang Chen, Wei Huang, Xianzhi Du, Xiaodan Song, Zhangyang Wang, Denny Zhou In ICLR 2

![[SIGGRAPH'22] StyleGAN-XL: Scaling StyleGAN to Large Diverse Datasets](https://github.com/autonomousvision/stylegan_xl/raw/main/media/banner.png)

[SIGGRAPH'22] StyleGAN-XL: Scaling StyleGAN to Large Diverse Datasets

[Project] [PDF] This repository contains code for our SIGGRAPH'22 paper "StyleGAN-XL: Scaling StyleGAN to Large Diverse Datasets" by Axel Sauer, Katja

Everything you want about DP-Based Federated Learning, including Papers and Code. (Mechanism: Laplace or Gaussian, Dataset: femnist, shakespeare, mnist, cifar-10 and fashion-mnist. )

Differential Privacy (DP) Based Federated Learning (FL) Everything about DP-based FL you need is here. (所有你需要的DP-based FL的信息都在这里) Code Tip: the code o

Creating a custom CNN hypertunned architeture for the Fashion MNIST dataset with Python, Keras and Tensorflow.

custom-cnn-fashion-mnist Creating a custom CNN hypertunned architeture for the Fashion MNIST dataset with Python, Keras and Tensorflow. The following

Experimental Python implementation of OpenVINO Inference Engine (very slow, limited functionality). All codes are written in Python. Easy to read and modify.

PyOpenVINO - An Experimental Python Implementation of OpenVINO Inference Engine (minimum-set) Description The PyOpenVINO is a spin-off product from my

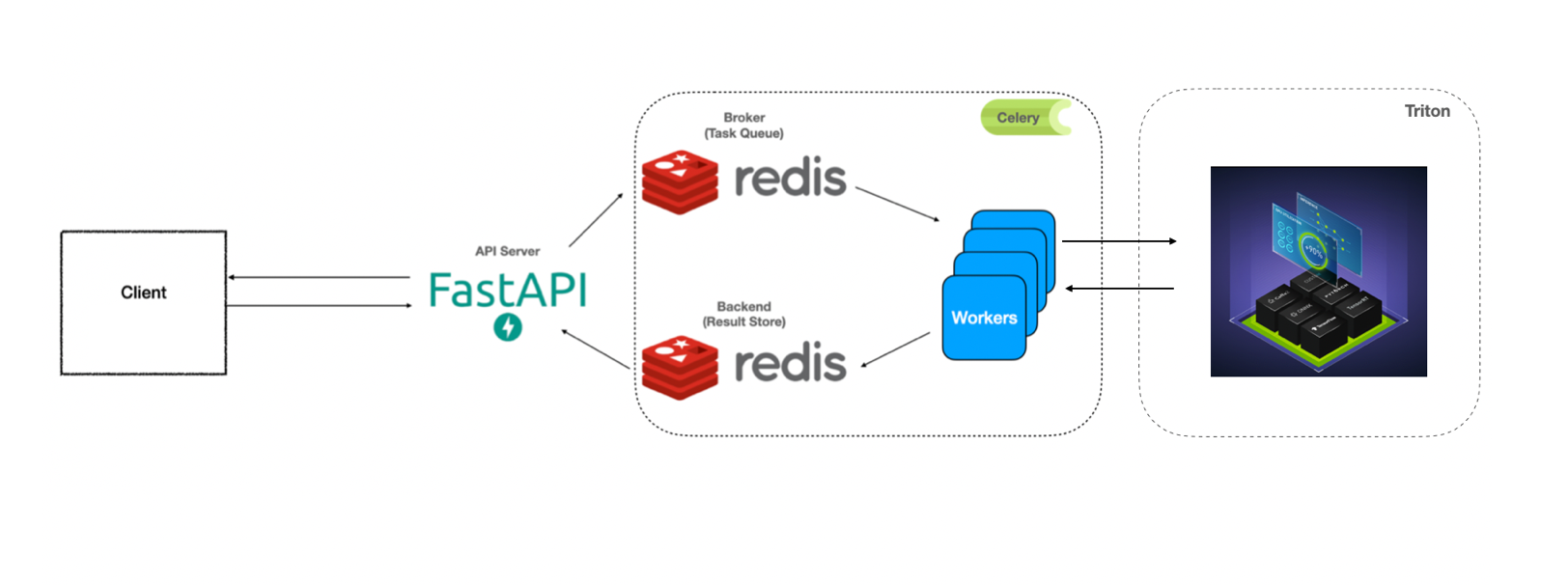

Simple example of FastAPI + Celery + Triton for benchmarking

You can see the previous work from: https://github.com/Curt-Park/producer-consumer-fastapi-celery https://github.com/Curt-Park/triton-inference-server

Split Variational AutoEncoder

Split-VAE Split Variational AutoEncoder Introduction This repository contains and implemementation of a Split Variational AutoEncoder (SVAE). In a SVA

Mnist API server w/ FastAPI

Mnist API server w/ FastAPI

Template repository for managing machine learning research projects built with PyTorch-Lightning

Tutorial Repository with a minimal example for showing how to deploy training across various compute infrastructure.

Dynamic vae - Dynamic VAE algorithm is used for anomaly detection of battery data

Dynamic VAE frame Automatic feature extraction can be achieved by probability di

PyTorch implementation of image classification models for CIFAR-10/CIFAR-100/MNIST/FashionMNIST/Kuzushiji-MNIST/ImageNet

PyTorch Image Classification Following papers are implemented using PyTorch. ResNet (1512.03385) ResNet-preact (1603.05027) WRN (1605.07146) DenseNet

LegoDNN: a block-grained scaling tool for mobile vision systems

Table of contents 1 Introduction 1.1 Major features 1.2 Architecture 2 Code and Installation 2.1 Code 2.2 Installation 3 Repository of DNNs in vision

Scaling Vision with Sparse Mixture of Experts

Scaling Vision with Sparse Mixture of Experts This repository contains the code for training and fine-tuning Sparse MoE models for vision (V-MoE) on I

classify fashion-mnist dataset with pytorch

Fashion-Mnist Classifier with PyTorch Inference 1- clone this repository: git clone https://github.com/Jhamed7/Fashion-Mnist-Classifier.git 2- Instal

Unofficial PyTorch reimplementation of the paper Swin Transformer V2: Scaling Up Capacity and Resolution

PyTorch reimplementation of the paper Swin Transformer V2: Scaling Up Capacity and Resolution [arXiv 2021].

FEMDA: Robust classification with Flexible Discriminant Analysis in heterogeneous data

FEMDA: Robust classification with Flexible Discriminant Analysis in heterogeneous data. Flexible EM-Inspired Discriminant Analysis is a robust supervised classification algorithm that performs well in noisy and contaminated datasets.

A PyTorch implementation of the continual learning experiments with deep neural networks

Brain-Inspired Replay A PyTorch implementation of the continual learning experiments with deep neural networks described in the following paper: Brain

Mina is a new cryptocurrency with a constant size blockchain, improving scaling while maintaining decentralization and security.

Mina Mina is the first cryptocurrency with a lightweight, constant-sized blockchain. This is the main source code repository for the Mina project. It

deep learning model with only python and numpy with test accuracy 99 % on mnist dataset and different optimization choices

deep_nn_model_with_only_python_100%_test_accuracy deep learning model with only python and numpy with test accuracy 99 % on mnist dataset and differen

Code for the paper "Jukebox: A Generative Model for Music"

Status: Archive (code is provided as-is, no updates expected) Jukebox Code for "Jukebox: A Generative Model for Music" Paper Blog Explorer Colab Insta

Fashion Entity Classification

Fashion-Entity-Classification - Fashion-MNIST is a dataset of Zalando's article images—consisting of a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28x28 grayscale image, associated with a label from 10 classes. Zalando intends Fashion-MNIST to serve as a direct drop-in replacement for the original MNIST dataset for benchmarking machine learning algorithms. It shares the same image size and structure of training and testing splits.

Benchmark VAE - Library for Variational Autoencoder benchmarking

Documentation pythae This library implements some of the most common (Variational) Autoencoder models. In particular it provides the possibility to pe

50-days-of-Statistics-for-Data-Science - This repository consist of a 50-day program

50-days-of-Statistics-for-Data-Science - This repository consist of a 50-day program. All the statistics required for the complete understanding of data science will be uploaded in this repository.

Scaling and Benchmarking Self-Supervised Visual Representation Learning

FAIR Self-Supervision Benchmark is deprecated. Please see VISSL, a ground-up rewrite of benchmark in PyTorch. FAIR Self-Supervision Benchmark This cod

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

A framework based on tornado for easier development, scaling up and maintenance

turbo 中文文档 Turbo is a framework for fast building web site and RESTFul api, based on tornado. Easily scale up and maintain Rapid development for RESTF

Evolutionary Population Curriculum for Scaling Multi-Agent Reinforcement Learning

Evolutionary Population Curriculum for Scaling Multi-Agent Reinforcement Learning This is the code for implementing the MADDPG algorithm presented in

Texture mapping with variational auto-encoders

vae-textures This is an experiment with using variational autoencoders (VAEs) to perform mesh parameterization. This was also my first project using J

PyTorch implementation of Uncertainty Estimation via Response Scaling for Pseudo-mask Noise Mitigation in Weakly-supervised Semantic Segmentation

Uncertainty Estimation via Response Scaling for Pseudo-mask Noise Mitigation in Weakly-supervised Semantic Segmentation Introduction This is a PyTorch

An implementation of quantum convolutional neural network with MindQuantum. Huawei, classifying MNIST dataset

关于实现的一点说明 山东大学 2020级 苏博南 www.subonan.com 文件说明 tools.py 这里面主要有两个函数: resize(a, lenb) 这其实是我找同学写的一个小算法hhh。给出一个$28\times 28$的方阵a,返回一个$lenb\times lenb$的方阵。因

Uncertainty Estimation via Response Scaling for Pseudo-mask Noise Mitigation in Weakly-supervised Semantic Segmentation

Uncertainty Estimation via Response Scaling for Pseudo-mask Noise Mitigation in Weakly-supervised Semantic Segmentation Introduction This is a PyTorch

Implementation of parameterized soft-exponential activation function.

Soft-Exponential-Activation-Function: Implementation of parameterized soft-exponential activation function. In this implementation, the parameters are

Rapid experimentation and scaling of deep learning models on molecular and crystal graphs.

LitMatter A template for rapid experimentation and scaling deep learning models on molecular and crystal graphs. How to use Clone this repository and

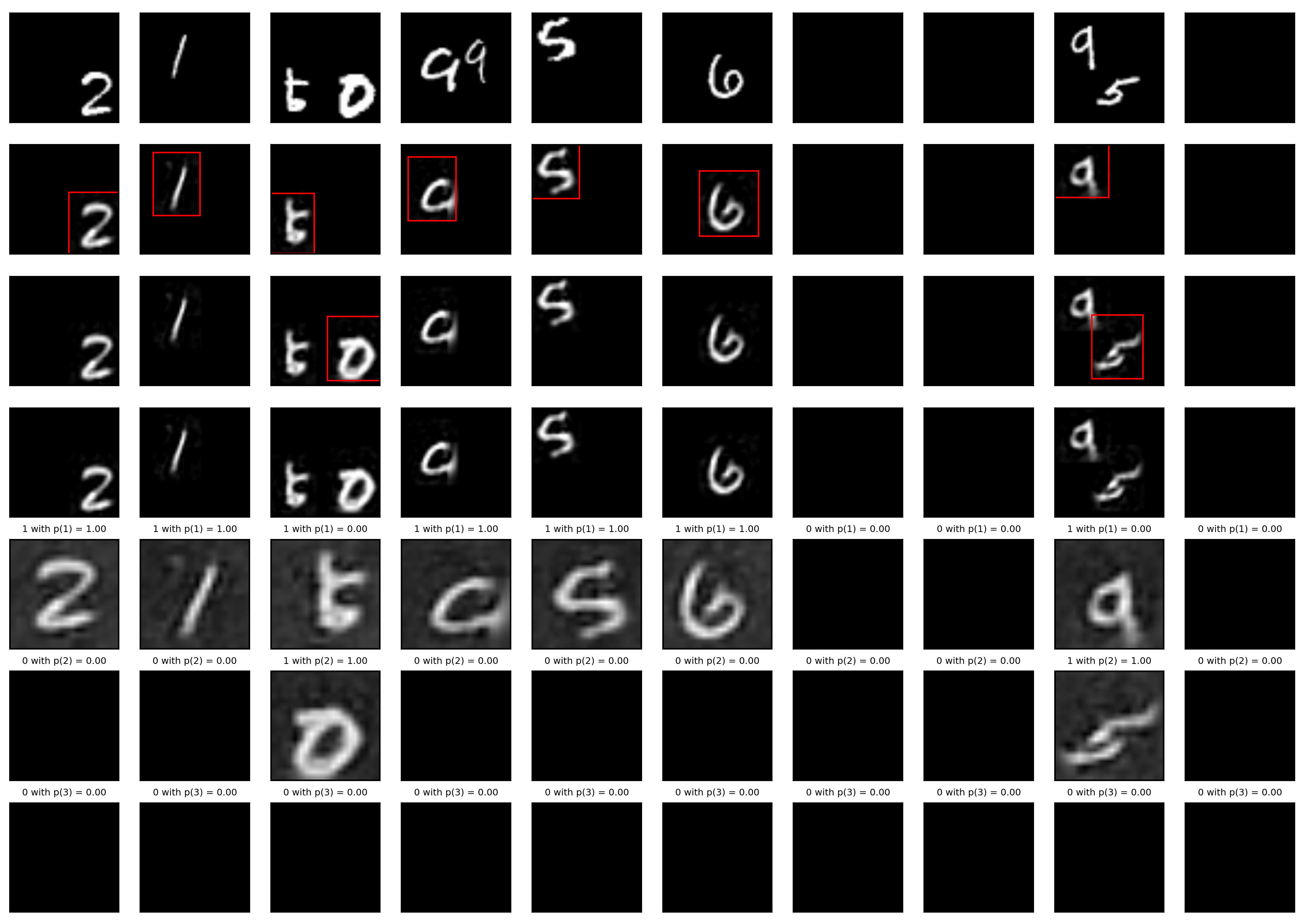

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

Semi-Supervised Learning with Ladder Networks in Keras. Get 98% test accuracy on MNIST with just 100 labeled examples !

Semi-Supervised Learning with Ladder Networks in Keras This is an implementation of Ladder Network in Keras. Ladder network is a model for semi-superv

SLAMP: Stochastic Latent Appearance and Motion Prediction

SLAMP: Stochastic Latent Appearance and Motion Prediction Official implementation of the paper SLAMP: Stochastic Latent Appearance and Motion Predicti

Code repository for "Reducing Underflow in Mixed Precision Training by Gradient Scaling" presented at IJCAI '20

Reducing Underflow in Mixed Precision Training by Gradient Scaling This project implements the gradient scaling method to improve the performance of m

Optimus: the first large-scale pre-trained VAE language model

Optimus: the first pre-trained Big VAE language model This repository contains source code necessary to reproduce the results presented in the EMNLP 2

Official implementation of the NeurIPS 2021 paper Online Learning Of Neural Computations From Sparse Temporal Feedback

Online Learning Of Neural Computations From Sparse Temporal Feedback This repository is the official implementation of the NeurIPS 2021 paper Online L

Official implementation of the NeurIPS'21 paper 'Conditional Generation Using Polynomial Expansions'.

Conditional Generation Using Polynomial Expansions Official implementation of the conditional image generation experiments as described on the NeurIPS

Cluttered MNIST Dataset

Cluttered MNIST Dataset A setup script will download MNIST and produce mnist/*.t7 files: luajit download_mnist.lua Example usage: local mnist_clutter

Research code for the paper "Variational Gibbs inference for statistical estimation from incomplete data".

Variational Gibbs inference (VGI) This repository contains the research code for Simkus, V., Rhodes, B., Gutmann, M. U., 2021. Variational Gibbs infer

DivNoising is an unsupervised denoising method to generate diverse denoised samples for any noisy input image. This repository contains the code to reproduce the results reported in the paper https://openreview.net/pdf?id=agHLCOBM5jP

DivNoising: Diversity Denoising with Fully Convolutional Variational Autoencoders Mangal Prakash1, Alexander Krull1,2, Florian Jug2 1Authors contribut

Universal Probability Distributions with Optimal Transport and Convex Optimization

Sylvester normalizing flows for variational inference Pytorch implementation of Sylvester normalizing flows, based on our paper: Sylvester normalizing

An interactive UMAP visualization of the MNIST data set.

Code for an interactive UMAP visualization of the MNIST data set. Demo at https://grantcuster.github.io/umap-explorer/. You can read more about the de

Train CPPNs as a Generative Model, using Generative Adversarial Networks and Variational Autoencoder techniques to produce high resolution images.

cppn-gan-vae tensorflow Train Compositional Pattern Producing Network as a Generative Model, using Generative Adversarial Networks and Variational Aut

GAN example for Keras. Cuz MNIST is too small and there should be something more realistic.

Keras-GAN-Animeface-Character GAN example for Keras. Cuz MNIST is too small and there should an example on something more realistic. Some results Trai

Ladder Variational Autoencoders (LVAE) in PyTorch

Ladder Variational Autoencoders (LVAE) PyTorch implementation of Ladder Variational Autoencoders (LVAE) [1]: where the variational distributions q at

Collection of generative models in Tensorflow

tensorflow-generative-model-collections Tensorflow implementation of various GANs and VAEs. Related Repositories Pytorch version Pytorch version of th

Python framework for creating and scaling up production of vector graphics assets.

Board Game Factory Contributors are welcome here! See the end of readme. This is a vector-graphics framework intended for creating and scaling up prod

Implementation of "Scaled-YOLOv4: Scaling Cross Stage Partial Network" using PyTorch framwork.

YOLOv4-large This is the implementation of "Scaled-YOLOv4: Scaling Cross Stage Partial Network" using PyTorch framwork. YOLOv4-CSP YOLOv4-tiny YOLOv4-

Minesweeper clone with 3 modes of difficulty, UI scaling and custom games feature.

Insect Sweeper Minesweeper clone with 3 modes of difficulty, UI scaling and custom games feature. Mines are replaced with random insects that a player

Companion repo of the UCC 2021 paper "Predictive Auto-scaling with OpenStack Monasca"

Predictive Auto-scaling with OpenStack Monasca Giacomo Lanciano*, Filippo Galli, Tommaso Cucinotta, Davide Bacciu, Andrea Passarella 2021 IEEE/ACM 14t

![[v1 (ISBI'21) + v2] MedMNIST: A Large-Scale Lightweight Benchmark for 2D and 3D Biomedical Image Classification](https://github.com/MedMNIST/MedMNIST/raw/main/assets/medmnistv2.jpg)

[v1 (ISBI'21) + v2] MedMNIST: A Large-Scale Lightweight Benchmark for 2D and 3D Biomedical Image Classification

MedMNIST Project (Website) | Dataset (Zenodo) | Paper (arXiv) | MedMNIST v1 (ISBI'21) Jiancheng Yang, Rui Shi, Donglai Wei, Zequan Liu, Lin Zhao, Bili

PyTorch Autoencoders - Implementing a Variational Autoencoder (VAE) Series in Pytorch.

PyTorch Autoencoders Implementing a Variational Autoencoder (VAE) Series in Pytorch. Inspired by this repository Model List check model paper conferen

A script that trains a model to recognize handwritten digits using the MNIST data set.

handwritten-digits-recognition A script that trains a model to recognize handwritten digits using the MNIST data set. Then it loads external files and

Code for the paper "Regularizing Variational Autoencoder with Diversity and Uncertainty Awareness"

DU-VAE This is the pytorch implementation of the paper "Regularizing Variational Autoencoder with Diversity and Uncertainty Awareness" Acknowledgement

Official code for On Path Integration of Grid Cells: Group Representation and Isotropic Scaling (NeurIPS 2021)

On Path Integration of Grid Cells: Group Representation and Isotropic Scaling This repo contains the official implementation for the paper On Path Int

For auto aligning, cropping, and scaling HR and LR images for training image based neural networks

ImgAlign For auto aligning, cropping, and scaling HR and LR images for training image based neural networks Usage Make sure OpenCV is installed, 'pip

This repository contains the code for the paper ``Identifiable VAEs via Sparse Decoding''.

Sparse VAE This repository contains the code for the paper ``Identifiable VAEs via Sparse Decoding''. Data Sources The datasets used in this paper wer

[WACV 2022] Contextual Gradient Scaling for Few-Shot Learning

CxGrad - Official PyTorch Implementation Contextual Gradient Scaling for Few-Shot Learning Sanghyuk Lee, Seunghyun Lee, and Byung Cheol Song In WACV 2

A MNIST-like fashion product database. Benchmark

Fashion-MNIST Table of Contents Why we made Fashion-MNIST Get the Data Usage Benchmark Visualization Contributing Contact Citing Fashion-MNIST License

Official Pytorch implementation of the paper "Action-Conditioned 3D Human Motion Synthesis with Transformer VAE", ICCV 2021

ACTOR Official Pytorch implementation of the paper "Action-Conditioned 3D Human Motion Synthesis with Transformer VAE", ICCV 2021. Please visit our we

Code image classification of MNIST dataset using different architectures: simple linear NN, autoencoder, and highway network

Deep Learning for image classification pip install -r http://webia.lip6.fr/~baskiotisn/requirements-amal.txt Train an autoencoder python3 train_auto

Simple PyTorch implementations of Badnets on MNIST and CIFAR10.

Simple PyTorch implementations of Badnets on MNIST and CIFAR10.

This repository outlines deploying a local Kubeflow v1.3 instance on microk8s and deploying a simple MNIST classifier using KFServing.

Zero to Inference with Kubeflow Getting Started This repository houses all of the tools, utilities, and example pipeline implementations for exploring

Multiple style transfer via variational autoencoder

ST-VAE Multiple style transfer via variational autoencoder By Zhi-Song Liu, Vicky Kalogeiton and Marie-Paule Cani This repo only provides simple testi

LSTM-VAE Implementation and Relevant Evaluations

LSTM-VAE Implementation and Relevant Evaluations Before using any file in this repository, please create two directories under the root directory name

PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech

PortaSpeech - PyTorch Implementation PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech. Model Size Module Nor

PortaSpeech - PyTorch Implementation

PortaSpeech - PyTorch Implementation PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech. Model Size Module Nor

TensorFlow implementation of "Variational Inference with Normalizing Flows"

[TensorFlow 2] Variational Inference with Normalizing Flows TensorFlow implementation of "Variational Inference with Normalizing Flows" [1] Concept Co

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

Recurrent Variational Autoencoder that generates sequential data implemented with pytorch

Pytorch Recurrent Variational Autoencoder Model: This is the implementation of Samuel Bowman's Generating Sentences from a Continuous Space with Kim's

Official pytorch implementation of "Scaling-up Disentanglement for Image Translation", ICCV 2021.

Official pytorch implementation of "Scaling-up Disentanglement for Image Translation", ICCV 2021.

Brainfuck rollup scaling experiment for fun

Optimistic Brainfuck Ever wanted to run Brainfuck on ethereum? Don't ask, now you can! And at a fraction of the cost, thanks to optimistic rollup tech

Demonstrates how to divide a DL model into multiple IR model files (division) and introduce a simplest way to implement a custom layer works with OpenVINO IR models.

Demonstration of OpenVINO techniques - Model-division and a simplest-way to support custom layers Description: Model Optimizer in Intel(r) OpenVINO(tm

Classifying audio using Wavelet transform and deep learning

Audio Classification using Wavelet Transform and Deep Learning A step-by-step tutorial to classify audio signals using continuous wavelet transform (C

Huggingface package for the discrete VAE used for DALL-E.

DALL-E-Tokenizer Huggingface package for the discrete VAE used for DALL-E.

Base pretrained models and datasets in pytorch (MNIST, SVHN, CIFAR10, CIFAR100, STL10, AlexNet, VGG16, VGG19, ResNet, Inception, SqueezeNet)

This is a playground for pytorch beginners, which contains predefined models on popular dataset. Currently we support mnist, svhn cifar10, cifar100 st

PyTorch Implementation of CycleGAN and SSGAN for Domain Transfer (Minimal)

MNIST-to-SVHN and SVHN-to-MNIST PyTorch Implementation of CycleGAN and Semi-Supervised GAN for Domain Transfer. Prerequites Python 3.5 PyTorch 0.1.12

Implement Decoupled Neural Interfaces using Synthetic Gradients in Pytorch

disclaimer: this code is modified from pytorch-tutorial Image classification with synthetic gradient in Pytorch I implement the Decoupled Neural Inter

Collection of generative models in Pytorch version.

pytorch-generative-model-collections Original : [Tensorflow version] Pytorch implementation of various GANs. This repository was re-implemented with r

PyTorch experiments with the Zalando fashion-mnist dataset

zalando-pytorch PyTorch experiments with the Zalando fashion-mnist dataset Project Organization ├── LICENSE ├── Makefile - Makefile with co

Code for "Finetuning Pretrained Transformers into Variational Autoencoders"

transformers-into-vaes Code for Finetuning Pretrained Transformers into Variational Autoencoders (our submission to NLP Insights Workshop 2021). Gathe

MNIST, but with Bezier curves instead of pixels

bezier-mnist This is a work-in-progress vector version of the MNIST dataset. Samples Here are some samples from the training set. Note that, while the

PyTorch implementation of NIPS 2017 paper Dynamic Routing Between Capsules

Dynamic Routing Between Capsules - PyTorch implementation PyTorch implementation of NIPS 2017 paper Dynamic Routing Between Capsules from Sara Sabour,

Random Erasing Data Augmentation. Experiments on CIFAR10, CIFAR100 and Fashion-MNIST

Random Erasing Data Augmentation =============================================================== black white random This code has the source code for

Official code for UnICORNN (ICML 2021)

UnICORNN (Undamped Independent Controlled Oscillatory RNN) [ICML 2021] This repository contains the implementation to reproduce the numerical experime

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

Advanced Deep Learning with TensorFlow 2 and Keras (Updated for 2nd Edition)

Advanced Deep Learning with TensorFlow 2 and Keras (Updated for 2nd Edition)

Annotated, understandable, and visually interpretable PyTorch implementations of: VAE, BIRVAE, NSGAN, MMGAN, WGAN, WGANGP, LSGAN, DRAGAN, BEGAN, RaGAN, InfoGAN, fGAN, FisherGAN

Overview PyTorch 0.4.1 | Python 3.6.5 Annotated implementations with comparative introductions for minimax, non-saturating, wasserstein, wasserstein g

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

A PyTorch implementation of " EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks."

EfficientNet A PyTorch implementation of EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. [arxiv] [Official TF Repo] Implemen

Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow.

Generative Models Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow. Also present here are RBM and Helmholtz Machine. Note: Gen

The official implementation of VAENAR-TTS, a VAE based non-autoregressive TTS model.

VAENAR-TTS This repo contains code accompanying the paper "VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis". Sa

The official implementation of VAENAR-TTS, a VAE based non-autoregressive TTS model.

VAENAR-TTS This repo contains code accompanying the paper "VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis". Sa