374 Repositories

Python hyper-parameter-optimization Libraries

Second Order Optimization and Curvature Estimation with K-FAC in JAX.

KFAC-JAX - Second Order Optimization with Approximate Curvature in JAX Installation | Quickstart | Documentation | Examples | Citing KFAC-JAX KFAC-JAX

RuDOLPH: One Hyper-Modal Transformer can be creative as DALL-E and smart as CLIP

[Paper] [Хабр] [Model Card] [Colab] [Kaggle] RuDOLPH 🦌 🎄 ☃️ One Hyper-Modal Transformer can be creative as DALL-E and smart as CLIP Russian Diffusio

Easy-to-use library to boost AI inference leveraging state-of-the-art optimization techniques.

NEW RELEASE How Nebullvm Works • Tutorials • Benchmarks • Installation • Get Started • Optimization Examples Discord | Website | LinkedIn | Twitter Ne

Code for T-Few from "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning"

T-Few This repository contains the official code for the paper: "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learni

Code for intrusion detection system (IDS) development using CNN models and transfer learning

Intrusion-Detection-System-Using-CNN-and-Transfer-Learning This is the code for the paper entitled "A Transfer Learning and Optimized CNN Based Intrus

Python code to control laboratory hardware and perform Bayesian reaction optimization on the MIT Make-It system for chemical synthesis

Description This repository contains code accompanying the following paper on the Make-It robotic flow chemistry platform developed by the Jensen Rese

A toolkit for Lagrangian-based constrained optimization in Pytorch

Cooper About Cooper is a toolkit for Lagrangian-based constrained optimization in Pytorch. This library aims to encourage and facilitate the study of

Machine learning beginner to Kaggle competitor in 30 days. Non-coders welcome. The program starts Monday, August 2, and lasts four weeks. It's designed for people who want to learn machine learning.

30-Days-of-ML-Kaggle 🔥 About the Hands On Program 💻 Machine learning beginner → Kaggle competitor in 30 days. Non-coders welcome The program starts

🏎️ Accelerate training and inference of 🤗 Transformers with easy to use hardware optimization tools

Hugging Face Optimum 🤗 Optimum is an extension of 🤗 Transformers, providing a set of performance optimization tools enabling maximum efficiency to t

An end-to-end framework for mixed-integer optimization with data-driven learned constraints.

OptiCL OptiCL is an end-to-end framework for mixed-integer optimization (MIO) with data-driven learned constraints. We address a problem setting in wh

Code for the Findings of NAACL 2022(Long Paper): AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks

AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks arXiv link: upcoming To be published in Findings of NA

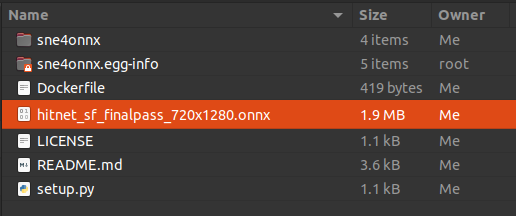

A very simple tool for situations where optimization with onnx-simplifier would exceed the Protocol Buffers upper file size limit of 2GB, or simply to separate onnx files to any size you want.

sne4onnx A very simple tool for situations where optimization with onnx-simplifier would exceed the Protocol Buffers upper file size limit of 2GB, or

Athena is the only tool that you will ever need to optimize your portfolio.

Athena Portfolio optimization is the process of selecting the best portfolio (asset distribution), out of the set of all portfolios being considered,

Multiple-criteria decision-making (MCDM) with Electre, Promethee, Weighted Sum and Pareto

EasyMCDM - Quick Installation methods Install with PyPI Once you have created your Python environment (Python 3.6+) you can simply type: pip3 install

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

Autolfads-tf2 - A TensorFlow 2.0 implementation of Latent Factor Analysis via Dynamical Systems (LFADS) and AutoLFADS

autolfads-tf2 A TensorFlow 2.0 implementation of LFADS and AutoLFADS. Installati

OpenDelta - An Open-Source Framework for Paramter Efficient Tuning.

OpenDelta is a toolkit for parameter efficient methods (we dub it as delta tuning), by which users could flexibly assign (or add) a small amount parameters to update while keeping the most paramters frozen. By using OpenDelta, users could easily implement prefix-tuning, adapters, Lora, or any other types of delta tuning with preferred PTMs.

B2EA: An Evolutionary Algorithm Assisted by Two Bayesian Optimization Modules for Neural Architecture Search

B2EA: An Evolutionary Algorithm Assisted by Two Bayesian Optimization Modules for Neural Architecture Search This is the offical implementation of the

Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration

This repo is for the paper: Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration The DAC environment is based on the Dynam

Code for our WACV 2022 paper "Hyper-Convolution Networks for Biomedical Image Segmentation"

Hyper-Convolution Networks for Biomedical Image Segmentation Code for our WACV 2022 paper "Hyper-Convolution Networks for Biomedical Image Segmentatio

Learning Features with Parameter-Free Layers, ICLR 2022

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

OMLT: Optimization and Machine Learning Toolkit

OMLT is a Python package for representing machine learning models (neural networks and gradient-boosted trees) within the Pyomo optimization environment.

LightGBM + Optuna: no brainer

AutoLGBM LightGBM + Optuna: no brainer auto train lightgbm directly from CSV files auto tune lightgbm using optuna auto serve best lightgbm model usin

A curated list of automated deep learning (including neural architecture search and hyper-parameter optimization) resources.

Awesome AutoDL A curated list of automated deep learning related resources. Inspired by awesome-deep-vision, awesome-adversarial-machine-learning, awe

Monocular 3D Object Detection: An Extrinsic Parameter Free Approach (CVPR2021)

Monocular 3D Object Detection: An Extrinsic Parameter Free Approach (CVPR2021) Yunsong Zhou, Yuan He, Hongzi Zhu, Cheng Wang, Hongyang Li, Qinhong Jia

Learning Features with Parameter-Free Layers (ICLR 2022)

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

SGPT: Multi-billion parameter models for semantic search

SGPT: Multi-billion parameter models for semantic search This repository contains code, results and pre-trained models for the paper SGPT: Multi-billi

Implementation of linesearch Optimization Algorithms in Python

Nonlinear Optimization Algorithms During my time as Scientific Assistant at the Karlsruhe Institute of Technology (Germany) I implemented various Opti

An open-source hyper-heuristic framework for multi-objective optimization

MOEA-HH An open-source hyper-heuristic framework for multi-objective optimization. Introduction The multi-objective optimization technique is widely u

Code Impementation for "Mold into a Graph: Efficient Bayesian Optimization over Mixed Spaces"

Code Impementation for "Mold into a Graph: Efficient Bayesian Optimization over Mixed Spaces" This repo contains the implementation of GEBO algorithm.

FLIght SCheduling OPTimization - a simple optimization library for flight scheduling and related problems in the discrete domain

Fliscopt FLIght SCheduling OPTimization 🛫 or fliscopt is a simple optimization library for flight scheduling and related problems in the discrete dom

This repository is the code of the paper Accelerating Deep Reinforcement Learning for Digital Twin Network Optimization with Evolutionary Strategies

ES_OTN_Public Carlos Güemes Palau, Paul Almasan, Pere Barlet Ros, Albert Cabellos Aparicio Contact us: [email protected], contactus@bn

Filtering variational quantum algorithms for combinatorial optimization

Current gate-based quantum computers have the potential to provide a computational advantage if algorithms use quantum hardware efficiently.

Crypto Portfolio Clustering with and without optimization techniques (elbow method, PCA).

Crypto Portfolio Clustering Crypto Portfolio Clustering with and without optimization techniques (elbow method, PCA). Analysis This is an anlysis of c

SuperSonic, a new open-source framework to allow compiler developers to integrate RL into compilers easily, regardless of their RL expertise

Automating reinforcement learning architecture design for code optimization. Che

An Empirical Review of Optimization Techniques for Quantum Variational Circuits

QVC Optimizer Review Code for the paper "An Empirical Review of Optimization Techniques for Quantum Variational Circuits". Each of the python files ca

RRT algorithm and its optimization

RRT-Algorithm-Visualisation This is a project that aims to develop upon the RRT

This repository contains the source code for the paper Tutorial on amortized optimization for learning to optimize over continuous domains by Brandon Amos

Tutorial on Amortized Optimization This repository contains the source code for the paper Tutorial on amortized optimization for learning to optimize

LINUX-AOS (Automatic Optimization System)

LINUX-AOS (Automatic Optimization System)

Fibonacci Method Gradient Descent

An implementation of the Fibonacci method for gradient descent, featuring a TKinter GUI for inputting the function / parameters to be examined and a matplotlib plot of the function and results.

TensorFlow implementation for Bayesian Modeling and Uncertainty Quantification for Learning to Optimize: What, Why, and How

Bayesian Modeling and Uncertainty Quantification for Learning to Optimize: What, Why, and How TensorFlow implementation for Bayesian Modeling and Unce

HEAM: High-Efficiency Approximate Multiplier Optimization for Deep Neural Networks

Approximate Multiplier by HEAM What's HEAM? HEAM is a general optimization method to generate high-efficiency approximate multipliers for specific app

Improved Fitness Optimization Landscapes for Sequence Design

ReLSO Improved Fitness Optimization Landscapes for Sequence Design Description Citation How to run Training models Original data source Description In

In the AI for TSP competition we try to solve optimization problems using machine learning.

AI for TSP Competition Goal In the AI for TSP competition we try to solve optimization problems using machine learning. The competition will be hosted

Born-Infeld (BI) for AI: Energy-Conserving Descent (ECD) for Optimization

Born-Infeld (BI) for AI: Energy-Conserving Descent (ECD) for Optimization This repository contains the code for the BBI optimizer, introduced in the p

Hyperparameters tuning and features selection are two common steps in every machine learning pipeline.

shap-hypetune A python package for simultaneous Hyperparameters Tuning and Features Selection for Gradient Boosting Models. Overview Hyperparameters t

Tools for mathematical optimization region

Tools for mathematical optimization region

TheTimeMachine - Weaponizing WaybackUrls for Recon, BugBounties , OSINT, Sensitive Endpoints and what not

The Time Machine - Weaponizing WaybackUrls for Recon, BugBounties , OSINT, Sensi

Pynomial - a lightweight python library for implementing the many confidence intervals for the risk parameter of a binomial model

Pynomial - a lightweight python library for implementing the many confidence intervals for the risk parameter of a binomial model

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML)

package tests docs license stats support This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML

Optuna is an automatic hyperparameter optimization software framework, particularly designed for machine learning

Optuna is an automatic hyperparameter optimization software framework, particularly designed for machine learning. It features an imperative, define-by-run style user API.

Climin is a Python package for optimization, heavily biased to machine learning scenarios

climin climin is a Python package for optimization, heavily biased to machine learning scenarios distributed under the BSD 3-clause license. It works

A handy tool for common machine learning models' hyper-parameter tuning.

Common machine learning models' hyperparameter tuning This repo is for a collection of hyper-parameter tuning for "common" machine learning models, in

Visual Python and C++ nanosecond profiler, logger, tests enabler

Look into Palanteer and get an omniscient view of your program Palanteer is a set of lean and efficient tools to improve the quality of software, for

Qcover is an open source effort to help exploring combinatorial optimization problems in Noisy Intermediate-scale Quantum(NISQ) processor.

Qcover is an open source effort to help exploring combinatorial optimization problems in Noisy Intermediate-scale Quantum(NISQ) processor. It is devel

A computational optimization project towards the goal of gerrymandering the results of a hypothetical election in the UK.

A computational optimization project towards the goal of gerrymandering the results of a hypothetical election in the UK.

PyGRANSO: A PyTorch-enabled port of GRANSO with auto-differentiation

PyGRANSO PyGRANSO: A PyTorch-enabled port of GRANSO with auto-differentiation Please check https://ncvx.org/PyGRANSO for detailed instructions (introd

Official implementation for the paper "SAPE: Spatially-Adaptive Progressive Encoding for Neural Optimization".

SAPE Project page Paper Official implementation for the paper "SAPE: Spatially-Adaptive Progressive Encoding for Neural Optimization". Environment Cre

PEPit is a package enabling computer-assisted worst-case analyses of first-order optimization methods.

PEPit: Performance Estimation in Python This open source Python library provides a generic way to use PEP framework in Python. Performance estimation

PyTorch implementation of "Optimization Planning for 3D ConvNets"

Optimization-Planning-for-3D-ConvNets Code for the ICML 2021 paper: Optimization Planning for 3D ConvNets. Authors: Zhaofan Qiu, Ting Yao, Chong-Wah N

Use graph-based analysis to re-classify stocks and to improve Markowitz portfolio optimization

Dynamic Stock Industrial Classification Use graph-based analysis to re-classify stocks and experiment different re-classification methodologies to imp

Course on computational design, non-linear optimization, and dynamics of soft systems at UIUC.

Computational Design and Dynamics of Soft Systems · This is a repository that contains the source code for generating the lecture notes, handouts, exe

End-To-End Optimization of LiDAR Beam Configuration

End-To-End Optimization of LiDAR Beam Configuration arXiv | IEEE Xplore This repository is the official implementation of the paper: End-To-End Optimi

Aircraft design optimization made fast through modern automatic differentiation

Aircraft design optimization made fast through modern automatic differentiation. Plug-and-play analysis tools for aerodynamics, propulsion, structures, trajectory design, and much more.

Designed a greedy algorithm based on Markov sequential decision-making process in MATLAB/Python to optimize using Gurobi solver

Designed a greedy algorithm based on Markov sequential decision-making process in MATLAB/Python to optimize using Gurobi solver, the wheel size, gear shifting sequence by modeling drivetrain constraints to achieve maximum laps in a race with a 2-hour time window.

Financial portfolio optimisation in python, including classical efficient frontier, Black-Litterman, Hierarchical Risk Parity

PyPortfolioOpt has recently been published in the Journal of Open Source Software 🎉 PyPortfolioOpt is a library that implements portfolio optimizatio

GPOEO is a micro-intrusive GPU online energy optimization framework for iterative applications

GPOEO GPOEO is a micro-intrusive GPU online energy optimization framework for iterative applications. We also implement ODPP [1] as a comparison. [1]

A hyper-user friendly bot framework built on hikari

Framework A hyper-user friendly bot framework built on hikari. Framework is based off the blocking discord library disco, In both modularity and struc

RuDOLPH: One Hyper-Modal Transformer can be creative as DALL-E and smart as CLIP

[Paper] [Хабр] [Model Card] [Colab] [Kaggle] RuDOLPH 🦌 🎄 ☃️ One Hyper-Modal Tr

Medical appointments No-Show classifier

Medical Appointments No-shows Why do 20% of patients miss their scheduled appointments? A person makes a doctor appointment, receives all the instruct

Open-source implementation of Google Vizier for hyper parameters tuning

Advisor Introduction Advisor is the hyper parameters tuning system for black box optimization. It is the open-source implementation of Google Vizier w

deep learning model with only python and numpy with test accuracy 99 % on mnist dataset and different optimization choices

deep_nn_model_with_only_python_100%_test_accuracy deep learning model with only python and numpy with test accuracy 99 % on mnist dataset and differen

Gpt2-WebAPI - The objective of this API is to provide the 3 best possible responses to sentences that the user would input via http GET request as a parameter

This repository is a modification of: https://github.com/openai/gpt-2 for our sp

Machine Learning in Asset Management (by @firmai)

Machine Learning in Asset Management If you like this type of content then visit ML Quant site below: https://www.ml-quant.com/ Part One Follow this l

Benchmarks for Model-Based Optimization

Design-Bench Design-Bench is a benchmarking framework for solving automatic design problems that involve choosing an input that maximizes a black-box

We provided a matlab implementation for an evolutionary multitasking AUC optimization framework (EMTAUC).

EMTAUC We provided a matlab implementation for an evolutionary multitasking AUC optimization framework (EMTAUC). In this code, SBGA is considered a ba

Data-Scrapping SEO - the project uses various data scrapping and Google autocompletes API tools to provide relevant points of different keywords so that search engines can be optimized

Data-Scrapping SEO - the project uses various data scrapping and Google autocompletes API tools to provide relevant points of different keywords so that search engines can be optimized; as this information is gathered, the marketing team can target the top keywords to get your company’s website higher on a results page.

Parameter-ensemble-differential-evolution - Shows how to do parameter ensembling using differential evolution.

Ensembling parameters with differential evolution This repository shows how to ensemble parameters of two trained neural networks using differential e

AutoGluon: AutoML for Text, Image, and Tabular Data

AutoML for Text, Image, and Tabular Data AutoGluon automates machine learning tasks enabling you to easily achieve strong predictive performance in yo

Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai

Coursera-deep-learning-specialization - Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai: (i) Neural Networks and Deep Learning; (ii) Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization; (iii) Structuring Machine Learning Projects; (iv) Convolutional Neural Networks; (v) Sequence Models

Implementation of hyperparameter optimization/tuning methods for machine learning & deep learning models

Hyperparameter Optimization of Machine Learning Algorithms This code provides a hyper-parameter optimization implementation for machine learning algor

Optimizers-visualized - Visualization of different optimizers on local minimas and saddle points.

Optimizers Visualized Visualization of how different optimizers handle mathematical functions for optimization. Contents Installation Usage Functions

Codeflare - Scale complex AI/ML pipelines anywhere

Scale complex AI/ML pipelines anywhere CodeFlare is a framework to simplify the integration, scaling and acceleration of complex multi-step analytics

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend This project acts as both a tuto

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation .

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation .

The implementation of Parameter Differentiation based Multilingual Neural Machine Translation

The implementation of Parameter Differentiation based Multilingual Neural Machin

NOMAD - A blackbox optimization software

################################################################################### #

Translation to python of Chris Sims' optimization function

pycsminwel This is a locol minimization algorithm. Uses a quasi-Newton method with BFGS update of the estimated inverse hessian. It is robust against

A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization

MADGRAD Optimization Method A Momentumized, Adaptive, Dual Averaged Gradient Method for Stochastic Optimization pip install madgrad Try it out! A best

Implementation of paper "Towards a Unified View of Parameter-Efficient Transfer Learning"

A Unified Framework for Parameter-Efficient Transfer Learning This is the official implementation of the paper: Towards a Unified View of Parameter-Ef

An End-to-End Machine Learning Library to Optimize AUC (AUROC, AUPRC).

Logo by Zhuoning Yuan LibAUC: A Machine Learning Library for AUC Optimization Website | Updates | Installation | Tutorial | Research | Github LibAUC a

Python compiler that massively increases Python's code performance without code changes.

Flyable - A python compiler for highly performant code Flyable is a Python compiler that generates efficient native code. It uses different techniques

SIR model parameter estimation using a novel algorithm for differentiated uniformization.

TenSIR Parameter estimation on epidemic data under the SIR model using a novel algorithm for differentiated uniformization of Markov transition rate m

Optimizaciones incrementales al problema N-Body con el fin de evaluar y comparar las prestaciones de los traductores de Python en el ámbito de HPC.

Python HPC Optimizaciones incrementales de N-Body (all-pairs) con el fin de evaluar y comparar las prestaciones de los traductores de Python en el ámb

Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning"

Prompt-Tuning Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning" Currently, we support the following huggigface models: Bart

Code for the paper "Curriculum Dropout", ICCV 2017

Curriculum Dropout Dropout is a very effective way of regularizing neural networks. Stochastically "dropping out" units with a certain probability dis

OSLO: Open Source framework for Large-scale transformer Optimization

O S L O Open Source framework for Large-scale transformer Optimization What's New: December 21, 2021 Released OSLO 1.0. What is OSLO about? OSLO is a

Software Platform for solving and manipulating multiparametric programs in Python

PPOPT Python Parametric OPtimization Toolbox (PPOPT) is a software platform for solving and manipulating multiparametric programs in Python. This pack

Convex optimization for fun and profit.

CFMM Optimal Routing This repository contains the code needed to generate the figures used in the paper Optimal Routing for Constant Function Market M