1177 Repositories

Python silero-models Libraries

In this tutorial, raster models of soil depth and soil water holding capacity for the United States will be sampled at random geographic coordinates within the state of Colorado.

Raster_Sampling_Demo (Resulting graph of this demo) Background Sampling values of a raster at specific geographic coordinates can be done with a numbe

Code for the paper "On the Power of Edge Independent Graph Models"

Edge Independent Graph Models Code for the paper: "On the Power of Edge Independent Graph Models" Sudhanshu Chanpuriya, Cameron Musco, Konstantinos So

DSEE: Dually Sparsity-embedded Efficient Tuning of Pre-trained Language Models

DSEE Codes for [Preprint] DSEE: Dually Sparsity-embedded Efficient Tuning of Pre-trained Language Models Xuxi Chen, Tianlong Chen, Yu Cheng, Weizhu Ch

Personal thermal comfort models using digital twins: Preference prediction with BIM-extracted spatial-temporal proximity data from Build2Vec

Personal thermal comfort models using digital twins: Preference prediction with BIM-extracted spatial-temporal proximity data from Build2Vec This repo

A treasure chest for visual recognition powered by PaddlePaddle

简体中文 | English PaddleClas 简介 飞桨图像识别套件PaddleClas是飞桨为工业界和学术界所准备的一个图像识别任务的工具集,助力使用者训练出更好的视觉模型和应用落地。 近期更新 2021.11.1 发布PP-ShiTu技术报告,新增饮料识别demo 2021.10.23 发

Bayes-Newton—A Gaussian process library in JAX, with a unifying view of approximate Bayesian inference as variants of Newton's algorithm.

Bayes-Newton Bayes-Newton is a library for approximate inference in Gaussian processes (GPs) in JAX (with objax), built and actively maintained by Wil

Constructing Neural Network-Based Models for Simulating Dynamical Systems

Constructing Neural Network-Based Models for Simulating Dynamical Systems Note this repo is work in progress prior to reviewing This is a companion re

This repo contains the code for the paper "Efficient hierarchical Bayesian inference for spatio-temporal regression models in neuroimaging" that has been accepted to NeurIPS 2021.

Dugh-NeurIPS-2021 This repo contains the code for the paper "Efficient hierarchical Bayesian inference for spatio-temporal regression models in neuroi

This codebase facilitates fast experimentation of differentially private training of Hugging Face transformers.

private-transformers This codebase facilitates fast experimentation of differentially private training of Hugging Face transformers. What is this? Why

A collection of easy-to-use, ready-to-use, interesting deep neural network models

Interesting and reproducible research works should be conserved. This repository wraps a collection of deep neural network models into a simple and un

Python scripts using the Mediapipe models for Halloween.

Mediapipe-Halloween-Examples Python scripts using the Mediapipe models for Halloween. WHY Mainly for fun. But this repository also includes useful exa

Dynamic Visual Reasoning by Learning Differentiable Physics Models from Video and Language (NeurIPS 2021)

VRDP (NeurIPS 2021) Dynamic Visual Reasoning by Learning Differentiable Physics Models from Video and Language Mingyu Ding, Zhenfang Chen, Tao Du, Pin

TAug :: Time Series Data Augmentation using Deep Generative Models

TAug :: Time Series Data Augmentation using Deep Generative Models Note!!! The package is under development so be careful for using in production! Fea

Molecular Sets (MOSES): A benchmarking platform for molecular generation models

Molecular Sets (MOSES): A benchmarking platform for molecular generation models Deep generative models are rapidly becoming popular for the discovery

Image inpainting using Gaussian Mixture Models

dmfa_inpainting Source code for: MisConv: Convolutional Neural Networks for Missing Data (to be published at WACV 2022) Estimating conditional density

Code for Paper "Evidential Softmax for Sparse MultimodalDistributions in Deep Generative Models"

Evidential Softmax for Sparse Multimodal Distributions in Deep Generative Models Abstract Many applications of generative models rely on the marginali

Open source single image super-resolution toolbox containing various functionality for training a diverse number of state-of-the-art super-resolution models. Also acts as the companion code for the IEEE signal processing letters paper titled 'Improving Super-Resolution Performance using Meta-Attention Layers’.

Deep-FIR Codebase - Super Resolution Meta Attention Networks About This repository contains the main coding framework accompanying our work on meta-at

State-of-the-art language models can match human performance on many tasks

Status: Archive (code is provided as-is, no updates expected) Grade School Math [Blog Post] [Paper] State-of-the-art language models can match human p

Easily Process a Batch of Cox Models

ezcox: Easily Process a Batch of Cox Models The goal of ezcox is to operate a batch of univariate or multivariate Cox models and return tidy result. ⏬

Regularized Frank-Wolfe for Dense CRFs: Generalizing Mean Field and Beyond

CRF - Conditional Random Fields A library for dense conditional random fields (CRFs). This is the official accompanying code for the paper Regularized

Deep generative models of 3D grids for structure-based drug discovery

What is liGAN? liGAN is a research codebase for training and evaluating deep generative models for de novo drug design based on 3D atomic density grid

This repository is the official implementation of Using Time-Series Privileged Information for Provably Efficient Learning of Prediction Models

Using Time-Series Privileged Information for Provably Efficient Learning of Prediction Models Link to paper Abstract We study prediction of future out

With this package, you can generate mixed-integer linear programming (MIP) models of trained artificial neural networks (ANNs) using the rectified linear unit (ReLU) activation function

With this package, you can generate mixed-integer linear programming (MIP) models of trained artificial neural networks (ANNs) using the rectified linear unit (ReLU) activation function. At the moment, only TensorFlow sequential models are supported. Interfaces to either the Pyomo or Gurobi modeling environments are offered.

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

PatrickStar: Parallel Training of Large Language Models via a Chunk-based Memory Management Meeting PatrickStar Pre-Trained Models (PTM) are becoming

Data, model training, and evaluation code for "PubTables-1M: Towards a universal dataset and metrics for training and evaluating table extraction models".

PubTables-1M This repository contains training and evaluation code for the paper "PubTables-1M: Towards a universal dataset and metrics for training a

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

AI Assistant for Building Reliable, High-performing and Fair Multilingual NLP Systems

AI Assistant for Building Reliable, High-performing and Fair Multilingual NLP Systems

ResNEsts and DenseNEsts: Block-based DNN Models with Improved Representation Guarantees

ResNEsts and DenseNEsts: Block-based DNN Models with Improved Representation Guarantees This repository is the official implementation of the empirica

Can we visualize a large scientific data set with a surrogate model? We're building a GAN for the Earth's Mantle Convection data set to see if we can!

EarthGAN - Earth Mantle Surrogate Modeling Can a surrogate model of the Earth’s Mantle Convection data set be built such that it can be readily run in

Dynamica causal Bayesian optimisation

Dynamic Causal Bayesian Optimization This is a Python implementation of Dynamic Causal Bayesian Optimization as presented at NeurIPS 2021. Abstract Th

A PyTorch Lightning Callback for pushing models to the Hugging Face Hub 🤗⚡️

hf-hub-lightning A callback for pushing lightning models to the Hugging Face Hub. Note: I made this package for myself, mostly...if folks seem to be i

Simple PyTorch hierarchical models.

A python package adding basic hierarchal networks in pytorch for classification tasks. It implements a simple hierarchal network structure based on feed-backward outputs.

Anuvada: Interpretable Models for NLP using PyTorch

Anuvada: Interpretable Models for NLP using PyTorch So, you want to know why your classifier arrived at a particular decision or why your flashy new d

Quantized tflite models for ailia TFLite Runtime

ailia-models-tflite Quantized tflite models for ailia TFLite Runtime About ailia TFLite Runtime ailia TF Lite Runtime is a TensorFlow Lite compatible

Language Models for the legal domain in Spanish done @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish legal domain Language Model ⚖️ This repository contains the page for two main resources for the Spanish legal domain: A RoBERTa model: https:/

Hierarchical Few-Shot Generative Models

Hierarchical Few-Shot Generative Models Giorgio Giannone, Ole Winther This repo contains code and experiments for the paper Hierarchical Few-Shot Gene

Team Enigma at ArgMining 2021 Shared Task: Leveraging Pretrained Language Models for Key Point Matching

Team Enigma at ArgMining 2021 Shared Task: Leveraging Pretrained Language Models for Key Point Matching This is our attempt of the shared task on Quan

Assessing the Influence of Models on the Performance of Reinforcement Learning Algorithms applied on Continuous Control Tasks

Assessing the Influence of Models on the Performance of Reinforcement Learning Algorithms applied on Continuous Control Tasks This is the master thesi

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrai

A PyTorch-based library for fast prototyping and sharing of deep neural network models.

A PyTorch-based library for fast prototyping and sharing of deep neural network models.

pytorch implementation of the ICCV'21 paper "MVTN: Multi-View Transformation Network for 3D Shape Recognition"

MVTN: Multi-View Transformation Network for 3D Shape Recognition (ICCV 2021) By Abdullah Hamdi, Silvio Giancola, Bernard Ghanem Paper | Video | Tutori

PyTorch Code for NeurIPS 2021 paper Anti-Backdoor Learning: Training Clean Models on Poisoned Data.

Anti-Backdoor Learning PyTorch Code for NeurIPS 2021 paper Anti-Backdoor Learning: Training Clean Models on Poisoned Data. Check the unlearning effect

A little Python application to auto tag your photos with the power of machine learning.

Tag Machine A little Python application to auto tag your photos with the power of machine learning. Report a bug or request a feature Table of Content

Segmentation models with pretrained backbones. PyTorch.

Python library with Neural Networks for Image Segmentation based on PyTorch. The main features of this library are: High level API (just two lines to

This code provides various models combining dilated convolutions with residual networks

Overview This code provides various models combining dilated convolutions with residual networks. Our models can achieve better performance with less

PyTorch and Tensorflow functional model definitions

functional-zoo Model definitions and pretrained weights for PyTorch and Tensorflow PyTorch, unlike lua torch, has autograd in it's core, so using modu

PyTorch implementation of Octave Convolution with pre-trained Oct-ResNet and Oct-MobileNet models

octconv.pytorch PyTorch implementation of Octave Convolution in Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octa

This repository is the official implementation of Unleashing the Power of Contrastive Self-Supervised Visual Models via Contrast-Regularized Fine-Tuning (NeurIPS21).

Core-tuning This repository is the official implementation of ``Unleashing the Power of Contrastive Self-Supervised Visual Models via Contrast-Regular

This is a Machine Learning Based Hand Detector Project, It Uses Machine Learning Models and Modules Like Mediapipe, Developed By Google!

Machine Learning Hand Detector This is a Machine Learning Based Hand Detector Project, It Uses Machine Learning Models and Modules Like Mediapipe, Dev

High-fidelity performance metrics for generative models in PyTorch

High-fidelity performance metrics for generative models in PyTorch

This is the code of NeurIPS'21 paper "Towards Enabling Meta-Learning from Target Models".

ST This is the code of NeurIPS 2021 paper "Towards Enabling Meta-Learning from Target Models". If you use any content of this repo for your work, plea

Collections of pydantic models

pydantic-collections The pydantic-collections package provides BaseCollectionModel class that allows you to manipulate collections of pydantic models

HiddenMarkovModel implements hidden Markov models with Gaussian mixtures as distributions on top of TensorFlow

Class HiddenMarkovModel HiddenMarkovModel implements hidden Markov models with Gaussian mixtures as distributions on top of TensorFlow 2.0 Installatio

A collection of models for image-text generation in ACM MM 2021.

Bi-directional Image and Text Generation UMT-BITG (image & text generator) Unifying Multimodal Transformer for Bi-directional Image and Text Generatio

This repository contains all source code, pre-trained models related to the paper "An Empirical Study on GANs with Margin Cosine Loss and Relativistic Discriminator"

An Empirical Study on GANs with Margin Cosine Loss and Relativistic Discriminator This is a Pytorch implementation for the paper "An Empirical Study o

A new mini-batch framework for optimal transport in deep generative models, deep domain adaptation, approximate Bayesian computation, color transfer, and gradient flow.

BoMb-OT Python3 implementation of the papers On Transportation of Mini-batches: A Hierarchical Approach and Improving Mini-batch Optimal Transport via

Reverse engineer your pytorch vision models, in style

🔍 Rover Reverse engineer your CNNs, in style Rover will help you break down your CNN and visualize the features from within the model. No need to wri

A bunch of random PyTorch models using PyTorch's C++ frontend

PyTorch Deep Learning Models using the C++ frontend Gettting started Clone the repo 1. https://github.com/mrdvince/pytorchcpp 2. cd fashionmnist or

A Word Level Transformer layer based on PyTorch and 🤗 Transformers.

Transformer Embedder A Word Level Transformer layer based on PyTorch and 🤗 Transformers. How to use Install the library from PyPI: pip install transf

LWCC: A LightWeight Crowd Counting library for Python that includes several pretrained state-of-the-art models.

LWCC: A LightWeight Crowd Counting library for Python LWCC is a lightweight crowd counting framework for Python. It wraps four state-of-the-art models

JittorVis - Visual understanding of deep learning models

JittorVis: Visual understanding of deep learning model JittorVis is an open-source library for understanding the inner workings of Jittor models by vi

A modular framework for vision & language multimodal research from Facebook AI Research (FAIR)

MMF is a modular framework for vision and language multimodal research from Facebook AI Research. MMF contains reference implementations of state-of-t

[EMNLP 2021] Mirror-BERT: Converting Pretrained Language Models to universal text encoders without labels.

[EMNLP 2021] Mirror-BERT: Converting Pretrained Language Models to universal text encoders without labels.

Train a state-of-the-art yolov3 object detector from scratch!

TrainYourOwnYOLO: Building a Custom Object Detector from Scratch This repo let's you train a custom image detector using the state-of-the-art YOLOv3 c

NLMpy - A Python package to create neutral landscape models

NLMpy is a Python package for the creation of neutral landscape models that are widely used by landscape ecologists to model ecological patterns

Learning to Prompt for Vision-Language Models.

CoOp Paper: Learning to Prompt for Vision-Language Models Authors: Kaiyang Zhou, Jingkang Yang, Chen Change Loy, Ziwei Liu CoOp (Context Optimization)

A collection of models for image - text generation in ACM MM 2021.

Bi-directional Image and Text Generation UMT-BITG (image & text generator) Unifying Multimodal Transformer for Bi-directional Image and Text Generatio

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

PyTorch implementation of D2C: Diffuison-Decoding Models for Few-shot Conditional Generation.

D2C: Diffuison-Decoding Models for Few-shot Conditional Generation Project | Paper PyTorch implementation of D2C: Diffuison-Decoding Models for Few-sh

Rendering color and depth images for ShapeNet models.

Color & Depth Renderer for ShapeNet This library includes the tools for rendering multi-view color and depth images of ShapeNet models. Physically bas

PyTorch image models, scripts, pretrained weights -- ResNet, ResNeXT, EfficientNet, EfficientNetV2, NFNet, Vision Transformer, MixNet, MobileNet-V3/V2, RegNet, DPN, CSPNet, and more

PyTorch Image Models Sponsors What's New Introduction Models Features Results Getting Started (Documentation) Train, Validation, Inference Scripts Awe

Class activation maps for your PyTorch models (CAM, Grad-CAM, Grad-CAM++, Smooth Grad-CAM++, Score-CAM, SS-CAM, IS-CAM, XGrad-CAM, Layer-CAM)

TorchCAM: class activation explorer Simple way to leverage the class-specific activation of convolutional layers in PyTorch. Quick Tour Setting your C

An NLP library with Awesome pre-trained Transformer models and easy-to-use interface, supporting wide-range of NLP tasks from research to industrial applications.

简体中文 | English News [2021-10-12] PaddleNLP 2.1版本已发布!新增开箱即用的NLP任务能力、Prompt Tuning应用示例与生成任务的高性能推理! 🎉 更多详细升级信息请查看Release Note。 [2021-08-22]《千言:面向事实一致性的生

Production First and Production Ready End-to-End Speech Recognition Toolkit

WeNet 中文版 Discussions | Docs | Papers | Runtime (x86) | Runtime (android) | Pretrained Models We share neural Net together. The main motivation of WeN

Mengzi Pretrained Models

中文 | English Mengzi 尽管预训练语言模型在 NLP 的各个领域里得到了广泛的应用,但是其高昂的时间和算力成本依然是一个亟需解决的问题。这要求我们在一定的算力约束下,研发出各项指标更优的模型。 我们的目标不是追求更大的模型规模,而是轻量级但更强大,同时对部署和工业落地更友好的模型。

Scalable training for dense retrieval models.

Scalable implementation of dense retrieval. Training on cluster By default it trains locally: PYTHONPATH=.:$PYTHONPATH python dpr_scale/main.py traine

NVIDIA Merlin is an open source library providing end-to-end GPU-accelerated recommender systems, from feature engineering and preprocessing to training deep learning models and running inference in production.

NVIDIA Merlin NVIDIA Merlin is an open source library designed to accelerate recommender systems on NVIDIA’s GPUs. It enables data scientists, machine

A framework to train language models to learn invariant representations.

Invariant Language Modeling Implementation of the training for invariant language models. Motivation Modern pretrained language models are critical co

PixelPyramids: Exact Inference Models from Lossless Image Pyramids (ICCV 2021)

PixelPyramids: Exact Inference Models from Lossless Image Pyramids This repository contains the PyTorch implementation of the paper PixelPyramids: Exa

Discovering and Achieving Goals via World Models

Discovering and Achieving Goals via World Models [Project Website] [Benchmark Code] [Video (2min)] [Oral Talk (13min)] [Paper] Russell Mendonca*1, Ole

A Library for Modelling Probabilistic Hierarchical Graphical Models in PyTorch

A Library for Modelling Probabilistic Hierarchical Graphical Models in PyTorch

MIT-Machine Learning with Python–From Linear Models to Deep Learning

MIT-Machine Learning with Python–From Linear Models to Deep Learning | One of the 5 courses in MIT MicroMasters in Statistics & Data Science Welcome t

![[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis](https://raw.githubusercontent.com/EndlessSora/focal-frequency-loss/master/resources/teaser.jpg)

[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis

Focal Frequency Loss - Official PyTorch Implementation This repository provides the official PyTorch implementation for the following paper: Focal Fre

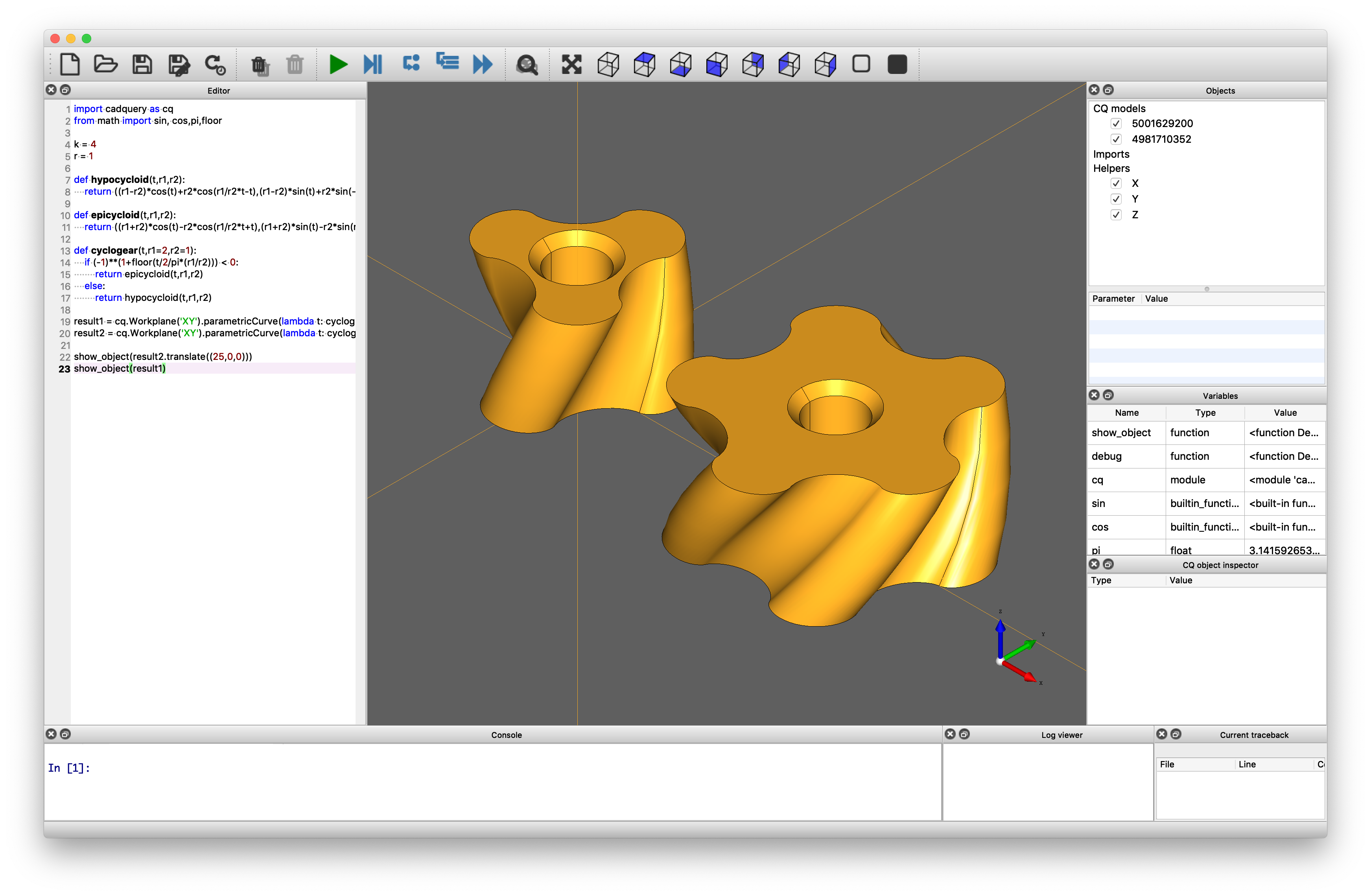

CadQuery is an intuitive, easy-to-use Python module for building parametric 3D CAD models.

A python parametric CAD scripting framework based on OCCT

Library of deep learning models and datasets designed to make deep learning more accessible and accelerate ML research.

Tensor2Tensor Tensor2Tensor, or T2T for short, is a library of deep learning models and datasets designed to make deep learning more accessible and ac

This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model inference.

PyTorch Infer Utils This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model infer

Hapi is a Python library for building Conceptual Distributed Model using HBV96 lumped model & Muskingum routing method

Current build status All platforms: Current release info Name Downloads Version Platforms Hapi - Hydrological library for Python Hapi is an open-sourc

The Multi-Mission Maximum Likelihood framework (3ML)

PyPi Conda The Multi-Mission Maximum Likelihood framework (3ML) A framework for multi-wavelength/multi-messenger analysis for astronomy/astrophysics.

Tangram makes it easy for programmers to train, deploy, and monitor machine learning models.

Tangram Website | Discord Tangram makes it easy for programmers to train, deploy, and monitor machine learning models. Run tangram train to train a mo

A practical and feature-rich paraphrasing framework to augment human intents in text form to build robust NLU models for conversational engines. Created by Prithiviraj Damodaran. Open to pull requests and other forms of collaboration.

Parrot Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more t

PyPI package for scaffolding out code for decision tree models that can learn to find relationships between the attributes of an object.

Decision Tree Writer This package allows you to train a binary classification decision tree on a list of labeled dictionaries or class instances, and

Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE)

OG-SPACE Introduction Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE) is a computational framewo

Analysis of rationale selection in neural rationale models

Neural Rationale Interpretability Analysis We analyze the neural rationale models proposed by Lei et al. (2016) and Bastings et al. (2019), as impleme

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees"

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees" Installa

Benchmarking the robustness of Spatial-Temporal Models

Benchmarking the robustness of Spatial-Temporal Models This repositery contains the code for the paper Benchmarking the Robustness of Spatial-Temporal

Codebase of deep learning models for inferring stability of mRNA molecules

Kaggle OpenVaccine Models Codebase of deep learning models for inferring stability of mRNA molecules, corresponding to the Kaggle Open Vaccine Challen

The self-supervised goal reaching benchmark introduced in Discovering and Achieving Goals via World Models

Lexa-Benchmark Codebase for the self-supervised goal reaching benchmark introduced in 'Discovering and Achieving Goals via World Models'. Setup Create

Code & Data for the Paper "Time Masking for Temporal Language Models", WSDM 2022

Time Masking for Temporal Language Models This repository provides a reference implementation of the paper: Time Masking for Temporal Language Models

Neural text generators like the GPT models promise a general-purpose means of manipulating texts.

Boolean Prompting for Neural Text Generators Neural text generators like the GPT models promise a general-purpose means of manipulating texts. These m

Bayesian regularization for functional graphical models.

BayesFGM Paper: Jiajing Niu, Andrew Brown. Bayesian regularization for functional graphical models. Requirements R version 3.6.3 and up Python 3.6 and