88 Repositories

Python tensor-computation Libraries

(Personalized) Page-Rank computation using PyTorch

torch-ppr This package allows calculating page-rank and personalized page-rank via power iteration with PyTorch, which also supports calculation on GP

Fast SHAP value computation for interpreting tree-based models

FastTreeSHAP FastTreeSHAP package is built based on the paper Fast TreeSHAP: Accelerating SHAP Value Computation for Trees published in NeurIPS 2021 X

A pure PyTorch batched computation implementation of "CIF: Continuous Integrate-and-Fire for End-to-End Speech Recognition"

A pure PyTorch batched computation implementation of "CIF: Continuous Integrate-and-Fire for End-to-End Speech Recognition"

Julia package for contraction of tensor networks, based on the sweep line algorithm outlined in the paper General tensor network decoding of 2D Pauli codes

Julia package for contraction of tensor networks, based on the sweep line algorithm outlined in the paper General tensor network decoding of 2D Pauli codes

CATE: Computation-aware Neural Architecture Encoding with Transformers

CATE: Computation-aware Neural Architecture Encoding with Transformers Code for paper: CATE: Computation-aware Neural Architecture Encoding with Trans

Self-Correcting Quantum Many-Body Control using Reinforcement Learning with Tensor Networks

Self-Correcting Quantum Many-Body Control using Reinforcement Learning with Tensor Networks This repository contains the code and data for the corresp

PyTorch framework, for reproducing experiments from the paper Implicit Regularization in Hierarchical Tensor Factorization and Deep Convolutional Neural Networks

Implicit Regularization in Hierarchical Tensor Factorization and Deep Convolutional Neural Networks. Code, based on the PyTorch framework, for reprodu

Adaptable tools to make reinforcement learning and evolutionary computation algorithms.

Pearl The Parallel Evolutionary and Reinforcement Learning Library (Pearl) is a pytorch based package with the goal of being excellent for rapid proto

This Deep Learning Model Predicts that from which disease you are suffering.

Deep-Learning-Project This Deep Learning Model Predicts that from which disease you are suffering. This Project Covers the Topics of Deep Learning Int

Tree Nested PyTorch Tensor Lib

DI-treetensor treetensor is a generalized tree-based tensor structure mainly developed by OpenDILab Contributors. Almost all the operation can be supp

Tzer: TVM Implementation of "Coverage-Guided Tensor Compiler Fuzzing with Joint IR-Pass Mutation (OOPSLA'22)“.

Artifact • Reproduce Bugs • Quick Start • Installation • Extend Tzer Coverage-Guided Tensor Compiler Fuzzing with Joint IR-Pass Mutation This is the s

Bayesian Modeling and Computation in Python

Bayesian Modeling and Computation in Python Open access and Code This repository contains the open access version of the text and the code examples in

Semantic similarity computation with different state-of-the-art metrics

Semantic similarity computation with different state-of-the-art metrics Description • Installation • Usage • License Description TaxoSS is a semantic

Numerical-computing-is-fun - Learning numerical computing with notebooks for all ages.

As much as this series is to educate aspiring computer programmers and data scientists of all ages and all backgrounds, it is also a reminder to mysel

Aesara is a Python library that allows one to define, optimize, and efficiently evaluate mathematical expressions involving multi-dimensional arrays.

Aesara is a Python library that allows one to define, optimize, and efficiently evaluate mathematical expressions involving multi-dimensional arrays.

Memory efficient transducer loss computation

Introduction This project implements the optimization techniques proposed in Improving RNN Transducer Modeling for End-to-End Speech Recognition to re

MixText: Linguistically-Informed Interpolation of Hidden Space for Semi-Supervised Text Classification

MixText This repo contains codes for the following paper: Jiaao Chen, Zichao Yang, Diyi Yang: MixText: Linguistically-Informed Interpolation of Hidden

Antchain-MPC is a library of MPC (Multi-Parties Computation)

Antchain-MPC Antchain-MPC is a library of MPC (Multi-Parties Computation). It include Morse-STF: A tool for machine learning using MPC. Others: Commin

Tensor-Based Quantum Machine Learning

TensorLy_Quantum TensorLy-Quantum is a Python library for Tensor-Based Quantum Machine Learning that builds on top of TensorLy and PyTorch. Website: h

SymPy-powered, Wolfram|Alpha-like answer engine totally in your browser, without backend computation

SymPy Beta SymPy Beta is a fork of SymPy Gamma. The purpose of this project is to run a SymPy-powered, Wolfram|Alpha-like answer engine totally in you

A set of tests for evaluating large-scale algorithms for Wasserstein-2 transport maps computation.

Continuous Wasserstein-2 Benchmark This is the official Python implementation of the NeurIPS 2021 paper Do Neural Optimal Transport Solvers Work? A Co

PyTea: PyTorch Tensor shape error analyzer

PyTea: PyTorch Tensor Shape Error Analyzer paper project page Requirements node.js = 12.x python = 3.8 z3-solver = 4.8 How to install and use # ins

Stochastic Tensor Optimization for Robot Motion - A GPU Robot Motion Toolkit

STORM Stochastic Tensor Optimization for Robot Motion - A GPU Robot Motion Toolkit [Install Instructions] [Paper] [Website] This package contains code

A Framework for Encrypted Machine Learning in TensorFlow

TF Encrypted is a framework for encrypted machine learning in TensorFlow. It looks and feels like TensorFlow, taking advantage of the ease-of-use of t

Python library for multilinear algebra and tensor factorizations

scikit-tensor is a Python module for multilinear algebra and tensor factorizations

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

code from "Tensor decomposition of higher-order correlations by nonlinear Hebbian plasticity"

Code associated with the paper "Tensor decomposition of higher-order correlations by nonlinear Hebbian learning," Ocker & Buice, Neurips 2021. "plot_f

Probabilistic Tensor Decomposition of Neural Population Spiking Activity

Probabilistic Tensor Decomposition of Neural Population Spiking Activity Matlab (recommended) and Python (in developement) implementations of Soulat e

Official code repository for the publication "Latent Equilibrium: A unified learning theory for arbitrarily fast computation with arbitrarily slow neurons"

Latent Equilibrium: A unified learning theory for arbitrarily fast computation with arbitrarily slow neurons This repository contains the code to repr

⚡️Optimizing einsum functions in NumPy, Tensorflow, Dask, and more with contraction order optimization.

Optimized Einsum Optimized Einsum: A tensor contraction order optimizer Optimized einsum can significantly reduce the overall execution time of einsum

Prevent `CUDA error: out of memory` in just 1 line of code.

🐨 Koila Koila solves CUDA error: out of memory error painlessly. Fix it with just one line of code, and forget it. 🚀 Features 🙅 Prevents CUDA error

tmm_fast is a lightweight package to speed up optical planar multilayer thin-film device computation.

tmm_fast tmm_fast or transfer-matrix-method_fast is a lightweight package to speed up optical planar multilayer thin-film device computation. It is es

DynaTune: Dynamic Tensor Program Optimization in Deep Neural Network Compilation

DynaTune: Dynamic Tensor Program Optimization in Deep Neural Network Compilation This repository is the implementation of DynaTune paper. This folder

Mars is a tensor-based unified framework for large-scale data computation which scales numpy, pandas, scikit-learn and Python functions.

Mars is a tensor-based unified framework for large-scale data computation which scales numpy, pandas, scikit-learn and many other libraries. Documenta

A simple script written using symbolic python that takes as input a desired metric and automatically calculates and outputs the Christoffel Pseudo-Tensor, Riemann Curvature Tensor, Ricci Tensor, Scalar Curvature and the Kretschmann Scalar

A simple script written using symbolic python that takes as input a desired metric and automatically calculates and outputs the Christoffel Pseudo-Tensor, Riemann Curvature Tensor, Ricci Tensor, Scalar Curvature and the Kretschmann Scalar

Epidemiology analysis package

zEpid zEpid is an epidemiology analysis package, providing easy to use tools for epidemiologists coding in Python 3.5+. The purpose of this library is

Code to reproduce the results in the paper "Tensor Component Analysis for Interpreting the Latent Space of GANs".

Tensor Component Analysis for Interpreting the Latent Space of GANs [ paper | project page ] Code to reproduce the results in the paper "Tensor Compon

A python package to manage the stored receiver-side Strain Green's Tensor (SGT) database of 3D background models and able to generate Green's function and synthetic waveform

A python package to manage the stored receiver-side Strain Green's Tensor (SGT) database of 3D background models and able to generate Green's function and synthetic waveform

Taichi is a parallel programming language for high-performance numerical computations.

Taichi is a parallel programming language for high-performance numerical computations.

Hummingbird compiles trained ML models into tensor computation for faster inference.

Hummingbird Introduction Hummingbird is a library for compiling trained traditional ML models into tensor computations. Hummingbird allows users to se

Hangar is version control for tensor data. Commit, branch, merge, revert, and collaborate in the data-defined software era.

Overview docs tests package Hangar is version control for tensor data. Commit, branch, merge, revert, and collaborate in the data-defined software era

Geometric Algebra package for JAX

JAXGA - JAX Geometric Algebra GitHub | Docs JAXGA is a Geometric Algebra package on top of JAX. It can handle high dimensional algebras by storing onl

Tensor-based approaches for fMRI classification

tensor-fmri Using tensor-based approaches to classify fMRI data from StarPLUS. Citation If you use any code in this repository, please cite the follow

Unofficial implementation of the paper: PonderNet: Learning to Ponder in TensorFlow

PonderNet-TensorFlow This is an Unofficial Implementation of the paper: PonderNet: Learning to Ponder in TensorFlow. Official PyTorch Implementation:

![[NeurIPS 2021]](https://github.com/VITA-Group/DePT/raw/main/figs/dept_pipeline.png)

[NeurIPS 2021] "Delayed Propagation Transformer: A Universal Computation Engine towards Practical Control in Cyber-Physical Systems"

Delayed Propagation Transformer: A Universal Computation Engine towards Practical Control in Cyber-Physical Systems Introduction Multi-agent control i

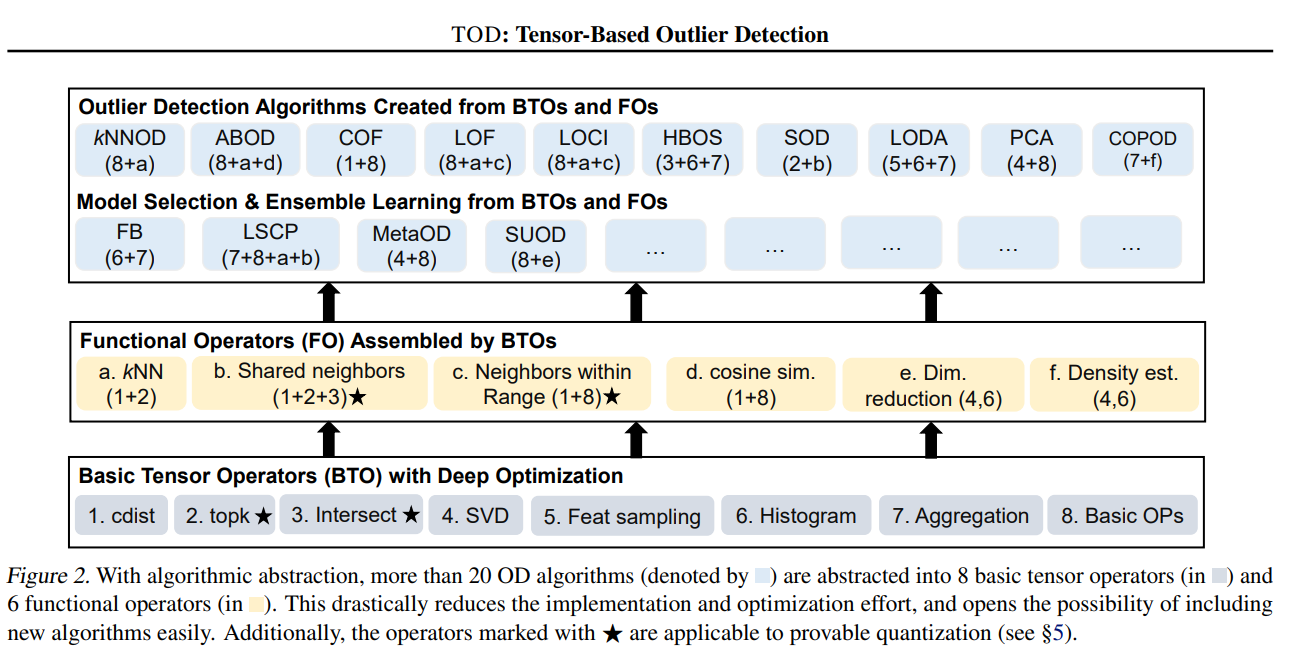

(Py)TOD: Tensor-based Outlier Detection, A General GPU-Accelerated Framework

(Py)TOD: Tensor-based Outlier Detection, A General GPU-Accelerated Framework Background: Outlier detection (OD) is a key data mining task for identify

A lightweight python AUTOmatic-arRAY library.

A lightweight python AUTOmatic-arRAY library. Write numeric code that works for: numpy cupy dask autograd jax mars tensorflow pytorch ... and indeed a

A new mini-batch framework for optimal transport in deep generative models, deep domain adaptation, approximate Bayesian computation, color transfer, and gradient flow.

BoMb-OT Python3 implementation of the papers On Transportation of Mini-batches: A Hierarchical Approach and Improving Mini-batch Optimal Transport via

A bare-bones Python library for quality diversity optimization.

pyribs Website Source PyPI Conda CI/CD Docs Docs Status Twitter pyribs.org GitHub docs.pyribs.org A bare-bones Python library for quality diversity op

Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train format

ttopt Description Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train (TT) format and maximu

Transform-Invariant Non-Negative Matrix Factorization

Transform-Invariant Non-Negative Matrix Factorization A comprehensive Python package for Non-Negative Matrix Factorization (NMF) with a focus on learn

A torch.Tensor-like DataFrame library supporting multiple execution runtimes and Arrow as a common memory format

TorchArrow (Warning: Unstable Prototype) This is a prototype library currently under heavy development. It does not currently have stable releases, an

This project is used for the paper Differentiable Programming of Isometric Tensor Network

This project is used for the paper "Differentiable Programming of Isometric Tensor Network". (arXiv:2110.03898)

NVIDIA Deep Learning Examples for Tensor Cores

NVIDIA Deep Learning Examples for Tensor Cores Introduction This repository provides State-of-the-Art Deep Learning examples that are easy to train an

Scientific Computation Methods in C and Python (Open for Hacktoberfest 2021)

Sci - cpy README is a stub. Do expand it. Objective This repository is meant to be a ready reference for scientific computation methods. Do ⭐ it if yo

Pretty Tensor - Fluent Neural Networks in TensorFlow

Pretty Tensor provides a high level builder API for TensorFlow. It provides thin wrappers on Tensors so that you can easily build multi-layer neural networks.

Sample and Computation Redistribution for Efficient Face Detection

Introduction SCRFD is an efficient high accuracy face detection approach which initially described in Arxiv. Performance Precision, flops and infer ti

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

FluidNet re-written with ATen tensor lib

fluidnet_cxx: Accelerating Fluid Simulation with Convolutional Neural Networks. A PyTorch/ATen Implementation. This repository is based on the paper,

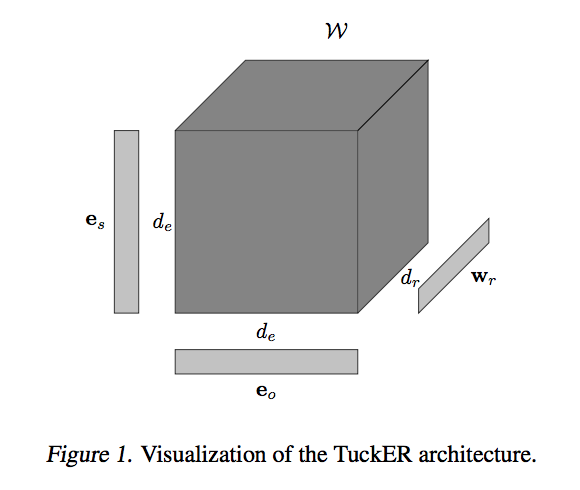

TuckER: Tensor Factorization for Knowledge Graph Completion

TuckER: Tensor Factorization for Knowledge Graph Completion This codebase contains PyTorch implementation of the paper: TuckER: Tensor Factorization f

A PyTorch implementation of "SimGNN: A Neural Network Approach to Fast Graph Similarity Computation" (WSDM 2019).

SimGNN ⠀⠀⠀ A PyTorch implementation of SimGNN: A Neural Network Approach to Fast Graph Similarity Computation (WSDM 2019). Abstract Graph similarity s

ocaml-torch provides some ocaml bindings for the PyTorch tensor library.

ocaml-torch provides some ocaml bindings for the PyTorch tensor library. This brings to OCaml NumPy-like tensor computations with GPU acceleration and tape-based automatic differentiation.

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Lyapunov-guided Deep Reinforcement Learning for Stable Online Computation Offloading in Mobile-Edge Computing Networks

PyTorch code to reproduce LyDROO algorithm [1], which is an online computation offloading algorithm to maximize the network data processing capability subject to the long-term data queue stability and average power constraints. It applies Lyapunov optimization to decouple the multi-stage stochastic MINLP into deterministic per-frame MINLP subproblems and solves each subproblem via DROO algorithm. It includes:

The AugNet Python module contains functions for the fast computation of image similarity.

AugNet AugNet: End-to-End Unsupervised Visual Representation Learning with Image Augmentation arxiv link In our work, we propose AugNet, a new deep le

Custom TensorFlow2 implementations of forward and backward computation of soft-DTW algorithm in batch mode.

Batch Soft-DTW(Dynamic Time Warping) in TensorFlow2 including forward and backward computation Custom TensorFlow2 implementations of forward and backw

pytorch-kaldi is a project for developing state-of-the-art DNN/RNN hybrid speech recognition systems. The DNN part is managed by pytorch, while feature extraction, label computation, and decoding are performed with the kaldi toolkit.

The PyTorch-Kaldi Speech Recognition Toolkit PyTorch-Kaldi is an open-source repository for developing state-of-the-art DNN/HMM speech recognition sys

TorchShard is a lightweight engine for slicing a PyTorch tensor into parallel shards

TorchShard is a lightweight engine for slicing a PyTorch tensor into parallel shards. It can reduce GPU memory and scale up the training when the model has massive linear layers (e.g., ViT, BERT and GPT) or huge classes (millions). It has the same API design as PyTorch.

A NumPy-compatible array library accelerated by CUDA

CuPy : A NumPy-compatible array library accelerated by CUDA Website | Docs | Install Guide | Tutorial | Examples | API Reference | Forum CuPy is an im

Official codebase for Pretrained Transformers as Universal Computation Engines.

universal-computation Overview Official codebase for Pretrained Transformers as Universal Computation Engines. Contains demo notebook and scripts to r

Simulating Sycamore quantum circuits classically using tensor network algorithm.

Simulating the Sycamore quantum supremacy circuit This repo contains data we have obtained in simulating the Sycamore quantum supremacy circuits with

Deep learning with dynamic computation graphs in TensorFlow

TensorFlow Fold TensorFlow Fold is a library for creating TensorFlow models that consume structured data, where the structure of the computation graph

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

50% faster, 50% less RAM Machine Learning. Numba rewritten Sklearn. SVD, NNMF, PCA, LinearReg, RidgeReg, Randomized, Truncated SVD/PCA, CSR Matrices all 50+% faster

[Due to the time taken @ uni, work + hell breaking loose in my life, since things have calmed down a bit, will continue commiting!!!] [By the way, I'm

Sparse Beta-Divergence Tensor Factorization Library

NTFLib Sparse Beta-Divergence Tensor Factorization Library Based off of this beta-NTF project this library is specially-built to handle tensors where

Fast and Easy Infinite Neural Networks in Python

Neural Tangents ICLR 2020 Video | Paper | Quickstart | Install guide | Reference docs | Release notes Overview Neural Tangents is a high-level neural

Deep learning operations reinvented (for pytorch, tensorflow, jax and others)

This video in better quality. einops Flexible and powerful tensor operations for readable and reliable code. Supports numpy, pytorch, tensorflow, and

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

Tensors and Dynamic neural networks in Python with strong GPU acceleration

PyTorch is a Python package that provides two high-level features: Tensor computation (like NumPy) with strong GPU acceleration Deep neural networks b

An open framework for Federated Learning.

Welcome to Intel® Open Federated Learning Federated learning is a distributed machine learning approach that enables organizations to collaborate on m

The Levenshtein Python C extension module contains functions for fast computation of Levenshtein distance and string similarity

Contents Maintainer wanted Introduction Installation Documentation License History Source code Authors Maintainer wanted I am looking for a new mainta

Levenshtein and Hamming distance computation

distance - Utilities for comparing sequences This package provides helpers for computing similarities between arbitrary sequences. Included metrics ar

A library for answering questions using data you cannot see

A library for computing on data you do not own and cannot see PySyft is a Python library for secure and private Deep Learning. PySyft decouples privat

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

aka "Bayesian Methods for Hackers": An introduction to Bayesian methods + probabilistic programming with a computation/understanding-first, mathematics-second point of view. All in pure Python ;)

Bayesian Methods for Hackers Using Python and PyMC The Bayesian method is the natural approach to inference, yet it is hidden from readers behind chap

Tensors and Dynamic neural networks in Python with strong GPU acceleration

PyTorch is a Python package that provides two high-level features: Tensor computation (like NumPy) with strong GPU acceleration Deep neural networks b