905 Repositories

Python transformer-architecture Libraries

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts

t5-japanese Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts. The following is a list of models that

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation [arxiv] This is the official repository for CDTrans: Cross-domain Transformer for

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Continuous Augmented Positional Embeddings (CAPE) implementation for PyTorch

PyTorch implementation of Continuous Augmented Positional Embeddings (CAPE), by Likhomanenko et al. Enhance your Transformer positional embeddings with easy-to-use augmentations!

ScaleNet: A Shallow Architecture for Scale Estimation

ScaleNet: A Shallow Architecture for Scale Estimation Repository for the code of ScaleNet paper: "ScaleNet: A Shallow Architecture for Scale Estimatio

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

Embracing Single Stride 3D Object Detector with Sparse Transformer

SST: Single-stride Sparse Transformer This is the official implementation of paper: Embracing Single Stride 3D Object Detector with Sparse Transformer

ScaleNet: A Shallow Architecture for Scale Estimation

ScaleNet: A Shallow Architecture for Scale Estimation Repository for the code of ScaleNet paper: "ScaleNet: A Shallow Architecture for Scale Estimatio

This is the official code for the paper "Learning with Nested Scene Modeling and Cooperative Architecture Search for Low-Light Vision"

RUAS This is the official code for the paper "Learning with Nested Scene Modeling and Cooperative Architecture Search for Low-Light Vision" A prelimin

Implementation of the paper Recurrent Glimpse-based Decoder for Detection with Transformer.

REGO-Deformable DETR By Zhe Chen, Jing Zhang, and Dacheng Tao. This repository is the implementation of the paper Recurrent Glimpse-based Decoder for

A new video text spotting framework with Transformer

TransVTSpotter: End-to-end Video Text Spotter with Transformer Introduction A Multilingual, Open World Video Text Dataset and End-to-end Video Text Sp

![[KDD 2021, Research Track] DiffMG: Differentiable Meta Graph Search for Heterogeneous Graph Neural Networks](https://user-images.githubusercontent.com/22978940/122879585-a77ed280-d36b-11eb-9b49-6e8fed99faba.png)

[KDD 2021, Research Track] DiffMG: Differentiable Meta Graph Search for Heterogeneous Graph Neural Networks

DiffMG This repository contains the code for our KDD 2021 Research Track paper: DiffMG: Differentiable Meta Graph Search for Heterogeneous Graph Neura

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce (EMNLP Founding 2021)

Introduction K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce. Installation PyTor

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

Pynavt is a cli tool to create clean architecture app for you including Fastapi, bcrypt and jwt.

Pynavt _____ _ | __ \ | | | |__) | _ _ __ __ ___ _| |_ | ___/ | | | '_ \ / _` \ \ / /

Temperature Monitoring and Prediction Using a Modified Lambda Architecture

Temperature Monitoring and Prediction Using a Modified Lambda Architecture A more detailed write up can be seen in this paper. Original Lambda Archite

SimMIM: A Simple Framework for Masked Image Modeling

SimMIM By Zhenda Xie*, Zheng Zhang*, Yue Cao*, Yutong Lin, Jianmin Bao, Zhuliang Yao, Qi Dai and Han Hu*. This repo is the official implementation of

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

Rapid experimentation and scaling of deep learning models on molecular and crystal graphs.

LitMatter A template for rapid experimentation and scaling deep learning models on molecular and crystal graphs. How to use Clone this repository and

RaceBERT -- A transformer based model to predict race and ethnicty from names

RaceBERT -- A transformer based model to predict race and ethnicty from names Installation pip install racebert Using a virtual environment is highly

SAMO: Streaming Architecture Mapping Optimisation

SAMO: Streaming Architecture Mapping Optimiser The SAMO framework provides a method of optimising the mapping of a Convolutional Neural Network model

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

An ongoing curated list of frameworks, libraries, learning tutorials, software and resources in Python Language.

Python Development Welcome to the world of Python. An ongoing curated list of frameworks, libraries, learning tutorials, software and resources in Pyt

Spatial Transformer Nets in TensorFlow/ TensorLayer

MOVED TO HERE Spatial Transformer Networks Spatial Transformer Networks (STN) is a dynamic mechanism that produces transformations of input images (or

A PaddlePaddle implementation of STGCN with a few modifications in the model architecture in order to forecast traffic jam.

About This repository contains the code of a PaddlePaddle implementation of STGCN based on the paper Spatio-Temporal Graph Convolutional Networks: A D

This is Official implementation for "Pose-guided Feature Disentangling for Occluded Person Re-Identification Based on Transformer" in AAAI2022

PFD:Pose-guided Feature Disentangling for Occluded Person Re-identification based on Transformer This repo is the official implementation of "Pose-gui

Learning Tracking Representations via Dual-Branch Fully Transformer Networks

Learning Tracking Representations via Dual-Branch Fully Transformer Networks DualTFR ⭐ We achieves the runner-ups for both VOT2021ST (short-term) and

Codes for the AAAI'22 paper "TransZero: Attribute-guided Transformer for Zero-Shot Learning"

TransZero [arXiv] This repository contains the testing code for the paper "TransZero: Attribute-guided Transformer for Zero-Shot Learning" accepted to

Reproduction of Vision Transformer in Tensorflow2. Train from scratch and Finetune.

Vision Transformer(ViT) in Tensorflow2 Tensorflow2 implementation of the Vision Transformer(ViT). This repository is for An image is worth 16x16 words

Official pytorch code for SSAT: A Symmetric Semantic-Aware Transformer Network for Makeup Transfer and Removal

SSAT: A Symmetric Semantic-Aware Transformer Network for Makeup Transfer and Removal This is the official pytorch code for SSAT: A Symmetric Semantic-

Flask pre-setup architecture. This can be used in any flask project for a faster and better project code structure.

Flask pre-setup architecture. This can be used in any flask project for a faster and better project code structure. All the required libraries are already installed easily to use in any big project.

Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fusion Framework via Self-Supervised Multi-Task Learning. Code will be available soon.

Official-PyTorch-Implementation-of-TransMEF Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fu

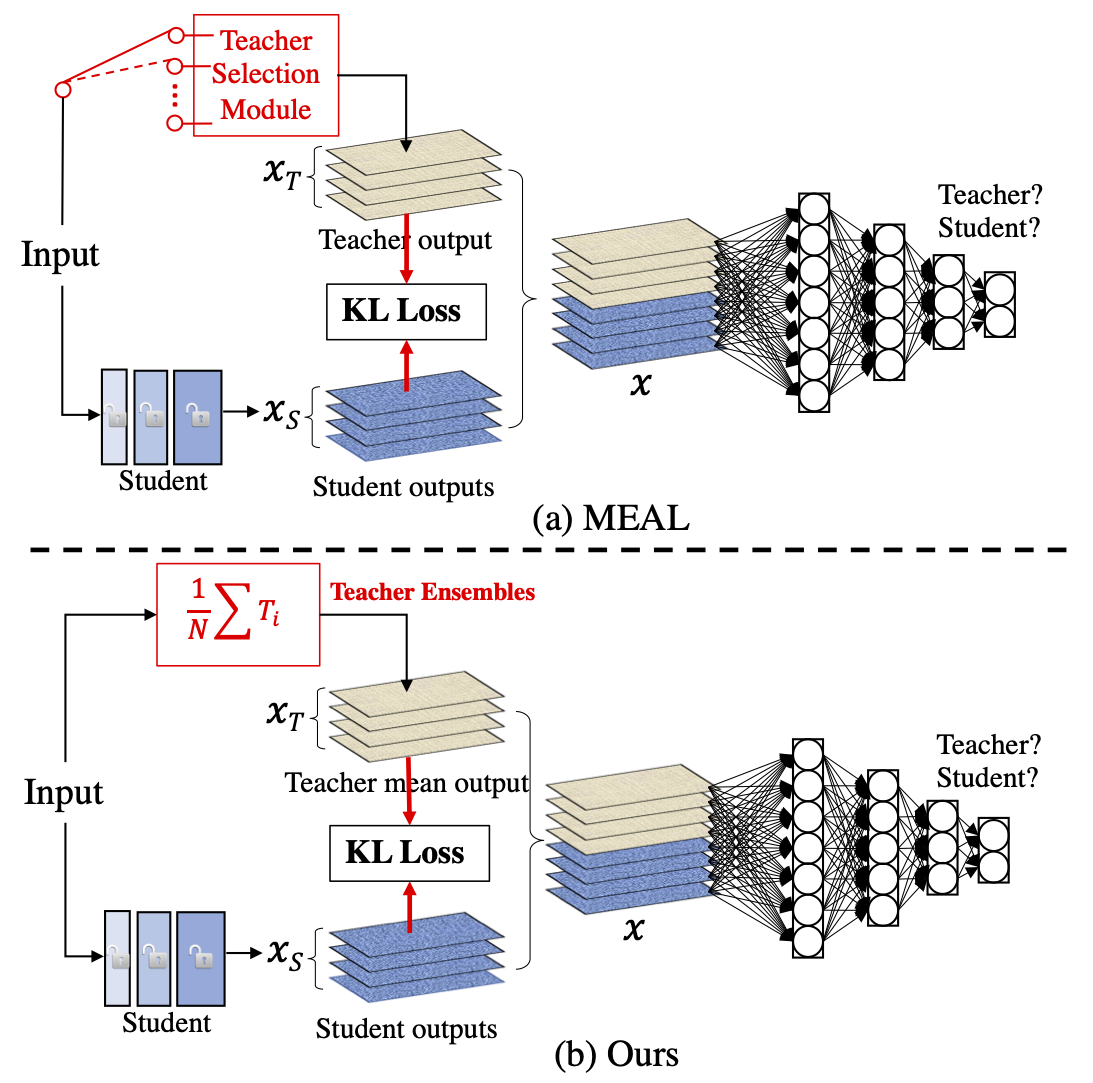

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

A highly modular PyTorch framework with a focus on Neural Architecture Search (NAS).

UniNAS A highly modular PyTorch framework with a focus on Neural Architecture Search (NAS). under development (which happens mostly on our internal Gi

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Slientruss3d : Python for stable truss analysis tool

slientruss3d : Python for stable truss analysis tool Desciption slientruss3d is a python package which can solve the resistances, internal forces and

The project page of paper: Architecture disentanglement for deep neural networks [ICCV 2021, oral]

This is the project page for the paper: Architecture Disentanglement for Deep Neural Networks, Jie Hu, Liujuan Cao, Tong Tong, Ye Qixiang, ShengChuan

Code for the ACL 2021 paper "Structural Guidance for Transformer Language Models"

Structural Guidance for Transformer Language Models This repository accompanies the paper, Structural Guidance for Transformer Language Models, publis

SwinTrack: A Simple and Strong Baseline for Transformer Tracking

SwinTrack This is the official repo for SwinTrack. A Simple and Strong Baseline Prerequisites Environment conda (recommended) conda create -y -n SwinT

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

The official repository for "Score Transformer: Generating Musical Scores from Note-level Representation" (MMAsia '21)

Score Transformer This is the official repository for "Score Transformer": Score Transformer: Generating Musical Scores from Note-level Representation

Code for A Volumetric Transformer for Accurate 3D Tumor Segmentation

VT-UNet This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmentaion results of VT-UNet. Environmen

Implementation of the paper 'Sentence Bottleneck Autoencoders from Transformer Language Models'

Introduction This repository contains the code for the paper Sentence Bottleneck Autoencoders from Transformer Language Models by Ivan Montero, Nikola

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network)

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network) BERTAC is a framework that combines a

Code for the paper "Are Sixteen Heads Really Better than One?"

Are Sixteen Heads Really Better than One? This repository contains code to reproduce the experiments in our paper Are Sixteen Heads Really Better than

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Norm-based Analysis of Transformer

Norm-based Analysis of Transformer Implementations for 2 papers introducing to analyze Transformers using vector norms: Kobayashi+'20 Attention is Not

Understanding the Difficulty of Training Transformers

Admin Understanding the Difficulty of Training Transformers Guided by our analyses, we propose Adaptive Model Initialization (Admin), which successful

Official Pytorch Implementation of Length-Adaptive Transformer (ACL 2021)

Length-Adaptive Transformer This is the official Pytorch implementation of Length-Adaptive Transformer. For detailed information about the method, ple

Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP 2021.

The Stem Cell Hypothesis Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP

🥇Samsung AI Challenge 2021 1등 솔루션입니다🥇

MoT - Molecular Transformer Large-scale Pretraining for Molecular Property Prediction Samsung AI Challenge for Scientific Discovery This repository is

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion. NÜWA is a unified multimodal p

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification [pdf] The official repository for Self-Supervised Pre-Training for Transfo

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

Quantity Takeoff with Python. Collecting groups of elements by filters

The free tool QuantityTakeoff allows you to group elements from Revit and IFC models (in BIMJSON-CSV format) with just a few filters and find the required volume values for the grouped elements.

Agile Threat Modeling Toolkit

Threagile is an open-source toolkit for agile threat modeling:

CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer

CycleTransGAN-EVC CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer Demo emotion CycleTransGAN CycleTransGAN Cycle

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

Official PyTorch Implementation of HELP: Hardware-adaptive Efficient Latency Prediction for NAS via Meta-Learning (NeurIPS 2021 Spotlight)

[NeurIPS 2021 Spotlight] HELP: Hardware-adaptive Efficient Latency Prediction for NAS via Meta-Learning [Paper] This is Official PyTorch implementatio

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

Pytorch implementation of Integrating Tree Path in Transformer for Code Representation

This is an official Pytorch implementation of the approaches proposed in: Han Peng, Ge Li, Wenhan Wang, Yunfei Zhao, Zhi Jin “Integrating Tree Path in

Code for CVPR 2021 paper TransNAS-Bench-101: Improving Transferrability and Generalizability of Cross-Task Neural Architecture Search.

TransNAS-Bench-101 This repository contains the publishable code for CVPR 2021 paper TransNAS-Bench-101: Improving Transferrability and Generalizabili

This is a collection of our NAS and Vision Transformer work.

This is a collection of our NAS and Vision Transformer work.

Swin-Transformer is basically a hierarchical Transformer whose representation is computed with shifted windows.

Swin-Transformer Swin-Transformer is basically a hierarchical Transformer whose representation is computed with shifted windows. For more details, ple

Code for A Volumetric Transformer for Accurate 3D Tumor Segmentation

VT-UNet This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmentaion results of VT-UNet. Environmen

The official implementation for "FQ-ViT: Fully Quantized Vision Transformer without Retraining".

FQ-ViT [arXiv] This repo contains the official implementation of "FQ-ViT: Fully Quantized Vision Transformer without Retraining". Table of Contents In

This is a collection of our NAS and Vision Transformer work.

AutoML - Neural Architecture Search This is a collection of our AutoML-NAS work iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vi

ContourletNet: A Generalized Rain Removal Architecture Using Multi-Direction Hierarchical Representation

ContourletNet: A Generalized Rain Removal Architecture Using Multi-Direction Hierarchical Representation (Accepted by BMVC'21) Abstract: Images acquir

Codes for paper "KNAS: Green Neural Architecture Search"

KNAS Codes for paper "KNAS: Green Neural Architecture Search" KNAS is a green (energy-efficient) Neural Architecture Search (NAS) approach. It contain

Mixing up the Invariant Information clustering architecture, with self supervised concepts from SimCLR and MoCo approaches

Self Supervised clusterer Combined IIC, and Moco architectures, with some SimCLR notions, to get state of the art unsupervised clustering while retain

Source code for CsiNet and CRNet using Fully Connected Layer-Shared feedback architecture.

FCS-applications Source code for CsiNet and CRNet using the Fully Connected Layer-Shared feedback architecture. Introduction This repository contains

Large scale and asynchronous Hyperparameter Optimization at your fingertip.

Syne Tune This package provides state-of-the-art distributed hyperparameter optimizers (HPO) where trials can be evaluated with several backend option

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

Pytorch library for end-to-end transformer models training and serving

Pytorch library for end-to-end transformer models training and serving

Problem statements on System Design and Software Architecture as part of Arpit's System Design Masterclass

Problem statements on System Design and Software Architecture as part of Arpit's System Design Masterclass

Official Code for Cross-Modality Fusion Transformer for Multispectral Object Detection.

Multispectral-Object-Detection Intro Official Code for Cross-Modality Fusion Transformer for Multispectral Object Detection. Multispectral Object Dete

Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation

TVT Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation Datasets: Digit: MNIST, SVHN, USPS Object: Office, Office-Home, Vi

AutoDeeplab / auto-deeplab / AutoML for semantic segmentation, implemented in Pytorch

AutoML for Image Semantic Segmentation Currently this repo contains the only working open-source implementation of Auto-Deeplab which, by the way out-

PySpark bindings for H3, a hierarchical hexagonal geospatial indexing system

h3-pyspark: Uber's H3 Hexagonal Hierarchical Geospatial Indexing System in PySpark PySpark bindings for the H3 core library. For available functions,

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis, accepted at ACMMM 2021.

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification [pdf] The official repository for Self-Supervised Pre-Training for Transfo

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing Paper Introduction Multi-task indoor scene understanding is widely considered a

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: "NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion". NÜWA is a unified multimodal

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing Paper Introduction Multi-task indoor scene understanding is widely considered a

OpenMMLab Text Detection, Recognition and Understanding Toolbox

Introduction English | 简体中文 MMOCR is an open-source toolbox based on PyTorch and mmdetection for text detection, text recognition, and the correspondi

OpenMMLab Image Classification Toolbox and Benchmark

Introduction English | 简体中文 MMClassification is an open source image classification toolbox based on PyTorch. It is a part of the OpenMMLab project. D

Question and answer retrieval in Turkish with BERT

trfaq Google supported this work by providing Google Cloud credit. Thank you Google for supporting the open source! 🎉 What is this? At this repo, I'm

MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation

MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation This repo is the official implementation of "MHFormer: Multi-Hypothesis Transforme

A library that integrates huggingface transformers with the world of fastai, giving fastai devs everything they need to train, evaluate, and deploy transformer specific models.

blurr A library that integrates huggingface transformers with version 2 of the fastai framework Install You can now pip install blurr via pip install

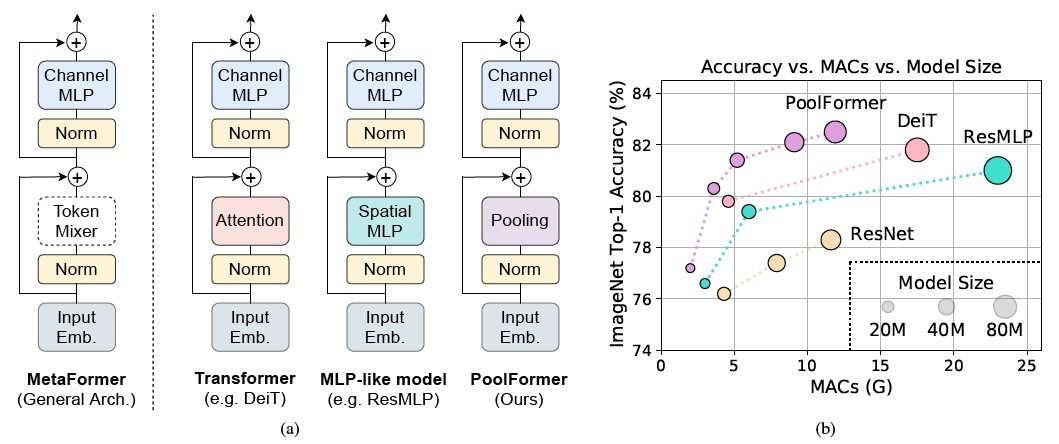

PoolFormer: MetaFormer is Actually What You Need for Vision

PoolFormer: MetaFormer is Actually What You Need for Vision (arXiv) This is a PyTorch implementation of PoolFormer proposed by our paper "MetaFormer i

Skoot is a lightweight python library of machine learning transformer classes that interact with scikit-learn and pandas.

Skoot is a lightweight python library of machine learning transformer classes that interact with scikit-learn and pandas. Its objective is to ex

Tensorflow 2.x implementation of Vision-Transformer model

Vision Transformer Unofficial Tensorflow 2.x implementation of the Transformer based Image Classification model proposed by the paper AN IMAGE IS WORT

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models.

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models. Hyperactive: is very easy to lear

State of the art faster Natural Language Processing in Tensorflow 2.0 .

tf-transformers: faster and easier state-of-the-art NLP in TensorFlow 2.0 ****************************************************************************

This is an official implementation for "Exploiting Temporal Contexts with Strided Transformer for 3D Human Pose Estimation".

Exploiting Temporal Contexts with Strided Transformer for 3D Human Pose Estimation This repo is the official implementation of Exploiting Temporal Con

Generalized Decision Transformer for Offline Hindsight Information Matching

Generalized Decision Transformer for Offline Hindsight Information Matching [arxiv] If you use this codebase for your research, please cite the paper: