905 Repositories

Python transformer-architecture Libraries

Naszilla is a Python library for neural architecture search (NAS)

A repository to compare many popular NAS algorithms seamlessly across three popular benchmarks (NASBench 101, 201, and 301). You can implement your ow

The pure and clear PyTorch Distributed Training Framework.

The pure and clear PyTorch Distributed Training Framework. Introduction Requirements and Usage Dependency Dataset Basic Usage Slurm Cluster Usage Base

TransMorph: Transformer for Medical Image Registration

TransMorph: Transformer for Medical Image Registration keywords: Vision Transformer, Swin Transformer, convolutional neural networks, image registrati

Official repository for "Restormer: Efficient Transformer for High-Resolution Image Restoration". SOTA for motion deblurring, image deraining, denoising (Gaussian/real data), and defocus deblurring.

Restormer: Efficient Transformer for High-Resolution Image Restoration Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan,

Code for the paper "Offline Reinforcement Learning as One Big Sequence Modeling Problem"

Trajectory Transformer Code release for Offline Reinforcement Learning as One Big Sequence Modeling Problem. Installation All python dependencies are

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

AutoML library for deep learning

Official Website: autokeras.com AutoKeras: An AutoML system based on Keras. It is developed by DATA Lab at Texas A&M University. The goal of AutoKeras

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch. Most of the models in NLP were implemented with less than 100 lines of code.(except comments or blank lines)

DeepHyper: Scalable Asynchronous Neural Architecture and Hyperparameter Search for Deep Neural Networks

What is DeepHyper? DeepHyper is a software package that uses learning, optimization, and parallel computing to automate the design and development of

Deploy optimized transformer based models on Nvidia Triton server

🤗 Hugging Face Transformer submillisecond inference 🤯 and deployment on Nvidia Triton server Yes, you can perfom inference with transformer based mo

Official repository for the paper "GN-Transformer: Fusing AST and Source Code information in Graph Networks".

GN-Transformer AST This is the official repository for the paper "GN-Transformer: Fusing AST and Source Code information in Graph Networks". Data Prep

The implementation of DeBERTa

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Official repository for "Restormer: Efficient Transformer for High-Resolution Image Restoration". SOTA results for single-image motion deblurring, image deraining, image denoising (synthetic and real data), and dual-pixel defocus deblurring.

Restormer: Efficient Transformer for High-Resolution Image Restoration Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan,

A PyTorch implementation of "CoAtNet: Marrying Convolution and Attention for All Data Sizes".

CoAtNet Overview This is a PyTorch implementation of CoAtNet specified in "CoAtNet: Marrying Convolution and Attention for All Data Sizes", arXiv 2021

Implementation of DocFormer: End-to-End Transformer for Document Understanding, a multi-modal transformer based architecture for the task of Visual Document Understanding (VDU)

DocFormer - PyTorch Implementation of DocFormer: End-to-End Transformer for Document Understanding, a multi-modal transformer based architecture for t

Official implementation of UTNet: A Hybrid Transformer Architecture for Medical Image Segmentation

UTNet (Accepted at MICCAI 2021) Official implementation of UTNet: A Hybrid Transformer Architecture for Medical Image Segmentation Introduction Transf

PyTorch implementation for our NeurIPS 2021 Spotlight paper "Long Short-Term Transformer for Online Action Detection".

Long Short-Term Transformer for Online Action Detection Introduction This is a PyTorch implementation for our NeurIPS 2021 Spotlight paper "Long Short

A transformer-based method for Healthcare Image Captioning in Vietnamese

vieCap4H Challenge 2021: A transformer-based method for Healthcare Image Captioning in Vietnamese This repo GitHub contains our solution for vieCap4H

TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios

TPH-YOLOv5 This repo is the implementation of "TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured

Flaxformer: transformer architectures in JAX/Flax

Flaxformer is a transformer library for primarily NLP and multimodal research at Google.

A Pytorch implement of paper "Anomaly detection in dynamic graphs via transformer" (TADDY).

TADDY: Anomaly detection in dynamic graphs via transformer This repo covers an reference implementation for the paper "Anomaly detection in dynamic gr

Session-aware Item-combination Recommendation with Transformer Network

Session-aware Item-combination Recommendation with Transformer Network 2nd place (0.39224) code and report for IEEE BigData Cup 2021 Track1 Report EDA

AdaNet is a lightweight TensorFlow-based framework for automatically learning high-quality models with minimal expert intervention

AdaNet is a lightweight TensorFlow-based framework for automatically learning high-quality models with minimal expert intervention. AdaNet buil

An open source AutoML toolkit for automate machine learning lifecycle, including feature engineering, neural architecture search, model compression and hyper-parameter tuning.

NNI Doc | 简体中文 NNI (Neural Network Intelligence) is a lightweight but powerful toolkit to help users automate Feature Engineering, Neural Architecture

Neural Architecture Search Powered by Swarm Intelligence 🐜

Neural Architecture Search Powered by Swarm Intelligence 🐜 DeepSwarm DeepSwarm is an open-source library which uses Ant Colony Optimization to tackle

High-resolution networks and Segmentation Transformer for Semantic Segmentation

High-resolution networks and Segmentation Transformer for Semantic Segmentation Branches This is the implementation for HRNet + OCR. The PyTroch 1.1 v

This code is a toolbox that uses Torch library for training and evaluating the ERFNet architecture for semantic segmentation.

ERFNet This code is a toolbox that uses Torch library for training and evaluating the ERFNet architecture for semantic segmentation. NEW!! New PyTorch

The repository contains source code and models to use PixelNet architecture used for various pixel-level tasks. More details can be accessed at http://www.cs.cmu.edu/~aayushb/pixelNet/.

PixelNet: Representation of the pixels, by the pixels, and for the pixels. We explore design principles for general pixel-level prediction problems, f

ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation.

ENet This work has been published in arXiv: ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation. Packages: train contains too

ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation

ENet in Caffe Execution times and hardware requirements Network 1024x512 1280x720 Parameters Model size (fp32) ENet 20.4 ms 32.9 ms 0.36 M 1.5 MB SegN

FCN (Fully Convolutional Network) is deep fully convolutional neural network architecture for semantic pixel-wise segmentation

FCN_via_Keras FCN FCN (Fully Convolutional Network) is deep fully convolutional neural network architecture for semantic pixel-wise segmentation. This

A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation

Segnet is deep fully convolutional neural network architecture for semantic pixel-wise segmentation. This is implementation of http://arxiv.org/pdf/15

Implementation of SegNet: A Deep Convolutional Encoder-Decoder Architecture for Semantic Pixel-Wise Labelling

Caffe SegNet This is a modified version of Caffe which supports the SegNet architecture As described in SegNet: A Deep Convolutional Encoder-Decoder A

![[CVPR'20] TTSR: Learning Texture Transformer Network for Image Super-Resolution](https://github.com/FuzhiYang/TTSR/raw/master/IMG/TT.png)

[CVPR'20] TTSR: Learning Texture Transformer Network for Image Super-Resolution

TTSR Official PyTorch implementation of the paper Learning Texture Transformer Network for Image Super-Resolution accepted in CVPR 2020. Contents Intr

Boundary-aware Transformers for Skin Lesion Segmentation

Boundary-aware Transformers for Skin Lesion Segmentation Introduction This is an official release of the paper Boundary-aware Transformers for Skin Le

History Aware Multimodal Transformer for Vision-and-Language Navigation

History Aware Multimodal Transformer for Vision-and-Language Navigation This repository is the official implementation of History Aware Multimodal Tra

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

aMLP Transformer Model for Japanese

aMLP-japanese Japanese aMLP Pretrained Model aMLPとは、Liu, Daiらが提案する、Transformerモデルです。 ざっくりというと、BERTの代わりに使えて、より性能の良いモデルです。 詳しい解説は、こちらの記事などを参考にしてください。 この

History Aware Multimodal Transformer for Vision-and-Language Navigation

History Aware Multimodal Transformer for Vision-and-Language Navigation This repository is the official implementation of History Aware Multimodal Tra

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers

hierarchical-transformer-1d Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers In Progress!! 2021.

Oriented Object Detection: Oriented RepPoints + Swin Transformer/ReResNet

Oriented RepPoints for Aerial Object Detection The code for the implementation of “Oriented RepPoints + Swin Transformer/ReResNet”. Introduction Based

Transformer part of 12th place solution in Riiid! Answer Correctness Prediction

kaggle_riiid Transformer part of 12th place solution in Riiid! Answer Correctness Prediction. Please see here for more information. Execution You need

A object detecting neural network powered by the yolo architecture and leveraging the PyTorch framework and associated libraries.

Yolo-Powered-Detector A object detecting neural network powered by the yolo architecture and leveraging the PyTorch framework and associated libraries

The official implementation of Theme Transformer

Theme Transformer This is the official implementation of Theme Transformer. Checkout our demo and paper : Demo | arXiv Environment: using python versi

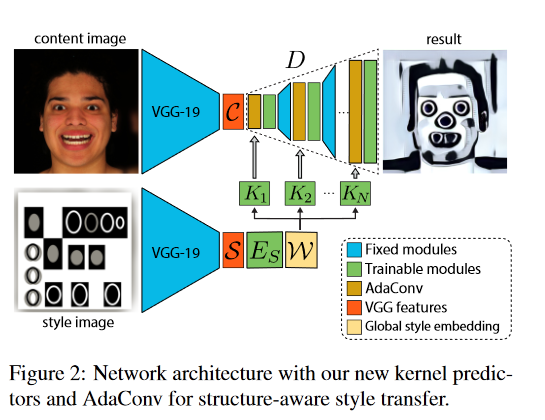

Unofficial PyTorch implementation of the Adaptive Convolution architecture for image style transfer

AdaConv Unofficial PyTorch implementation of the Adaptive Convolution architecture for image style transfer from "Adaptive Convolutions for Structure-

Implementation of Hourglass Transformer, in Pytorch, from Google and OpenAI

Hourglass Transformer - Pytorch (wip) Implementation of Hourglass Transformer, in Pytorch. It will also contain some of my own ideas about how to make

ViDT: An Efficient and Effective Fully Transformer-based Object Detector

ViDT: An Efficient and Effective Fully Transformer-based Object Detector by Hwanjun Song1, Deqing Sun2, Sanghyuk Chun1, Varun Jampani2, Dongyoon Han1,

Official code and pretrained models for CTRL-C (Camera calibration TRansformer with Line-Classification).

CTRL-C: Camera calibration TRansformer with Line-Classification This repository contains the official code and pretrained models for CTRL-C (Camera ca

ICCV2021, Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet

Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet, ICCV 2021 Update: 2021/03/11: update our new results. Now our T2T-ViT-14 w

This is a collection of our NAS and Vision Transformer work.

AutoML - Neural Architecture Search This is a collection of our AutoML-NAS work iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vi

ICCV2021 Papers with Code

ICCV2021 Papers with Code

Efficient Training of Audio Transformers with Patchout

PaSST: Efficient Training of Audio Transformers with Patchout This is the implementation for Efficient Training of Audio Transformers with Patchout Pa

Flaxformer: transformer architectures in JAX/Flax

Flaxformer: transformer architectures in JAX/Flax Flaxformer is a transformer library for primarily NLP and multimodal research at Google. It is used

TransCD: Scene Change Detection via Transformer-based Architecture

TransCD: Scene Change Detection via Transformer-based Architecture

Official Pytorch implementation of 'RoI Tanh-polar Transformer Network for Face Parsing in the Wild.'

Official Pytorch implementation of 'RoI Tanh-polar Transformer Network for Face Parsing in the Wild.'

METER: Multimodal End-to-end TransformER

METER Code and pre-trained models will be publicized soon. Citation @article{dou2021meter, title={An Empirical Study of Training End-to-End Vision-a

Arch-Net: Model Distillation for Architecture Agnostic Model Deployment

Arch-Net: Model Distillation for Architecture Agnostic Model Deployment The official implementation of Arch-Net: Model Distillation for Architecture A

MiShell is a multi-platform, multi-architecture project based on the first version (MiShell32)

MiShell is a multi-platform, multi-architecture project based on the first version (MiShell32), which offers super super small reverse shell payloads great for injection in buffer overflow vulnerabilities, written in assembly with a lot of tools written in python.

Deploy optimized transformer based models on Nvidia Triton server

Deploy optimized transformer based models on Nvidia Triton server

Charsiu: A transformer-based phonetic aligner

Charsiu: A transformer-based phonetic aligner [arXiv] Note. This is a preview version. The aligner is under active development. New functions, new lan

Image Restoration Using Swin Transformer for VapourSynth

SwinIR SwinIR function for VapourSynth, based on https://github.com/JingyunLiang/SwinIR. Dependencies NumPy PyTorch, preferably with CUDA. Note that t

Using a Seq2Seq RNN architecture via TensorFlow to predict future Bitcoin prices

Recurrent Bitcoin Network A Data Science Thesis Project About This repository contains the source code for implementing Bitcoin price prediciton using

![[SIGMETRICS 2022] One Proxy Device Is Enough for Hardware-Aware Neural Architecture Search](https://github.com/Ren-Research/OneProxy/raw/main/images/sota.jpg)

[SIGMETRICS 2022] One Proxy Device Is Enough for Hardware-Aware Neural Architecture Search

One Proxy Device Is Enough for Hardware-Aware Neural Architecture Search paper | website One Proxy Device Is Enough for Hardware-Aware Neural Architec

An Open-Source Toolkit for Prompt-Learning.

An Open-Source Framework for Prompt-learning. Overview • Installation • How To Use • Docs • Paper • Citation • What's New? Nov 2021: Now we have relea

Public implementation of the Convolutional Motif Kernel Network (CMKN) architecture

CMKN Implementation of the convolutional motif kernel network (CMKN) introduced in Ditz et al., "Convolutional Motif Kernel Network", 2021. Testing Yo

Official implementation of "Multi-Glimpse Network: A Robust and Efficient Classification Architecture based on Recurrent Downsampled Attention" (BMVC 2021).

Multi-Glimpse Network Multi-Glimpse Network: A Robust and Efficient Classification Architecture based on Recurrent Downsampled Attention arXiv Require

A python library for highly configurable transformers - easing model architecture search and experimentation.

A python library for highly configurable transformers - easing model architecture search and experimentation.

A Convolutional Transformer for Keyword Spotting

☢️ Audiomer ☢️ Audiomer: A Convolutional Transformer for Keyword Spotting [ arXiv ] [ Previous SOTA ] [ Model Architecture ] Results on SpeechCommands

Architecture example simulator

SCADA architecture Example of a SCADA-like console application, used to serve as a minimal example of a standard architecture of an IIoT system. Insta

Time Series Forecasting with Temporal Fusion Transformer in Pytorch

Forecasting with the Temporal Fusion Transformer Multi-horizon forecasting often contains a complex mix of inputs – including static (i.e. time-invari

Pytorch library for fast transformer implementations

Transformers are very successful models that achieve state of the art performance in many natural language tasks

A treasure chest for visual recognition powered by PaddlePaddle

简体中文 | English PaddleClas 简介 飞桨图像识别套件PaddleClas是飞桨为工业界和学术界所准备的一个图像识别任务的工具集,助力使用者训练出更好的视觉模型和应用落地。 近期更新 2021.11.1 发布PP-ShiTu技术报告,新增饮料识别demo 2021.10.23 发

Code for a seq2seq architecture with Bahdanau attention designed to map stereotactic EEG data from human brains to spectrograms, using the PyTorch Lightning.

stereoEEG2speech We provide code for a seq2seq architecture with Bahdanau attention designed to map stereotactic EEG data from human brains to spectro

Agent-based model simulator for air quality and pandemic risk assessment in architectural spaces

Agent-based model simulation for air quality and pandemic risk assessment in architectural spaces. User Guide archABM is a fast and open source agent-

toroidal - a lightweight transformer library for PyTorch

toroidal - a lightweight transformer library for PyTorch Toroidal transformers are of smaller size and lower weight than the more common E-I types. Th

Code for "Discovering Non-monotonic Autoregressive Orderings with Variational Inference" (paper and code updated from ICLR 2021)

Discovering Non-monotonic Autoregressive Orderings with Variational Inference Description This package contains the source code implementation of the

![[NeurIPS 2021]](https://github.com/VITA-Group/DePT/raw/main/figs/dept_pipeline.png)

[NeurIPS 2021] "Delayed Propagation Transformer: A Universal Computation Engine towards Practical Control in Cyber-Physical Systems"

Delayed Propagation Transformer: A Universal Computation Engine towards Practical Control in Cyber-Physical Systems Introduction Multi-agent control i

GUI implementation of a Transformer chatbot. Suggests amicable responses to messages from friends.

conversation-helper GUI implementation of a Transformer chatbot. Suggests amicable responses to messages from friends. Screenshots Upcoming Release Im

A transformer model to predict pathogenic mutations

MutFormer MutFormer is an application of the BERT (Bidirectional Encoder Representations from Transformers) NLP (Natural Language Processing) model wi

A pre-trained model with multi-exit transformer architecture.

ElasticBERT This repository contains finetuning code and checkpoints for ElasticBERT. Towards Efficient NLP: A Standard Evaluation and A Strong Baseli

Data, model training, and evaluation code for "PubTables-1M: Towards a universal dataset and metrics for training and evaluating table extraction models".

PubTables-1M This repository contains training and evaluation code for the paper "PubTables-1M: Towards a universal dataset and metrics for training a

My first Minecraft CPU. Created in collaboration with Peer Carnes as a final project in CS 281: Architecture and Assembly at the University of Puget Sound

Minecraft CPU This is my first ever Minecraft CPU, created in collaboration with Peer Carnes. We created a custom assembly language, including an asse

ElasticBERT: A pre-trained model with multi-exit transformer architecture.

This repository contains finetuning code and checkpoints for ElasticBERT. Towards Efficient NLP: A Standard Evaluation and A Strong Baseli

Implementation of UNET architecture for Image Segmentation.

Semantic Segmentation using UNET This is the implementation of UNET on Carvana Image Masking Kaggle Challenge About the Dataset This dataset contains

Machine Translation Implement By Bi-GRU And Transformer

Seq2Seq Translation Implement By Bidirectional GRU And Transformer In Pytorch Before You Run The Code You should download the data through the link be

NAS-FCOS: Fast Neural Architecture Search for Object Detection (CVPR 2020)

NAS-FCOS: Fast Neural Architecture Search for Object Detection This project hosts the train and inference code with pretrained model for implementing

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrai

Implementation of ConvMixer-Patches Are All You Need? in TensorFlow and Keras

Patches Are All You Need? - ConvMixer ConvMixer, an extremely simple model that is similar in spirit to the ViT and the even-more-basic MLP-Mixer in t

Multivariate Time Series Transformer, public version

Multivariate Time Series Transformer Framework This code corresponds to the paper: George Zerveas et al. A Transformer-based Framework for Multivariat

Test-Time Personalization with a Transformer for Human Pose Estimation, NeurIPS 2021

Transforming Self-Supervision in Test Time for Personalizing Human Pose Estimation This is an official implementation of the NeurIPS 2021 paper: Trans

Implementation of Enformer, Deepmind's attention network for predicting gene expression, in Pytorch

Enformer - Pytorch (wip) Implementation of Enformer, Deepmind's attention network for predicting gene expression, in Pytorch. The original tensorflow

Video Background Music Generation with Controllable Music Transformer (ACM MM 2021 Oral)

CMT Code for paper Video Background Music Generation with Controllable Music Transformer (ACM MM 2021 Best Paper Award) [Paper] [Site] Directory Struc

Spatial-Temporal Transformer for Dynamic Scene Graph Generation, ICCV2021

Spatial-Temporal Transformer for Dynamic Scene Graph Generation Pytorch Implementation of our paper Spatial-Temporal Transformer for Dynamic Scene Gra

SOFT: Softmax-free Transformer with Linear Complexity, NeurIPS 2021 Spotlight

SOFT: Softmax-free Transformer with Linear Complexity SOFT: Softmax-free Transformer with Linear Complexity, Jiachen Lu, Jinghan Yao, Junge Zhang, Xia

iot-dashboard: Fully integrated architecture platform with a dashboard for Logistics Monitoring, Internet of Things.

Fully integrated architecture platform with a dashboard for Logistics Monitoring, Internet of Things. Written in Python. Flask applicati

The official implementation of paper Siamese Transformer Pyramid Networks for Real-Time UAV Tracking, accepted by WACV22

SiamTPN Introduction This is the official implementation of the SiamTPN (WACV2022). The tracker intergrates pyramid feature network and transformer in

A lightweight library to compare different PyTorch implementations of the same network architecture.

TorchBug is a lightweight library designed to compare two PyTorch implementations of the same network architecture. It allows you to count, and compar

Huggingface transformers for discord

disformers Huggingface transformers for discord base source butyr/huggingface-transformer-chatbots install pip install -U disformers example see examp

Source code of our BMVC 2021 paper: AniFormer: Data-driven 3D Animation with Transformer

AniFormer This is the PyTorch implementation of our BMVC 2021 paper AniFormer: Data-driven 3D Animation with Transformer. Haoyu Chen, Hao Tang, Nicu S

Unofficial implementation of MUSIQ (Multi-Scale Image Quality Transformer)

MUSIQ: Multi-Scale Image Quality Transformer Unofficial pytorch implementation of the paper "MUSIQ: Multi-Scale Image Quality Transformer" (paper link