1192 Repositories

Python Models-Playground Libraries

Code and pre-trained models for MultiMAE: Multi-modal Multi-task Masked Autoencoders

MultiMAE: Multi-modal Multi-task Masked Autoencoders Roman Bachmann*, David Mizrahi*, Andrei Atanov, Amir Zamir Website | arXiv | BibTeX Official PyTo

BDDM: Bilateral Denoising Diffusion Models for Fast and High-Quality Speech Synthesis

Bilateral Denoising Diffusion Models (BDDMs) This is the official PyTorch implementation of the following paper: BDDM: BILATERAL DENOISING DIFFUSION M

I will implement Fastai in each projects present in this repository.

DEEP LEARNING FOR CODERS WITH FASTAI AND PYTORCH The repository contains a list of the projects which I have worked on while reading the book Deep Lea

Entity Disambiguation as text extraction (ACL 2022)

ExtEnD: Extractive Entity Disambiguation This repository contains the code of ExtEnD: Extractive Entity Disambiguation, a novel approach to Entity Dis

ACL'22: Structured Pruning Learns Compact and Accurate Models

☕ CoFiPruning: Structured Pruning Learns Compact and Accurate Models This repository contains the code and pruned models for our ACL'22 paper Structur

![[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links](https://github.com/michiyasunaga/LinkBERT/raw/main/figs/overview.png)

[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links

LinkBERT: A Knowledgeable Language Model Pretrained with Document Links This repo provides the model, code & data of our paper: LinkBERT: Pretraining

Lightning ⚡️ fast forecasting with statistical and econometric models.

Nixtla Statistical ⚡️ Forecast Lightning fast forecasting with statistical and econometric models StatsForecast offers a collection of widely used uni

HugsVision is a easy to use huggingface wrapper for state-of-the-art computer vision

HugsVision is an open-source and easy to use all-in-one huggingface wrapper for computer vision. The goal is to create a fast, flexible and user-frien

An easy-to-use framework for BERT models, with trainers, various NLP tasks and detailed annonations

FantasyBert English | 中文 Introduction An easy-to-use framework for BERT models, with trainers, various NLP tasks and detailed annonations. You can imp

RITA is a family of autoregressive protein models, developed by LightOn in collaboration with the OATML group at Oxford and the Debora Marks Lab at Harvard.

RITA: a Study on Scaling Up Generative Protein Sequence Models RITA is a family of autoregressive protein models, developed by a collaboration of Ligh

Official repository for the paper "Self-Supervised Models are Continual Learners" (CVPR 2022)

Self-Supervised Models are Continual Learners This is the official repository for the paper: Self-Supervised Models are Continual Learners Enrico Fini

SeqTR: A Simple yet Universal Network for Visual Grounding

SeqTR This is the official implementation of SeqTR: A Simple yet Universal Network for Visual Grounding, which simplifies and unifies the modelling fo

Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch

CoCa - Pytorch Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch. They were able to elegantly fit in contras

Unified API to facilitate usage of pre-trained "perceptor" models, a la CLIP

mmc installation git clone https://github.com/dmarx/Multi-Modal-Comparators cd 'Multi-Modal-Comparators' pip install poetry poetry build pip install d

Language Models Can See: Plugging Visual Controls in Text Generation

Language Models Can See: Plugging Visual Controls in Text Generation Authors: Yixuan Su, Tian Lan, Yahui Liu, Fangyu Liu, Dani Yogatama, Yan Wang, Lin

Convert scikit-learn models to PyTorch modules

sk2torch sk2torch converts scikit-learn models into PyTorch modules that can be tuned with backpropagation and even compiled as TorchScript. Problems

SentimentArcs: a large ensemble of dozens of sentiment analysis models to analyze emotion in text over time

SentimentArcs - Emotion in Text An end-to-end pipeline based on Jupyter notebooks to detect, extract, process and anlayze emotion over time in text. E

Code for intrusion detection system (IDS) development using CNN models and transfer learning

Intrusion-Detection-System-Using-CNN-and-Transfer-Learning This is the code for the paper entitled "A Transfer Learning and Optimized CNN Based Intrus

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

Implementation of GeoDiff: a Geometric Diffusion Model for Molecular Conformation Generation (ICLR 2022).

GeoDiff: a Geometric Diffusion Model for Molecular Conformation Generation [OpenReview] [arXiv] [Code] The official implementation of GeoDiff: A Geome

A Persian Image Captioning model based on Vision Encoder Decoder Models of the transformers🤗.

Persian-Image-Captioning We fine-tuning the Vision Encoder Decoder Model for the task of image captioning on the coco-flickr-farsi dataset. The implem

An official repository for tutorials of Probabilistic Modelling and Reasoning (2021/2022) - a University of Edinburgh master's course.

PMR computer tutorials on HMMs (2021-2022) This is a repository for computer tutorials of Probabilistic Modelling and Reasoning (2021/2022) - a Univer

Guide to using pre-trained large language models of source code

Large Models of Source Code I occasionally train and publicly release large neural language models on programs, including PolyCoder. Here, I describe

CLIP (Contrastive Language–Image Pre-training) for Italian

Italian CLIP CLIP (Radford et al., 2021) is a multimodal model that can learn to represent images and text jointly in the same space. In this project,

Official code for the CVPR 2022 (oral) paper "Extracting Triangular 3D Models, Materials, and Lighting From Images".

nvdiffrec Joint optimization of topology, materials and lighting from multi-view image observations as described in the paper Extracting Triangular 3D

Includes PyTorch - Keras model porting code for ConvNeXt family of models with fine-tuning and inference notebooks.

ConvNeXt-TF This repository provides TensorFlow / Keras implementations of different ConvNeXt [1] variants. It also provides the TensorFlow / Keras mo

An ever-growing playground of notebooks showcasing CLIP's impressive zero-shot capabilities.

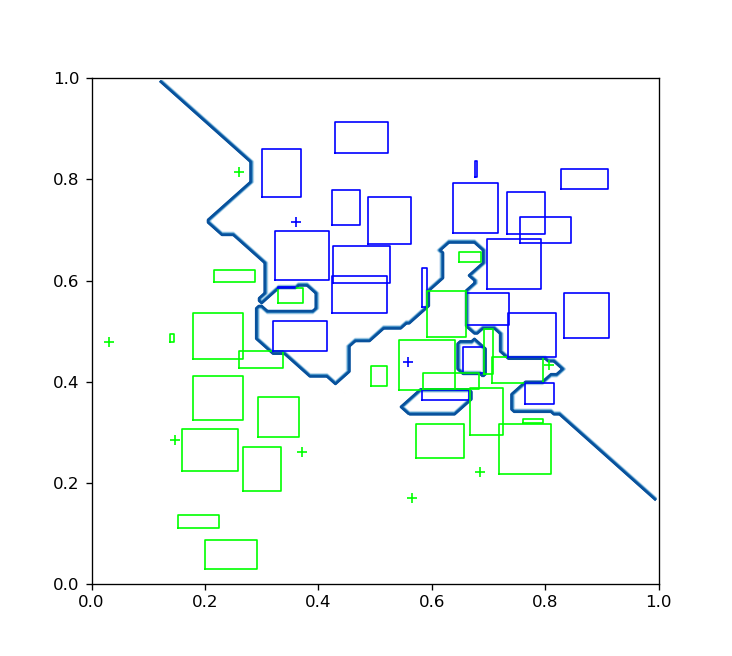

Playground for CLIP-like models Demo Colab Link GradCAM Visualization Naive Zero-shot Detection Smarter Zero-shot Detection Captcha Solver Changelog 2

Repo for the Tutorials of Day1-Day3 of the Nordic Probabilistic AI School 2021 (https://probabilistic.ai/)

ProbAI 2021 - Probabilistic Programming and Variational Inference Tutorial with Pryo Day 1 (June 14) Slides Notebook: students_PPLs_Intro Notebook: so

Fast SHAP value computation for interpreting tree-based models

FastTreeSHAP FastTreeSHAP package is built based on the paper Fast TreeSHAP: Accelerating SHAP Value Computation for Trees published in NeurIPS 2021 X

.png)

Contextual Attention Network: Transformer Meets U-Net

Contextual Attention Network: Transformer Meets U-Net Contexual attention network for medical image segmentation with state of the art results on skin

Package towards building Explainable Forecasting and Nowcasting Models with State-of-the-art Deep Neural Networks and Dynamic Factor Model on Time Series data sets with single line of code. Also, provides utilify facility for time-series signal similarities matching, and removing noise from timeseries signals.

DeepXF: Explainable Forecasting and Nowcasting with State-of-the-art Deep Neural Networks and Dynamic Factor Model Also, verify TS signal similarities

🚀 RocketQA, dense retrieval for information retrieval and question answering, including both Chinese and English state-of-the-art models.

In recent years, the dense retrievers based on pre-trained language models have achieved remarkable progress. To facilitate more developers using cutt

SciFive: a text-text transformer model for biomedical literature

SciFive SciFive provided a Text-Text framework for biomedical language and natural language in NLP. Under the T5's framework and desrbibed in the pape

GARCH and Multivariate LSTM forecasting models for Bitcoin realized volatility with potential applications in crypto options trading, hedging, portfolio management, and risk management

Bitcoin Realized Volatility Forecasting with GARCH and Multivariate LSTM Author: Chi Bui This Repository Repository Directory ├── README.md

TorchMultimodal is a PyTorch library for training state-of-the-art multimodal multi-task models at scale.

TorchMultimodal (Alpha Release) Introduction TorchMultimodal is a PyTorch library for training state-of-the-art multimodal multi-task models at scale.

UT-Sarulab MOS prediction system using SSL models

UTMOS: UTokyo-SaruLab MOS Prediction System Official implementation of "UTMOS: UTokyo-SaruLab System for VoiceMOS Challenge 2022" submitted to INTERSP

Implementation of Retrieval-Augmented Denoising Diffusion Probabilistic Models in Pytorch

Retrieval-Augmented Denoising Diffusion Probabilistic Models (wip) Implementation of Retrieval-Augmented Denoising Diffusion Probabilistic Models in P

ACL22 paper: Imputing Out-of-Vocabulary Embeddings with LOVE Makes Language Models Robust with Little Cost

Imputing Out-of-Vocabulary Embeddings with LOVE Makes Language Models Robust with Little Cost LOVE is accpeted by ACL22 main conference as a long pape

Pretrained models for Jax/Haiku; MobileNet, ResNet, VGG, Xception.

Pre-trained image classification models for Jax/Haiku Jax/Haiku Applications are deep learning models that are made available alongside pre-trained we

Very simple NCHW and NHWC conversion tool for ONNX. Change to the specified input order for each and every input OP. Also, change the channel order of RGB and BGR. Simple Channel Converter for ONNX.

scc4onnx Very simple NCHW and NHWC conversion tool for ONNX. Change to the specified input order for each and every input OP. Also, change the channel

SIGIR'22 paper: Axiomatically Regularized Pre-training for Ad hoc Search

Introduction This codebase contains source-code of the Python-based implementation (ARES) of our SIGIR 2022 paper. Chen, Jia, et al. "Axiomatically Re

Repository for fine-tuning Transformers 🤗 based seq2seq speech models in JAX/Flax.

Seq2Seq Speech in JAX A JAX/Flax repository for combining a pre-trained speech encoder model (e.g. Wav2Vec2, HuBERT, WavLM) with a pre-trained text de

Classification models 1D Zoo - Keras and TF.Keras

Classification models 1D Zoo - Keras and TF.Keras This repository contains 1D variants of popular CNN models for classification like ResNets, DenseNet

Simple tool to combine(merge) onnx models. Simple Network Combine Tool for ONNX.

snc4onnx Simple tool to combine(merge) onnx models. Simple Network Combine Tool for ONNX. https://github.com/PINTO0309/simple-onnx-processing-tools 1.

DeepGNN is a framework for training machine learning models on large scale graph data.

DeepGNN Overview DeepGNN is a framework for training machine learning models on large scale graph data. DeepGNN contains all the necessary features in

Aiming at the common training datsets split, spectrum preprocessing, wavelength select and calibration models algorithm involved in the spectral analysis process

Aiming at the common training datsets split, spectrum preprocessing, wavelength select and calibration models algorithm involved in the spectral analysis process, a complete algorithm library is established, which is named opensa (openspectrum analysis).

A very simple tool to rewrite parameters such as attributes and constants for OPs in ONNX models. Simple Attribute and Constant Modifier for ONNX.

sam4onnx A very simple tool to rewrite parameters such as attributes and constants for OPs in ONNX models. Simple Attribute and Constant Modifier for

Simple ONNX operation generator. Simple Operation Generator for ONNX.

sog4onnx Simple ONNX operation generator. Simple Operation Generator for ONNX. https://github.com/PINTO0309/simple-onnx-processing-tools Key concept V

Simple node deletion tool for onnx.

snd4onnx Simple node deletion tool for onnx. I only test very miscellaneous and limited patterns as a hobby. There are probably a large number of bugs

A library to inspect itermediate layers of PyTorch models.

A library to inspect itermediate layers of PyTorch models. Why? It's often the case that we want to inspect intermediate layers of a model without mod

Helping data scientists better understand their datasets and models in text classification. With love from ServiceNow.

Azimuth, an open-source dataset and error analysis tool for text classification, with love from ServiceNow. Overview Azimuth is an open source applica

The official code of LM-Debugger, an interactive tool for inspection and intervention in transformer-based language models.

LM-Debugger is an open-source interactive tool for inspection and intervention in transformer-based language models. This repository includes the code

A very simple tool for situations where optimization with onnx-simplifier would exceed the Protocol Buffers upper file size limit of 2GB, or simply to separate onnx files to any size you want.

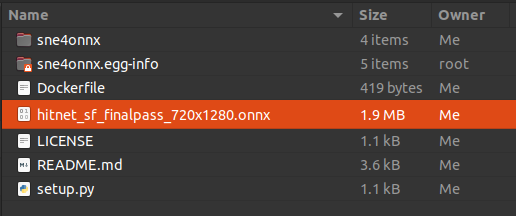

sne4onnx A very simple tool for situations where optimization with onnx-simplifier would exceed the Protocol Buffers upper file size limit of 2GB, or

This repository contains the data and code for the paper "Diverse Text Generation via Variational Encoder-Decoder Models with Gaussian Process Priors" (SPNLP@ACL2022)

GP-VAE This repository provides datasets and code for preprocessing, training and testing models for the paper: Diverse Text Generation via Variationa

This is an open source library implementing hyperbox-based machine learning algorithms

hyperbox-brain is a Python open source toolbox implementing hyperbox-based machine learning algorithms built on top of scikit-learn and is distributed

scAR (single-cell Ambient Remover) is a package for data denoising in single-cell omics.

scAR scAR (single cell Ambient Remover) is a package for denoising multiple single cell omics data. It can be used for multiple tasks, such as, sgRNA

PyTorch Implementation of "Bridging Pre-trained Language Models and Hand-crafted Features for Unsupervised POS Tagging" (Findings of ACL 2022)

Feature_CRF_AE Feature_CRF_AE provides a implementation of Bridging Pre-trained Language Models and Hand-crafted Features for Unsupervised POS Tagging

In this project, RandomOverSampler and SMOTE algorithms were used to perform oversampling, ClusterCentroids algorithm was used to undersampling, SMOTEENN algorithm was applied as a combinatorial approach of over- and undersampling of credit card credit dataset from LendingClub. Machine learning models - BalancedRandomForestClassifier and EasyEnsembleClassifier were used to predict credit risk.

Overview of Credit Card Analysis In this project, RandomOverSampler and SMOTE algorithms were used to perform oversampling, ClusterCentroids algorithm

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

PyTorch Implementation of DiffGAN-TTS: High-Fidelity and Efficient Text-to-Speech with Denoising Diffusion GANs

DiffGAN-TTS - PyTorch Implementation PyTorch implementation of DiffGAN-TTS: High

Neural-Machine-Translation - Implementation of revolutionary machine translation models

Neural Machine Translation Framework: PyTorch Repository contaning my implementa

Covid19-Forecasting - An interactive website that tracks, models and predicts COVID-19 Cases

Covid-Tracker This is an interactive website that tracks, models and predicts CO

Static-test - A playground to play with ideas related to testing the comparability of the code

Static test playground ⚠️ The code is just an experiment. Compiles and runs on U

Federated Learning - Including common test models for federated learning, like CNN, Resnet18 and lstm, controlled by different parser

Federated_Learning 💻 This projest include common test models for federated lear

Using PyTorch Perform intent classification using three different models to see which one is better for this task

Using PyTorch Perform intent classification using three different models to see which one is better for this task

icepickle is to allow a safe way to serialize and deserialize linear scikit-learn models

icepickle It's a cooler way to store simple linear models. The goal of icepickle is to allow a safe way to serialize and deserialize linear scikit-lea

PyTorch implementation for the paper Pseudo Numerical Methods for Diffusion Models on Manifolds

Pseudo Numerical Methods for Diffusion Models on Manifolds (PNDM) This repo is the official PyTorch implementation for the paper Pseudo Numerical Meth

Code To Tune or Not To Tune? Zero-shot Models for Legal Case Entailment.

COLIEE 2021 - task 2: Legal Case Entailment This repository contains the code to reproduce NeuralMind's submissions to COLIEE 2021 presented in the pa

Source code of our work: "Benchmarking Deep Models for Salient Object Detection"

SALOD Source code of our work: "Benchmarking Deep Models for Salient Object Detection". In this works, we propose a new benchmark for SALient Object D

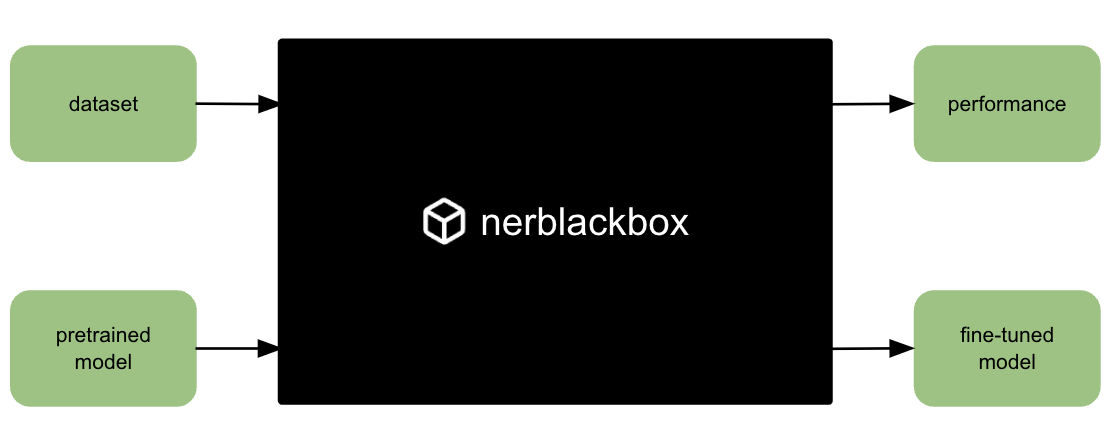

A python package to fine-tune transformer-based models for named entity recognition (NER).

nerblackbox A python package to fine-tune transformer-based language models for named entity recognition (NER). Resources Source Code: https://github.

This is the replication package for paper submission: Towards Training Reproducible Deep Learning Models.

This is the replication package for paper submission: Towards Training Reproducible Deep Learning Models.

Convert BART models to ONNX with quantization. 3X reduction in size, and upto 3X boost in inference speed

fast-Bart Reduction of BART model size by 3X, and boost in inference speed up to 3X BART implementation of the fastT5 library (https://github.com/Ki6a

KoRean based ELECTRA pre-trained models (KR-ELECTRA) for Tensorflow and PyTorch

KoRean based ELECTRA (KR-ELECTRA) This is a release of a Korean-specific ELECTRA model with comparable or better performances developed by the Computa

BASH - Biomechanical Animated Skinned Human

We developed a method animating a statistical 3D human model for biomechanical analysis to increase accessibility for non-experts, like patients, athletes, or designers.

JFB: Jacobian-Free Backpropagation for Implicit Models

JFB: Jacobian-Free Backpropagation for Implicit Models

Datasets and pretrained Models for StyleGAN3 ...

Datasets and pretrained Models for StyleGAN3 ... Dear arfiticial friend, this is a collection of artistic datasets and models that we have put togethe

SGPT: Multi-billion parameter models for semantic search

SGPT: Multi-billion parameter models for semantic search This repository contains code, results and pre-trained models for the paper SGPT: Multi-billi

Blender 3.1 Alpha (and later) PLY importer that correctly loads point clouds (and all PLY models as point clouds)

import-ply-as-verts Blender 3.1 Alpha (and later) PLY importer that correctly loads point clouds (and all PLY models as point clouds) Latest News Mand

Pretrained Japanese BERT models

Pretrained Japanese BERT models This is a repository of pretrained Japanese BERT models. The models are available in Transformers by Hugging Face. Mod

The source code for Generating Training Data with Language Models: Towards Zero-Shot Language Understanding.

SuperGen The source code for Generating Training Data with Language Models: Towards Zero-Shot Language Understanding. Requirements Before running, you

Explore extreme compression for pre-trained language models

Code for paper "Exploring extreme parameter compression for pre-trained language models ICLR2022"

The pyrelational package offers a flexible workflow to enable active learning with as little change to the models and datasets as possible

pyrelational is a python active learning library developed by Relation Therapeutics for rapidly implementing active learning pipelines from data management, model development (and Bayesian approximation), to creating novel active learning strategies.

A demo project to elaborate how Machine Learn Models are deployed on production using Flask API

This is a salary prediction website developed with the help of machine learning, this makes prediction of salary on basis of few parameters like interview score, experience test score.

Posterior temperature optimized Bayesian models for inverse problems in medical imaging

Posterior temperature optimized Bayesian models for inverse problems in medical imaging Max-Heinrich Laves*, Malte Tölle*, Alexander Schlaefer, Sandy

Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection"

Hierarchical Token Semantic Audio Transformer Introduction The Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound

Source code for "Understanding Knowledge Integration in Language Models with Graph Convolutions"

Graph Convolution Simulator (GCS) Source code for "Understanding Knowledge Integration in Language Models with Graph Convolutions" Requirements: PyTor

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation This reposi

Event queue (Equeue) dialect is an MLIR Dialect that models concurrent devices in terms of control and structure.

Event Queue Dialect Event queue (Equeue) dialect is an MLIR Dialect that models concurrent devices in terms of control and structure. Motivation The m

Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Source code for EquiDock: Independent SE(3)-Equivariant Models for End-to-End Rigid Protein Docking (ICLR 2022)

Source code for EquiDock: Independent SE(3)-Equivariant Models for End-to-End Rigid Protein Docking (ICLR 2022) Please cite "Independent SE(3)-Equivar

This repository provides an efficient PyTorch-based library for training deep models.

An Efficient Library for Training Deep Models This repository provides an efficient PyTorch-based library for training deep models. Installation Make

Trafffic prediction analysis using hybrid models - Machine Learning

Hybrid Machine learning Model Clone the Repository Create a new Directory as assests and download the model from the below link Model Link To Start th

Tensorflow2 Keras-based Semantic Segmentation Models Implementation

Tensorflow2 Keras-based Semantic Segmentation Models Implementation

NeuralForecast is a Python library for time series forecasting with deep learning models

NeuralForecast is a Python library for time series forecasting with deep learning models. It includes benchmark datasets, data-loading utilities, evaluation functions, statistical tests, univariate model benchmarks and SOTA models implemented in PyTorch and PyTorchLightning.

Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5

NLP-Summarizer Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5 This project aimed to provide in

A U-Net combined with a variational auto-encoder that is able to learn conditional distributions over semantic segmentations.

Probabilistic U-Net + **Update** + An improved Model (the Hierarchical Probabilistic U-Net) + LIDC crops is now available. See below. Re-implementatio

/samples.jpg)

A collection of pre-trained StyleGAN2 models trained on different datasets at different resolution.

Awesome Pretrained StyleGAN2 A collection of pre-trained StyleGAN2 models trained on different datasets at different resolution. Note the readme is a

A curated list of Generative Deep Art projects, tools, artworks, and models

Generative Deep Art A curated list of Generative Deep Art projects, tools, artworks, and models Inbox Get started with making AI art in 2022 – deeplea