7236 Repositories

Python Training-a-deep-learning-model-on-the-noisy-CIFAR-dataset Libraries

Negative Sample Matters: A Renaissance of Metric Learning for Temporal Grounding

2D-TAN (Optimized) Introduction This is an optimized re-implementation repository for AAAI'2020 paper: Learning 2D Temporal Localization Networks for

Dust model dichotomous performance analysis

Dust-model-dichotomous-performance-analysis Using a collated dataset of 90,000 dust point source observations from 9 drylands studies from around the

UniSpeech - Large Scale Self-Supervised Learning for Speech

UniSpeech The family of UniSpeech: WavLM (arXiv): WavLM: Large-Scale Self-Supervised Pre-training for Full Stack Speech Processing UniSpeech (ICML 202

Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition

SEW (Squeezed and Efficient Wav2vec) The repo contains the code of the paper "Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speec

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

Malaya-Speech is a Speech-Toolkit library for bahasa Malaysia, powered by Deep Learning Tensorflow.

Malaya-Speech is a Speech-Toolkit library for bahasa Malaysia, powered by Deep Learning Tensorflow. Documentation Proper documentation is available at

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

Code for CodeT5: a new code-aware pre-trained encoder-decoder model.

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation This is the official PyTorch implementation

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Repo for Enhanced Seq2Seq Autoencoder via Contrastive Learning for Abstractive Text Summarization

ESACL: Enhanced Seq2Seq Autoencoder via Contrastive Learning for AbstractiveText Summarization This repo is for our paper "Enhanced Seq2Seq Autoencode

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite

Mesh TensorFlow: Model Parallelism Made Easier

Mesh TensorFlow - Model Parallelism Made Easier Introduction Mesh TensorFlow (mtf) is a language for distributed deep learning, capable of specifying

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models

PEGASUS library Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models, or PEGASUS, uses self-supervised

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Hiring We are hiring at all levels (including FTE researchers and interns)! If you are interested in working with us on NLP and large-scale pre-traine

Unsupervised Language Model Pre-training for French

FlauBERT and FLUE FlauBERT is a French BERT trained on a very large and heterogeneous French corpus. Models of different sizes are trained using the n

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

Code for EMNLP20 paper: "ProphetNet: Predicting Future N-gram for Sequence-to-Sequence Pre-training"

ProphetNet-X This repo provides the code for reproducing the experiments in ProphetNet. In the paper, we propose a new pre-trained language model call

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

CodeBERT: A Pre-Trained Model for Programming and Natural Languages.

CodeBERT This repo provides the code for reproducing the experiments in CodeBERT: A Pre-Trained Model for Programming and Natural Languages. CodeBERT

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

ELECTRA Introduction ELECTRA is a method for self-supervised language representation learning. It can be used to pre-train transformer networks using

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

ALBERT ***************New March 28, 2020 *************** Add a colab tutorial to run fine-tuning for GLUE datasets. ***************New January 7, 2020

Library of deep learning models and datasets designed to make deep learning more accessible and accelerate ML research.

Tensor2Tensor Tensor2Tensor, or T2T for short, is a library of deep learning models and datasets designed to make deep learning more accessible and ac

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

This is the repository for the NeurIPS-21 paper [Contrastive Graph Poisson Networks: Semi-Supervised Learning with Extremely Limited Labels].

CGPN This is the repository for the NeurIPS-21 paper [Contrastive Graph Poisson Networks: Semi-Supervised Learning with Extremely Limited Labels]. Req

Infrastructure template and Jupyter notebooks for running RoseTTAFold on AWS Batch.

AWS RoseTTAFold Infrastructure template and Jupyter notebooks for running RoseTTAFold on AWS Batch. Overview Proteins are large biomolecules that play

This project impelemented for midterm of the Machine Learning #Zoomcamp #Alexey Grigorev

MLProject_01 This project impelemented for midterm of the Machine Learning #Zoomcamp #Alexey Grigorev Context Dataset English question data set file F

A curated list of long-tailed recognition resources.

Awesome Long-tailed Recognition A curated list of long-tailed recognition and related resources. Please feel free to pull requests or open an issue to

Pytorch implementation of the paper "Class-Balanced Loss Based on Effective Number of Samples"

Class-balanced-loss-pytorch Pytorch implementation of the paper Class-Balanced Loss Based on Effective Number of Samples presented at CVPR'19. Yin Cui

Pytorch codes for Feature Transfer Learning for Face Recognition with Under-Represented Data

FTLNet_Pytorch Pytorch codes for Feature Transfer Learning for Face Recognition with Under-Represented Data 1. Introduction This repo is an unofficial

Reimplementation of NeurIPS'19: "Meta-Weight-Net: Learning an Explicit Mapping For Sample Weighting" by Shu et al.

[Re] Meta-Weight-Net: Learning an Explicit Mapping For Sample Weighting Reimplementation of NeurIPS'19: "Meta-Weight-Net: Learning an Explicit Mapping

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning. CVPR 2018

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning Tensorflow code and models for the paper: Large Scale Fine-Grained Categ

Code for paper "Learning to Reweight Examples for Robust Deep Learning"

learning-to-reweight-examples Code for paper Learning to Reweight Examples for Robust Deep Learning. [arxiv] Environment We tested the code on tensorf

PyTorch Implementation of the paper Learning to Reweight Examples for Robust Deep Learning

Learning to Reweight Examples for Robust Deep Learning Unofficial PyTorch implementation of Learning to Reweight Examples for Robust Deep Learning. Th

A Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Training Data》

RangeLoss Pytorch This is a Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Trai

Adjust Decision Boundary for Class Imbalanced Learning

Adjusting Decision Boundary for Class Imbalanced Learning This repository is the official PyTorch implementation of WVN-RS, introduced in Adjusting De

[WACV21] Code for our paper: Samuel, Atzmon and Chechik, "From Generalized zero-shot learning to long-tail with class descriptors"

DRAGON: From Generalized zero-shot learning to long-tail with class descriptors Paper Project Website Video Overview DRAGON learns to correct the bias

Deep learning for Engineers - Physics Informed Deep Learning

SciANN: Neural Networks for Scientific Computations SciANN is a Keras wrapper for scientific computations and physics-informed deep learning. New to S

Efficient and Scalable Physics-Informed Deep Learning and Scientific Machine Learning on top of Tensorflow for multi-worker distributed computing

Notice: Support for Python 3.6 will be dropped in v.0.2.1, please plan accordingly! Efficient and Scalable Physics-Informed Deep Learning Collocation-

PyDEns is a framework for solving Ordinary and Partial Differential Equations (ODEs & PDEs) using neural networks

PyDEns PyDEns is a framework for solving Ordinary and Partial Differential Equations (ODEs & PDEs) using neural networks. With PyDEns one can solve PD

Physics-Informed Neural Networks (PINN) and Deep BSDE Solvers of Differential Equations for Scientific Machine Learning (SciML) accelerated simulation

NeuralPDE NeuralPDE.jl is a solver package which consists of neural network solvers for partial differential equations using scientific machine learni

Learn the Deep Learning for Computer Vision in three steps: theory from base to SotA, code in PyTorch, and space-repetition with Anki

DeepCourse: Deep Learning for Computer Vision arthurdouillard.com/deepcourse/ This is a course I'm giving to the French engineering school EPITA each

Continual World is a benchmark for continual reinforcement learning

Continual World Continual World is a benchmark for continual reinforcement learning. It contains realistic robotic tasks which come from MetaWorld. Th

Deep Learning Theory

Deep Learning Theory 整理了一些深度学习的理论相关内容,持续更新。 Overview Recent advances in deep learning theory 总结了目前深度学习理论研究的六个方向的一些结果,概述型,没做深入探讨(2021)。 1.1 complexity

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

The implementation of Learning Instance and Task-Aware Dynamic Kernels for Few Shot Learning

INSTA: Learning Instance and Task-Aware Dynamic Kernels for Few Shot Learning This repository provides the implementation and demo of Learning Instanc

Implementation of Research Paper "Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation"

Zero-DCE and Zero-DCE++(Lite architechture for Mobile and edge Devices) Papers Abstract The paper presents a novel method, Zero-Reference Deep Curve E

Memory-Augmented Model Predictive Control

Memory-Augmented Model Predictive Control This repository hosts the source code for the journal article "Composing MPC with LQR and Neural Networks fo

Conjugated Discrete Distributions for Distributional Reinforcement Learning (C2D)

Conjugated Discrete Distributions for Distributional Reinforcement Learning (C2D) Code & Data Appendix for Conjugated Discrete Distributions for Distr

An implementation of quantum convolutional neural network with MindQuantum. Huawei, classifying MNIST dataset

关于实现的一点说明 山东大学 2020级 苏博南 www.subonan.com 文件说明 tools.py 这里面主要有两个函数: resize(a, lenb) 这其实是我找同学写的一个小算法hhh。给出一个$28\times 28$的方阵a,返回一个$lenb\times lenb$的方阵。因

MARS: Learning Modality-Agnostic Representation for Scalable Cross-media Retrieva

Introduction This is the source code of our TCSVT 2021 paper "MARS: Learning Modality-Agnostic Representation for Scalable Cross-media Retrieval". Ple

Using CNN to mimic the driver based on training data from Torcs

Behavioural-Cloning-in-autonomous-driving Using CNN to mimic the driver based on training data from Torcs. Approach First, the data was collected from

A program that uses an API and a AI model to get info of sotcks

Stock-Market-AI-Analysis I dont mind anyone using this code but please give me credit A program that uses an API and a AI model to get info of stocks

Manipulation OpenAI Gym environments to simulate robots at the STARS lab

Manipulator Learning This repository contains a set of manipulation environments that are compatible with OpenAI Gym and simulated in pybullet. In par

SeqFormer: a Frustratingly Simple Model for Video Instance Segmentation

SeqFormer: a Frustratingly Simple Model for Video Instance Segmentation SeqFormer SeqFormer: a Frustratingly Simple Model for Video Instance Segmentat

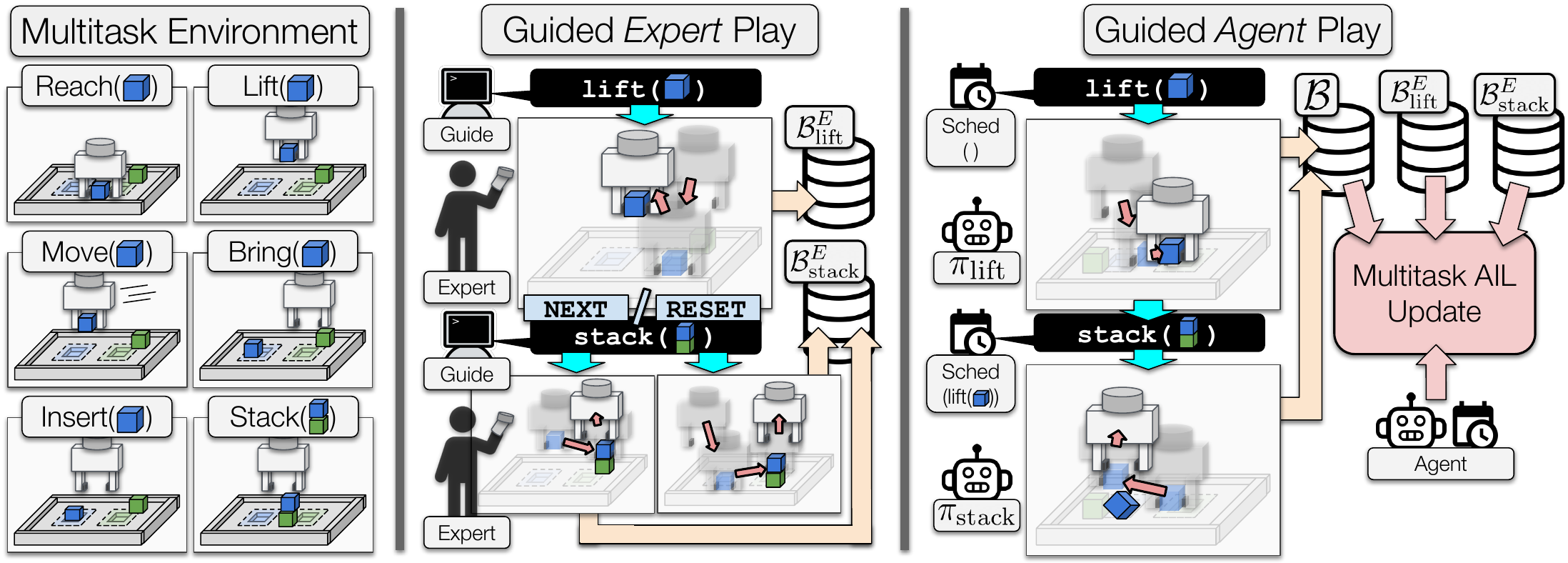

Learning from Guided Play: A Scheduled Hierarchical Approach for Improving Exploration in Adversarial Imitation Learning Source Code

Learning from Guided Play: A Scheduled Hierarchical Approach for Improving Exploration in Adversarial Imitation Learning Source Code

In generative deep geometry learning, we often get many obj files remain to be rendered

a python prompt cli script for blender batch render In deep generative geometry learning, we always get many .obj files to be rendered. Our rendered i

Text Classification in Turkish Texts with Bert

You can watch the details of the project on my youtube channel Project Interface Project Second Interface Goal= Correctly guessing the classification

A foreign language learning aid using a neural network to predict probability of translating foreign words

Langy Langy is a reading-focused foreign language learning aid orientated towards young children. Reading is an activity that every child knows. It is

The projects lets you extract glossary words and their definitions from a given piece of text automatically using NLP techniques

Unsupervised technique to Glossary and Definition Extraction Code Files GPT2-DefinitionModel.ipynb - GPT-2 model for definition generation. Data_Gener

A Python description of the Kinematic Bicycle Model with an animated example.

Kinematic Bicycle Model Abstract A python library for the Kinematic Bicycle model. The Kinematic Bicycle is a compromise between the non-linear and li

Predict an emoji that is associated with a text

Sentiment Analysis Sentiment analysis in computational linguistics is a general term for techniques that quantify sentiment or mood in a text. Can you

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm. It contains the code to reproduce the results presented in the original paper: https://arxiv.org/abs/2112.03670

Telegram chatbot created with deep learning model (LSTM) and telebot library.

Telegram chatbot Telegram chatbot created with deep learning model (LSTM) and telebot library. Description This program will allow you to create very

A PaddlePaddle version of Neural Renderer, refer to its PyTorch version

Neural 3D Mesh Renderer in PadddlePaddle A PaddlePaddle version of Neural Renderer, refer to its PyTorch version Install Run: pip install neural-rende

Robbing the FED: Directly Obtaining Private Data in Federated Learning with Modified Models

Robbing the FED: Directly Obtaining Private Data in Federated Learning with Modified Models This repo contains a barebones implementation for the atta

An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022

Dual Correlation Reduction Network An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022. Any

A toolkit for document-level event extraction, containing some SOTA model implementations

❤️ A Toolkit for Document-level Event Extraction with & without Triggers Hi, there 👋 . Thanks for your stay in this repo. This project aims at buildi

Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021

Introduction Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021 Prerequisites Python 3.8 and conda, get Conda CUDA 11

A 1.3B text-to-image generation model trained on 14 million image-text pairs

minDALL-E on Conceptual Captions minDALL-E, named after minGPT, is a 1.3B text-to-image generation model trained on 14 million image-text pairs for no

A simple program for training and testing vit

Vit This is a simple program for training and testing vit. Key requirements: torch, torchvision and timm. Dataset I put 5 categories of the cub classi

Joint learning of images and text via maximization of mutual information

mutual_info_img_txt Joint learning of images and text via maximization of mutual information. This repository incorporates the algorithms presented in

Using Bert as the backbone model for lime, designed for NLP task explanation (sentence pair text classification task)

Lime Comparing deep contextualized model for sentences highlighting task. In addition, take the classic explanation model "LIME" with bert-base model

SPEAR: Semi suPErvised dAta progRamming

Semi-Supervised Data Programming for Data Efficient Machine Learning SPEAR is a library for data programming with semi-supervision. The package implem

Multiwavelets-based operator model

Multiwavelet model for Operator maps Gaurav Gupta, Xiongye Xiao, and Paul Bogdan Multiwavelet-based Operator Learning for Differential Equations In Ne

PantheonRL is a package for training and testing multi-agent reinforcement learning environments.

PantheonRL is a package for training and testing multi-agent reinforcement learning environments. PantheonRL supports cross-play, fine-tuning, ad-hoc coordination, and more.

Library to enable Bayesian active learning in your research or labeling work.

Bayesian Active Learning (BaaL) BaaL is an active learning library developed at ElementAI. This repository contains techniques and reusable components

Experiments on continual learning from a stream of pretrained models.

Ex-model CL Ex-model continual learning is a setting where a stream of experts (i.e. model's parameters) is available and a CL model learns from them

![[AAAI 2022] Sparse Structure Learning via Graph Neural Networks for Inductive Document Classification](https://github.com/qkrdmsghk/TextSSL/raw/master/TextSSL.png)

[AAAI 2022] Sparse Structure Learning via Graph Neural Networks for Inductive Document Classification

Sparse Structure Learning via Graph Neural Networks for inductive document classification Make graph dataset create co-occurrence graph for datasets.

GT China coal model

GT China coal model The full version of a China coal transport model with a very high spatial reslution. What it does The code works in a few steps: T

Official code repository for Continual Learning In Environments With Polynomial Mixing Times

Official code for Continual Learning In Environments With Polynomial Mixing Times Continual Learning in Environments with Polynomial Mixing Times This

Baseline powergrid model for NY

Baseline-powergrid-model-for-NY Table of Contents About The Project Built With Usage License Contact Acknowledgements About The Project As the urgency

Physical Anomalous Trajectory or Motion (PHANTOM) Dataset

Physical Anomalous Trajectory or Motion (PHANTOM) Dataset Description This dataset contains the six different classes as described in our paper[]. The

DeepDiffusion: Unsupervised Learning of Retrieval-adapted Representations via Diffusion-based Ranking on Latent Feature Manifold

DeepDiffusion Introduction This repository provides the code of the DeepDiffusion algorithm for unsupervised learning of retrieval-adapted representat

Official code base for the poster "On the use of Cortical Magnification and Saccades as Biological Proxies for Data Augmentation" published in NeurIPS 2021 Workshop (SVRHM)

Self-Supervised Learning (SimCLR) with Biological Plausible Image Augmentations Official code base for the poster "On the use of Cortical Magnificatio

ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab

AliceMind AliceMind: ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab This repository provides pre-trained encode

A high-performance distributed deep learning system targeting large-scale and automated distributed training.

HETU Documentation | Examples Hetu is a high-performance distributed deep learning system targeting trillions of parameters DL model training, develop

Sleep staging from ECG, assisted with EEG

Sleep_Staging_Knowledge Distillation This codebase implements knowledge distillation approach for ECG based sleep staging assisted by EEG based sleep

Type4Py: Deep Similarity Learning-Based Type Inference for Python

Type4Py: Deep Similarity Learning-Based Type Inference for Python This repository contains the implementation of Type4Py and instructions for re-produ

A GitHub action that suggests type annotations for Python using machine learning.

Typilus: Suggest Python Type Annotations A GitHub action that suggests type annotations for Python using machine learning. This action makes suggestio

Idea is to build a model which will take keywords as inputs and generate sentences as outputs.

keytotext Idea is to build a model which will take keywords as inputs and generate sentences as outputs. Potential use case can include: Marketing Sea

AI4Good project for detecting waste in the environment

Detect waste AI4Good project for detecting waste in environment. www.detectwaste.ml. Our latest results were published in Waste Management journal in