53 Repositories

Python benchmarking Libraries

Repo for "Benchmarking Robustness of 3D Point Cloud Recognition against Common Corruptions" https://arxiv.org/abs/2201.12296

Benchmarking Robustness of 3D Point Cloud Recognition against Common Corruptions This repo contains the dataset and code for the paper Benchmarking Ro

Evaluation and Benchmarking of Speech Super-resolution Methods

Speech Super-resolution Evaluation and Benchmarking What this repo do: A toolbox for the evaluation of speech super-resolution algorithms. Unify the e

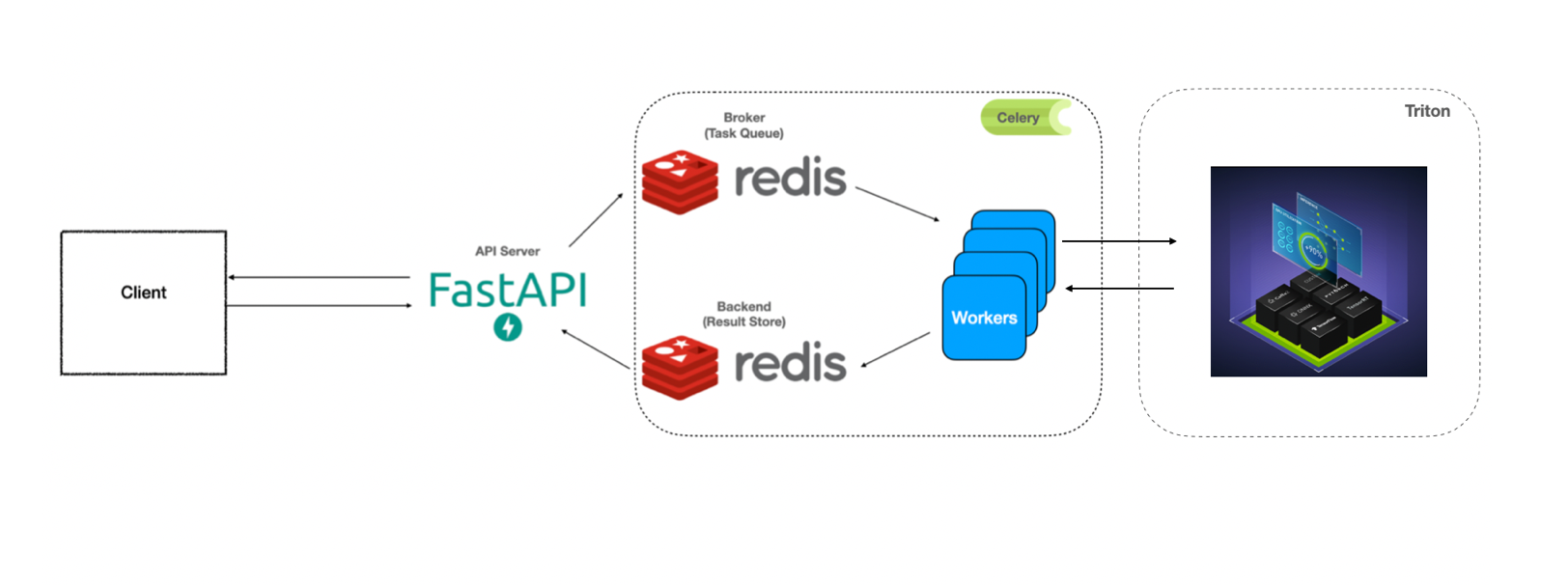

Simple example of FastAPI + Celery + Triton for benchmarking

You can see the previous work from: https://github.com/Curt-Park/producer-consumer-fastapi-celery https://github.com/Curt-Park/triton-inference-server

Source code of our work: "Benchmarking Deep Models for Salient Object Detection"

SALOD Source code of our work: "Benchmarking Deep Models for Salient Object Detection". In this works, we propose a new benchmark for SALient Object D

NATS-Bench: Benchmarking NAS Algorithms for Architecture Topology and Size

NATS-Bench: Benchmarking NAS Algorithms for Architecture Topology and Size Xuanyi Dong, Lu Liu, Katarzyna Musial, Bogdan Gabrys in IEEE Transactions o

What are the best Systems? New Perspectives on NLP Benchmarking

What are the best Systems? New Perspectives on NLP Benchmarking In Machine Learning, a benchmark refers to an ensemble of datasets associated with one

Benchmarking Pipeline for Prediction of Protein-Protein Interactions

B4PPI Benchmarking Pipeline for the Prediction of Protein-Protein Interactions How this benchmarking pipeline has been built, and how to use it, is de

Code for the paper "Benchmarking and Analyzing Point Cloud Classification under Corruptions"

ModelNet-C Code for the paper "Benchmarking and Analyzing Point Cloud Classification under Corruptions". For the latest updates, see: sites.google.com

A library for benchmarking, developing and deploying deep learning anomaly detection algorithms

A library for benchmarking, developing and deploying deep learning anomaly detection algorithms Key Features • Getting Started • Docs • License Introd

ColossalAI-Benchmark - Performance benchmarking with ColossalAI

Benchmark for Tuning Accuracy and Efficiency Overview The benchmark includes our

![[Pedestron] Generalizable Pedestrian Detection: The Elephant In The Room. @ CVPR2021](https://github.com/hasanirtiza/Pedestron/raw/master/gifs/1.gif)

[Pedestron] Generalizable Pedestrian Detection: The Elephant In The Room. @ CVPR2021

Pedestron Pedestron is a MMdetection based repository, that focuses on the advancement of research on pedestrian detection. We provide a list of detec

This repository contains datasets and baselines for benchmarking Chinese text recognition.

Benchmarking-Chinese-Text-Recognition This repository contains datasets and baselines for benchmarking Chinese text recognition. Please see the corres

Benchmark VAE - Library for Variational Autoencoder benchmarking

Documentation pythae This library implements some of the most common (Variational) Autoencoder models. In particular it provides the possibility to pe

Scaling and Benchmarking Self-Supervised Visual Representation Learning

FAIR Self-Supervision Benchmark is deprecated. Please see VISSL, a ground-up rewrite of benchmark in PyTorch. FAIR Self-Supervision Benchmark This cod

Location of public benchmarking; primarily final results

CSL_public_benchmark This repo is intended to provide a periodically-updated, public view into genome sequencing benchmarks managed by HudsonAlpha's C

WIP SAT benchmarking tooling, written with only my personal use in mind.

SAT Benchmarking Some early work in progress tooling for running benchmarks and keeping track of the results when working on SAT solvers and related t

Sweeter debugging and benchmarking Python programs.

Do you ever use print() or log() to debug your code? If so, ycecream, or y for short, will make printing debug information a lot sweeter. And on top o

A collection of benchmarking tools.

Benchmark Utilities About A collection of benchmarking tools. PYPI Package Table of Contents Using the library Installing and using the library Manual

A repository for benchmarking neural vocoders by their quality and speed.

License The majority of VocBench is licensed under CC-BY-NC, however portions of the project are available under separate license terms: Wavenet, Para

CSAW-M: An Ordinal Classification Dataset for Benchmarking Mammographic Masking of Cancer

CSAW-M This repository contains code for CSAW-M: An Ordinal Classification Dataset for Benchmarking Mammographic Masking of Cancer. Source code for tr

Molecular Sets (MOSES): A Benchmarking Platform for Molecular Generation Models

Molecular Sets (MOSES): A benchmarking platform for molecular generation models Deep generative models are rapidly becoming popular for the discovery

A dual benchmarking study of visual forgery and visual forensics techniques

A dual benchmarking study of facial forgery and facial forensics In recent years, visual forgery has reached a level of sophistication that humans can

tsflex - feature-extraction benchmarking

tsflex - feature-extraction benchmarking This repository withholds the benchmark results and visualization code of the tsflex paper and toolkit. Flow

Just a little benchmark for scrapper PC's

PopMark Just a little benchmark for scrapper PC's This benchmark is for old computer that dont support other benchmark because of support. Like lack o

Ludwig Benchmarking Toolkit

Ludwig Benchmarking Toolkit The Ludwig Benchmarking Toolkit is a personalized benchmarking toolkit for running end-to-end benchmark studies across an

Official codebase for "B-Pref: Benchmarking Preference-BasedReinforcement Learning" contains scripts to reproduce experiments.

B-Pref Official codebase for B-Pref: Benchmarking Preference-BasedReinforcement Learning contains scripts to reproduce experiments. Install conda env

Official codebase for "B-Pref: Benchmarking Preference-BasedReinforcement Learning" contains scripts to reproduce experiments.

B-Pref Official codebase for B-Pref: Benchmarking Preference-BasedReinforcement Learning contains scripts to reproduce experiments. Install conda env

A Comprehensive Study on Learning-Based PE Malware Family Classification Methods

A Comprehensive Study on Learning-Based PE Malware Family Classification Methods Datasets Because of copyright issues, both the MalwareBazaar dataset

Molecular Sets (MOSES): A benchmarking platform for molecular generation models

Molecular Sets (MOSES): A benchmarking platform for molecular generation models Deep generative models are rapidly becoming popular for the discovery

Revisiting Oxford and Paris: Large-Scale Image Retrieval Benchmarking

Revisiting Oxford and Paris: Large-Scale Image Retrieval Benchmarking We revisit and address issues with Oxford 5k and Paris 6k image retrieval benchm

Repo for "Physion: Evaluating Physical Prediction from Vision in Humans and Machines" submission to NeurIPS 2021 (Datasets & Benchmarks track)

Physion: Evaluating Physical Prediction from Vision in Humans and Machines This repo contains code and data to reproduce the results in our paper, Phy

A Python library for adversarial machine learning focusing on benchmarking adversarial robustness.

ARES This repository contains the code for ARES (Adversarial Robustness Evaluation for Safety), a Python library for adversarial machine learning rese

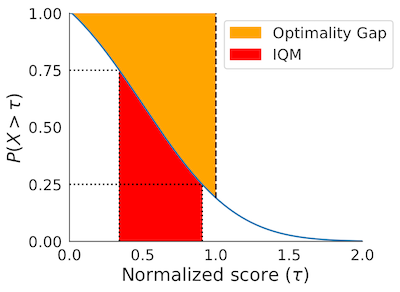

rliable is an open-source Python library for reliable evaluation, even with a handful of runs, on reinforcement learning and machine learnings benchmarks.

Open-source library for reliable evaluation on reinforcement learning and machine learning benchmarks. See NeurIPS 2021 oral for details.

Repository for benchmarking graph neural networks

Benchmarking Graph Neural Networks Updates Nov 2, 2020 Project based on DGL 0.4.2. See the relevant dependencies defined in the environment yml files

Benchmarking the robustness of Spatial-Temporal Models

Benchmarking the robustness of Spatial-Temporal Models This repositery contains the code for the paper Benchmarking the Robustness of Spatial-Temporal

ProFuzzBench - A Benchmark for Stateful Protocol Fuzzing

ProFuzzBench - A Benchmark for Stateful Protocol Fuzzing ProFuzzBench is a benchmark for stateful fuzzing of network protocols. It includes a suite of

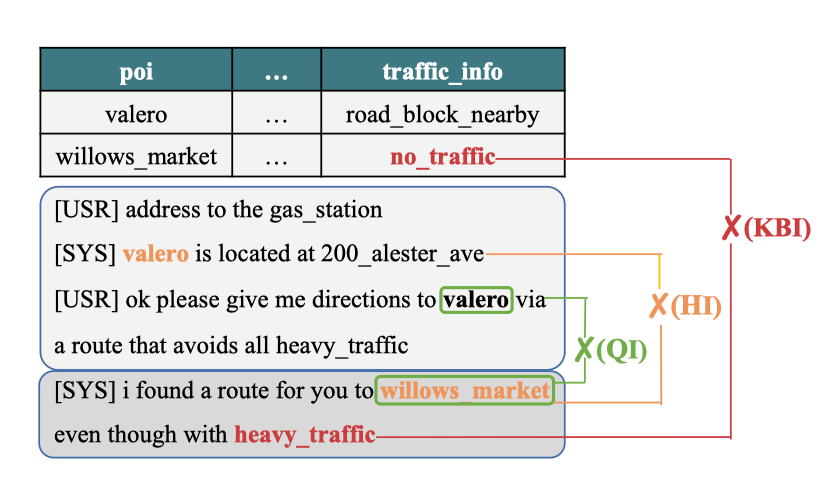

PyTorch code for EMNLP 2021 paper: Don't be Contradicted with Anything! CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System

PyTorch code for EMNLP 2021 paper: Don't be Contradicted with Anything! CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System

RobustART: Benchmarking Robustness on Architecture Design and Training Techniques

The first comprehensive Robustness investigation benchmark on large-scale dataset ImageNet regarding ARchitecture design and Training techniques towards diverse noises.

PyTorch code for EMNLP 2021 paper: Don't be Contradicted with Anything! CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System

Don’t be Contradicted with Anything!CI-ToD: Towards Benchmarking Consistency for Task-oriented Dialogue System This repository contains the PyTorch im

Merlion: A Machine Learning Framework for Time Series Intelligence

Merlion: A Machine Learning Library for Time Series Table of Contents Introduction Installation Documentation Getting Started Anomaly Detection Foreca

Merlion: A Machine Learning Framework for Time Series Intelligence

Merlion is a Python library for time series intelligence. It provides an end-to-end machine learning framework that includes loading and transforming data, building and training models, post-processing model outputs, and evaluating model performance. I

CARLA: A Python Library to Benchmark Algorithmic Recourse and Counterfactual Explanation Algorithms

CARLA - Counterfactual And Recourse Library CARLA is a python library to benchmark counterfactual explanation and recourse models. It comes out-of-the

Pip-package for trajectory benchmarking from "Be your own Benchmark: No-Reference Trajectory Metric on Registered Point Clouds", ECMR'21

Map Metrics for Trajectory Quality Map metrics toolkit provides a set of metrics to quantitatively evaluate trajectory quality via estimating consiste

FedScale: Benchmarking Model and System Performance of Federated Learning

FedScale: Benchmarking Model and System Performance of Federated Learning (Paper) This repository contains scripts and instructions of building FedSca

Revisiting, benchmarking, and refining Heterogeneous Graph Neural Networks.

Heterogeneous Graph Benchmark Revisiting, benchmarking, and refining Heterogeneous Graph Neural Networks. Roadmap We organize our repo by task, and on

whm also known as wifi-heat-mapper is a Python library for benchmarking Wi-Fi networks and gather useful metrics that can be converted into meaningful easy-to-understand heatmaps.

whm also known as wifi-heat-mapper is a Python library for benchmarking Wi-Fi networks and gather useful metrics that can be converted into meaningful easy-to-understand heatmaps.

A collection of GNN-based fake news detection models.

This repo includes the Pytorch-Geometric implementation of a series of Graph Neural Network (GNN) based fake news detection models. All GNN models are implemented and evaluated under the User Preference-aware Fake News Detection (UPFD) framework. The fake news detection problem is instantiated as a graph classification task under the UPFD framework.

FedNLP: A Benchmarking Framework for Federated Learning in Natural Language Processing

FedNLP is a research-oriented benchmarking framework for advancing federated learning (FL) in natural language processing (NLP). It uses FedML repository as the git submodule. In other words, FedNLP only focuses on adavanced models and dataset, while FedML supports various federated optimizers (e.g., FedAvg) and platforms (Distributed Computing, IoT/Mobile, Standalone).

Airspeed Velocity: A simple Python benchmarking tool with web-based reporting

airspeed velocity airspeed velocity (asv) is a tool for benchmarking Python packages over their lifetime. It is primarily designed to benchmark a sing

py.test fixture for benchmarking code

Overview docs tests package A pytest fixture for benchmarking code. It will group the tests into rounds that are calibrated to the chosen timer. See c

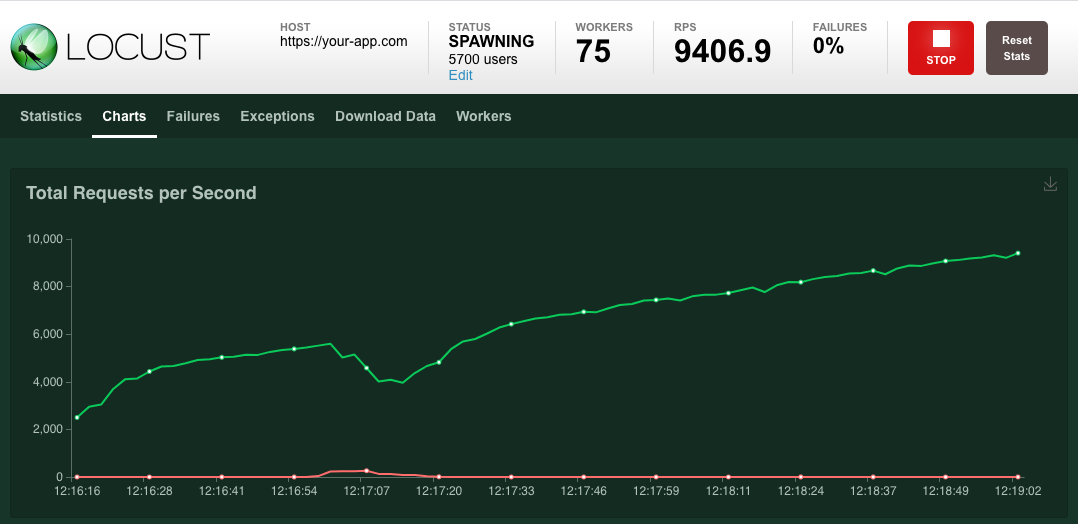

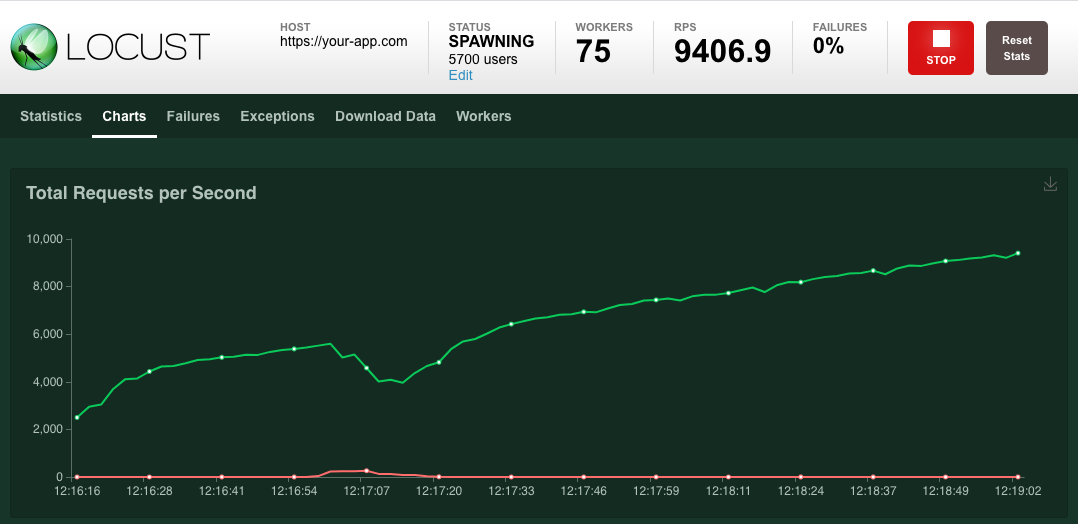

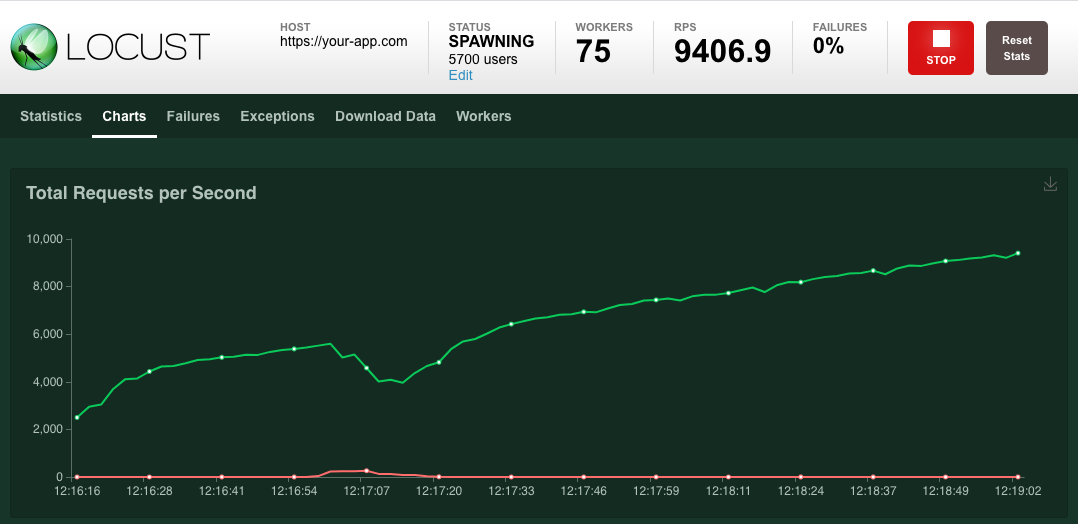

Scalable user load testing tool written in Python

Locust Locust is an easy to use, scriptable and scalable performance testing tool. You define the behaviour of your users in regular Python code, inst

Scalable user load testing tool written in Python

Locust Locust is an easy to use, scriptable and scalable performance testing tool. You define the behaviour of your users in regular Python code, inst

Scalable user load testing tool written in Python

Locust Locust is an easy to use, scriptable and scalable performance testing tool. You define the behaviour of your users in regular Python code, inst