363 Repositories

Python chinese-bert Libraries

Pytorch version of BERT-whitening

BERT-whitening This is the Pytorch implementation of "Whitening Sentence Representations for Better Semantics and Faster Retrieval". BERT-whitening is

Weakly supervised medical named entity classification

Trove Trove is a research framework for building weakly supervised (bio)medical named entity recognition (NER) and other entity attribute classifiers

Python library containing BART query generation and BERT-based Siamese models for neural retrieval.

Neural Retrieval Embedding-based Zero-shot Retrieval through Query Generation leverages query synthesis over large corpuses of unlabeled text (such as

I-BERT: Integer-only BERT Quantization

I-BERT: Integer-only BERT Quantization HuggingFace Implementation I-BERT is also available in the master branch of HuggingFace! Visit the following li

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Code for pre-training CharacterBERT models (as well as BERT models).

Pre-training CharacterBERT (and BERT) This is a repository for pre-training BERT and CharacterBERT. DISCLAIMER: The code was largely adapted from an o

Chinese Mandarin tts text-to-speech 中文 (普通话) 语音 合成 , by fastspeech 2 , implemented in pytorch, using waveglow as vocoder,

Chinese mandarin text to speech based on Fastspeech2 and Unet This is a modification and adpation of fastspeech2 to mandrin(普通话). Many modifications t

Combining Automatic Labelers and Expert Annotations for Accurate Radiology Report Labeling Using BERT

CheXbert: Combining Automatic Labelers and Expert Annotations for Accurate Radiology Report Labeling Using BERT CheXbert is an accurate, automated dee

Transformer related optimization, including BERT, GPT

This repository provides a script and recipe to run the highly optimized transformer-based encoder and decoder component, and it is tested and maintained by NVIDIA.

Adversarial Adaptation with Distillation for BERT Unsupervised Domain Adaptation

Knowledge Distillation for BERT Unsupervised Domain Adaptation Official PyTorch implementation | Paper Abstract A pre-trained language model, BERT, ha

Top2Vec is an algorithm for topic modeling and semantic search.

Top2Vec is an algorithm for topic modeling and semantic search. It automatically detects topics present in text and generates jointly embedded topic, document and word vectors.

Convolutional Recurrent Neural Networks(CRNN) for Scene Text Recognition

CRNN_Tensorflow This is a TensorFlow implementation of a Deep Neural Network for scene text recognition. It is mainly based on the paper "An End-to-En

make a better chinese character recognition OCR than tesseract

deep ocr See README_en.md for English installation documentation. 只在ubuntu下面测试通过,需要virtualenv安装,安装路径可自行调整: git clone https://github.com/JinpengLI/deep

CTPN + DenseNet + CTC based end-to-end Chinese OCR implemented using tensorflow and keras

简介 基于Tensorflow和Keras实现端到端的不定长中文字符检测和识别 文本检测:CTPN 文本识别:DenseNet + CTC 环境部署 sh setup.sh 注:CPU环境执行前需注释掉for gpu部分,并解开for cpu部分的注释 Demo 将测试图片放入test_images

Deep Learning Chinese Word Segment

引用 本项目模型BiLSTM+CRF参考论文:http://www.aclweb.org/anthology/N16-1030 ,IDCNN+CRF参考论文:https://arxiv.org/abs/1702.02098 构建 安装好bazel代码构建工具,安装好tensorflow(目前本项目需

ARU-Net - Deep Learning Chinese Word Segment

ARU-Net: A Neural Pixel Labeler for Layout Analysis of Historical Documents Contents Introduction Installation Demo Training Introduction This is the

TensorFlowTTS: Real-Time State-of-the-art Speech Synthesis for Tensorflow 2 (supported including English, Korean, Chinese, German and Easy to adapt for other languages)

🤪 TensorFlowTTS provides real-time state-of-the-art speech synthesis architectures such as Tacotron-2, Melgan, Multiband-Melgan, FastSpeech, FastSpeech2 based-on TensorFlow 2. With Tensorflow 2, we can speed-up training/inference progress, optimizer further by using fake-quantize aware and pruning, make TTS models can be run faster than real-time and be able to deploy on mobile devices or embedded systems.

CUAD

Contract Understanding Atticus Dataset This repository contains code for the Contract Understanding Atticus Dataset (CUAD), a dataset for legal contra

Contract Understanding Atticus Dataset

Contract Understanding Atticus Dataset This repository contains code for the Contract Understanding Atticus Dataset (CUAD), a dataset for legal contra

Minimalist BERT implementation assignment for CS11-747

minbert Assignment by Zhengbao Jiang, Shuyan Zhou, and Ritam Dutt This is an exercise in developing a minimalist version of BERT, part of Carnegie Mel

本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。

【关于 NLP】那些你不知道的事 作者:杨夕、芙蕖、李玲、陈海顺、twilight、LeoLRH、JimmyDU、艾春辉、张永泰、金金金 介绍 本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。 目录架构 一、【

Code associated with the "Data Augmentation using Pre-trained Transformer Models" paper

Data Augmentation using Pre-trained Transformer Models Code associated with the Data Augmentation using Pre-trained Transformer Models paper Code cont

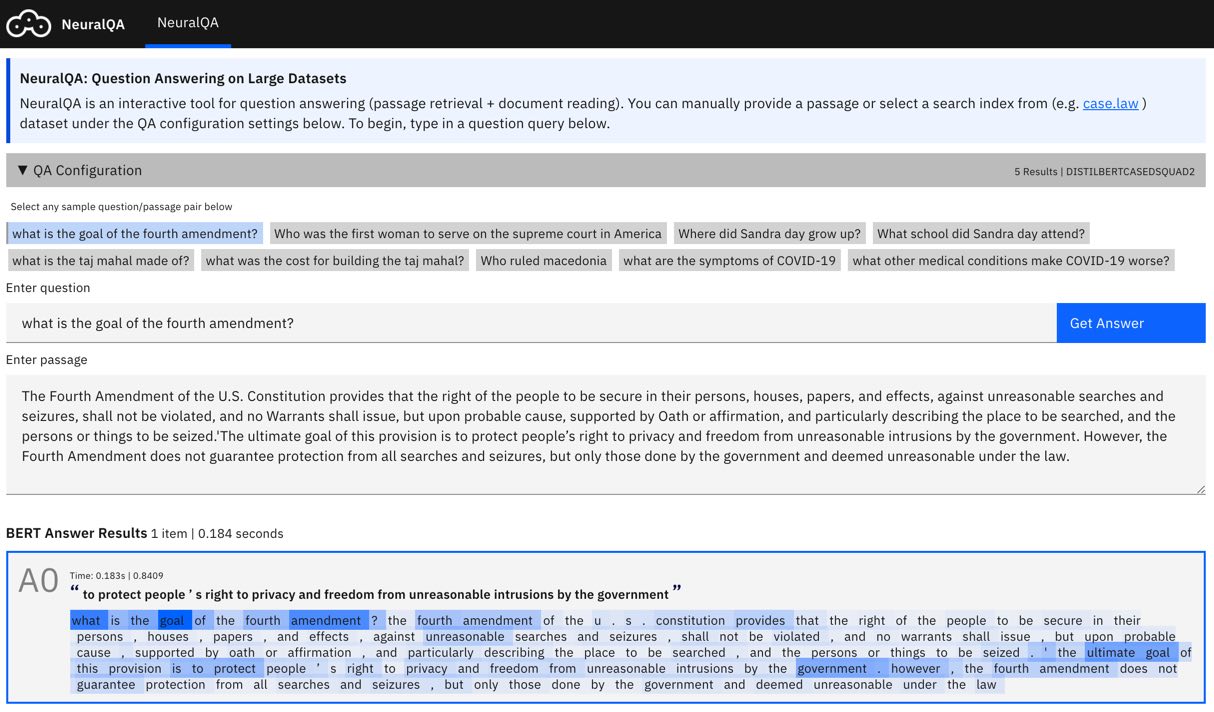

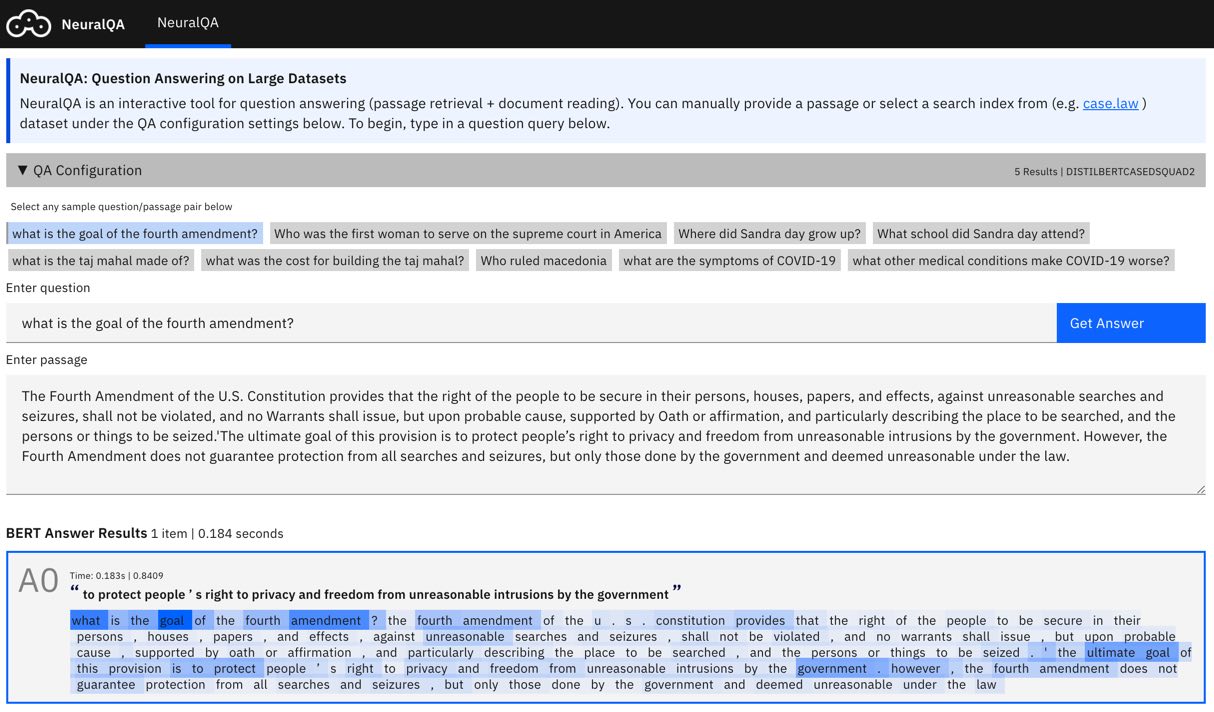

NeuralQA: A Usable Library for Question Answering on Large Datasets with BERT

NeuralQA: A Usable Library for (Extractive) Question Answering on Large Datasets with BERT Still in alpha, lots of changes anticipated. View demo on n

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Kashgari Overview | Performance | Installation | Documentation | Contributing 🎉 🎉 🎉 We released the 2.0.0 version with TF2 Support. 🎉 🎉 🎉 If you

:house_with_garden: Fast & easy transfer learning for NLP. Harvesting language models for the industry. Focus on Question Answering.

(Framework for Adapting Representation Models) What is it? FARM makes Transfer Learning with BERT & Co simple, fast and enterprise-ready. It's built u

Toolkit for Machine Learning, Natural Language Processing, and Text Generation, in TensorFlow. This is part of the CASL project: http://casl-project.ai/

Texar is a toolkit aiming to support a broad set of machine learning, especially natural language processing and text generation tasks. Texar provides

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

Super easy library for BERT based NLP models

Fast-Bert New - Learning Rate Finder for Text Classification Training (borrowed with thanks from https://github.com/davidtvs/pytorch-lr-finder) Suppor

🛸 Use pretrained transformers like BERT, XLNet and GPT-2 in spaCy

spacy-transformers: Use pretrained transformers like BERT, XLNet and GPT-2 in spaCy This package provides spaCy components and architectures to use tr

fastNLP: A Modularized and Extensible NLP Framework. Currently still in incubation.

fastNLP fastNLP是一款轻量级的自然语言处理(NLP)工具包,目标是快速实现NLP任务以及构建复杂模型。 fastNLP具有如下的特性: 统一的Tabular式数据容器,简化数据预处理过程; 内置多种数据集的Loader和Pipe,省去预处理代码; 各种方便的NLP工具,例如Embedd

:mag: End-to-End Framework for building natural language search interfaces to data by utilizing Transformers and the State-of-the-Art of NLP. Supporting DPR, Elasticsearch, HuggingFace’s Modelhub and much more!

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

Sentence Embeddings with BERT & XLNet

Sentence Transformers: Multilingual Sentence Embeddings using BERT / RoBERTa / XLM-RoBERTa & Co. with PyTorch This framework provides an easy method t

💥 Fast State-of-the-Art Tokenizers optimized for Research and Production

Provides an implementation of today's most used tokenizers, with a focus on performance and versatility. Main features: Train new vocabularies and tok

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

InfoBERT: Improving Robustness of Language Models from An Information Theoretic Perspective

InfoBERT: Improving Robustness of Language Models from An Information Theoretic Perspective This is the official code base for our ICLR 2021 paper

CJK computer science terms comparison / 中日韓電腦科學術語對照 / 日中韓のコンピュータ科学の用語対照 / 한·중·일 전산학 용어 대조

CJK computer science terms comparison This repository contains the source code of the website. You can see the website from the following link: Englis

NeuralQA: A Usable Library for Question Answering on Large Datasets with BERT

NeuralQA: A Usable Library for (Extractive) Question Answering on Large Datasets with BERT Still in alpha, lots of changes anticipated. View demo on n

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Kashgari Overview | Performance | Installation | Documentation | Contributing 🎉 🎉 🎉 We released the 2.0.0 version with TF2 Support. 🎉 🎉 🎉 If you

:house_with_garden: Fast & easy transfer learning for NLP. Harvesting language models for the industry. Focus on Question Answering.

(Framework for Adapting Representation Models) What is it? FARM makes Transfer Learning with BERT & Co simple, fast and enterprise-ready. It's built u

Toolkit for Machine Learning, Natural Language Processing, and Text Generation, in TensorFlow. This is part of the CASL project: http://casl-project.ai/

Texar is a toolkit aiming to support a broad set of machine learning, especially natural language processing and text generation tasks. Texar provides

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

Super easy library for BERT based NLP models

Fast-Bert New - Learning Rate Finder for Text Classification Training (borrowed with thanks from https://github.com/davidtvs/pytorch-lr-finder) Suppor

🛸 Use pretrained transformers like BERT, XLNet and GPT-2 in spaCy

spacy-transformers: Use pretrained transformers like BERT, XLNet and GPT-2 in spaCy This package provides spaCy components and architectures to use tr

fastNLP: A Modularized and Extensible NLP Framework. Currently still in incubation.

fastNLP fastNLP是一款轻量级的自然语言处理(NLP)工具包,目标是快速实现NLP任务以及构建复杂模型。 fastNLP具有如下的特性: 统一的Tabular式数据容器,简化数据预处理过程; 内置多种数据集的Loader和Pipe,省去预处理代码; 各种方便的NLP工具,例如Embedd

:mag: Transformers at scale for question answering & neural search. Using NLP via a modular Retriever-Reader-Pipeline. Supporting DPR, Elasticsearch, HuggingFace's Modelhub...

Haystack is an end-to-end framework for Question Answering & Neural search that enables you to ... ... ask questions in natural language and find gran

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

Sentence Embeddings with BERT & XLNet

Sentence Transformers: Multilingual Sentence Embeddings using BERT / RoBERTa / XLM-RoBERTa & Co. with PyTorch This framework provides an easy method t

💥 Fast State-of-the-Art Tokenizers optimized for Research and Production

Provides an implementation of today's most used tokenizers, with a focus on performance and versatility. Main features: Train new vocabularies and tok

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

NLP Core Library and Model Zoo based on PaddlePaddle 2.0

PaddleNLP 2.0拥有丰富的模型库、简洁易用的API与高性能的分布式训练的能力,旨在为飞桨开发者提升文本建模效率,并提供基于PaddlePaddle 2.0的NLP领域最佳实践。

Chinese NewsTitle Generation Project by GPT2.带有超级详细注释的中文GPT2新闻标题生成项目。

GPT2-NewsTitle 带有超详细注释的GPT2新闻标题生成项目 UpDate 01.02.2021 从网上收集数据,将清华新闻数据、搜狗新闻数据等新闻数据集,以及开源的一些摘要数据进行整理清洗,构建一个较完善的中文摘要数据集。 数据集清洗时,仅进行了简单地规则清洗。

天池中药说明书实体识别挑战冠军方案;中文命名实体识别;NER; BERT-CRF & BERT-SPAN & BERT-MRC;Pytorch

天池中药说明书实体识别挑战冠军方案;中文命名实体识别;NER; BERT-CRF & BERT-SPAN & BERT-MRC;Pytorch

Big Bird: Transformers for Longer Sequences

BigBird, is a sparse-attention based transformer which extends Transformer based models, such as BERT to much longer sequences. Moreover, BigBird comes along with a theoretical understanding of the capabilities of a complete transformer that the sparse model can handle.

PhoNLP: A BERT-based multi-task learning toolkit for part-of-speech tagging, named entity recognition and dependency parsing

PhoNLP is a multi-task learning model for joint part-of-speech (POS) tagging, named entity recognition (NER) and dependency parsing. Experiments on Vietnamese benchmark datasets show that PhoNLP produces state-of-the-art results, outperforming a single-task learning approach that fine-tunes the pre-trained Vietnamese language model PhoBERT for each task independently.

Code of paper: A Recurrent Vision-and-Language BERT for Navigation

Recurrent VLN-BERT Code of the Recurrent-VLN-BERT paper: A Recurrent Vision-and-Language BERT for Navigation Yicong Hong, Qi Wu, Yuankai Qi, Cristian

CCF BDCI 2020 房产行业聊天问答匹配赛道 A榜47/2985

CCF BDCI 2020 房产行业聊天问答匹配 A榜47/2985 赛题描述详见:https://www.datafountain.cn/competitions/474 文件说明 data: 存放训练数据和测试数据以及预处理代码 model_bert.py: 网络模型结构定义 adv_train

汉字转拼音(pypinyin)

汉字拼音转换工具(Python 版) 将汉字转为拼音。可以用于汉字注音、排序、检索(Russian translation) 。 基于 hotoo/pinyin 开发。 Documentation: http://pypinyin.rtfd.io/ GitHub: https://github.co

a chinese segment base on crf

Genius Genius是一个开源的python中文分词组件,采用 CRF(Conditional Random Field)条件随机场算法。 Feature 支持python2.x、python3.x以及pypy2.x。 支持简单的pinyin分词 支持用户自定义break 支持用户自定义合并词

Chinese segmentation library

What is loso? loso is a Chinese segmentation system written in Python. It was developed by Victor Lin ([email protected]) for Plurk Inc. Copyright &

Python library for processing Chinese text

SnowNLP: Simplified Chinese Text Processing SnowNLP是一个python写的类库,可以方便的处理中文文本内容,是受到了TextBlob的启发而写的,由于现在大部分的自然语言处理库基本都是针对英文的,于是写了一个方便处理中文的类库,并且和TextBlob

pkuseg多领域中文分词工具; The pkuseg toolkit for multi-domain Chinese word segmentation

pkuseg:一个多领域中文分词工具包 (English Version) pkuseg 是基于论文[Luo et. al, 2019]的工具包。其简单易用,支持细分领域分词,有效提升了分词准确度。 目录 主要亮点 编译和安装 各类分词工具包的性能对比 使用方式 论文引用 作者 常见问题及解答 主要

Ready-to-use OCR with 80+ supported languages and all popular writing scripts including Latin, Chinese, Arabic, Devanagari, Cyrillic and etc.

EasyOCR Ready-to-use OCR with 80+ languages supported including Chinese, Japanese, Korean and Thai. What's new 1 February 2021 - Version 1.2.3 Add set