363 Repositories

Python chinese-bert Libraries

ACL'22: Structured Pruning Learns Compact and Accurate Models

☕ CoFiPruning: Structured Pruning Learns Compact and Accurate Models This repository contains the code and pruned models for our ACL'22 paper Structur

HugsVision is a easy to use huggingface wrapper for state-of-the-art computer vision

HugsVision is an open-source and easy to use all-in-one huggingface wrapper for computer vision. The goal is to create a fast, flexible and user-frien

An easy-to-use framework for BERT models, with trainers, various NLP tasks and detailed annonations

FantasyBert English | 中文 Introduction An easy-to-use framework for BERT models, with trainers, various NLP tasks and detailed annonations. You can imp

Sapiens is a human antibody language model based on BERT.

Sapiens: Human antibody language model ____ _ / ___| __ _ _ __ (_) ___ _ __ ___ \___ \ / _` | '_ \| |/ _ \ '

KoBERTopic은 BERTopic을 한국어 데이터에 적용할 수 있도록 토크나이저와 BERT를 수정한 코드입니다.

KoBERTopic 모델 소개 KoBERTopic은 BERTopic을 한국어 데이터에 적용할 수 있도록 토크나이저와 BERT를 수정했습니다. 기존 BERTopic : https://github.com/MaartenGr/BERTopic/tree/05a6790b21009d

A Persian Image Captioning model based on Vision Encoder Decoder Models of the transformers🤗.

Persian-Image-Captioning We fine-tuning the Vision Encoder Decoder Model for the task of image captioning on the coco-flickr-farsi dataset. The implem

Persian Bert For Long-Range Sequences

ParsBigBird: Persian Bert For Long-Range Sequences The Bert and ParsBert algorithms can handle texts with token lengths of up to 512, however, many ta

This is a repo of basic Machine Learning!

Basic Machine Learning This repository contains a topic-wise curated list of Machine Learning and Deep Learning tutorials, articles and other resource

🚀 RocketQA, dense retrieval for information retrieval and question answering, including both Chinese and English state-of-the-art models.

In recent years, the dense retrievers based on pre-trained language models have achieved remarkable progress. To facilitate more developers using cutt

Multilingual Emotion classification using BERT (fine-tuning). Published at the WASSA workshop (ACL2022).

XLM-EMO: Multilingual Emotion Prediction in Social Media Text Abstract Detecting emotion in text allows social and computational scientists to study h

LightHuBERT: Lightweight and Configurable Speech Representation Learning with Once-for-All Hidden-Unit BERT

LightHuBERT LightHuBERT: Lightweight and Configurable Speech Representation Learning with Once-for-All Hidden-Unit BERT | Github | Huggingface | SUPER

SIGIR'22 paper: Axiomatically Regularized Pre-training for Ad hoc Search

Introduction This codebase contains source-code of the Python-based implementation (ARES) of our SIGIR 2022 paper. Chen, Jia, et al. "Axiomatically Re

This PyTorch package implements MoEBERT: from BERT to Mixture-of-Experts via Importance-Guided Adaptation (NAACL 2022).

MoEBERT This PyTorch package implements MoEBERT: from BERT to Mixture-of-Experts via Importance-Guided Adaptation (NAACL 2022). Installation Create an

Official repository for "Exploiting Session Information in BERT-based Session-aware Sequential Recommendation", SIGIR 2022 short.

Session-aware BERT4Rec Official repository for "Exploiting Session Information in BERT-based Session-aware Sequential Recommendation", SIGIR 2022 shor

Create a semantic search engine with a neural network (i.e. BERT) whose knowledge base can be updated

Create a semantic search engine with a neural network (i.e. BERT) whose knowledge base can be updated. This engine can later be used for downstream tasks in NLP such as Q&A, summarization, generation, and natural language understanding (NLU).

Pretrained Japanese BERT models

Pretrained Japanese BERT models This is a repository of pretrained Japanese BERT models. The models are available in Transformers by Hugging Face. Mod

L3Cube-MahaCorpus a Marathi monolingual data set scraped from different internet sources.

L3Cube-MahaCorpus L3Cube-MahaCorpus a Marathi monolingual data set scraped from different internet sources. We expand the existing Marathi monolingual

Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5

NLP-Summarizer Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5 This project aimed to provide in

Sequence-tagging using deep learning

Classification using Deep Learning Requirements PyTorch version = 1.9.1+cu111 Python version = 3.8.10 PyTorch-Lightning version = 1.4.9 Huggingface

In this workshop we will be exploring NLP state of the art transformers, with SOTA models like T5 and BERT, then build a model using HugginFace transformers framework.

Transformers are all you need In this workshop we will be exploring NLP state of the art transformers, with SOTA models like T5 and BERT, then build a

RuCLIP-SB (Russian Contrastive Language–Image Pretraining SWIN-BERT) is a multimodal model for obtaining images and text similarities and rearranging captions and pictures. Unlike other versions of the model we use BERT for text encoder and SWIN transformer for image encoder.

ruCLIP-SB RuCLIP-SB (Russian Contrastive Language–Image Pretraining SWIN-BERT) is a multimodal model for obtaining images and text similarities and re

This repository contains code accompanying the paper "An End-to-End Chinese Text Normalization Model based on Rule-Guided Flat-Lattice Transformer"

FlatTN This repository contains code accompanying the paper "An End-to-End Chinese Text Normalization Model based on Rule-Guided Flat-Lattice Transfor

A PyTorch implementation for our paper "Dual Contrastive Learning: Text Classification via Label-Aware Data Augmentation".

Dual-Contrastive-Learning A PyTorch implementation for our paper "Dual Contrastive Learning: Text Classification via Label-Aware Data Augmentation". Y

Accelerating BERT Inference for Sequence Labeling via Early-Exit

Sequence-Labeling-Early-Exit Code for ACL 2021 paper: Accelerating BERT Inference for Sequence Labeling via Early-Exit Requirement: Please refer to re

RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2

RoNER RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2. It is meant to be an easy to use, hi

This repo provides a package to automatically select a random seed based on ancient Chinese Xuanxue

🤞 Random Luck Deep learning is acturally the alchemy. This repo provides a package to automatically select a random seed based on ancient Chinese Xua

BERTopic is a topic modeling technique that leverages 🤗 transformers and c-TF-IDF to create dense clusters allowing for easily interpretable topics whilst keeping important words in the topic descriptions

BERTopic BERTopic is a topic modeling technique that leverages 🤗 transformers and c-TF-IDF to create dense clusters allowing for easily interpretable

Code for the paper BERT might be Overkill: A Tiny but Effective Biomedical Entity Linker based on Residual Convolutional Neural Networks

Biomedical Entity Linking This repo provides the code for the paper BERT might be Overkill: A Tiny but Effective Biomedical Entity Linker based on Res

T‘rex Park is a Youzan sponsored project. Offering Chinese NLP and image models pretrained from E-commerce datasets

T‘rex Park is a Youzan sponsored project. Offering Chinese NLP and image models pretrained from E-commerce datasets (product titles, images, comments, etc.).

Codes and scripts for "Explainable Semantic Space by Grounding Languageto Vision with Cross-Modal Contrastive Learning"

Visually Grounded Bert Language Model This repository is the official implementation of Explainable Semantic Space by Grounding Language to Vision wit

In this project, we compared Spanish BERT and Multilingual BERT in the Sentiment Analysis task.

Applying BERT Fine Tuning to Sentiment Classification on Amazon Reviews Abstract Sentiment analysis has made great progress in recent years, due to th

This repository contains demos I made with the Transformers library by HuggingFace.

Transformers-Tutorials Hi there! This repository contains demos I made with the Transformers library by 🤗 HuggingFace. Currently, all of them are imp

[AI6122] Text Data Management & Processing

[AI6122] Text Data Management & Processing is an elective course of MSAI, SCSE, NTU, Singapore. The repository corresponds to the AI6122 of Semester 1, AY2021-2022, starting from 08/2021. The instructor of this course is Prof. Sun Aixin.

A Deep Learning Based Knowledge Extraction Toolkit for Knowledge Base Population

DeepKE is a knowledge extraction toolkit supporting low-resource and document-level scenarios for entity, relation and attribute extraction. We provide comprehensive documents, Google Colab tutorials, and online demo for beginners.

Prompt-BERT: Prompt makes BERT Better at Sentence Embeddings

Prompt-BERT: Prompt makes BERT Better at Sentence Embeddings Results on STS Tasks Model STS12 STS13 STS14 STS15 STS16 STSb SICK-R Avg. unsup-prompt-be

Code for ECIR'20 paper Diagnosing BERT with Retrieval Heuristics

Bert Axioms This is the repository with the code for the Paper Diagnosing BERT with Retrieval Heuristics Required Data In order to run this code, you

Python-zhuyin - An open source Python library that provides a unified interface for converting between Chinese pinyin and Zhuyin (bopomofo)

Python-zhuyin - An open source Python library that provides a unified interface for converting between Chinese pinyin and Zhuyin (bopomofo)

2021 AI CUP Competition on Traditional Chinese Scene Text Recognition - Intermediate Contest

繁體中文場景文字辨識 程式碼說明 組別:這就是我 成員:蔣明憲 唐碩謙 黃玥菱 林冠霆 蕭靖騰 目錄 環境套件 安裝方式 資料夾布局 前處理-製作偵測訓練註解檔 前處理-製作分類訓練樣本 part.py : 從 json 裁切出分類訓練樣本 Class.py : 將切出來的樣本按照文字分類到各資料夾

Mapping a variable-length sentence to a fixed-length vector using BERT model

Are you looking for X-as-service? Try the Cloud-Native Neural Search Framework for Any Kind of Data bert-as-service Using BERT model as a sentence enc

Exploration of BERT-based models on twitter sentiment classifications

twitter-sentiment-analysis Explore the relationship between twitter sentiment of Tesla and its stock price/return. Explore the effect of different BER

Huawei firewall automatically updates Chinese ip to target IP group.

Huawei firewall automatically updates Chinese ip to target IP group.

End-to-end MLOps pipeline of a BERT model for emotion classification.

image source EmoBERT-MLOps The goal of this repository is to build an end-to-end MLOps pipeline based on the MLOps course from Made with ML, but this

ASCEND Chinese-English code-switching dataset

ASCEND (A Spontaneous Chinese-English Dataset) introduces a high-quality resource of spontaneous multi-turn conversational dialogue Chinese-English code-switching corpus collected in Hong Kong.

Datasets, tools, and benchmarks for representation learning of code.

The CodeSearchNet challenge has been concluded We would like to thank all participants for their submissions and we hope that this challenge provided

A series of Jupyter notebooks with Chinese comment that walk you through the fundamentals of Machine Learning and Deep Learning in python using Scikit-Learn and TensorFlow.

Hands-on-Machine-Learning 目的 这份笔记旨在帮助中文学习者以一种较快较系统的方式入门机器学习, 是在学习Hands-on Machine Learning with Scikit-Learn and TensorFlow这本书的 时候做的个人笔记: 此项目的可取之处 原书的

Jupyter Notebook tutorials on solving real-world problems with Machine Learning & Deep Learning using PyTorch

Jupyter Notebook tutorials on solving real-world problems with Machine Learning & Deep Learning using PyTorch. Topics: Face detection with Detectron 2, Time Series anomaly detection with LSTM Autoencoders, Object Detection with YOLO v5, Build your first Neural Network, Time Series forecasting for Coronavirus daily cases, Sentiment Analysis with BERT.

Anki Cards for the HSK vocabulary Chinese-German

Anki-HanyuShuipingKaoshi Anki Cards for the HSK vocabulary Chinese-German Das Deck baut auf folgenden Quellen auf: China Endecken Wortschatz von wohok

A Demo server serving Bert through ONNX with GPU written in Rust with 3

Demo BERT ONNX server written in rust This demo showcase the use of onnxruntime-rs on BERT with a GPU on CUDA 11 served by actix-web and tokenized wit

Using BERT+Bi-LSTM+CRF

Chinese Medical Entity Recognition Based on BERT+Bi-LSTM+CRF Step 1 I share the dataset on my google drive, please download the whole 'CCKS_2019_Task1

Chinese version of GPT2 training code, using BERT tokenizer.

GPT2-Chinese Description Chinese version of GPT2 training code, using BERT tokenizer or BPE tokenizer. It is based on the extremely awesome repository

This project deals with a simplified version of a more general problem of Aspect Based Sentiment Analysis.

Aspect_Based_Sentiment_Extraction Created on: 5th Jan, 2022. This project deals with an important field of Natural Lnaguage Processing - Aspect Based

Rhyme with AI

Local development Create a conda virtual environment and activate it: conda env create --file environment.yml conda activate rhyme-with-ai Install the

YACLC - Yet Another Chinese Learner Corpus

汉语学习者文本多维标注数据集YACLC V1.0 中文 | English 汉语学习者文本多维标注数据集(Yet Another Chinese Learner

This repository contains datasets and baselines for benchmarking Chinese text recognition.

Benchmarking-Chinese-Text-Recognition This repository contains datasets and baselines for benchmarking Chinese text recognition. Please see the corres

Two-stage text summarization with BERT and BART

Two-Stage Text Summarization Description We experiment with a 2-stage summarization model on CNN/DailyMail dataset that combines the ability to filter

Using BERT-based models for toxic span detection

SemEval 2021 Task 5: Toxic Spans Detection: Task: Link to SemEval-2021: Task 5 Toxic Span Detection is https://competitions.codalab.org/competitions/2

Chinese-specific configuration to improve your favorite DNS server

Dnsmasq-china-list - Chinese-specific configuration to improve your favorite DNS server. Best partner for chnroutes.

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning And private Server services

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning

KoBERT - Korean BERT pre-trained cased (KoBERT)

KoBERT KoBERT Korean BERT pre-trained cased (KoBERT) Why'?' Training Environment Requirements How to install How to use Using with PyTorch Using with

Automatic library of congress classification, using word embeddings from book titles and synopses.

Automatic Library of Congress Classification The Library of Congress Classification (LCC) is a comprehensive classification system that was first deve

GCRC: A Gaokao Chinese Reading Comprehension dataset for interpretable Evaluation

GCRC GCRC: A New Challenging MRC Dataset from Gaokao Chinese for Explainable Eva

Source code of the "Graph-Bert: Only Attention is Needed for Learning Graph Representations" paper

Graph-Bert Source code of "Graph-Bert: Only Attention is Needed for Learning Graph Representations". Please check the script.py as the entry point. We

Pre-training of Graph Augmented Transformers for Medication Recommendation

G-Bert Pre-training of Graph Augmented Transformers for Medication Recommendation Intro G-Bert combined the power of Graph Neural Networks and BERT (B

Self-Guided Contrastive Learning for BERT Sentence Representations

Self-Guided Contrastive Learning for BERT Sentence Representations This repository is dedicated for releasing the implementation of the models utilize

EmoTag helps you train emotion detection model for Chinese audios

emoTag emoTag helps you train emotion detection model for Chinese audios. Environment pip install -r requirement.txt Data We used Emotional Speech Dat

Utilize Korean BERT model in sentence-transformers library

ko-sentence-transformers 이 프로젝트는 KoBERT 모델을 sentence-transformers 에서 보다 쉽게 사용하기 위해 만들어졌습니다. Ko-Sentence-BERT-SKTBERT 프로젝트에서는 KoBERT 모델을 sentence-trans

jiant is an NLP toolkit

🚨 Update 🚨 : As of 2021/10/17, the jiant project is no longer being actively maintained. This means there will be no plans to add new models, tasks,

PyBERT is a serial communication link bit error rate tester simulator with a graphical user interface (GUI).

PyBERT PyBERT is a serial communication link bit error rate tester simulator with a graphical user interface (GUI). It uses the Traits/UI package of t

Korean Sentence Embedding Repository

Korean-Sentence-Embedding 🍭 Korean sentence embedding repository. You can download the pre-trained models and inference right away, also it provides

A unified tokenization tool for Images, Chinese and English.

ICE Tokenizer Token id [0, 20000) are image tokens. Token id [20000, 20100) are common tokens, mainly punctuations. E.g., icetk[20000] == 'unk', ice

A 30000+ Chinese MRC dataset - Delta Reading Comprehension Dataset

Delta Reading Comprehension Dataset 台達閱讀理解資料集 Delta Reading Comprehension Dataset (DRCD) 屬於通用領域繁體中文機器閱讀理解資料集。 本資料集期望成為適用於遷移學習之標準中文閱讀理解資料集。 本資料集從2,108篇

A Telegram bot to index Chinese and Japanese group contents, works with @lilydjwg/luoxu.

luoxu-bot luoxu-bot 是类似于 luoxu-web 的 CJK 友好的 Telegram Bot,依赖于 luoxu 所创建的后端。 测试环境 Python 3.7.9 pip 21.1.2 开发中使用到的 Telethon 需要 Python 3+ 配置 前往 luoxu 根据相

⛵️The official PyTorch implementation for "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing" (EMNLP 2020).

BERT-of-Theseus Code for paper "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing". BERT-of-Theseus is a new compressed BERT by progre

Chinese license plate recognition

AgentCLPR 简介 一个基于 ONNXRuntime、AgentOCR 和 License-Plate-Detector 项目开发的中国车牌检测识别系统。 车牌识别效果 支持多种车牌的检测和识别(其中单层车牌识别效果较好): 单层车牌: [[[[373, 282], [69, 284],

BERT, LDA, and TFIDF based keyword extraction in Python

BERT, LDA, and TFIDF based keyword extraction in Python kwx is a toolkit for multilingual keyword extraction based on Google's BERT and Latent Dirichl

BERT-based Financial Question Answering System

BERT-based Financial Question Answering System In this example, we use Jina, PyTorch, and Hugging Face transformers to build a production-ready BERT-b

Chinese named entity recognization with BiLSTM using Keras

Chinese named entity recognization (Bilstm with Keras) Project Structure ./ ├── README.md ├── data │ ├── README.md │ ├── data 数据集 │ │ ├─

Chinese named entity recognization (bert/roberta/macbert/bert_wwm with Keras)

Chinese named entity recognization (bert/roberta/macbert/bert_wwm with Keras)

Chinese Named Entity Recognization (BiLSTM with PyTorch)

BiLSTM-CRF for Name Entity Recognition PyTorch version A PyTorch implemention of Bi-LSTM-CRF model for Chinese Named Entity Recognition. 使用 PyTorch 实现

Chinese NER with albert/electra or other bert descendable model (keras)

Chinese NLP (albert/electra with Keras) Named Entity Recognization Project Structure ./ ├── NER │ ├── __init__.py │ ├── log

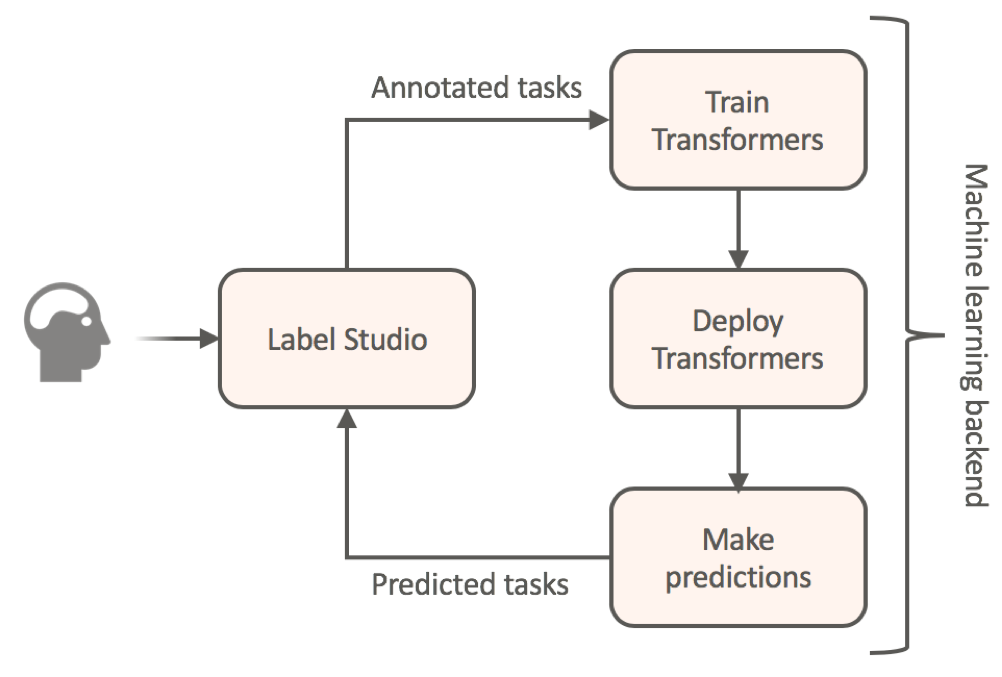

Label data using HuggingFace's transformers and automatically get a prediction service

Label Studio for Hugging Face's Transformers Website • Docs • Twitter • Join Slack Community Transfer learning for NLP models by annotating your textu

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

ConvBERT: Improving BERT with Span-based Dynamic Convolution

ConvBERT Introduction In this repo, we introduce a new architecture ConvBERT for pre-training based language model. The code is tested on a V100 GPU.

Repository for the paper "Optimal Subarchitecture Extraction for BERT"

Bort Companion code for the paper "Optimal Subarchitecture Extraction for BERT." Bort is an optimal subset of architectural parameters for the BERT ar

DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference

DeeBERT This is the code base for the paper DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference. Code in this repository is also available

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

ALBERT ***************New March 28, 2020 *************** Add a colab tutorial to run fine-tuning for GLUE datasets. ***************New January 7, 2020

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Text Classification in Turkish Texts with Bert

You can watch the details of the project on my youtube channel Project Interface Project Second Interface Goal= Correctly guessing the classification

Powerful unsupervised domain adaptation method for dense retrieval.

Powerful unsupervised domain adaptation method for dense retrieval

Using Bert as the backbone model for lime, designed for NLP task explanation (sentence pair text classification task)

Lime Comparing deep contextualized model for sentences highlighting task. In addition, take the classic explanation model "LIME" with bert-base model

ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab

AliceMind AliceMind: ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab This repository provides pre-trained encode

iBOT: Image BERT Pre-Training with Online Tokenizer

Image BERT Pre-Training with iBOT Official PyTorch implementation and pretrained models for paper iBOT: Image BERT Pre-Training with Online Tokenizer.

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce (EMNLP Founding 2021)

Introduction K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce. Installation PyTor

iBOT: Image BERT Pre-Training with Online Tokenizer

Image BERT Pre-Training with iBOT Official PyTorch implementation and pretrained models for paper iBOT: Image BERT Pre-Training with Online Tokenizer.

ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab

AliceMind AliceMind: ALIbaba's Collection of Encoder-decoders from MinD (Machine IntelligeNce of Damo) Lab This repository provides pre-trained encode

Nested Named Entity Recognition for Chinese Biomedical Text

CBio-NAMER CBioNAMER (Nested nAMed Entity Recognition for Chinese Biomedical Text) is our method used in CBLUE (Chinese Biomedical Language Understand

BERTMap: A BERT-Based Ontology Alignment System

BERTMap: A BERT-based Ontology Alignment System Important Notices The relevant paper was accepted in AAAI-2022. Arxiv version is available at: https:/

ColBERT: Contextualized Late Interaction over BERT (SIGIR'20)

Update: if you're looking for ColBERTv2 code, you can find it alongside a new simpler API, in the branch new_api. ColBERT ColBERT is a fast and accura