237 Repositories

Python evaluation-metrics Libraries

Politecnico of Turin Thesis: "Implementation and Evaluation of an Educational Chatbot based on NLP Techniques"

THESIS_CAIRONE_FIORENTINO Politecnico of Turin Thesis: "Implementation and Evaluation of an Educational Chatbot based on NLP Techniques" GENERATE TOKE

Automatic Video Captioning Evaluation Metric --- EMScore

Automatic Video Captioning Evaluation Metric --- EMScore Overview For an illustration, EMScore can be computed as: Installation modify the encode_text

Code for training and evaluation of the model from "Language Generation with Recurrent Generative Adversarial Networks without Pre-training"

Language Generation with Recurrent Generative Adversarial Networks without Pre-training Code for training and evaluation of the model from "Language G

A high performance implementation of HDBSCAN clustering.

HDBSCAN HDBSCAN - Hierarchical Density-Based Spatial Clustering of Applications with Noise. Performs DBSCAN over varying epsilon values and integrates

Non-Metric Space Library (NMSLIB): An efficient similarity search library and a toolkit for evaluation of k-NN methods for generic non-metric spaces.

Non-Metric Space Library (NMSLIB) Important Notes NMSLIB is generic but fast, see the results of ANN benchmarks. A standalone implementation of our fa

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding This repo contains the data and source code for baseline models in the NeurIPS 2

reXmeX is recommender system evaluation metric library.

A general purpose recommender metrics library for fair evaluation.

XAI - An eXplainability toolbox for machine learning

XAI - An eXplainability toolbox for machine learning XAI is a Machine Learning library that is designed with AI explainability in its core. XAI contai

The project covers common metrics for super-resolution performance evaluation.

Super-Resolution Performance Evaluation Code The project covers common metrics for super-resolution performance evaluation. Metrics support The script

Metrinome is an all-purpose tool for working with code complexity metrics.

Overview Metrinome is an all-purpose tool for working with code complexity metrics. It can be used as both a REPL and API, and includes: Converters to

Source code, data, and evaluation details for “Cross-Lingual Citations in English Papers: A Large-Scale Analysis of Prevalence, Formation, and Ramifications”

Analysis of cross-lingual citations in English papers Contents initial_analysis Source code, data, and evaluation details as published at ICADL2020 ci

Multi-Modal Fingerprint Presentation Attack Detection: Evaluation On A New Dataset

PADISI USC Dataset This repository analyzes the PADISI-Finger dataset introduced in Multi-Modal Fingerprint Presentation Attack Detection: Evaluation

Security evaluation module with onnx, pytorch, and SecML.

🚀 🐼 🔥 PandaVision Integrate and automate security evaluations with onnx, pytorch, and SecML! Installation Starting the server without Docker If you

A System Metrics Monitoring Tool Built using Python3 , rabbitmq,Grafana and InfluxDB. Setup using docker compose. Use to monitor system performance with graphical interface of grafana , storage of influxdb and message queuing of rabbitmq

SystemMonitoringRabbitMQGrafanaInflux This repository has code to setup a system monitoring tool The tools used are the follows Python3.6 Docker Rabbi

Web Crawlers for Data Labelling of Malicious Domain Detection & IP Reputation Evaluation

Web Crawlers for Data Labelling of Malicious Domain Detection & IP Reputation Evaluation This repository provides two web crawlers to label domain nam

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding This repo contains the data and source code for baseline models in the NeurIPS 2

A compact version of EDI-Vetter, which uses the TLS output to quickly vet transit signals.

A compact version of EDI-Vetter, which uses the TLS output to quickly vet transit signals. All your favorite hits in a simplified format.

Suite of tools for retrieving USGS NWIS observations and evaluating National Water Model (NWM) data.

Documentation OWPHydroTools GitHub pages documentation Motivation We developed OWPHydroTools with data scientists in mind. We attempted to ensure the

Pipeline and Dataset helpers for complex algorithm evaluation.

tpcp - Tiny Pipelines for Complex Problems A generic way to build object-oriented datasets and algorithm pipelines and tools to evaluate them pip inst

Regression Metrics Calculation Made easy for tensorflow2 and scikit-learn

Regression Metrics Installation To install the package from the PyPi repository you can execute the following command: pip install regressionmetrics I

Installation, test and evaluation of Scribosermo speech-to-text engine

Scribosermo STT Setup Scribosermo is a LGPL licensed, open-source speech recognition engine to "Train fast Speech-to-Text networks in different langua

A toolkit for geo ML data processing and model evaluation (fork of solaris)

An open source ML toolkit for overhead imagery. This is a beta version of lunular which may continue to develop. Please report any bugs through issues

Using Streamlit to host a multi-page tool with model specs and classification metrics, while also accepting user input values for prediction.

Predicitng_viability Using Streamlit to host a multi-page tool with model specs and classification metrics, while also accepting user input values for

Evaluation of TCP BBRv1 in wireless networks

The Network Simulator, Version 3 Table of Contents: An overview Building ns-3 Running ns-3 Getting access to the ns-3 documentation Working with the d

Cloudkeeper is “housekeeping for clouds” - find leaky resources, manage quota limits, detect drift and clean up.

Cloudkeeper Housekeeping for Clouds! Table of contents Overview Docker based quick start Cloning this repository Component list Contact License Overvi

Facilitating Database Tuning with Hyper-ParameterOptimization: A Comprehensive Experimental Evaluation

A Comprehensive Experimental Evaluation for Database Configuration Tuning This is the source code to the paper "Facilitating Database Tuning with Hype

Regression Metrics Calculation Made easy

Regression Metrics Mean Absolute Error Mean Square Error Root Mean Square Error Root Mean Square Logarithmic Error Root Mean Square Logarithmic Error

Unified MultiWOZ evaluation scripts for the context-to-response task.

MultiWOZ Context-to-Response Evaluation Standardized and easy to use Inform, Success, BLEU ~ See the paper ~ Easy-to-use scripts for standardized eval

Data, model training, and evaluation code for "PubTables-1M: Towards a universal dataset and metrics for training and evaluating table extraction models".

PubTables-1M This repository contains training and evaluation code for the paper "PubTables-1M: Towards a universal dataset and metrics for training a

This is a five-step framework for the development of intrusion detection systems (IDS) using machine learning (ML) considering model realization, and performance evaluation.

AB-TRAP: building invisibility shields to protect network devices The AB-TRAP framework is applicable to the development of Network Intrusion Detectio

Fairness Metrics: All you need to know

Fairness Metrics: All you need to know Testing machine learning software for ethical bias has become a pressing current concern. Recent research has p

High-fidelity performance metrics for generative models in PyTorch

High-fidelity performance metrics for generative models in PyTorch

Federated_learning codes used for the the paper "Evaluation of Federated Learning Aggregation Algorithms" and "A Federated Learning Aggregation Algorithm for Pervasive Computing: Evaluation and Comparison"

Federated Distance (FedDist) This is the code accompanying the Percom2021 paper "A Federated Learning Aggregation Algorithm for Pervasive Computing: E

Script to quickly get the metrics from Github repos to analyze.

commit-prefix-analysis Script to quickly get the metrics from Github repos to analyze. Setup Install the Github CLI. You'll know its working when runn

Developed a website to analyze and generate report of students based on the curriculum that represents student’s academic performance.

Developed a website to analyze and generate report of students based on the curriculum that represents student’s academic performance. We have developed the system such that, it will automatically parse data onto the database from excel file, which will in return reduce time consumption of analysis of data.

BEAMetrics: Benchmark to Evaluate Automatic Metrics in Natural Language Generation

BEAMetrics: Benchmark to Evaluate Automatic Metrics in Natural Language Generation Installing The Dependencies $ conda create --name beametrics python

Continual reinforcement learning baselines: experiment specifications, implementation of existing methods, and common metrics. Easily extensible to new methods.

Continual Reinforcement Learning This repository provides a simple way to run continual reinforcement learning experiments in PyTorch, including evalu

Source code for "Taming Visually Guided Sound Generation" (Oral at the BMVC 2021)

Taming Visually Guided Sound Generation • [Project Page] • [ArXiv] • [Poster] • • Listen for the samples on our project page. Overview We propose to t

Anomaly detection in multi-agent trajectories: Code for training, evaluation and the OpenAI highway simulation.

Anomaly Detection in Multi-Agent Trajectories for Automated Driving This is the official project page including the paper, code, simulation, baseline

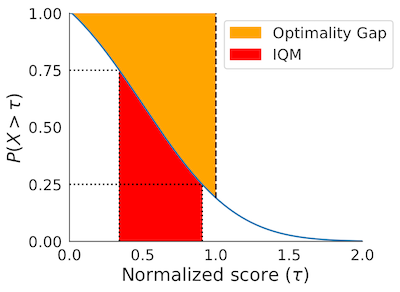

rliable is an open-source Python library for reliable evaluation, even with a handful of runs, on reinforcement learning and machine learnings benchmarks.

Open-source library for reliable evaluation on reinforcement learning and machine learning benchmarks. See NeurIPS 2021 oral for details.

Evaluation of a Monocular Eye Tracking Set-Up

Evaluation of a Monocular Eye Tracking Set-Up As part of my master thesis, I implemented a new state-of-the-art model that is based on the work of Che

A fast implementation of bss_eval metrics for blind source separation

fast_bss_eval Do you have a zillion BSS audio files to process and it is taking days ? Is your simulation never ending ? Fear no more! fast_bss_eval i

ImageNet-CoG is a benchmark for concept generalization. It provides a full evaluation framework for pre-trained visual representations which measure how well they generalize to unseen concepts.

The ImageNet-CoG Benchmark Project Website Paper (arXiv) Code repository for the ImageNet-CoG Benchmark introduced in the paper "Concept Generalizatio

fast_bss_eval is a fast implementation of the bss_eval metrics for the evaluation of blind source separation.

fast_bss_eval Do you have a zillion BSS audio files to process and it is taking days ? Is your simulation never ending ? Fear no more! fast_bss_eval i

Supplementary materials for ISMIR 2021 LBD paper "Evaluation of Latent Space Disentanglement in the Presence of Interdependent Attributes"

Evaluation of Latent Space Disentanglement in the Presence of Interdependent Attributes Supplementary materials for ISMIR 2021 LBD submission: K. N. W

Implementation of temporal pooling methods studied in [ICIP'20] A Comparative Evaluation Of Temporal Pooling Methods For Blind Video Quality Assessment

Implementation of temporal pooling methods studied in [ICIP'20] A Comparative Evaluation Of Temporal Pooling Methods For Blind Video Quality Assessment

Novel Instances Mining with Pseudo-Margin Evaluation for Few-Shot Object Detection

Novel Instances Mining with Pseudo-Margin Evaluation for Few-Shot Object Detection (NimPme) The official implementation of Novel Instances Mining with

lazy_table - a python-tabulate wrapper for producing tables from generators

A python-tabulate wrapper for producing tables from generators. Motivation lazy_table is useful when (i) each row of your table is generated by a poss

Block fingerprinting for the beacon chain, for client identification & client diversity metrics

blockprint This is a repository for discussion and development of tools for Ethereum block fingerprinting. The primary aim is to measure beacon chain

A python toolbox for predictive uncertainty quantification, calibration, metrics, and visualization

Website, Tutorials, and Docs Uncertainty Toolbox A python toolbox for predictive uncertainty quantification, calibration, metrics, and visualizatio

Demonstration that AWS IAM policy evaluation docs are incorrect

The flowchart from the AWS IAM policy evaluation documentation page, as of 2021-09-12, and dating back to at least 2018-12-27, is the following: The f

Implementation of Common Image Evaluation Metrics by Sayed Nadim (sayednadim.github.io). The repo is built based on full reference image quality metrics such as L1, L2, PSNR, SSIM, LPIPS. and feature-level quality metrics such as FID, IS. It can be used for evaluating image denoising, colorization, inpainting, deraining, dehazing etc. where we have access to ground truth.

Image Quality Evaluation Metrics Implementation of some common full reference image quality metrics. The repo is built based on full reference image q

Cross Quality LFW: A database for Analyzing Cross-Resolution Image Face Recognition in Unconstrained Environments

Cross-Quality Labeled Faces in the Wild (XQLFW) Here, we release the database, evaluation protocol and code for the following paper: Cross Quality LFW

Our CIKM21 Paper "Incorporating Query Reformulating Behavior into Web Search Evaluation"

Reformulation-Aware-Metrics Introduction This codebase contains source-code of the Python-based implementation of our CIKM 2021 paper. Chen, Jia, et a

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics. Jury offers a smooth and easy-to-use interface. It uses datasets for underlying metric computation, and hence adding custom metric is easy as adopting datasets.Metric.

Monitor and log Network and Disks statistics in MegaBytes per second.

iometrics Monitor and log Network and Disks statistics in MegaBytes per second. Install pip install iometrics Usage Pytorch-lightning integration from

Pip-package for trajectory benchmarking from "Be your own Benchmark: No-Reference Trajectory Metric on Registered Point Clouds", ECMR'21

Map Metrics for Trajectory Quality Map metrics toolkit provides a set of metrics to quantitatively evaluate trajectory quality via estimating consiste

Evaluation suite for large-scale language models.

This repo contains code for running the evaluations and reproducing the results from the Jurassic-1 Technical Paper (see blog post), with current support for running the tasks through both the AI21 Studio API and OpenAI's GPT3 API.

A suite of utilities for AWS Lambda Functions that makes tracing with AWS X-Ray, structured logging and creating custom metrics asynchronously easier

A suite of utilities for AWS Lambda Functions that makes tracing with AWS X-Ray, structured logging and creating custom metrics asynchronously easier

Summary Explorer is a tool to visually explore the state-of-the-art in text summarization.

Summary Explorer Summary Explorer is a tool to visually inspect the summaries from several state-of-the-art neural summarization models across multipl

Action Segmentation Evaluation

Reference Action Segmentation Evaluation Code This repository contains the reference code for action segmentation evaluation. If you have a bug-fix/im

Summary Explorer is a tool to visually explore the state-of-the-art in text summarization.

Summary Explorer is a tool to visually explore the state-of-the-art in text summarization.

Benchmark for evaluating open-ended generation

OpenMEVA Contributed by Jian Guan, Zhexin Zhang. Thank Jiaxin Wen for DeBugging. OpenMEVA is a benchmark for evaluating open-ended story generation me

A TensorFlow implementation of SOFA, the Simulator for OFfline LeArning and evaluation.

SOFA This repository is the implementation of SOFA, the Simulator for OFfline leArning and evaluation. Keeping Dataset Biases out of the Simulation: A

Active and Sample-Efficient Model Evaluation

Active Testing: Sample-Efficient Model Evaluation Hi, good to see you here! 👋 This is code for "Active Testing: Sample-Efficient Model Evaluation". P

KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark.

KLUE Baseline Korean(한국어) KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark. See our paper fo

ANNchor is a python library which constructs approximate k-nearest neighbour graphs for slow metrics.

Fast k-NN graph construction for slow metrics

Reference implementation of code generation projects from Facebook AI Research. General toolkit to apply machine learning to code, from dataset creation to model training and evaluation. Comes with pretrained models.

This repository is a toolkit to do machine learning for programming languages. It implements tokenization, dataset preprocessing, model training and m

The Hailo Model Zoo includes pre-trained models and a full building and evaluation environment

Hailo Model Zoo The Hailo Model Zoo provides pre-trained models for high-performance deep learning applications. Using the Hailo Model Zoo you can mea

🍃 A comprehensive monitoring and alerting solution for the status of your Chia farmer and harvesters.

chia-monitor A monitoring tool to collect all important metrics from your Chia farming node and connected harvesters. It can send you push notificatio

A Toolbox for Image Feature Matching and Evaluations

This is a toolbox repository to help evaluate various methods that perform image matching from a pair of images.

Semantic Scholar's Author Disambiguation Algorithm & Evaluation Suite

S2AND This repository provides access to the S2AND dataset and S2AND reference model described in the paper S2AND: A Benchmark and Evaluation System f

A fast python implementation of DTU MVS 2014 evaluation

DTUeval-python A python implementation of DTU MVS 2014 evaluation. It only takes 1min for each mesh evaluation. And the gap between the two implementa

A collection of metrics for evaluating timbre dissimilarity using the TorchMetrics API

Timbre Dissimilarity Metrics A collection of metrics for evaluating timbre dissimilarity using the TorchMetrics API Installation pip install -e . Usag

SIMULEVAL A General Evaluation Toolkit for Simultaneous Translation

SimulEval SimulEval is a general evaluation framework for simultaneous translation on text and speech. Requirement python = 3.7.0 Installation git cl

YoloV5 implemented by TensorFlow2 , with support for training, evaluation and inference.

Efficient implementation of YOLOV5 in TensorFlow2

This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard evaluation metric to measure the accuracy and robustness of 3D face reconstruction methods from a single image under variations in viewing angle, lighting, and common occlusions.

NoW Evaluation This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard e

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation models. It contains 17 different amateur subjects performing 30 sports-related actions each, for a total of 510 action clips.

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation mode

PyTorch evaluation code for Delving Deep into the Generalization of Vision Transformers under Distribution Shifts.

Out-of-distribution Generalization Investigation on Vision Transformers This repository contains PyTorch evaluation code for Delving Deep into the Gen

NAS Benchmark in "Prioritized Architecture Sampling with Monto-Carlo Tree Search", CVPR2021

NAS-Bench-Macro This repository includes the benchmark and code for NAS-Bench-Macro in paper "Prioritized Architecture Sampling with Monto-Carlo Tree

This is the repo for Uncertainty Quantification 360 Toolkit.

UQ360 The Uncertainty Quantification 360 (UQ360) toolkit is an open-source Python package that provides a diverse set of algorithms to quantify uncert

中文医疗信息处理基准CBLUE: A Chinese Biomedical LanguageUnderstanding Evaluation Benchmark

English | 中文说明 CBLUE AI (Artificial Intelligence) is playing an indispensabe role in the biomedical field, helping improve medical technology. For fur

Evaluation Pipeline for our ECCV2020: Journey Towards Tiny Perceptual Super-Resolution.

Journey Towards Tiny Perceptual Super-Resolution Test code for our ECCV2020 paper: https://arxiv.org/abs/2007.04356 Our x4 upscaling pre-trained model

Push Prometheus metrics to VictoriaMetrics or other exporters

Push metrics from your periodic long-running jobs to existing Prometheus/VictoriaMetrics monitoring system.

QuanTaichi evaluation suite

QuanTaichi: A Compiler for Quantized Simulations (SIGGRAPH 2021) Yuanming Hu, Jiafeng Liu, Xuanda Yang, Mingkuan Xu, Ye Kuang, Weiwei Xu, Qiang Dai, W

This is the repo for Uncertainty Quantification 360 Toolkit.

UQ360 The Uncertainty Quantification 360 (UQ360) toolkit is an open-source Python package that provides a diverse set of algorithms to quantify uncert

Details about the wide minima density hypothesis and metrics to compute width of a minima

wide-minima-density-hypothesis Details about the wide minima density hypothesis and metrics to compute width of a minima This repo presents the wide m

Framework for joint representation learning, evaluation through multimodal registration and comparison with image translation based approaches

CoMIR: Contrastive Multimodal Image Representation for Registration Framework 🖼 Registration of images in different modalities with Deep Learning 🤖

whm also known as wifi-heat-mapper is a Python library for benchmarking Wi-Fi networks and gather useful metrics that can be converted into meaningful easy-to-understand heatmaps.

whm also known as wifi-heat-mapper is a Python library for benchmarking Wi-Fi networks and gather useful metrics that can be converted into meaningful easy-to-understand heatmaps.

The repo of the preprinting paper "Labels Are Not Perfect: Inferring Spatial Uncertainty in Object Detection"

Inferring Spatial Uncertainty in Object Detection A teaser version of the code for the paper Labels Are Not Perfect: Inferring Spatial Uncertainty in

Code for reproducing our analysis in the paper titled: Image Cropping on Twitter: Fairness Metrics, their Limitations, and the Importance of Representation, Design, and Agency

Image Crop Analysis This is a repo for the code used for reproducing our Image Crop Analysis paper as shared on our blog post. If you plan to use this

An evaluation toolkit for voice conversion models.

Voice-conversion-evaluation An evaluation toolkit for voice conversion models. Sample test pair Generate the metadata for evaluating models. The direc

An evaluation toolkit for voice conversion models.

Voice-conversion-evaluation An evaluation toolkit for voice conversion models. Sample test pair Generate the metadata for evaluating models. The direc

Resources for the "Evaluating the Factual Consistency of Abstractive Text Summarization" paper

Evaluating the Factual Consistency of Abstractive Text Summarization Authors: Wojciech Kryściński, Bryan McCann, Caiming Xiong, and Richard Socher Int

Findings of ACL 2021

Assessing Dialogue Systems with Distribution Distances [arXiv][code] We propose to measure the performance of a dialogue system by computing the distr

Task-based datasets, preprocessing, and evaluation for sequence models.

SeqIO: Task-based datasets, preprocessing, and evaluation for sequence models. SeqIO is a library for processing sequential data to be fed into downst

[CVPR 2021] MiVOS - Mask Propagation module. Reproduced STM (and better) with training code :star2:. Semi-supervised video object segmentation evaluation.

MiVOS (CVPR 2021) - Mask Propagation Ho Kei Cheng, Yu-Wing Tai, Chi-Keung Tang [arXiv] [Paper PDF] [Project Page] [Papers with Code] This repo impleme

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

OCTIS: Comparing Topic Models is Simple! A python package to optimize and evaluate topic models (accepted at EACL2021 demo track)

OCTIS : Optimizing and Comparing Topic Models is Simple! OCTIS (Optimizing and Comparing Topic models Is Simple) aims at training, analyzing and compa