58 Repositories

Python explainable-ml Libraries

📦 PyTorch based visualization package for generating layer-wise explanations for CNNs.

Explainable CNNs 📦 Flexible visualization package for generating layer-wise explanations for CNNs. It is a common notion that a Deep Learning model i

Responsible AI Workshop: a series of tutorials & walkthroughs to illustrate how put responsible AI into practice

Responsible AI Workshop Responsible innovation is top of mind. As such, the tech industry as well as a growing number of organizations of all kinds in

Package towards building Explainable Forecasting and Nowcasting Models with State-of-the-art Deep Neural Networks and Dynamic Factor Model on Time Series data sets with single line of code. Also, provides utilify facility for time-series signal similarities matching, and removing noise from timeseries signals.

DeepXF: Explainable Forecasting and Nowcasting with State-of-the-art Deep Neural Networks and Dynamic Factor Model Also, verify TS signal similarities

This is an open source library implementing hyperbox-based machine learning algorithms

hyperbox-brain is a Python open source toolbox implementing hyperbox-based machine learning algorithms built on top of scikit-learn and is distributed

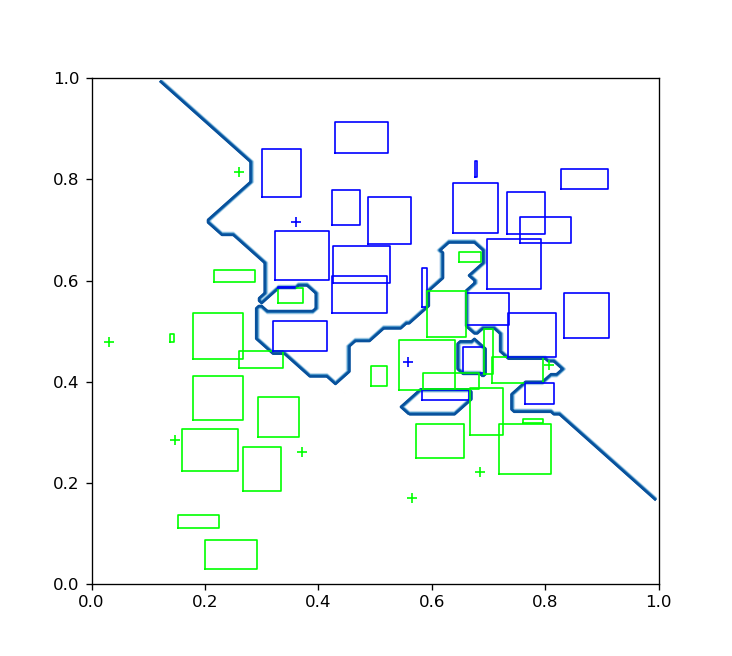

CLASSIX is a fast and explainable clustering algorithm based on sorting

CLASSIX Fast and explainable clustering based on sorting CLASSIX is a fast and explainable clustering algorithm based on sorting. Here are a few highl

Python package for concise, transparent, and accurate predictive modeling

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. 📚 docs • 📖 demo notebooks Modern

An implementation of IMLE-Net: An Interpretable Multi-level Multi-channel Model for ECG Classification

IMLE-Net: An Interpretable Multi-level Multi-channel Model for ECG Classification The repostiory consists of the code, results and data set links for

Which Style Makes Me Attractive? Interpretable Control Discovery and Counterfactual Explanation on StyleGAN

Interpretable Control Exploration and Counterfactual Explanation (ICE) on StyleGAN Which Style Makes Me Attractive? Interpretable Control Discovery an

Codes and scripts for "Explainable Semantic Space by Grounding Languageto Vision with Cross-Modal Contrastive Learning"

Visually Grounded Bert Language Model This repository is the official implementation of Explainable Semantic Space by Grounding Language to Vision wit

🔅 Shapash makes Machine Learning models transparent and understandable by everyone

🎉 What's new ? Version New Feature Description Tutorial 1.6.x Explainability Quality Metrics To help increase confidence in explainability methods, y

SCOUTER: Slot Attention-based Classifier for Explainable Image Recognition

SCOUTER: Slot Attention-based Classifier for Explainable Image Recognition PDF Abstract Explainable artificial intelligence has been gaining attention

A library for graph deep learning research

Documentation | Paper [JMLR] | Tutorials | Benchmarks | Examples DIG: Dive into Graphs is a turnkey library for graph deep learning research. Why DIG?

PyExplainer: A Local Rule-Based Model-Agnostic Technique (Explainable AI)

PyExplainer PyExplainer is a local rule-based model-agnostic technique for generating explanations (i.e., why a commit is predicted as defective) of J

EXplainable Artificial Intelligence (XAI)

EXplainable Artificial Intelligence (XAI) This repository includes the codes for different projects on eXplainable Artificial Intelligence (XAI) by th

The official start-up code for paper "FFA-IR: Towards an Explainable and Reliable Medical Report Generation Benchmark."

FFA-IR The official start-up code for paper "FFA-IR: Towards an Explainable and Reliable Medical Report Generation Benchmark." The framework is inheri

Resources for our AAAI 2022 paper: "LOREN: Logic-Regularized Reasoning for Interpretable Fact Verification".

LOREN Resources for our AAAI 2022 paper (pre-print): "LOREN: Logic-Regularized Reasoning for Interpretable Fact Verification". DEMO System Check out o

Build Low Code Automated Tensorflow, What-IF explainable models in just 3 lines of code.

Build Low Code Automated Tensorflow explainable models in just 3 lines of code.

Papers about explainability of GNNs

Papers about explainability of GNNs

Code accompanying the NeurIPS 2021 paper "Generating High-Quality Explanations for Navigation in Partially-Revealed Environments"

Generating High-Quality Explanations for Navigation in Partially-Revealed Environments This work presents an approach to explainable navigation under

This code is for eCaReNet: explainable Cancer Relapse Prediction Network.

eCaReNet This code is for eCaReNet: explainable Cancer Relapse Prediction Network. (Towards Explainable End-to-End Prostate Cancer Relapse Prediction

CinnaMon is a Python library which offers a number of tools to detect, explain, and correct data drift in a machine learning system

CinnaMon is a Python library which offers a number of tools to detect, explain, and correct data drift in a machine learning system

Image classification for projects and researches

This is a tool to help you quickly solve classification problems including: data analysis, training, report results and model explanation.

PyExplainer: A Local Rule-Based Model-Agnostic Technique (Explainable AI)

PyExplainer PyExplainer is a local rule-based model-agnostic technique for generating explanations (i.e., why a commit is predicted as defective) of J

The code of NeurIPS 2021 paper "Scalable Rule-Based Representation Learning for Interpretable Classification".

Rule-based Representation Learner This is a PyTorch implementation of Rule-based Representation Learner (RRL) as described in NeurIPS 2021 paper: Scal

LOFO (Leave One Feature Out) Importance calculates the importances of a set of features based on a metric of choice,

LOFO (Leave One Feature Out) Importance calculates the importances of a set of features based on a metric of choice, for a model of choice, by iteratively removing each feature from the set, and evaluating the performance of the model, with a validation scheme of choice, based on the chosen metric.

DrWhy is the collection of tools for eXplainable AI (XAI). It's based on shared principles and simple grammar for exploration, explanation and visualisation of predictive models.

Responsible Machine Learning With Great Power Comes Great Responsibility. Voltaire (well, maybe) How to develop machine learning models in a responsib

XAI - An eXplainability toolbox for machine learning

XAI - An eXplainability toolbox for machine learning XAI is a Machine Learning library that is designed with AI explainability in its core. XAI contai

moDel Agnostic Language for Exploration and eXplanation

moDel Agnostic Language for Exploration and eXplanation Overview Unverified black box model is the path to the failure. Opaqueness leads to distrust.

Fit interpretable models. Explain blackbox machine learning.

InterpretML - Alpha Release In the beginning machines learned in darkness, and data scientists struggled in the void to explain them. Let there be lig

Explainable Zero-Shot Topic Extraction

Zero-Shot Topic Extraction with Common-Sense Knowledge Graph This repository contains the code for reproducing the results reported in the paper "Expl

ACV is a python library that provides explanations for any machine learning model or data.

ACV is a python library that provides explanations for any machine learning model or data. It gives local rule-based explanations for any model or data and different Shapley Values for tree-based models.

Explainable Medical ImageSegmentation via GenerativeAdversarial Networks andLayer-wise Relevance Propagation

MedAI: Transparency in Medical Image Segmentation What is this repo This repo contains the code and experiments that are implemented to contribute in

SimplEx - Explaining Latent Representations with a Corpus of Examples

SimplEx - Explaining Latent Representations with a Corpus of Examples Code Author: Jonathan Crabbé ([email protected]) This repository contains the imp

A framework for attentive explainable deep learning on tabular data

🧠 kendrite A framework for attentive explainable deep learning on tabular data 💨 Quick start kedro run 🧱 Built upon Technology Description Links ke

JittorVis - Visual understanding of deep learning models

JittorVis: Visual understanding of deep learning model JittorVis is an open-source library for understanding the inner workings of Jittor models by vi

Temporal Meta-path Guided Explainable Recommendation (WSDM2021)

Temporal Meta-path Guided Explainable Recommendation (WSDM2021) TMER Code of paper "Temporal Meta-path Guided Explainable Recommendation". Requirement

Reinforcement Knowledge Graph Reasoning for Explainable Recommendation

Reinforcement Knowledge Graph Reasoning for Explainable Recommendation This repository contains the source code of the SIGIR 2019 paper "Reinforcement

Jointly Learning Explainable Rules for Recommendation with Knowledge Graph

Jointly Learning Explainable Rules for Recommendation with Knowledge Graph

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

Using / reproducing ACD from the paper "Hierarchical interpretations for neural network predictions" 🧠 (ICLR 2019)

Hierarchical neural-net interpretations (ACD) 🧠 Produces hierarchical interpretations for a single prediction made by a pytorch neural network. Offic

Collection of NLP model explanations and accompanying analysis tools

Thermostat is a large collection of NLP model explanations and accompanying analysis tools. Combines explainability methods from the captum library wi

CARLA: A Python Library to Benchmark Algorithmic Recourse and Counterfactual Explanation Algorithms

CARLA - Counterfactual And Recourse Library CARLA is a python library to benchmark counterfactual explanation and recourse models. It comes out-of-the

A collection of research papers and software related to explainability in graph machine learning.

A collection of research papers and software related to explainability in graph machine learning.

Repository for the COLING 2020 paper "Explainable Automated Fact-Checking: A Survey."

Explainable Fact Checking: A Survey This repository and the accompanying webpage contain resources for the paper "Explainable Fact Checking: A Survey"

Code for CVPR2021 "Visualizing Adapted Knowledge in Domain Transfer". Visualization for domain adaptation. #explainable-ai

Visualizing Adapted Knowledge in Domain Transfer @inproceedings{hou2021visualizing, title={Visualizing Adapted Knowledge in Domain Transfer}, auth

Implementation of the paper "Shapley Explanation Networks"

Shapley Explanation Networks Implementation of the paper "Shapley Explanation Networks" at ICLR 2021. Note that this repo heavily uses the experimenta

An Explainable Leaderboard for NLP

ExplainaBoard: An Explainable Leaderboard for NLP Introduction | Website | Download | Backend | Paper | Video | Bib Introduction ExplainaBoard is an i

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Learning Intents behind Interactions with Knowledge Graph for Recommendation, WWW2021

Learning Intents behind Interactions with Knowledge Graph for Recommendation This is our PyTorch implementation for the paper: Xiang Wang, Tinglin Hua

Interpretability and explainability of data and machine learning models

AI Explainability 360 (v0.2.1) The AI Explainability 360 toolkit is an open-source library that supports interpretability and explainability of datase

Contrastive Explanation (Foil Trees), developed at TNO/Utrecht University

Contrastive Explanation (Foil Trees) Contrastive and counterfactual explanations for machine learning (ML) Marcel Robeer (2018-2020), TNO/Utrecht Univ

A data-driven approach to quantify the value of classifiers in a machine learning ensemble.

Documentation | External Resources | Research Paper Shapley is a Python library for evaluating binary classifiers in a machine learning ensemble. The

Predictive AI layer for existing databases.

MindsDB is an open-source AI layer for existing databases that allows you to effortlessly develop, train and deploy state-of-the-art machine learning

Explainability for Vision Transformers (in PyTorch)

Explainability for Vision Transformers (in PyTorch) This repository implements methods for explainability in Vision Transformers

Debugging, monitoring and visualization for Python Machine Learning and Data Science

Welcome to TensorWatch TensorWatch is a debugging and visualization tool designed for data science, deep learning and reinforcement learning from Micr

A Python package implementing a new model for text classification with visualization tools for Explainable AI :octocat:

A Python package implementing a new model for text classification with visualization tools for Explainable AI 🍣 Online live demos: http://tworld.io/s

A data-driven approach to quantify the value of classifiers in a machine learning ensemble.

Documentation | External Resources | Research Paper Shapley is a Python library for evaluating binary classifiers in a machine learning ensemble. The

Predictive AI layer for existing databases.

MindsDB is an open-source AI layer for existing databases that allows you to effortlessly develop, train and deploy state-of-the-art machine learning