1380 Repositories

Python language-modeling Libraries

Vision-Language Pre-training for Image Captioning and Question Answering

VLP This repo hosts the source code for our AAAI2020 work Vision-Language Pre-training (VLP). We have released the pre-trained model on Conceptual Cap

Research code for ECCV 2020 paper "UNITER: UNiversal Image-TExt Representation Learning"

UNITER: UNiversal Image-TExt Representation Learning This is the official repository of UNITER (ECCV 2020). This repository currently supports finetun

Oscar and VinVL

Oscar: Object-Semantics Aligned Pre-training for Vision-and-Language Tasks VinVL: Revisiting Visual Representations in Vision-Language Models Updates

Research Code for NeurIPS 2020 Spotlight paper "Large-Scale Adversarial Training for Vision-and-Language Representation Learning": UNITER adversarial training part

VILLA: Vision-and-Language Adversarial Training This is the official repository of VILLA (NeurIPS 2020 Spotlight). This repository currently supports

Contrastive Language-Image Pretraining

CLIP [Blog] [Paper] [Model Card] [Colab] CLIP (Contrastive Language-Image Pre-Training) is a neural network trained on a variety of (image, text) pair

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting Created by Yongming Rao*, Wenliang Zhao*, Guangyi Chen, Yansong Tang, Zheng Z

Learn to code in any language. If

Learn to Code It is an intiiative undertaken by Student Ambassadors Club, Jamshoro for students who are absolute begineers in programming and want to

Linear programming solver for paper-reviewer matching and mind-matching

Paper-Reviewer Matcher A python package for paper-reviewer matching algorithm based on topic modeling and linear programming. The algorithm is impleme

The worst and slowest programming language you have ever seen

VenumLang this is a complete joke EXAMPLE: fizzbuzz in venumlang x = 0

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting Created by Yongming Rao*, Wenliang Zhao*, Guangyi Chen, Yansong Tang, Zheng Z

Advent of Code is an Advent calendar of small programming puzzles for a variety of skill sets and skill levels that can be solved in any programming language you like.

Advent Of Code 2021 - Python English Advent of Code is an Advent calendar of small programming puzzles for a variety of skill sets and skill levels th

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

Open source annotation tool for machine learning practitioners.

doccano doccano is an open source text annotation tool for humans. It provides annotation features for text classification, sequence labeling and sequ

Tools for curating biomedical training data for large-scale language modeling

Tools for curating biomedical training data for large-scale language modeling

Implementation of the paper 'Sentence Bottleneck Autoencoders from Transformer Language Models'

Introduction This repository contains the code for the paper Sentence Bottleneck Autoencoders from Transformer Language Models by Ivan Montero, Nikola

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network)

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network) BERTAC is a framework that combines a

Diagnostic tests for linguistic capacities in language models

LM diagnostics This repository contains the diagnostic datasets and experimental code for What BERT is not: Lessons from a new suite of psycholinguist

Language model Prompt And Query Archive

LPAQA: Language model Prompt And Query Archive This repository contains data and code for the paper How Can We Know What Language Models Know? Install

Code for Editing Factual Knowledge in Language Models

KnowledgeEditor Code for Editing Factual Knowledge in Language Models (https://arxiv.org/abs/2104.08164). @inproceedings{decao2021editing, title={Ed

Code for ACL2021 long paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases

LANKA This is the source code for paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases (ACL 2021, long paper) Referen

A Model for Natural Language Attack on Text Classification and Inference

TextFooler A Model for Natural Language Attack on Text Classification and Inference This is the source code for the paper: Jin, Di, et al. "Is BERT Re

The official implementation of "BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Identify Analogies?, ACL 2021 main conference"

BERT is to NLP what AlexNet is to CV This is the official implementation of BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Iden

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

ALBERT ***************New March 28, 2020 *************** Add a colab tutorial to run fine-tuning for GLUE datasets. ***************New January 7, 2020

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

Code associated with the Don't Stop Pretraining ACL 2020 paper

dont-stop-pretraining Code associated with the Don't Stop Pretraining ACL 2020 paper Citation @inproceedings{dontstoppretraining2020, author = {Suchi

Multi-Task Deep Neural Networks for Natural Language Understanding

New Release We released Adversarial training for both LM pre-training/finetuning and f-divergence. Large-scale Adversarial training for LMs: ALUM code

Training and evaluation codes for the BertGen paper (ACL-IJCNLP 2021)

BERTGEN This repository is the implementation of the paper "BERTGEN: Multi-task Generation through BERT" (https://arxiv.org/abs/2106.03484). The codeb

This repository contains the code for "Exploiting Cloze Questions for Few-Shot Text Classification and Natural Language Inference"

Pattern-Exploiting Training (PET) This repository contains the code for Exploiting Cloze Questions for Few-Shot Text Classification and Natural Langua

ACL'2021: LM-BFF: Better Few-shot Fine-tuning of Language Models

LM-BFF (Better Few-shot Fine-tuning of Language Models) This is the implementation of the paper Making Pre-trained Language Models Better Few-shot Lea

Revisiting Self-Training for Few-Shot Learning of Language Model.

SFLM This is the implementation of the paper Revisiting Self-Training for Few-Shot Learning of Language Model. SFLM is short for self-training for few

PyTorch source code of NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models"

This repository contains source code for NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models" (P

Source code for the ACL-IJCNLP 2021 paper entitled "T-DNA: Taming Pre-trained Language Models with N-gram Representations for Low-Resource Domain Adaptation" by Shizhe Diao et al.

T-DNA Source code for the ACL-IJCNLP 2021 paper entitled Taming Pre-trained Language Models with N-gram Representations for Low-Resource Domain Adapta

Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF shows significant improvements over baseline fine-tuning without data filtration.

Information Gain Filtration Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF sho

Sodium is a general purpose programming language which is instruction-oriented (a new programming concept that we are developing and devising) [Still developing...]

Sodium Programming Language Sodium is a general purpose programming language which is instruction-oriented (a new programming concept that we are deve

DANeS is an open-source E-newspaper dataset by collaboration between DATASET JSC (dataset.vn) and AIV Group (aivgroup.vn)

DANeS - Open-source E-newspaper dataset Source: Technology vector created by macrovector - www.freepik.com. DANeS is an open-source E-newspaper datase

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

Nateve transpiler developed with python.

Adam Adam is a Nateve Programming Language transpiler developed using Python. Nateve Nateve is a new general domain programming language open source i

A simple Programming Language

R.S.O.C. A custom built programming language About The Project R.S.O.C. is a custom built programming language very similar to a low-level 8085 progra

Agile Threat Modeling Toolkit

Threagile is an open-source toolkit for agile threat modeling:

A Pythonic framework for threat modeling

pytm: A Pythonic framework for threat modeling Introduction Traditional threat modeling too often comes late to the party, or sometimes not at all. In

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Pytorch implementation of the paper "Topic Modeling Revisited: A Document Graph-based Neural Network Perspective"

Graph Neural Topic Model (GNTM) This is the pytorch implementation of the paper "Topic Modeling Revisited: A Document Graph-based Neural Network Persp

Think Big, Teach Small: Do Language Models Distil Occam’s Razor?

Think Big, Teach Small: Do Language Models Distil Occam’s Razor? Software related to the paper "Think Big, Teach Small: Do Language Models Distil Occa

Evolutionary Scale Modeling (esm): Pretrained language models for proteins

Evolutionary Scale Modeling This repository contains code and pre-trained weights for Transformer protein language models from Facebook AI Research, i

A Simple Long-Tailed Rocognition Baseline via Vision-Language Model

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

Official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

A paper list of pre-trained language models (PLMs).

Large-scale pre-trained language models (PLMs) such as BERT and GPT have achieved great success and become a milestone in NLP.

Various Algorithms for Short Text Mining

Short Text Mining in Python Introduction This package shorttext is a Python package that facilitates supervised and unsupervised learning for short te

This repository contains the implementation of the paper: Federated Distillation of Natural Language Understanding with Confident Sinkhorns

Federated Distillation of Natural Language Understanding with Confident Sinkhorns This repository provides an alternative method for ensembled distill

WinPython is a portable distribution of the Python programming language for Windows

WinPython tools Copyright © 2012-2013 Pierre Raybaut Copyright © 2014-2019+ The Winpython development team https://github.com/winpython/ Licensed unde

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

Pre-Training 3D Point Cloud Transformers with Masked Point Modeling

Point-BERT: Pre-Training 3D Point Cloud Transformers with Masked Point Modeling Created by Xumin Yu*, Lulu Tang*, Yongming Rao*, Tiejun Huang, Jie Zho

Source Code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chinese Question Matching

Description The source code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chin

LAnguage Model Analysis

LAMA: LAnguage Model Analysis LAMA is a probe for analyzing the factual and commonsense knowledge contained in pretrained language models. The dataset

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER (EMNLP 2021).

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER. @inproceedings{tedes

Simple programming language built on Python.

Serial Another programming language. Built on Python. Building and running program In order to run the program on serial, unfortunately you still need

An optimized prompt tuning strategy comparable to fine-tuning across model scales and tasks.

P-tuning v2 P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks An optimized prompt tuning strategy achievi

Training open neural machine translation models

Train Opus-MT models This package includes scripts for training NMT models using MarianNMT and OPUS data for OPUS-MT. More details are given in the Ma

A simple telegram bot to recognize lengthy voice files to text and vice versa with multiple language support.

Voicebot A simple Telegram bot to convert lengthy voice clips to text and vice versa with supporting languages. Mandatory Variables API_HASH - Yo

Calculator in command line using python programming language

Calculator in command line using python programming language University of the People Python fundamental Chapter 5 Conditionals and recursion The main

CupScript is a simple programing language made with python

CupScript CupScript is a simple programming language made with python It includes some basic functions, variables, loops, and some other built in func

Translate U is capable of translating the text present in an image from one language to the other.

Translate U is capable of translating the text present in an image from one language to the other. The app uses OCR and Google translate to identify and translate across 80+ languages.

A lightweight Python module and command-line tool for generating NATO APP-6(D) compliant military symbols from both ID codes and natural language names

Python military symbols This is a lightweight Python module, including a command-line script, to generate NATO APP-6(D) compliant military symbol icon

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

Conversational text Analysis using various NLP techniques

PyConverse Let me try first Installation pip install pyconverse Usage Please try this notebook that demos the core functionalities: basic usage noteb

This repository containing cross-section cut and fill calculations using Python programming language.

cross-section This repository is containing cut and fill calculations for cross-section using Python programming language. This codes is made to calcu

Supplementary code for TISMIR paper "Sliding-Window Pitch-Class Histograms as a Means of Modeling Musical Form"

Sliding-Window Pitch-Class Histograms as a Means of Modeling Musical Form This is supplementary code for the TISMIR paper Sliding-Window Pitch-Class H

Text-to-Speech for Belarusian language

title emoji colorFrom colorTo sdk app_file pinned Belarusian TTS 🐸 green green gradio app.py false Belarusian TTS 📢 🤖 Belarusian TTS (text-to-speec

A simple language for new programmers and a toy language ;)

Yell An extremely simple, yet powerful language for new programmers, as well as a toy language ;) Explore the docs » Report Bug · Request Feature Yell

A paper list for aspect based sentiment analysis.

Aspect-Based-Sentiment-Analysis A paper list for aspect based sentiment analysis. Survey [IEEE-TAC-20]: Issues and Challenges of Aspect-based Sentimen

My programming language named JoLang. (Mainly created for fun)

JoLang status: not ready So this is my programming language which I decided to name 'JoLang' (inspired by Jonathan and GoLang). Features I implemented

Code for text augmentation method leveraging large-scale language models

HyperMix Code for our paper GPT3Mix and conducting classification experiments using GPT-3 prompt-based data augmentation. Getting Started Installing P

Modeling Temporal Concept Receptive Field Dynamically for Untrimmed Video Analysis

Modeling Temporal Concept Receptive Field Dynamically for Untrimmed Video Analysis This is a PyTorch implementation of the model described in our pape

Using Language Model to Bootstrap Human Activity Recognition Ambient Sensors Based in Smart Homes

Using Language Model to Bootstrap Human Activity Recognition Ambient Sensors Based in Smart Homes This repository is the official implementation of Us

🧪 Cutting-edge experimental spaCy components and features

spacy-experimental: Cutting-edge experimental spaCy components and features This package includes experimental components and features for spaCy v3.x,

A dashboard for your Terminal written in the Python 3 language,

termDash is a handy little program, written in the Python 3 language, and is a small little dashboard for your terminal, designed to be a utility to help people, as well as helping new users get used to the terminal.

A TensorFlow 2.x implementation of Masked Autoencoders Are Scalable Vision Learners

Masked Autoencoders Are Scalable Vision Learners A TensorFlow implementation of Masked Autoencoders Are Scalable Vision Learners [1]. Our implementati

Self-Supervised Document-to-Document Similarity Ranking via Contextualized Language Models and Hierarchical Inference

Self-Supervised Document Similarity Ranking (SDR) via Contextualized Language Models and Hierarchical Inference This repo is the implementation for SD

fastai ulmfit - Pretraining the Language Model, Fine-Tuning and training a Classifier

fast.ai ULMFiT with SentencePiece from pretraining to deployment Motivation: Why even bother with a non-BERT / Transformer language model? Short answe

An easy to use Natural Language Processing library and framework for predicting, training, fine-tuning, and serving up state-of-the-art NLP models.

Welcome to AdaptNLP A high level framework and library for running, training, and deploying state-of-the-art Natural Language Processing (NLP) models

A small project of two newbies, who wanted to learn something about Python language programming, via fun way.

HaveFun A small project of two newbies, who wanted to learn something about Python language programming, via fun way. What's this project about? Well.

jonny is a stack based programming language

jonny-lang jonny is a stack based programming language also compiling jonny files currently doesnt work on windows you can probably compile jonny file

Fried Chicken Programming Language

Fried-Chicken Fried Chicken Programming Language How To Run Once downloaded and opened, choose any file for code. Any file extensions work. Just make

State of the art faster Natural Language Processing in Tensorflow 2.0 .

tf-transformers: faster and easier state-of-the-art NLP in TensorFlow 2.0 ****************************************************************************

Create beautiful diagrams just by typing mathematical notation in plain text.

Penrose Penrose is an early-stage system that is still in development. Our system is not ready for contributions or public use yet, but hopefully will

A fast, efficient universal vector embedding utility package.

Magnitude: a fast, simple vector embedding utility library A feature-packed Python package and vector storage file format for utilizing vector embeddi

A natural language modeling framework based on PyTorch

Overview PyText is a deep-learning based NLP modeling framework built on PyTorch. PyText addresses the often-conflicting requirements of enabling rapi

A basic interpreted programming language written in python

shin A basic interpreted programming language written in python. extension You can use our own extension ".shin". Example: main.shin How to start Clon

Cookiecutter templates for Serverless applications using AWS SAM and the Rust programming language.

Cookiecutter SAM template for Lambda functions in Rust This is a Cookiecutter template to create a serverless application based on the Serverless Appl

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models.

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models. Solve a variety of tasks with pre-trained models or finetune them in

A Large Scale Benchmark for Individual Treatment Effect Prediction and Uplift Modeling

large-scale-ITE-UM-benchmark This repository contains code and data to reproduce the results of the paper "A Large Scale Benchmark for Individual Trea

PyTorch implementation for Score-Based Generative Modeling through Stochastic Differential Equations (ICLR 2021, Oral)

Score-Based Generative Modeling through Stochastic Differential Equations This repo contains a PyTorch implementation for the paper Score-Based Genera

This repo contains implementation of different architectures for emotion recognition in conversations.

Emotion Recognition in Conversations Updates 🔥 🔥 🔥 Date Announcements 03/08/2021 🎆 🎆 We have released a new dataset M2H2: A Multimodal Multiparty

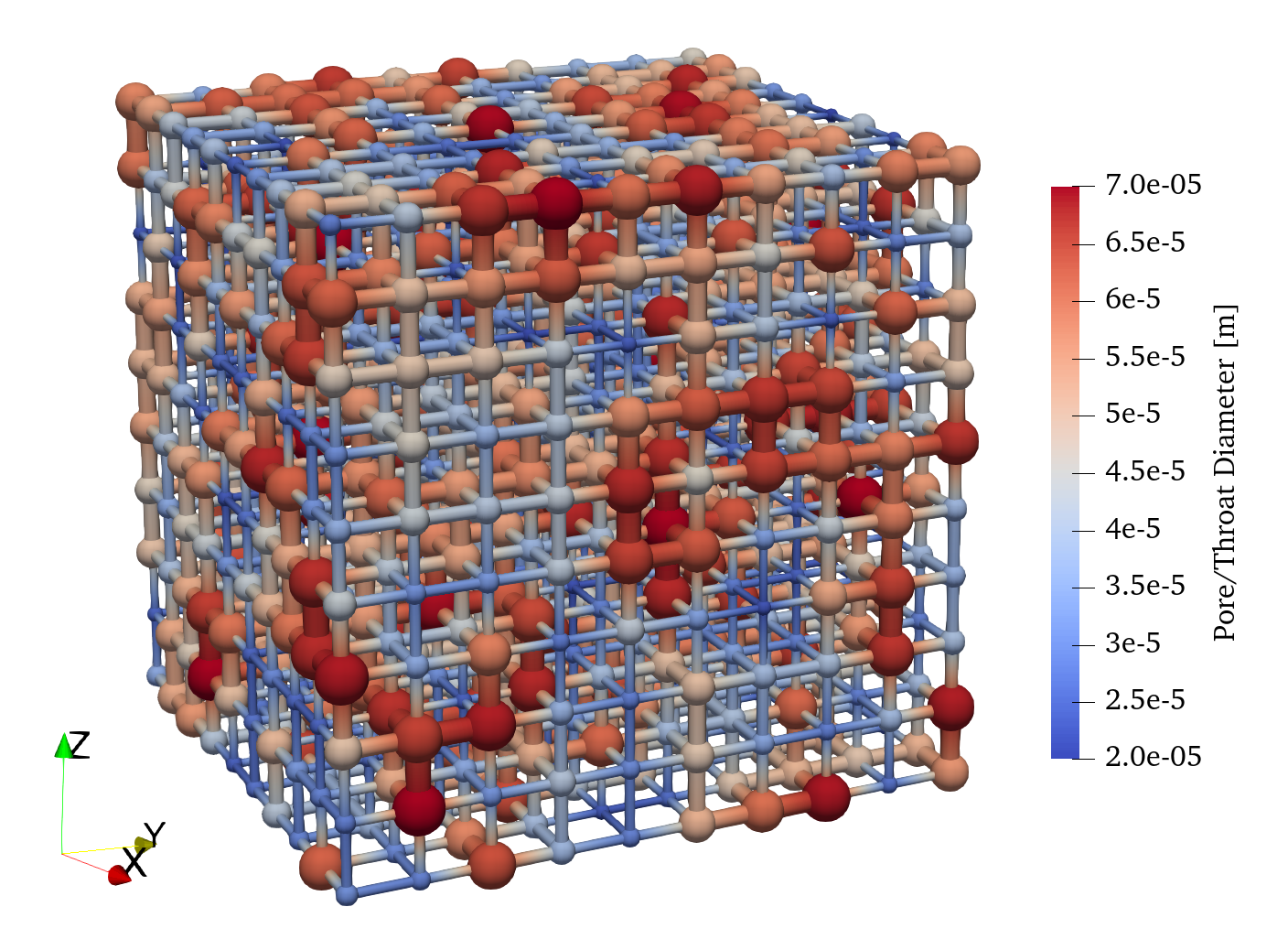

A Python package for performing pore network modeling of porous media

Overview of OpenPNM OpenPNM is a comprehensive framework for performing pore network simulations of porous materials. More Information For more detail

MICOM is a Python package for metabolic modeling of microbial communities

Welcome MICOM is a Python package for metabolic modeling of microbial communities currently developed in the Gibbons Lab at the Institute for Systems

BookNLP, a natural language processing pipeline for books

BookNLP BookNLP is a natural language processing pipeline that scales to books and other long documents (in English), including: Part-of-speech taggin

Convert Text-to Handwriting Using Python

Convert Text-to Handwriting Using Python Description In this project we'll use python library that's "pywhatkit" for converting text to handwriting. t