2334 Repositories

Python pre-trained-language-models Libraries

HiddenMarkovModel implements hidden Markov models with Gaussian mixtures as distributions on top of TensorFlow

Class HiddenMarkovModel HiddenMarkovModel implements hidden Markov models with Gaussian mixtures as distributions on top of TensorFlow 2.0 Installatio

This repository includes the code of the sequence-to-sequence model for discontinuous constituent parsing described in paper Discontinuous Grammar as a Foreign Language.

Discontinuous Grammar as a Foreign Language This repository includes the code of the sequence-to-sequence model for discontinuous constituent parsing

Nook is a simple, concatenative programming language written in Python.

Nook Nook is a simple, concatenative programming language written in Python. Status Nook is currently WIP. It lacks a lot of basic feature, and will n

Fuzz a language by mixing up only few words.

afasi Fuzz a language by mixing up only few words. Status Beta. Note: The default branch is default. Use Examples Version General Help Translate Help

🚀 emojimash 🚀 is a programming language with ALL THE EMOJI

🚀 emojimash 🚀 is a programming language with ALL THE EMOJI

Repository for the paper "Exploring the Sensory Spaces of English Perceptual Verbs in Natural Language Data"

Sensory Spaces of English Perceptual Verbs This repository contains the code and collocational data described in the paper "Exploring the Sensory Spac

A collection of models for image-text generation in ACM MM 2021.

Bi-directional Image and Text Generation UMT-BITG (image & text generator) Unifying Multimodal Transformer for Bi-directional Image and Text Generatio

Code for reproducing our paper: LMSOC: An Approach for Socially Sensitive Pretraining

LMSOC: An Approach for Socially Sensitive Pretraining Code for reproducing the paper LMSOC: An Approach for Socially Sensitive Pretraining to appear a

This repository contains all source code, pre-trained models related to the paper "An Empirical Study on GANs with Margin Cosine Loss and Relativistic Discriminator"

An Empirical Study on GANs with Margin Cosine Loss and Relativistic Discriminator This is a Pytorch implementation for the paper "An Empirical Study o

Knowledge Oriented Programming Language

KoPL: 面向知识的推理问答编程语言 安装 | 快速开始 | 文档 KoPL全称 Knowledge oriented Programing Language, 是一个为复杂推理问答而设计的编程语言。我们可以将自然语言问题表示为由基本函数组合而成的KoPL程序,程序运行的结果就是问题的答案。目前,

A new mini-batch framework for optimal transport in deep generative models, deep domain adaptation, approximate Bayesian computation, color transfer, and gradient flow.

BoMb-OT Python3 implementation of the papers On Transportation of Mini-batches: A Hierarchical Approach and Improving Mini-batch Optimal Transport via

cairo_kernel is a simple Jupyter kernel for Cairo a smart contract programing language for STARKs.

cairo_kernel cairo_kernel is a simple Jupyter kernel for Cairo a smart contract programing language for STARKs. Installation Install virtualenv virtua

Recognition of 38 speech commands in russian. Based on Yandex Cup 2021 ML Challenge: ASR

Speech_38_ru_commands Recognition of 38 speech commands in russian. Based on Yandex Cup 2021 ML Challenge: ASR Программа умеет распознавать 38 ключевы

Reverse engineer your pytorch vision models, in style

🔍 Rover Reverse engineer your CNNs, in style Rover will help you break down your CNN and visualize the features from within the model. No need to wri

A bunch of random PyTorch models using PyTorch's C++ frontend

PyTorch Deep Learning Models using the C++ frontend Gettting started Clone the repo 1. https://github.com/mrdvince/pytorchcpp 2. cd fashionmnist or

Blue Brain text mining toolbox for semantic search and structured information extraction

Blue Brain Search Source Code DOI Data & Models DOI Documentation Latest Release Python Versions License Build Status Static Typing Code Style Securit

A Word Level Transformer layer based on PyTorch and 🤗 Transformers.

Transformer Embedder A Word Level Transformer layer based on PyTorch and 🤗 Transformers. How to use Install the library from PyPI: pip install transf

LWCC: A LightWeight Crowd Counting library for Python that includes several pretrained state-of-the-art models.

LWCC: A LightWeight Crowd Counting library for Python LWCC is a lightweight crowd counting framework for Python. It wraps four state-of-the-art models

JittorVis - Visual understanding of deep learning models

JittorVis: Visual understanding of deep learning model JittorVis is an open-source library for understanding the inner workings of Jittor models by vi

meProp: Sparsified Back Propagation for Accelerated Deep Learning (ICML 2017)

meProp The codes were used for the paper meProp: Sparsified Back Propagation for Accelerated Deep Learning with Reduced Overfitting (ICML 2017) [pdf]

A modular framework for vision & language multimodal research from Facebook AI Research (FAIR)

MMF is a modular framework for vision and language multimodal research from Facebook AI Research. MMF contains reference implementations of state-of-t

Implementation of EMNLP 2017 Paper "Natural Language Does Not Emerge 'Naturally' in Multi-Agent Dialog" using PyTorch and ParlAI

Language Emergence in Multi Agent Dialog Code for the Paper Natural Language Does Not Emerge 'Naturally' in Multi-Agent Dialog Satwik Kottur, José M.

On-device speech-to-intent engine powered by deep learning

Rhino Made in Vancouver, Canada by Picovoice Rhino is Picovoice's Speech-to-Intent engine. It directly infers intent from spoken commands within a giv

[EMNLP 2021] Mirror-BERT: Converting Pretrained Language Models to universal text encoders without labels.

[EMNLP 2021] Mirror-BERT: Converting Pretrained Language Models to universal text encoders without labels.

Train a state-of-the-art yolov3 object detector from scratch!

TrainYourOwnYOLO: Building a Custom Object Detector from Scratch This repo let's you train a custom image detector using the state-of-the-art YOLOv3 c

NLMpy - A Python package to create neutral landscape models

NLMpy is a Python package for the creation of neutral landscape models that are widely used by landscape ecologists to model ecological patterns

Learning to Prompt for Vision-Language Models.

CoOp Paper: Learning to Prompt for Vision-Language Models Authors: Kaiyang Zhou, Jingkang Yang, Chen Change Loy, Ziwei Liu CoOp (Context Optimization)

Implementation of CVAE. Trained CVAE on faces from UTKFace Dataset to produce synthetic faces with a given degree of happiness/smileyness.

Conditional Smiles! (SmileCVAE) About Implementation of AE, VAE and CVAE. Trained CVAE on faces from UTKFace Dataset. Using an encoding of the Smile-s

The official code for PRIMER: Pyramid-based Masked Sentence Pre-training for Multi-document Summarization

PRIMER The official code for PRIMER: Pyramid-based Masked Sentence Pre-training for Multi-document Summarization. PRIMER is a pre-trained model for mu

YouRefIt: Embodied Reference Understanding with Language and Gesture

YouRefIt: Embodied Reference Understanding with Language and Gesture YouRefIt: Embodied Reference Understanding with Language and Gesture by Yixin Che

A collection of models for image - text generation in ACM MM 2021.

Bi-directional Image and Text Generation UMT-BITG (image & text generator) Unifying Multimodal Transformer for Bi-directional Image and Text Generatio

Implementation of Natural Language Code Search in the project CodeBERT: A Pre-Trained Model for Programming and Natural Languages.

CodeBERT-Implementation In this repo we have replicated the paper CodeBERT: A Pre-Trained Model for Programming and Natural Languages. We are interest

Watson Natural Language Understanding and Knowledge Studio

Material de demonstração dos serviços: Watson Natural Language Understanding e Knowledge Studio Visão Geral: https://www.ibm.com/br-pt/cloud/watson-na

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

PyTorch implementation of D2C: Diffuison-Decoding Models for Few-shot Conditional Generation.

D2C: Diffuison-Decoding Models for Few-shot Conditional Generation Project | Paper PyTorch implementation of D2C: Diffuison-Decoding Models for Few-sh

A Diagnostic Dataset for Compositional Language and Elementary Visual Reasoning

CLEVR Dataset Generation This is the code used to generate the CLEVR dataset as described in the paper: CLEVR: A Diagnostic Dataset for Compositional

Connectionist Temporal Classification (CTC) decoding algorithms: best path, beam search, lexicon search, prefix search, and token passing. Implemented in Python.

CTC Decoding Algorithms Update 2021: installable Python package Python implementation of some common Connectionist Temporal Classification (CTC) decod

Rendering color and depth images for ShapeNet models.

Color & Depth Renderer for ShapeNet This library includes the tools for rendering multi-view color and depth images of ShapeNet models. Physically bas

PyTorch image models, scripts, pretrained weights -- ResNet, ResNeXT, EfficientNet, EfficientNetV2, NFNet, Vision Transformer, MixNet, MobileNet-V3/V2, RegNet, DPN, CSPNet, and more

PyTorch Image Models Sponsors What's New Introduction Models Features Results Getting Started (Documentation) Train, Validation, Inference Scripts Awe

First-Order Probabilistic Programming Language

FOPPL: A First-Order Probabilistic Programming Language This is an implementation of FOPPL, an S-expression based probabilistic programming language d

Source code for the paper "PLOME: Pre-training with Misspelled Knowledge for Chinese Spelling Correction" in ACL2021

PLOME:Pre-training with Misspelled Knowledge for Chinese Spelling Correction (ACL2021) This repository provides the code and data of the work in ACL20

Class activation maps for your PyTorch models (CAM, Grad-CAM, Grad-CAM++, Smooth Grad-CAM++, Score-CAM, SS-CAM, IS-CAM, XGrad-CAM, Layer-CAM)

TorchCAM: class activation explorer Simple way to leverage the class-specific activation of convolutional layers in PyTorch. Quick Tour Setting your C

An NLP library with Awesome pre-trained Transformer models and easy-to-use interface, supporting wide-range of NLP tasks from research to industrial applications.

简体中文 | English News [2021-10-12] PaddleNLP 2.1版本已发布!新增开箱即用的NLP任务能力、Prompt Tuning应用示例与生成任务的高性能推理! 🎉 更多详细升级信息请查看Release Note。 [2021-08-22]《千言:面向事实一致性的生

Production First and Production Ready End-to-End Speech Recognition Toolkit

WeNet 中文版 Discussions | Docs | Papers | Runtime (x86) | Runtime (android) | Pretrained Models We share neural Net together. The main motivation of WeN

A high-level Python library for Quantum Natural Language Processing

lambeq About lambeq is a toolkit for quantum natural language processing (QNLP). Documentation: https://cqcl.github.io/lambeq/ User support: lambeq-su

Mengzi Pretrained Models

中文 | English Mengzi 尽管预训练语言模型在 NLP 的各个领域里得到了广泛的应用,但是其高昂的时间和算力成本依然是一个亟需解决的问题。这要求我们在一定的算力约束下,研发出各项指标更优的模型。 我们的目标不是追求更大的模型规模,而是轻量级但更强大,同时对部署和工业落地更友好的模型。

The World of an Octopus: How Reporting Bias Influences a Language Model's Perception of Color

The World of an Octopus: How Reporting Bias Influences a Language Model's Perception of Color Overview Code and dataset for The World of an Octopus: H

PyMultiDictionary is a Dictionary Module for Python 3+ to get meanings, translations, synonyms and antonyms of words in 20 different languages

PyMultiDictionary PyMultiDictionary is a Dictionary Module for Python 3+ to get meanings, translations, synonyms and antonyms of words in 20 different

Scalable training for dense retrieval models.

Scalable implementation of dense retrieval. Training on cluster By default it trains locally: PYTHONPATH=.:$PYTHONPATH python dpr_scale/main.py traine

NVIDIA Merlin is an open source library providing end-to-end GPU-accelerated recommender systems, from feature engineering and preprocessing to training deep learning models and running inference in production.

NVIDIA Merlin NVIDIA Merlin is an open source library designed to accelerate recommender systems on NVIDIA’s GPUs. It enables data scientists, machine

Crosslingual Segmental Language Model

Crosslingual Segmental Language Model This repository contains the code from Multilingual unsupervised sequence segmentation transfers to extremely lo

Proposed n-stage Latent Dirichlet Allocation method - A Novel Approach for LDA

n-stage Latent Dirichlet Allocation (n-LDA) Proposed n-LDA & A Novel Approach for classical LDA Latent Dirichlet Allocation (LDA) is a generative prob

The official code for PRIMER: Pyramid-based Masked Sentence Pre-training for Multi-document Summarization

PRIMER The official code for PRIMER: Pyramid-based Masked Sentence Pre-training for Multi-document Summarization. PRIMER is a pre-trained model for mu

This repository contains the code for the paper in EMNLP 2021: "HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression".

HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression This repository contains the code for the paper in EM

A framework to train language models to learn invariant representations.

Invariant Language Modeling Implementation of the training for invariant language models. Motivation Modern pretrained language models are critical co

PixelPyramids: Exact Inference Models from Lossless Image Pyramids (ICCV 2021)

PixelPyramids: Exact Inference Models from Lossless Image Pyramids This repository contains the PyTorch implementation of the paper PixelPyramids: Exa

BEAMetrics: Benchmark to Evaluate Automatic Metrics in Natural Language Generation

BEAMetrics: Benchmark to Evaluate Automatic Metrics in Natural Language Generation Installing The Dependencies $ conda create --name beametrics python

Multi-Task Pre-Training for Plug-and-Play Task-Oriented Dialogue System

Multi-Task Pre-Training for Plug-and-Play Task-Oriented Dialogue System Authors: Yixuan Su, Lei Shu, Elman Mansimov, Arshit Gupta, Deng Cai, Yi-An Lai

VLG-Net: Video-Language Graph Matching Networks for Video Grounding

VLG-Net: Video-Language Graph Matching Networks for Video Grounding Introduction Official repository for VLG-Net: Video-Language Graph Matching Networ

Discovering and Achieving Goals via World Models

Discovering and Achieving Goals via World Models [Project Website] [Benchmark Code] [Video (2min)] [Oral Talk (13min)] [Paper] Russell Mendonca*1, Ole

SPRING is a seq2seq model for Text-to-AMR and AMR-to-Text (AAAI2021).

SPRING This is the repo for SPRING (Symmetric ParsIng aNd Generation), a novel approach to semantic parsing and generation, presented at AAAI 2021. Wi

A Library for Modelling Probabilistic Hierarchical Graphical Models in PyTorch

A Library for Modelling Probabilistic Hierarchical Graphical Models in PyTorch

MIT-Machine Learning with Python–From Linear Models to Deep Learning

MIT-Machine Learning with Python–From Linear Models to Deep Learning | One of the 5 courses in MIT MicroMasters in Statistics & Data Science Welcome t

![[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis](https://raw.githubusercontent.com/EndlessSora/focal-frequency-loss/master/resources/teaser.jpg)

[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis

Focal Frequency Loss - Official PyTorch Implementation This repository provides the official PyTorch implementation for the following paper: Focal Fre

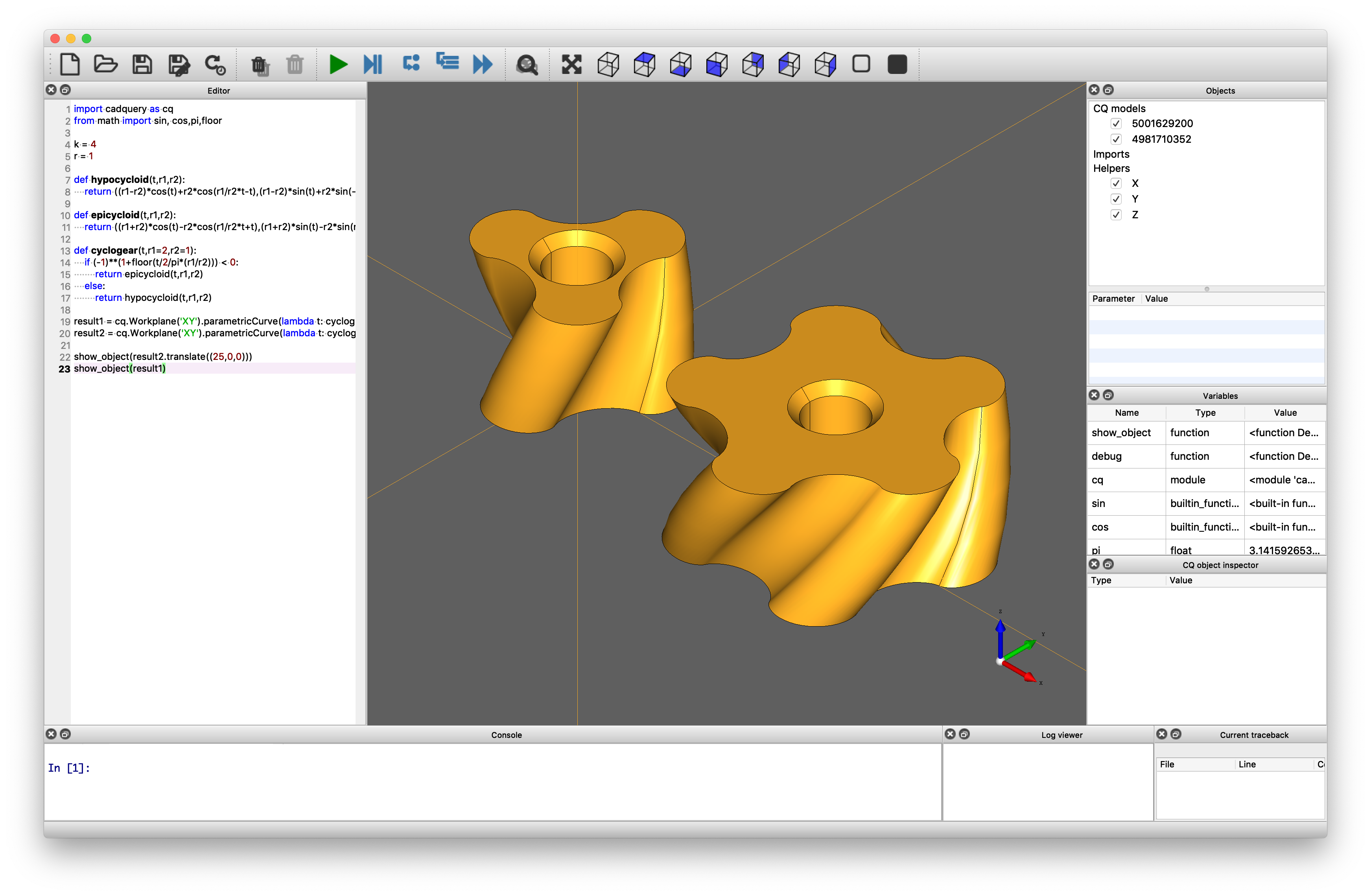

CadQuery is an intuitive, easy-to-use Python module for building parametric 3D CAD models.

A python parametric CAD scripting framework based on OCCT

ViSD4SA, a Vietnamese Span Detection for Aspect-based sentiment analysis dataset

UIT-ViSD4SA PACLIC 35 General Introduction This repository contains the data of the paper: Span Detection for Vietnamese Aspect-Based Sentiment Analys

SciBERT is a BERT model trained on scientific text.

SciBERT is a BERT model trained on scientific text.

Documentation and issues for Pylance - Fast, feature-rich language support for Python

Documentation and issues for Pylance - Fast, feature-rich language support for Python

Multi-Task Pre-Training for Plug-and-Play Task-Oriented Dialogue System

Multi-Task Pre-Training for Plug-and-Play Task-Oriented Dialogue System Authors: Yixuan Su, Lei Shu, Elman Mansimov, Arshit Gupta, Deng Cai, Yi-An Lai

Indobenchmark are collections of Natural Language Understanding (IndoNLU) and Natural Language Generation (IndoNLG)

Indobenchmark Toolkit Indobenchmark are collections of Natural Language Understanding (IndoNLU) and Natural Language Generation (IndoNLG) resources fo

Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer

MT5_paddle Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer English | 简体中文 mT5: A Massively

A simple language and reference decompiler/compiler for MHW THK Files

Leviathon A simple language and reference decompiler/compiler for MHW THK Files. Project Goals The project aims to define a language specification for

Library of deep learning models and datasets designed to make deep learning more accessible and accelerate ML research.

Tensor2Tensor Tensor2Tensor, or T2T for short, is a library of deep learning models and datasets designed to make deep learning more accessible and ac

Petuhlang is a joke-like language, based on Python.

Petuhlang is a joke-like language, based on Python. It updates builtins to make a new syntax based on operators rewrite.

This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model inference.

PyTorch Infer Utils This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model infer

Hapi is a Python library for building Conceptual Distributed Model using HBV96 lumped model & Muskingum routing method

Current build status All platforms: Current release info Name Downloads Version Platforms Hapi - Hydrological library for Python Hapi is an open-sourc

The Multi-Mission Maximum Likelihood framework (3ML)

PyPi Conda The Multi-Mission Maximum Likelihood framework (3ML) A framework for multi-wavelength/multi-messenger analysis for astronomy/astrophysics.

Tangram makes it easy for programmers to train, deploy, and monitor machine learning models.

Tangram Website | Discord Tangram makes it easy for programmers to train, deploy, and monitor machine learning models. Run tangram train to train a mo

A practical and feature-rich paraphrasing framework to augment human intents in text form to build robust NLU models for conversational engines. Created by Prithiviraj Damodaran. Open to pull requests and other forms of collaboration.

Parrot Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more t

PyPI package for scaffolding out code for decision tree models that can learn to find relationships between the attributes of an object.

Decision Tree Writer This package allows you to train a binary classification decision tree on a list of labeled dictionaries or class instances, and

Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE)

OG-SPACE Introduction Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE) is a computational framewo

Analysis of rationale selection in neural rationale models

Neural Rationale Interpretability Analysis We analyze the neural rationale models proposed by Lei et al. (2016) and Bastings et al. (2019), as impleme

Supervision Exists Everywhere: A Data Efficient Contrastive Language-Image Pre-training Paradigm

DeCLIP Supervision Exists Everywhere: A Data Efficient Contrastive Language-Image Pre-training Paradigm. Our paper is available in arxiv Updates ** Ou

LibreLingo🐢 🌎 📚 a community-owned language-learning platform

LibreLingo's mission is to create a modern language-learning platform that is owned by the community of its users. All software is licensed under AGPLv3, which guarantees the freedom to run, study, share, and modify the software. Course authors are encouraged to release their courses with free licenses.

BLEURT is a metric for Natural Language Generation based on transfer learning.

BLEURT: a Transfer Learning-Based Metric for Natural Language Generation BLEURT is an evaluation metric for Natural Language Generation. It takes a pa

Self-Supervised Speech Pre-training and Representation Learning Toolkit.

What's New Sep 2021: We host a challenge in AAAI workshop: The 2nd Self-supervised Learning for Audio and Speech Processing! See SUPERB official site

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees"

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees" Installa

Benchmarking the robustness of Spatial-Temporal Models

Benchmarking the robustness of Spatial-Temporal Models This repositery contains the code for the paper Benchmarking the Robustness of Spatial-Temporal

Codebase of deep learning models for inferring stability of mRNA molecules

Kaggle OpenVaccine Models Codebase of deep learning models for inferring stability of mRNA molecules, corresponding to the Kaggle Open Vaccine Challen

A high-level Python library for Quantum Natural Language Processing

lambeq About lambeq is a toolkit for quantum natural language processing (QNLP). Documentation: https://cqcl.github.io/lambeq/ Getting started Prerequ

The self-supervised goal reaching benchmark introduced in Discovering and Achieving Goals via World Models

Lexa-Benchmark Codebase for the self-supervised goal reaching benchmark introduced in 'Discovering and Achieving Goals via World Models'. Setup Create

Code & Data for the Paper "Time Masking for Temporal Language Models", WSDM 2022

Time Masking for Temporal Language Models This repository provides a reference implementation of the paper: Time Masking for Temporal Language Models

Using Selenium with Python to Web Scrap Popular Youtube Tech Channels.

Web Scrapping Popular Youtube Tech Channels with Selenium Data Mining, Data Wrangling, and Exploratory Data Analysis About the Data Web scrapi

African language Speech Recognition - Speech-to-Text

Swahili-Speech-To-Text Table of Contents Swahili-Speech-To-Text Overview Scenario Approach Project Structure data: models: notebooks: scripts tests: l

Neural text generators like the GPT models promise a general-purpose means of manipulating texts.

Boolean Prompting for Neural Text Generators Neural text generators like the GPT models promise a general-purpose means of manipulating texts. These m

Trained on Simulated Data, Tested in the Real World

Trained on Simulated Data, Tested in the Real World

ImageNet-CoG is a benchmark for concept generalization. It provides a full evaluation framework for pre-trained visual representations which measure how well they generalize to unseen concepts.

The ImageNet-CoG Benchmark Project Website Paper (arXiv) Code repository for the ImageNet-CoG Benchmark introduced in the paper "Concept Generalizatio

Bayesian regularization for functional graphical models.

BayesFGM Paper: Jiajing Niu, Andrew Brown. Bayesian regularization for functional graphical models. Requirements R version 3.6.3 and up Python 3.6 and

TCube generates rich and fluent narratives that describes the characteristics, trends, and anomalies of any time-series data (domain-agnostic) using the transfer learning capabilities of PLMs.

TCube: Domain-Agnostic Neural Time series Narration This repository contains the code for the paper: "TCube: Domain-Agnostic Neural Time series Narrat

New approach to benchmark VQA models

VQA Benchmarking This repository contains the web application & the python interface to evaluate VQA models. Documentation Please see the documentatio