1525 Repositories

Python transformer-tensorflow Libraries

The Official TensorFlow Implementation for SPatchGAN (ICCV2021)

SPatchGAN: Official TensorFlow Implementation Paper "SPatchGAN: A Statistical Feature Based Discriminator for Unsupervised Image-to-Image Translation"

Official implementation for paper: A Latent Transformer for Disentangled Face Editing in Images and Videos.

A Latent Transformer for Disentangled Face Editing in Images and Videos Official implementation for paper: A Latent Transformer for Disentangled Face

Officially unofficial re-implementation of paper: Paint Transformer: Feed Forward Neural Painting with Stroke Prediction, ICCV 2021.

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction [Paper] [Official Paddle Implementation] [Huggingface Gradio Demo] [Unofficial

Styled Handwritten Text Generation with Transformers (ICCV 21)

⚡ Handwriting Transformers [PDF] Ankan Kumar Bhunia, Salman Khan, Hisham Cholakkal, Rao Muhammad Anwer, Fahad Shahbaz Khan & Mubarak Shah Abstract: We

Photonix Photo Manager - a photo management application based on web technologies

A modern, web-based photo management server. Run it on your home server and it will let you find the right photo from your collection on any device. Smart filtering is made possible by object recognition, face recognition, location awareness, color analysis and other ML algorithms.

Fast Style Transfer in TensorFlow

Fast Style Transfer in TensorFlow Add styles from famous paintings to any photo in a fraction of a second! You can even style videos! It takes 100ms o

Python script for performing depth completion from sparse depth and rgb images using the msg_chn_wacv20. model in Tensorflow Lite.

TFLite-msg_chn_wacv20-depth-completion Python script for performing depth completion from sparse depth and rgb images using the msg_chn_wacv20. model

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

A collection of neat and practical data science and machine learning projects

Data Science A collection of neat and practical data science and machine learning projects Explore the docs » Report Bug · Request Feature Table of Co

Acoustic mosquito detection code with Bayesian Neural Networks

HumBugDB Acoustic mosquito detection with Bayesian Neural Networks. Extract audio or features from our large-scale dataset on Zenodo. This repository

This library contains a Tensorflow implementation of the paper Stability Analysis of Unfolded WMMSE for Power Allocation

UWMMSE-stability Tensorflow implementation of Stability Analysis of UWMMSE Overview This library contains a Tensorflow implementation of the paper Sta

Source code for GNN-LSPE (Graph Neural Networks with Learnable Structural and Positional Representations)

Graph Neural Networks with Learnable Structural and Positional Representations Source code for the paper "Graph Neural Networks with Learnable Structu

Source code for Transformer-based Multi-task Learning for Disaster Tweet Categorisation (UCD's participation in TREC-IS 2020A, 2020B and 2021A).

Source code for "UCD participation in TREC-IS 2020A, 2020B and 2021A". *** update at: 2021/05/25 This repo so far relates to the following work: Trans

Official implementation for paper: A Latent Transformer for Disentangled Face Editing in Images and Videos.

A Latent Transformer for Disentangled Face Editing in Images and Videos Official implementation for paper: A Latent Transformer for Disentangled Face

Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer

MT5_paddle Use PaddlePaddle to reproduce the paper:mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer English | 简体中文 mT5: A Massively

Pytorch reimplementation of the Vision Transformer (An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale)

Vision Transformer Pytorch reimplementation of Google's repository for the ViT model that was released with the paper An Image is Worth 16x16 Words: T

Implementation of ConvMixer in TensorFlow and Keras

ConvMixer ConvMixer, an extremely simple model that is similar in spirit to the ViT and the even-more-basic MLP-Mixer in that it operates directly on

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision Training Efficiency We show the training efficiency of our DSLP model b

CONditionals for Ordinal Regression and classification in tensorflow

Condor Ordinal regression in Tensorflow Keras Tensorflow Keras implementation of CONDOR Ordinal Regression (aka ordinal classification) by Garrett Jen

A curated list of awesome resources combining Transformers with Neural Architecture Search

A curated list of awesome resources combining Transformers with Neural Architecture Search

TensorFlow implementation of Adaptive Information Transfer Multi-task (AITM) framework. Code for the paper submitted to KDD21: Modeling the Sequential Dependence among Audience Multi-step Conversions with Multi-task Learning for Customer Acquisition.

AITM TensorFlow implementation of Adaptive Information Transfer Multi-task (AITM) framework. Code for the paper accepted by KDD21: Modeling the Sequen

Official repo for BMVC2021 paper ASFormer: Transformer for Action Segmentation

ASFormer: Transformer for Action Segmentation This repo provides training & inference code for BMVC 2021 paper: ASFormer: Transformer for Action Segme

SCAAML is a deep learning framwork dedicated to side-channel attacks run on top of TensorFlow 2.x.

SCAAML (Side Channel Attacks Assisted with Machine Learning) is a deep learning framwork dedicated to side-channel attacks. It is written in python and run on top of TensorFlow 2.x.

Rethinking Transformer-based Set Prediction for Object Detection

Rethinking Transformer-based Set Prediction for Object Detection Here are the code for the ICCV paper. The code is adapted from Detectron2 and AdelaiD

Simple and flexible ML workflow engine.

This is a simple and flexible ML workflow engine. It helps to orchestrate events across a set of microservices and create executable flow to handle requests. Engine is designed to be configurable with any microservices. Enjoy!

A collection of papers about Transformer in the field of medical image analysis.

A collection of papers about Transformer in the field of medical image analysis.

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision Training Efficiency We show the training efficiency of our DSLP model b

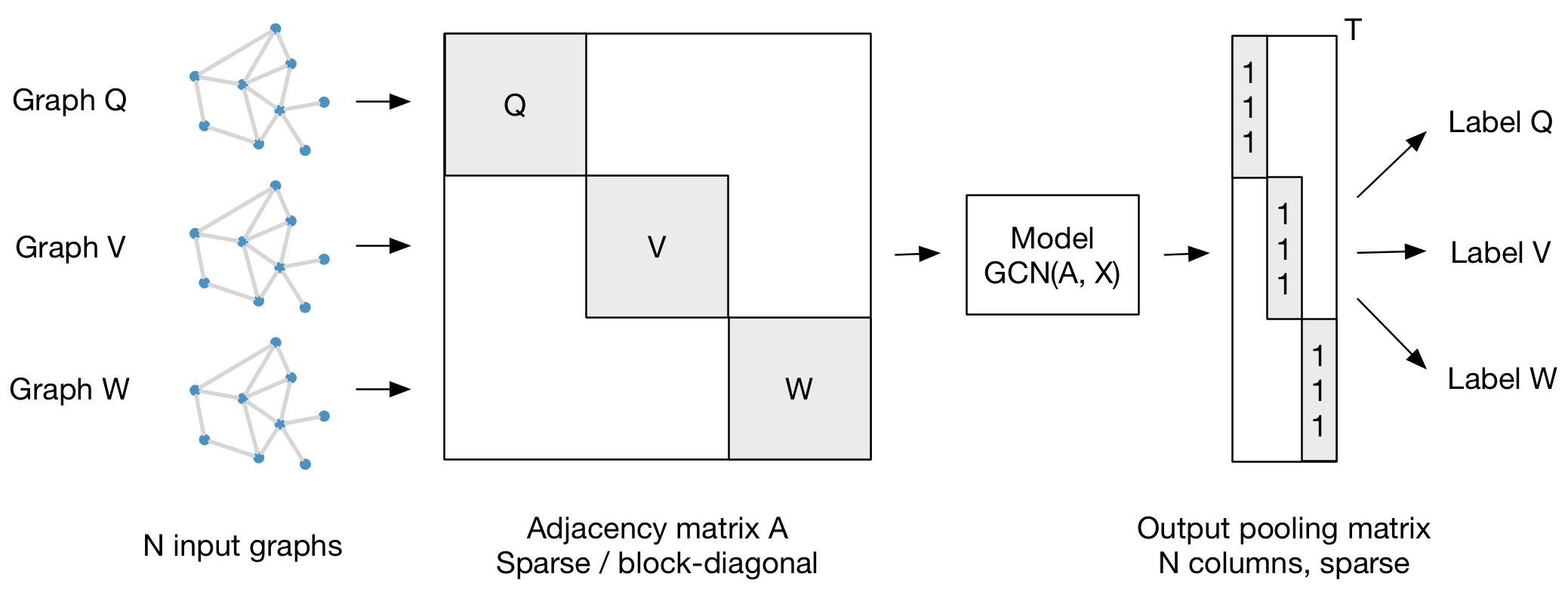

Implementation of Graph Convolutional Networks in TensorFlow

Graph Convolutional Networks This is a TensorFlow implementation of Graph Convolutional Networks for the task of (semi-supervised) classification of n

Graph Attention Networks

GAT Graph Attention Networks (Veličković et al., ICLR 2018): https://arxiv.org/abs/1710.10903 GAT layer t-SNE + Attention coefficients on Cora Overvie

Tensorflow Repo for "DeepGCNs: Can GCNs Go as Deep as CNNs?"

DeepGCNs: Can GCNs Go as Deep as CNNs? In this work, we present new ways to successfully train very deep GCNs. We borrow concepts from CNNs, mainly re

Face Mask Detection on Image and Video using tensorflow and keras

Face-Mask-Detection Face Mask Detection on Image and Video using tensorflow and keras Train Neural Network on face-mask dataset using tensorflow and k

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation We propose a novel approach to translate unpaired contrast computed

FG-transformer-TTS Fine-grained style control in transformer-based text-to-speech synthesis

LST-TTS Official implementation for the paper Fine-grained style control in transformer-based text-to-speech synthesis. Submitted to ICASSP 2022. Audi

Code for the preprint "Well-classified Examples are Underestimated in Classification with Deep Neural Networks"

This is a repository for the paper of "Well-classified Examples are Underestimated in Classification with Deep Neural Networks" The implementation and

Quantum-enhanced transformer neural network

Example of a Quantum-enhanced transformer neural network Get the code: git clone https://github.com/rdisipio/qtransformer.git cd qtransformer Create

African language Speech Recognition - Speech-to-Text

Swahili-Speech-To-Text Table of Contents Swahili-Speech-To-Text Overview Scenario Approach Project Structure data: models: notebooks: scripts tests: l

This is an official implementation for "Swin Transformer: Hierarchical Vision Transformer using Shifted Windows" on Semantic Segmentation.

Swin Transformer for Semantic Segmentation of satellite images This repo contains the supported code and configuration files to reproduce semantic seg

This package implements THOR: Transformer with Stochastic Experts.

THOR: Transformer with Stochastic Experts This PyTorch package implements Taming Sparsely Activated Transformer with Stochastic Experts. Installation

Jarvis Project is a basic virtual assistant that uses TensorFlow for learning.

Jarvis_proyect Jarvis Project is a basic virtual assistant that uses TensorFlow for learning. Latest version 0.1 Features: Good morning protocol Tell

PyTorch implementation for paper StARformer: Transformer with State-Action-Reward Representations.

StARformer This repository contains the PyTorch implementation for our paper titled StARformer: Transformer with State-Action-Reward Representations.

Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation.

Unified-EPT Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation. Installation Linux, CUDA=10.0,

![[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer](https://github.com/AllenXiangX/SnowflakeNet/raw/main/pics/network.png)

[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer

This repository contains the source code for the paper SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer (ICCV 2021 Oral). The project page is here.

Pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering".

TRAnsformer Routing Networks (TRAR) This is an official implementation for ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visu

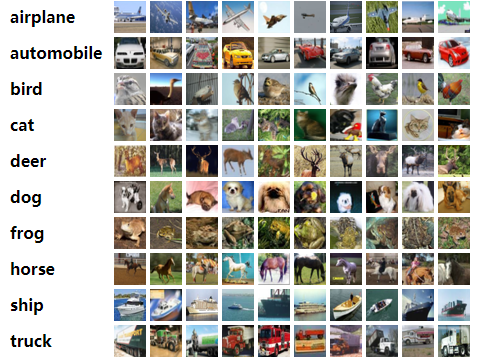

classification task on dataset-CIFAR10,by using Tensorflow/keras

CIFAR10-Tensorflow classification task on dataset-CIFAR10,by using Tensorflow/keras 在这一个库中,我使用Tensorflow与keras框架搭建了几个卷积神经网络模型,针对CIFAR10数据集进行了训练与测试。分别使

SinGlow: Generative Flow for SVS tasks in Tensorflow 2

SinGlow is a part of my Singing voice synthesis system. It can extract features of sound, particularly songs and musics. Then we can use these features (or perfect encoding) for feature migrating tasks. For example migrate features of real singers' song to those virtual singers' songs.

YuNetのPythonでのONNX、TensorFlow-Lite推論サンプル

YuNet-ONNX-TFLite-Sample YuNetのPythonでのONNX、TensorFlow-Lite推論サンプルです。 TensorFlow-LiteモデルはPINTO0309/PINTO_model_zoo/144_YuNetのものを使用しています。 Requirement Op

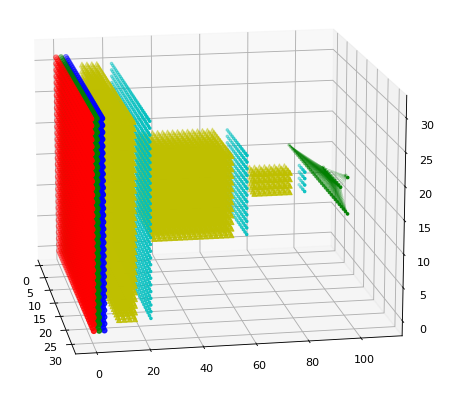

A ultra-lightweight 3D renderer of the Tensorflow/Keras neural network architectures

A ultra-lightweight 3D renderer of the Tensorflow/Keras neural network architectures

Unofficial PyTorch implementation of MobileViT based on paper "MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer".

MobileViT RegNet Unofficial PyTorch implementation of MobileViT based on paper MOBILEVIT: LIGHT-WEIGHT, GENERAL-PURPOSE, AND MOBILE-FRIENDLY VISION TR

Google's Meena transformer chatbot implementation

Here's my attempt at recreating Meena, a state of the art chatbot developed by Google Research and described in the paper Towards a Human-like Open-Domain Chatbot.

Mesh Graphormer is a new transformer-based method for human pose and mesh reconsruction from an input image

MeshGraphormer ✨ ✨ This is our research code of Mesh Graphormer. Mesh Graphormer is a new transformer-based method for human pose and mesh reconsructi

Dynamic Realtime Animation Control

Our project is targeted at making an application that dynamically detects the user’s expressions and gestures and projects it onto an animation software which then renders a 2D/3D animation realtime that gets broadcasted live.

3D-Transformer: Molecular Representation with Transformer in 3D Space

3D-Transformer: Molecular Representation with Transformer in 3D Space

TensorFlow implementation of "Variational Inference with Normalizing Flows"

[TensorFlow 2] Variational Inference with Normalizing Flows TensorFlow implementation of "Variational Inference with Normalizing Flows" [1] Concept Co

Automatically resolve RidderMaster based on TensorFlow & OpenCV

AutoRiddleMaster Automatically resolve RidderMaster based on TensorFlow & OpenCV 基于 TensorFlow 和 OpenCV 实现的全自动化解御迷士小马谜题 Demo How to use Deploy the ser

![[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax](https://github.com/scaomath/galerkin-transformer/raw/main/data/simple_ft.png)

[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax

[NeurIPS 2021] Galerkin Transformer: linear attention without softmax Summary A non-numerical analyst oriented explanation on Toward Data Science abou

Google and Stanford University released a new pre-trained model called ELECTRA

Google and Stanford University released a new pre-trained model called ELECTRA, which has a much compact model size and relatively competitive performance compared to BERT and its variants. For further accelerating the research of the Chinese pre-trained model, the Joint Laboratory of HIT and iFLYTEK Research (HFL) has released the Chinese ELECTRA models based on the official code of ELECTRA. ELECTRA-small could reach similar or even higher scores on several NLP tasks with only 1/10 parameters compared to BERT and its variants.

Improving 3D Object Detection with Channel-wise Transformer

"Improving 3D Object Detection with Channel-wise Transformer" Thanks for the OpenPCDet, this implementation of the CT3D is mainly based on the pcdet v

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

An official repository for Paper "Uformer: A General U-Shaped Transformer for Image Restoration".

Uformer: A General U-Shaped Transformer for Image Restoration Zhendong Wang, Xiaodong Cun, Jianmin Bao and Jianzhuang Liu Paper: https://arxiv.org/abs

A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You can find two approaches for achieving this in this repo.

multitask-learning-transformers A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

This YoloV5 based model is fit to detect people and different types of land vehicles, and displaying their density on a fitted map, according to their coordinates and detected labels.

This YoloV5 based model is fit to detect people and different types of land vehicles, and displaying their density on a fitted map, according to their

![A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]](https://user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png)

A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

Interactive deep learning book with multi-framework code, math, and discussions. Adopted at 200 universities.

D2L.ai: Interactive Deep Learning Book with Multi-Framework Code, Math, and Discussions Book website | STAT 157 Course at UC Berkeley | Latest version

Visualizer for neural network, deep learning, and machine learning models

Netron is a viewer for neural network, deep learning and machine learning models. Netron supports ONNX, TensorFlow Lite, Keras, Caffe, Darknet, ncnn,

VSR-Transformer - This paper proposes a new Transformer for video super-resolution (called VSR-Transformer).

VSR-Transformer By Jiezhang Cao, Yawei Li, Kai Zhang, Luc Van Gool This paper proposes a new Transformer for video super-resolution (called VSR-Transf

Code release for ICCV 2021 paper "Anticipative Video Transformer"

Anticipative Video Transformer Ranked first in the Action Anticipation task of the CVPR 2021 EPIC-Kitchens Challenge! (entry: AVT-FB-UT) [project page

A simple but complete full-attention transformer with a set of promising experimental features from various papers

x-transformers A concise but fully-featured transformer, complete with a set of promising experimental features from various papers. Install $ pip ins

Unofficial Implementation of Zero-Shot Text-to-Speech for Text-Based Insertion in Audio Narration

Zero-Shot Text-to-Speech for Text-Based Insertion in Audio Narration This repo contains only model Implementation of Zero-Shot Text-to-Speech for Text

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

Swapping face using Face Mesh with TensorFlow Lite

Swapping face using Face Mesh with TensorFlow Lite

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

This repository contains an overview of important follow-up works based on the original Vision Transformer (ViT) by Google.

This repository contains an overview of important follow-up works based on the original Vision Transformer (ViT) by Google.

GluonMM is a library of transformer models for computer vision and multi-modality research

GluonMM is a library of transformer models for computer vision and multi-modality research. It contains reference implementations of widely adopted baseline models and also research work from Amazon Research.

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

A Tensorflow based library for Time Series Modelling with Gaussian Processes

Markovflow Documentation | Tutorials | API reference | Slack What does Markovflow do? Markovflow is a Python library for time-series analysis via prob

Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models.

Tevatron Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models. The toolkit has a modularized

High level network definitions with pre-trained weights in TensorFlow

TensorNets High level network definitions with pre-trained weights in TensorFlow (tested with 2.1.0 = TF = 1.4.0). Guiding principles Applicability.

Simple embedding based text classifier inspired by fastText, implemented in tensorflow

FastText in Tensorflow This project is based on the ideas in Facebook's FastText but implemented in Tensorflow. However, it is not an exact replica of

Neural machine translation between the writings of Shakespeare and modern English using TensorFlow

Shakespeare translations using TensorFlow This is an example of using the new Google's TensorFlow library on monolingual translation going from modern

TensorFlowOnSpark brings TensorFlow programs to Apache Spark clusters.

TensorFlowOnSpark TensorFlowOnSpark brings scalable deep learning to Apache Hadoop and Apache Spark clusters. By combining salient features from the T

Tensorforce: a TensorFlow library for applied reinforcement learning

Tensorforce: a TensorFlow library for applied reinforcement learning Introduction Tensorforce is an open-source deep reinforcement learning framework,

Deep Learning and Reinforcement Learning Library for Scientists and Engineers 🔥

TensorLayer is a novel TensorFlow-based deep learning and reinforcement learning library designed for researchers and engineers. It provides an extens

Tensorflow implementation of Human-Level Control through Deep Reinforcement Learning

Human-Level Control through Deep Reinforcement Learning Tensorflow implementation of Human-Level Control through Deep Reinforcement Learning. This imp

Annotated notes and summaries of the TensorFlow white paper, along with SVG figures and links to documentation

TensorFlow White Paper Notes Features Notes broken down section by section, as well as subsection by subsection Relevant links to documentation, resou

Pretty Tensor - Fluent Neural Networks in TensorFlow

Pretty Tensor provides a high level builder API for TensorFlow. It provides thin wrappers on Tensors so that you can easily build multi-layer neural networks.

A best practice for tensorflow project template architecture.

A best practice for tensorflow project template architecture.

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT This repository is the official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification. ArXiv If

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

Official implement of Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer

Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer This repository contains the PyTorch code for Evo-ViT. This work proposes a slow-fas

Learning based AI for playing multi-round Koi-Koi hanafuda card games. Have fun.

Koi-Koi AI Learning based AI for playing multi-round Koi-Koi hanafuda card games. Platform Python PyTorch PySimpleGUI (for the interface playing vs AI

Python Tensorflow 2 scripts for detecting objects of any class in an image without knowing their label.

Tensorflow-Mobile-Generic-Object-Localizer Python Tensorflow 2 scripts for detecting objects of any class in an image without knowing their label. Ori

Natural Language Processing with transformers

we want to create a repo to illustrate usage of transformers in chinese

A collection of SOTA Image Classification Models in PyTorch

A collection of SOTA Image Classification Models in PyTorch