1620 Repositories

Python transformers-models Libraries

yolox_backbone is a deep-learning library and is a collection of YOLOX Backbone models.

YOLOX-Backbone yolox-backbone is a deep-learning library and is a collection of YOLOX backbone models. Install pip install yolox-backbone Load a Pret

YOLOv5 🚀 is a family of object detection architectures and models pretrained on the COCO dataset

YOLOv5 🚀 is a family of object detection architectures and models pretrained on the COCO dataset, and represents Ultralytics open-source research int

Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT)

CIRPLANT This repository contains the code and pre-trained models for Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT) For d

Library for implementing reservoir computing models (echo state networks) for multivariate time series classification and clustering.

Framework overview This library allows to quickly implement different architectures based on Reservoir Computing (the family of approaches popularized

Code and models for ICCV2021 paper "Robust Object Detection via Instance-Level Temporal Cycle Confusion".

Robust Object Detection via Instance-Level Temporal Cycle Confusion This repo contains the implementation of the ICCV 2021 paper, Robust Object Detect

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

Refactoring dalle-pytorch and taming-transformers for TPU VM

Text-to-Image Translation (DALL-E) for TPU in Pytorch Refactoring Taming Transformers and DALLE-pytorch for TPU VM with Pytorch Lightning Requirements

Ongoing research training transformer language models at scale, including: BERT & GPT-2

What is this fork of Megatron-LM and Megatron-DeepSpeed This is a detached fork of https://github.com/microsoft/Megatron-DeepSpeed, which in itself is

A DNN inference latency prediction toolkit for accurately modeling and predicting the latency on diverse edge devices.

Note: This is an alpha (preview) version which is still under refining. nn-Meter is a novel and efficient system to accurately predict the inference l

This is the official implementation for "Do Transformers Really Perform Bad for Graph Representation?".

Graphormer By Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng*, Guolin Ke, Di He*, Yanming Shen and Tie-Yan Liu. This repo is the official impl

This repository contains PyTorch models for SpecTr (Spectral Transformer).

SpecTr: Spectral Transformer for Hyperspectral Pathology Image Segmentation This repository contains PyTorch models for SpecTr (Spectral Transformer).

Source code for "Progressive Transformers for End-to-End Sign Language Production" (ECCV 2020)

Progressive Transformers for End-to-End Sign Language Production Source code for "Progressive Transformers for End-to-End Sign Language Production" (B

Sign Language Translation with Transformers (COLING'2020, ECCV'20 SLRTP Workshop)

transformer-slt This repository gathers data and code supporting the experiments in the paper Better Sign Language Translation with STMC-Transformer.

Sign Language Transformers (CVPR'20)

Sign Language Transformers (CVPR'20) This repo contains the training and evaluation code for the paper Sign Language Transformers: Sign Language Trans

This is an official implementation for "Self-Supervised Learning with Swin Transformers".

Self-Supervised Learning with Vision Transformers By Zhenda Xie*, Yutong Lin*, Zhuliang Yao, Zheng Zhang, Qi Dai, Yue Cao and Han Hu This repo is the

VisualGPT: Data-efficient Adaptation of Pretrained Language Models for Image Captioning

VisualGPT Our Paper VisualGPT: Data-efficient Adaptation of Pretrained Language Models for Image Captioning Main Architecture of Our VisualGPT Downloa

Text-to-Image generation

Generate vivid Images for Any (Chinese) text CogView is a pretrained (4B-param) transformer for text-to-image generation in general domain. Read our p

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

nn-Meter is a novel and efficient system to accurately predict the inference latency of DNN models on diverse edge devices

A DNN inference latency prediction toolkit for accurately modeling and predicting the latency on diverse edge devices.

PyTorch Lightning Optical Flow models, scripts, and pretrained weights.

PyTorch Lightning Optical Flow models, scripts, and pretrained weights.

Data from "HateCheck: Functional Tests for Hate Speech Detection Models" (Röttger et al., ACL 2021)

In this repo, you can find the data from our ACL 2021 paper "HateCheck: Functional Tests for Hate Speech Detection Models". "test_suite_cases.csv" con

A tutorial showing how to train, convert, and run TensorFlow Lite object detection models on Android devices, the Raspberry Pi, and more!

A tutorial showing how to train, convert, and run TensorFlow Lite object detection models on Android devices, the Raspberry Pi, and more!

Code for "Finetuning Pretrained Transformers into Variational Autoencoders"

transformers-into-vaes Code for Finetuning Pretrained Transformers into Variational Autoencoders (our submission to NLP Insights Workshop 2021). Gathe

Implementation of "RaScaNet: Learning Tiny Models by Raster-Scanning Image" from CVPR 2021.

RaScaNet: Learning Tiny Models by Raster-Scanning Images Deploying deep convolutional neural networks on ultra-low power systems is challenging, becau

Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized

VQGAN-CLIP-Docker About Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized This is a stripped and minimal dependency repository for running loca

Machine learning models from Singapore's NLP research community

SG-NLP Machine learning models from Singapore's natural language processing (NLP) research community. sgnlp is a Python package that allows you to eas

Official implementation of the paper Visual Parser: Representing Part-whole Hierarchies with Transformers

Visual Parser (ViP) This is the official implementation of the paper Visual Parser: Representing Part-whole Hierarchies with Transformers. Key Feature

Scenic: A Jax Library for Computer Vision and Beyond

Scenic Scenic is a codebase with a focus on research around attention-based models for computer vision. Scenic has been successfully used to develop c

[ICCV, 2021] Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks

Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks This is an official PyTorch code repository of the paper "Cloud Transformers:

code for our ICCV 2021 paper "DeepCAD: A Deep Generative Network for Computer-Aided Design Models"

DeepCAD This repository provides source code for our paper: DeepCAD: A Deep Generative Network for Computer-Aided Design Models Rundi Wu, Chang Xiao,

Study of human inductive biases in CNNs and Transformers.

Are Convolutional Neural Networks or Transformers more like human vision? This repository contains the code and fine-tuned models of popular Convoluti

Toward Spatially Unbiased Generative Models (ICCV 2021)

Toward Spatially Unbiased Generative Models Implementation of Toward Spatially Unbiased Generative Models (ICCV 2021) Overview Recent image generation

Official implementation of NPMs: Neural Parametric Models for 3D Deformable Shapes - ICCV 2021

NPMs: Neural Parametric Models Project Page | Paper | ArXiv | Video NPMs: Neural Parametric Models for 3D Deformable Shapes Pablo Palafox, Aljaz Bozic

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

Code Repo for the ACL21 paper "Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning"

Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning This is the Github repository of our paper, "Common S

Punctuation Restoration using Transformer Models for High-and Low-Resource Languages

Punctuation Restoration using Transformer Models This repository contins official implementation of the paper Punctuation Restoration using Transforme

Open-World Entity Segmentation

Open-World Entity Segmentation Project Website Lu Qi*, Jason Kuen*, Yi Wang, Jiuxiang Gu, Hengshuang Zhao, Zhe Lin, Philip Torr, Jiaya Jia This projec

Implementation of Multistream Transformers in Pytorch

Multistream Transformers Implementation of Multistream Transformers in Pytorch. This repository deviates slightly from the paper, where instead of usi

A project for developing transformer-based models for clinical relation extraction

Clinical Relation Extration with Transformers Aim This package is developed for researchers easily to use state-of-the-art transformers models for ext

Dense Prediction Transformers

Vision Transformers for Dense Prediction This repository contains code and models for our paper: Vision Transformers for Dense Prediction René Ranftl,

An official implementation of the paper Exploring Sequence Feature Alignment for Domain Adaptive Detection Transformers

Sequence Feature Alignment (SFA) By Wen Wang, Yang Cao, Jing Zhang, Fengxiang He, Zheng-jun Zha, Yonggang Wen, and Dacheng Tao This repository is an o

PyTorch implementations of neural network models for keyword spotting

Honk: CNNs for Keyword Spotting Honk is a PyTorch reimplementation of Google's TensorFlow convolutional neural networks for keyword spotting, which ac

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

Evidently helps analyze machine learning models during validation or production monitoring

Evidently helps analyze machine learning models during validation or production monitoring. The tool generates interactive visual reports and JSON profiles from pandas DataFrame or csv files. Currently 6 reports are available.

A collection of interactive machine-learning experiments: 🏋️models training + 🎨models demo

🤖 Interactive Machine Learning experiments: 🏋️models training + 🎨models demo

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

Active learning for text classification in Python

Active Learning allows you to efficiently label training data in a small-data scenario.

StarGAN - Official PyTorch Implementation

StarGAN - Official PyTorch Implementation ***** New: StarGAN v2 is available at https://github.com/clovaai/stargan-v2 ***** This repository provides t

Minimal PyTorch implementation of Generative Latent Optimization from the paper "Optimizing the Latent Space of Generative Networks"

Minimal PyTorch implementation of Generative Latent Optimization This is a reimplementation of the paper Piotr Bojanowski, Armand Joulin, David Lopez-

This's an implementation of deepmind Visual Interaction Networks paper using pytorch

Visual-Interaction-Networks An implementation of Deepmind visual interaction networks in Pytorch. Introduction For the purpose of understanding the ch

PyTorch implementation of convolutional neural networks-based text-to-speech synthesis models

Deepvoice3_pytorch PyTorch implementation of convolutional networks-based text-to-speech synthesis models: arXiv:1710.07654: Deep Voice 3: Scaling Tex

Implementation of Multistream Transformers in Pytorch

Multistream Transformers Implementation of Multistream Transformers in Pytorch. This repository deviates slightly from the paper, where instead of usi

![[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery](https://github.com/IceTTTb/PlaneTR3D/raw/master/misc/network.jpg)

[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery

PlaneTR: Structure-Guided Transformers for 3D Plane Recovery This is the official implementation of our ICCV 2021 paper News There maybe some bugs in

Set of utilities for exporting/controlling your robot in Blender

Blender Robotics Utils This repository contains utilities for exporting/controlling your robot in Blender Maintainers This repository is maintained by

PyTorch implementation of popular datasets and models in remote sensing

PyTorch Remote Sensing (torchrs) (WIP) PyTorch implementation of popular datasets and models in remote sensing tasks (Change Detection, Image Super Re

Learned Token Pruning for Transformers

LTP: Learned Token Pruning for Transformers Check our paper for more details. Installation We follow the same installation procedure as the original H

Implementation of PyTorch-based multi-task pre-trained models

mtdp Library containing implementation related to the research paper "Multi-task pre-training of deep neural networks for digital pathology" (Mormont

ICML 21 - Voice2Series: Reprogramming Acoustic Models for Time Series Classification

Voice2Series-Reprogramming Voice2Series: Reprogramming Acoustic Models for Time Series Classification International Conference on Machine Learning (IC

Relative Positional Encoding for Transformers with Linear Complexity

Stochastic Positional Encoding (SPE) This is the source code repository for the ICML 2021 paper Relative Positional Encoding for Transformers with Lin

Deduplicating Training Data Makes Language Models Better

Deduplicating Training Data Makes Language Models Better This repository contains code to deduplicate language model datasets as descrbed in the paper

[ICML 2021] Towards Understanding and Mitigating Social Biases in Language Models

Towards Understanding and Mitigating Social Biases in Language Models This repo contains code and data for evaluating and mitigating bias from generat

Reference implementation of code generation projects from Facebook AI Research. General toolkit to apply machine learning to code, from dataset creation to model training and evaluation. Comes with pretrained models.

This repository is a toolkit to do machine learning for programming languages. It implements tokenization, dataset preprocessing, model training and m

A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers.

ViTGAN: Training GANs with Vision Transformers A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers. Refer

Python package for machine learning for healthcare using a OMOP common data model

This library was developed in order to facilitate rapid prototyping in Python of predictive machine-learning models using longitudinal medical data from an OMOP CDM-standard database.

Generative Query Network (GQN) in PyTorch as described in "Neural Scene Representation and Rendering"

Update 2019/06/24: A model trained on 10% of the Shepard-Metzler dataset has been added, the following notebook explains the main features of this mod

Annotated, understandable, and visually interpretable PyTorch implementations of: VAE, BIRVAE, NSGAN, MMGAN, WGAN, WGANGP, LSGAN, DRAGAN, BEGAN, RaGAN, InfoGAN, fGAN, FisherGAN

Overview PyTorch 0.4.1 | Python 3.6.5 Annotated implementations with comparative introductions for minimax, non-saturating, wasserstein, wasserstein g

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

Implementation of experiments in the paper Clockwork Variational Autoencoders (project website) using JAX and Flax

Clockwork VAEs in JAX/Flax Implementation of experiments in the paper Clockwork Variational Autoencoders (project website) using JAX and Flax, ported

The Hailo Model Zoo includes pre-trained models and a full building and evaluation environment

Hailo Model Zoo The Hailo Model Zoo provides pre-trained models for high-performance deep learning applications. Using the Hailo Model Zoo you can mea

RoBERTa Marathi Language model trained from scratch during huggingface 🤗 x flax community week

RoBERTa base model for Marathi Language (मराठी भाषा) Pretrained model on Marathi language using a masked language modeling (MLM) objective. RoBERTa wa

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 Corpora 📃 Corpora Number of documents Size (GB) BNE 201,080,084 570GB Models 🤖 RoBERTa-base BNE: https://huggingface.co

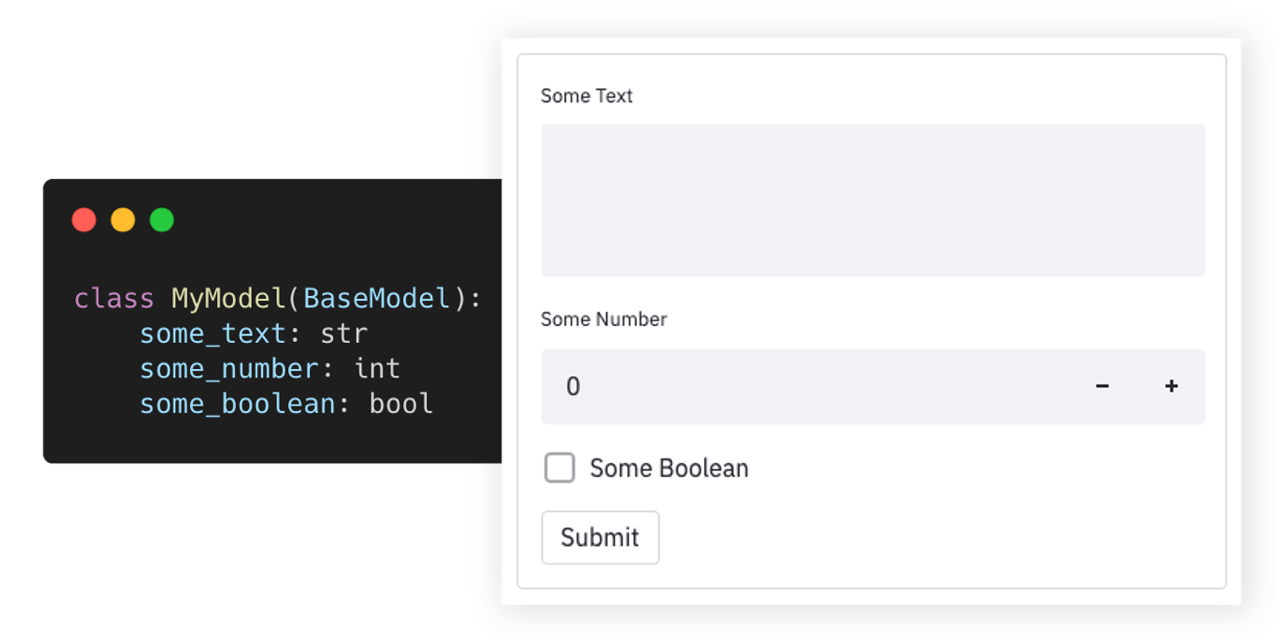

🪄 Auto-generate Streamlit UI from Pydantic Models and Dataclasses.

Streamlit Pydantic Auto-generate Streamlit UI elements from Pydantic models. Getting Started • Documentation • Support • Report a Bug • Contribution •

EsViT: Efficient self-supervised Vision Transformers

Efficient Self-Supervised Vision Transformers (EsViT) PyTorch implementation for EsViT, built with two techniques: A multi-stage Transformer architect

Pytorch code for ICRA'21 paper: "Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation"

Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation This repository is the pytorch implementation of our paper: Hierarchical Cr

StackRec: Efficient Training of Very Deep Sequential Recommender Models by Iterative Stacking

StackRec: Efficient Training of Very Deep Sequential Recommender Models by Iterative Stacking Datasets You can download datasets that have been pre-pr

Computationally Efficient Optimization of Plackett-Luce Ranking Models for Relevance and Fairness

Computationally Efficient Optimization of Plackett-Luce Ranking Models for Relevance and Fairness This repository contains the code used for the exper

Official repository for the CVPR 2021 paper "Learning Feature Aggregation for Deep 3D Morphable Models"

Deep3DMM Official repository for the CVPR 2021 paper Learning Feature Aggregation for Deep 3D Morphable Models. Requirements This code is tested on Py

Measuring and Improving Consistency in Pretrained Language Models

ParaRel 🤘 This repository contains the code and data for the paper: Measuring and Improving Consistency in Pretrained Language Models as well as the

Empirical Study of Transformers for Source Code & A Simple Approach for Handling Out-of-Vocabulary Identifiers in Deep Learning for Source Code

Transformers for variable misuse, function naming and code completion tasks The official PyTorch implementation of: Empirical Study of Transformers fo

source code for https://arxiv.org/abs/2005.11248 "Accelerating Antimicrobial Discovery with Controllable Deep Generative Models and Molecular Dynamics"

Accelerating Antimicrobial Discovery with Controllable Deep Generative Models and Molecular Dynamics This work will be published in Nature Biomedical

Towards Debiasing NLU Models from Unknown Biases

Towards Debiasing NLU Models from Unknown Biases Abstract: NLU models often exploit biased features to achieve high dataset-specific performance witho

Deep Neural Networks Improve Radiologists' Performance in Breast Cancer Screening

Deep Neural Networks Improve Radiologists' Performance in Breast Cancer Screening Introduction This is an implementation of the model used for breast

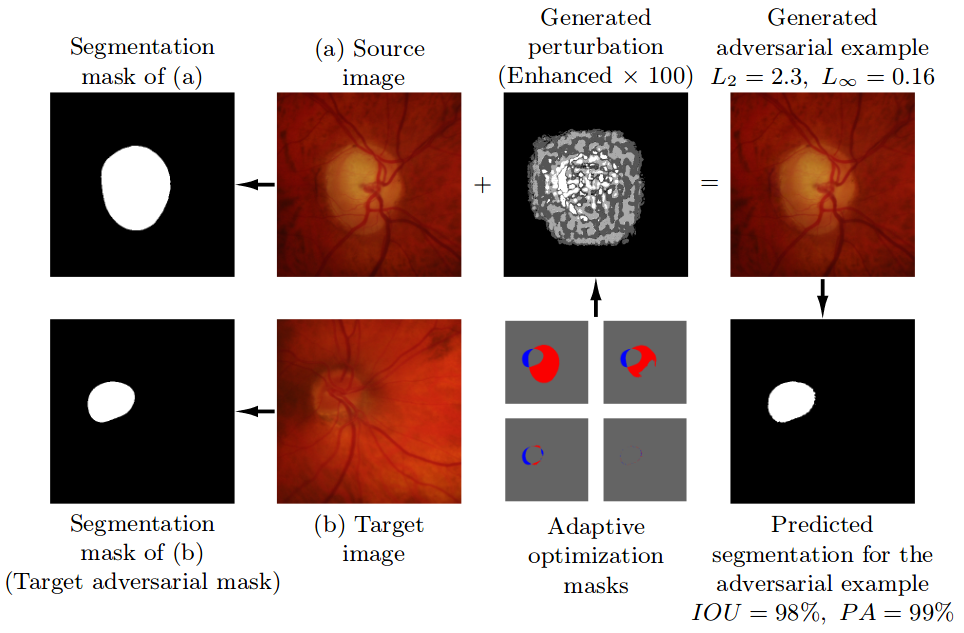

Pre-trained model, code, and materials from the paper "Impact of Adversarial Examples on Deep Learning Models for Biomedical Image Segmentation" (MICCAI 2019).

Adaptive Segmentation Mask Attack This repository contains the implementation of the Adaptive Segmentation Mask Attack (ASMA), a targeted adversarial

PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models

PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models Code accompanying CVPR'20 paper of the same title. Paper lin

Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow.

Generative Models Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow. Also present here are RBM and Helmholtz Machine. Note: Gen

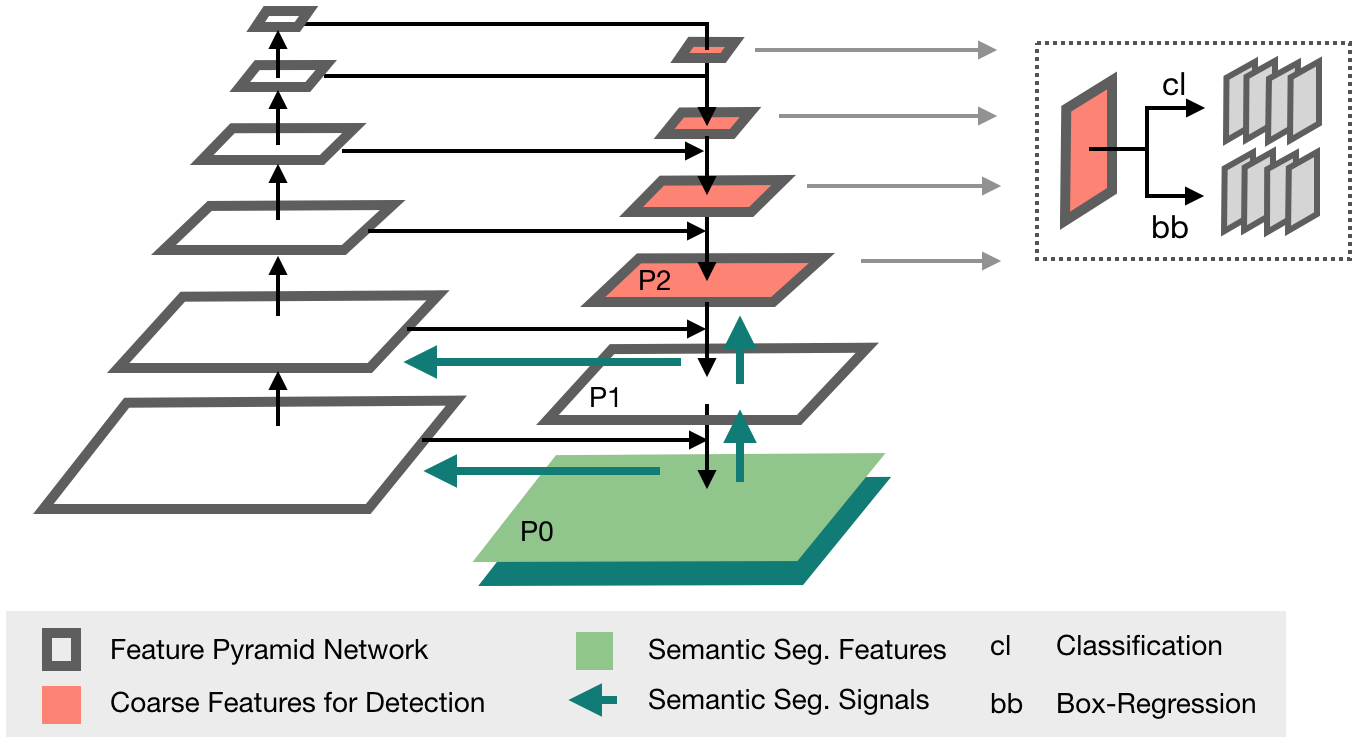

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

Serving PyTorch 1.0 Models as a Web Server in C++

Serving PyTorch Models in C++ This repository contains various examples to perform inference using PyTorch C++ API. Run git clone https://github.com/W

General Multi-label Image Classification with Transformers

General Multi-label Image Classification with Transformers Jack Lanchantin, Tianlu Wang, Vicente Ordóñez Román, Yanjun Qi Conference on Computer Visio

🤗 Push your spaCy pipelines to the Hugging Face Hub

spacy-huggingface-hub: Push your spaCy pipelines to the Hugging Face Hub This package provides a CLI command for uploading any trained spaCy pipeline

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

ML models implementation practice

Let's implement various ML algorithms with numpy/tf Vanilla Neural Network https://towardsdatascience.com/lets-code-a-neural-network-in-plain-numpy-ae

Denoising Diffusion Probabilistic Models

Denoising Diffusion Probabilistic Models This repo contains code for DDPM training. Based on Denoising Diffusion Probabilistic Models, Improved Denois

Code for the paper "Implicit Representations of Meaning in Neural Language Models"

Implicit Representations of Meaning in Neural Language Models Preliminaries Create and set up a conda environment as follows: conda create -n state-pr

This package contains deep learning models and related scripts for RoseTTAFold

RoseTTAFold This package contains deep learning models and related scripts to run RoseTTAFold This repository is the official implementation of RoseTT

Official code of paper "PGT: A Progressive Method for Training Models on Long Videos" on CVPR2021

PGT Code for paper PGT: A Progressive Method for Training Models on Long Videos. Install Run pip install -r requirements.txt. Run python setup.py buil

Source code for models described in the paper "AudioCLIP: Extending CLIP to Image, Text and Audio" (https://arxiv.org/abs/2106.13043)

AudioCLIP Extending CLIP to Image, Text and Audio This repository contains implementation of the models described in the paper arXiv:2106.13043. This

This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transformations at CVPR'21. According to some product reasons, we are not planning to release the training/testing codes and models. However, we will release the dataset and the scripts to prepare the dataset.

TransFill-Reference-Inpainting This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transf

Official repository for the paper "Going Beyond Linear Transformers with Recurrent Fast Weight Programmers"

Recurrent Fast Weight Programmers This is the official repository containing the code we used to produce the experimental results reported in the pape