1620 Repositories

Python transformers-models Libraries

NVIDIA Merlin is an open source library providing end-to-end GPU-accelerated recommender systems, from feature engineering and preprocessing to training deep learning models and running inference in production.

NVIDIA Merlin NVIDIA Merlin is an open source library designed to accelerate recommender systems on NVIDIA’s GPUs. It enables data scientists, machine

A framework to train language models to learn invariant representations.

Invariant Language Modeling Implementation of the training for invariant language models. Motivation Modern pretrained language models are critical co

PixelPyramids: Exact Inference Models from Lossless Image Pyramids (ICCV 2021)

PixelPyramids: Exact Inference Models from Lossless Image Pyramids This repository contains the PyTorch implementation of the paper PixelPyramids: Exa

Discovering and Achieving Goals via World Models

Discovering and Achieving Goals via World Models [Project Website] [Benchmark Code] [Video (2min)] [Oral Talk (13min)] [Paper] Russell Mendonca*1, Ole

A Library for Modelling Probabilistic Hierarchical Graphical Models in PyTorch

A Library for Modelling Probabilistic Hierarchical Graphical Models in PyTorch

MIT-Machine Learning with Python–From Linear Models to Deep Learning

MIT-Machine Learning with Python–From Linear Models to Deep Learning | One of the 5 courses in MIT MicroMasters in Statistics & Data Science Welcome t

![[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis](https://raw.githubusercontent.com/EndlessSora/focal-frequency-loss/master/resources/teaser.jpg)

[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis

Focal Frequency Loss - Official PyTorch Implementation This repository provides the official PyTorch implementation for the following paper: Focal Fre

High-Fidelity Pluralistic Image Completion with Transformers (ICCV 2021)

Image Completion Transformer (ICT) Project Page | Paper (ArXiv) | Pre-trained Models | Supplemental Material This repository is the official pytorch i

Styled Handwritten Text Generation with Transformers (ICCV 21)

⚡ Handwriting Transformers [PDF] Ankan Kumar Bhunia, Salman Khan, Hisham Cholakkal, Rao Muhammad Anwer, Fahad Shahbaz Khan & Mubarak Shah Abstract: We

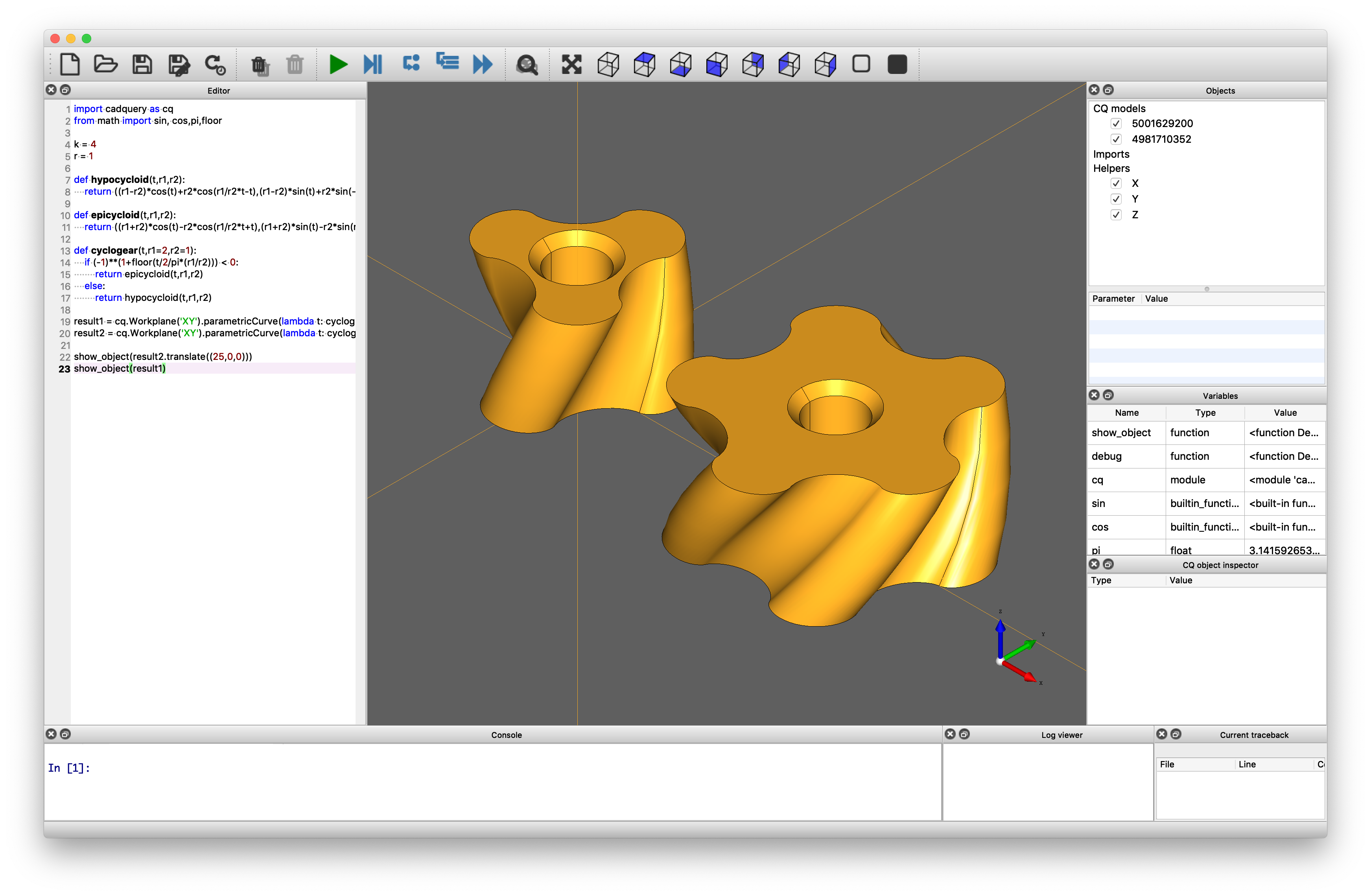

CadQuery is an intuitive, easy-to-use Python module for building parametric 3D CAD models.

A python parametric CAD scripting framework based on OCCT

The official repository for our paper "The Neural Data Router: Adaptive Control Flow in Transformers Improves Systematic Generalization".

Codebase for learning control flow in transformers The official repository for our paper "The Neural Data Router: Adaptive Control Flow in Transformer

Source code for CAST - Crisis Domain Adaptation Using Sequence-to-sequence Transformers (Accepted to ISCRAM 2021, CorePaper).

Source code for CAST: Crisis Domain Adaptation UsingSequence-to-sequenceTransformers (Paper, BibTeX, Accepted to ISCRAM 2021, CorePaper) Quick start D

Source code for GNN-LSPE (Graph Neural Networks with Learnable Structural and Positional Representations)

Graph Neural Networks with Learnable Structural and Positional Representations Source code for the paper "Graph Neural Networks with Learnable Structu

[ACM MM 2021] Diverse Image Inpainting with Bidirectional and Autoregressive Transformers

Diverse Image Inpainting with Bidirectional and Autoregressive Transformers Installation pip install -r requirements.txt Dataset Preparation Given the

Library of deep learning models and datasets designed to make deep learning more accessible and accelerate ML research.

Tensor2Tensor Tensor2Tensor, or T2T for short, is a library of deep learning models and datasets designed to make deep learning more accessible and ac

Pytorch reimplementation of the Vision Transformer (An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale)

Vision Transformer Pytorch reimplementation of Google's repository for the ViT model that was released with the paper An Image is Worth 16x16 Words: T

Public repo for the ICCV2021-CVAMD paper "Is it Time to Replace CNNs with Transformers for Medical Images?"

Is it Time to Replace CNNs with Transformers for Medical Images? Accepted at ICCV-2021: Workshop on Computer Vision for Automated Medical Diagnosis (C

Overview of architecture and implementation of TEDS-Net, as described in MICCAI 2021: "TEDS-Net: Enforcing Diffeomorphisms in Spatial Transformers to Guarantee TopologyPreservation in Segmentations"

TEDS-Net Overview of architecture and implementation of TEDS-Net, as described in MICCAI 2021: "TEDS-Net: Enforcing Diffeomorphisms in Spatial Transfo

This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model inference.

PyTorch Infer Utils This package proposes simplified exporting pytorch models to ONNX and TensorRT, and also gives some base interface for model infer

Converting CPT to bert form for use

cpt-encoder 将CPT转成bert形式使用 说明 刚刚刷到又出了一种模型:CPT,看论文显示,在很多中文任务上性能比mac bert还好,就迫不及待想把它用起来。 根据对源码的研究,发现该模型在做nlu建模时主要用的encoder部分,也就是bert,因此我将这部分权重转为bert权重类型

My implementation of transformers related papers for computer vision in pytorch

vision_transformers This is my personnal repo to implement new transofrmers based and other computer vision DL models I am currenlty working without a

A curated list of awesome resources combining Transformers with Neural Architecture Search

A curated list of awesome resources combining Transformers with Neural Architecture Search

Hapi is a Python library for building Conceptual Distributed Model using HBV96 lumped model & Muskingum routing method

Current build status All platforms: Current release info Name Downloads Version Platforms Hapi - Hydrological library for Python Hapi is an open-sourc

The Multi-Mission Maximum Likelihood framework (3ML)

PyPi Conda The Multi-Mission Maximum Likelihood framework (3ML) A framework for multi-wavelength/multi-messenger analysis for astronomy/astrophysics.

Tangram makes it easy for programmers to train, deploy, and monitor machine learning models.

Tangram Website | Discord Tangram makes it easy for programmers to train, deploy, and monitor machine learning models. Run tangram train to train a mo

A practical and feature-rich paraphrasing framework to augment human intents in text form to build robust NLU models for conversational engines. Created by Prithiviraj Damodaran. Open to pull requests and other forms of collaboration.

Parrot Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more t

PyPI package for scaffolding out code for decision tree models that can learn to find relationships between the attributes of an object.

Decision Tree Writer This package allows you to train a binary classification decision tree on a list of labeled dictionaries or class instances, and

Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE)

OG-SPACE Introduction Optimized Gillespie algorithm for simulating Stochastic sPAtial models of Cancer Evolution (OG-SPACE) is a computational framewo

This is a library for training and applying sparse fine-tunings with torch and transformers.

This is a library for training and applying sparse fine-tunings with torch and transformers. Please refer to our paper Composable Sparse Fine-Tuning f

Analysis of rationale selection in neural rationale models

Neural Rationale Interpretability Analysis We analyze the neural rationale models proposed by Lei et al. (2016) and Bastings et al. (2019), as impleme

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation

CyTran: Cycle-Consistent Transformers for Non-Contrast to Contrast CT Translation We propose a novel approach to translate unpaired contrast computed

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees"

Companion code for "Bayesian logistic regression for online recalibration and revision of risk prediction models with performance guarantees" Installa

Benchmarking the robustness of Spatial-Temporal Models

Benchmarking the robustness of Spatial-Temporal Models This repositery contains the code for the paper Benchmarking the Robustness of Spatial-Temporal

Codebase of deep learning models for inferring stability of mRNA molecules

Kaggle OpenVaccine Models Codebase of deep learning models for inferring stability of mRNA molecules, corresponding to the Kaggle Open Vaccine Challen

The self-supervised goal reaching benchmark introduced in Discovering and Achieving Goals via World Models

Lexa-Benchmark Codebase for the self-supervised goal reaching benchmark introduced in 'Discovering and Achieving Goals via World Models'. Setup Create

Implementation of ICCV21 paper: PnP-DETR: Towards Efficient Visual Analysis with Transformers

Implementation of ICCV 2021 paper: PnP-DETR: Towards Efficient Visual Analysis with Transformers arxiv This repository is based on detr Recently, DETR

Code & Data for the Paper "Time Masking for Temporal Language Models", WSDM 2022

Time Masking for Temporal Language Models This repository provides a reference implementation of the paper: Time Masking for Temporal Language Models

Neural text generators like the GPT models promise a general-purpose means of manipulating texts.

Boolean Prompting for Neural Text Generators Neural text generators like the GPT models promise a general-purpose means of manipulating texts. These m

Revitalizing CNN Attention via Transformers in Self-Supervised Visual Representation Learning

Revitalizing CNN Attention via Transformers in Self-Supervised Visual Representation Learning This repository is the official implementation of CARE.

Bayesian regularization for functional graphical models.

BayesFGM Paper: Jiajing Niu, Andrew Brown. Bayesian regularization for functional graphical models. Requirements R version 3.6.3 and up Python 3.6 and

New approach to benchmark VQA models

VQA Benchmarking This repository contains the web application & the python interface to evaluate VQA models. Documentation Please see the documentatio

Deep learning models for classification of 15 common weeds in the southern U.S. cotton production systems.

CottonWeeds Deep learning models for classification of 15 common weeds in the southern U.S. cotton production systems. requirements pytorch torchsumma

Repository sharing code and the model for the paper "Rescoring Sequence-to-Sequence Models for Text Line Recognition with CTC-Prefixes"

Rescoring Sequence-to-Sequence Models for Text Line Recognition with CTC-Prefixes Setup virtualenv -p python3 venv source venv/bin/activate pip instal

HyperCube: Implicit Field Representations of Voxelized 3D Models

HyperCube: Implicit Field Representations of Voxelized 3D Models Authors: Magdalena Proszewska, Marcin Mazur, Tomasz Trzcinski, Przemysław Spurek [Pap

Revitalizing CNN Attention via Transformers in Self-Supervised Visual Representation Learning

Revitalizing CNN Attention via Transformers in Self-Supervised Visual Representation Learning

This is the repository for paper NEEDLE: Towards Non-invertible Backdoor Attack to Deep Learning Models.

This is the repository for paper NEEDLE: Towards Non-invertible Backdoor Attack to Deep Learning Models.

Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation.

Unified-EPT Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation. Installation Linux, CUDA=10.0,

This is the official pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering" on VQA Task

🌈 ERASOR (RA-L'21 with ICRA Option) Official page of "ERASOR: Egocentric Ratio of Pseudo Occupancy-based Dynamic Object Removal for Static 3D Point C

![[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer](https://github.com/AllenXiangX/SnowflakeNet/raw/main/pics/network.png)

[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer

This repository contains the source code for the paper SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer (ICCV 2021 Oral). The project page is here.

Pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering".

TRAnsformer Routing Networks (TRAR) This is an official implementation for ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visu

Toolkit for building machine learning models that generalize to unseen domains and are robust to privacy and other attacks.

Toolkit for Building Robust ML models that generalize to unseen domains (RobustDG) Divyat Mahajan, Shruti Tople, Amit Sharma Privacy & Causal Learning

SurvTRACE: Transformers for Survival Analysis with Competing Events

⭐ SurvTRACE: Transformers for Survival Analysis with Competing Events This repo provides the implementation of SurvTRACE for survival analysis. It is

Pre-training BERT masked language models with custom vocabulary

Pre-training BERT Masked Language Models (MLM) This repository contains the method to pre-train a BERT model using custom vocabulary. It was used to p

Implementations of Machine Learning models, Regularizers, Optimizers and different Cost functions.

Linear Models Implementations of LinearRegression, LassoRegression and RidgeRegression with appropriate Regularizers and Optimizers. Linear Regression

cqMore is a CadQuery plugin based on CadQuery 2.1.

cqMore (under construction) cqMore is a CadQuery plugin based on CadQuery 2.1. Installation Please use conda to install CadQuery and its dependencies

A geometric deep learning pipeline for predicting protein interface contacts.

A geometric deep learning pipeline for predicting protein interface contacts.

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

A framework for evaluating Knowledge Graph Embedding Models in a fine-grained manner.

Train 🤗-transformers model with Poutyne.

poutyne-transformers Train 🤗 -transformers models with Poutyne. Installation pip install poutyne-transformers Example import torch from transformers

Text2Art is an AI art generator powered with VQGAN + CLIP and CLIPDrawer models

Text2Art is an AI art generator powered with VQGAN + CLIP and CLIPDrawer models. You can easily generate all kind of art from drawing, painting, sketch, or even a specific artist style just using a text input. You can also specify the dimensions of the image. The process can take 3-20 mins and the results will be emailed to you.

Instance-level Image Retrieval using Reranking Transformers

Instance-level Image Retrieval using Reranking Transformers Fuwen Tan, Jiangbo Yuan, Vicente Ordonez, ICCV 2021. Abstract Instance-level image retriev

DeepCAD: A Deep Generative Network for Computer-Aided Design Models

DeepCAD This repository provides source code for our paper: DeepCAD: A Deep Generative Network for Computer-Aided Design Models Rundi Wu, Chang Xiao,

Training vision models with full-batch gradient descent and regularization

Stochastic Training is Not Necessary for Generalization -- Training competitive vision models without stochasticity This repository implements trainin

Blender addon to generate better building models from satellite imagery.

Blender addon to generate better building models from satellite imagery.

Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

RNN-for-Joint-NLU Pytorch implementation of "Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling"

TorchXRayVision: A library of chest X-ray datasets and models.

torchxrayvision A library for chest X-ray datasets and models. Including pre-trained models. ( 🎬 promo video about the project) Motivation: While the

A pytorch-based deep learning framework for multi-modal 2D/3D medical image segmentation

A 3D multi-modal medical image segmentation library in PyTorch We strongly believe in open and reproducible deep learning research. Our goal is to imp

Explainer for black box models that predict molecule properties

Explaining why that molecule exmol is a package to explain black-box predictions of molecules. The package uses model agnostic explanations to help us

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks, which modifies the input text with a textual template and directly uses PLMs to conduct pre-trained tasks. This library provides a standard, flexible and extensible framework to deploy the prompt-learning pipeline. OpenPrompt supports loading PLMs directly from huggingface transformers. In the future, we will also support PLMs implemented by other libraries.

pcnaDeep integrates cutting-edge detection techniques with tracking and cell cycle resolving models.

pcnaDeep: a deep-learning based single-cell cycle profiler with PCNA signal Welcome! pcnaDeep integrates cutting-edge detection techniques with tracki

A Chinese to English Neural Model Translation Project

ZH-EN NMT Chinese to English Neural Machine Translation This project is inspired by Stanford's CS224N NMT Project Dataset used in this project: News C

Pytorch implementation of paper "Efficient Nearest Neighbor Language Models" (EMNLP 2021)

Pytorch implementation of paper "Efficient Nearest Neighbor Language Models" (EMNLP 2021)

Official Implementation of 'UPDeT: Universal Multi-agent Reinforcement Learning via Policy Decoupling with Transformers' ICLR 2021(spotlight)

UPDeT Official Implementation of UPDeT: Universal Multi-agent Reinforcement Learning via Policy Decoupling with Transformers (ICLR 2021 spotlight) The

A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You can find two approaches for achieving this in this repo.

multitask-learning-transformers A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You

Model factory is a ML training platform to help engineers to build ML models at scale

Model Factory Machine learning today is powering many businesses today, e.g., search engine, e-commerce, news or feed recommendation. Training high qu

Pre-trained BERT Models for Ancient and Medieval Greek, and associated code for LaTeCH 2021 paper titled - "A Pilot Study for BERT Language Modelling and Morphological Analysis for Ancient and Medieval Greek"

Ancient Greek BERT The first and only available Ancient Greek sub-word BERT model! State-of-the-art post fine-tuning on Part-of-Speech Tagging and Mor

A PyTorch implementation of EfficientNet and EfficientNetV2 (coming soon!)

EfficientNet PyTorch Quickstart Install with pip install efficientnet_pytorch and load a pretrained EfficientNet with: from efficientnet_pytorch impor

PyTorch code accompanying our paper on Maximum Entropy Generators for Energy-Based Models

Maximum Entropy Generators for Energy-Based Models All experiments have tensorboard visualizations for samples / density / train curves etc. To run th

![A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]](https://user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png)

A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

A scalable template for PyTorch projects, with examples in Image Segmentation, Object classification, GANs and Reinforcement Learning.

PyTorch Project Template is being sponsored by the following tool; please help to support us by taking a look and signing up to a free trial PyTorch P

Pytorch implementations of various Deep NLP models in cs-224n(Stanford Univ)

DeepNLP-models-Pytorch Pytorch implementations of various Deep NLP models in cs-224n(Stanford Univ: NLP with Deep Learning) This is not for Pytorch be

A collection of various deep learning architectures, models, and tips

Deep Learning Models A collection of various deep learning architectures, models, and tips for TensorFlow and PyTorch in Jupyter Notebooks. Traditiona

Visualizer for neural network, deep learning, and machine learning models

Netron is a viewer for neural network, deep learning and machine learning models. Netron supports ONNX, TensorFlow Lite, Keras, Caffe, Darknet, ncnn,

Making decision trees competitive with neural networks on CIFAR10, CIFAR100, TinyImagenet200, Imagenet

Neural-Backed Decision Trees · Site · Paper · Blog · Video Alvin Wan, *Lisa Dunlap, *Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah

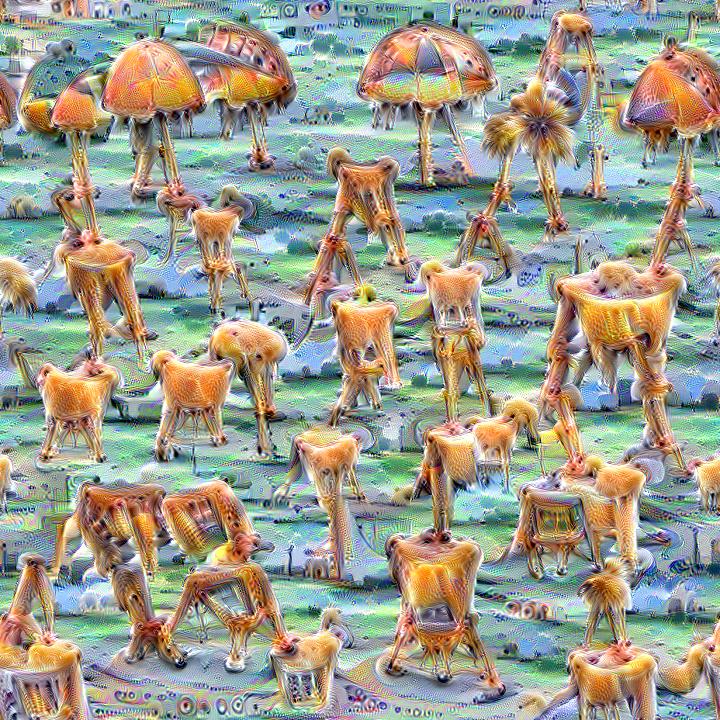

Quickly and easily create / train a custom DeepDream model

Dream-Creator This project aims to simplify the process of creating a custom DeepDream model by using pretrained GoogleNet models and custom image dat

Many Class Activation Map methods implemented in Pytorch for CNNs and Vision Transformers. Including Grad-CAM, Grad-CAM++, Score-CAM, Ablation-CAM and XGrad-CAM

Class Activation Map methods implemented in Pytorch pip install grad-cam ⭐ Comprehensive collection of Pixel Attribution methods for Computer Vision.

Implementation of the Remixer Block from the Remixer paper, in Pytorch

Remixer - Pytorch Implementation of the Remixer Block from the Remixer paper, in Pytorch. It claims that substituting the feedforwards in transformers

A simple but complete full-attention transformer with a set of promising experimental features from various papers

x-transformers A concise but fully-featured transformer, complete with a set of promising experimental features from various papers. Install $ pip ins

Code and data to accompany the camera-ready version of "Cross-Attention is All You Need: Adapting Pretrained Transformers for Machine Translation" in EMNLP 2021

Code and data to accompany the camera-ready version of "Cross-Attention is All You Need: Adapting Pretrained Transformers for Machine Translation" in EMNLP 2021

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

A Tools that help Data Scientists and ML engineers train and deploy ML models.

Domino Research This repo contains projects under active development by the Domino R&D team. We build tools that help Data Scientists and ML engineers

fastgradio is a python library to quickly build and share gradio interfaces of your trained fastai models.

fastgradio is a python library to quickly build and share gradio interfaces of your trained fastai models.

GluonMM is a library of transformer models for computer vision and multi-modality research

GluonMM is a library of transformer models for computer vision and multi-modality research. It contains reference implementations of widely adopted baseline models and also research work from Amazon Research.

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming. Outperforming `GPT-3` on SuperGLUE Few-Shot text classification.

TruthfulQA: Measuring How Models Imitate Human Falsehoods

TruthfulQA: Measuring How Models Imitate Human Falsehoods

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo