1179 Repositories

Python urdf-models Libraries

A collection of resources and papers on Diffusion Models, a darkhorse in the field of Generative Models

This repository contains a collection of resources and papers on Diffusion Models and Score-based Models. If there are any missing valuable resources

Quick program made to generate alpha and delta tables for Hidden Markov Models

HMM_Calc Functions for generating Alpha and Delta tables from a Hidden Markov Model. Parameters: a: Matrix of transition probabilities. a[i][j] = a_{i

Pipeline for training LSA models using Scikit-Learn.

Latent Semantic Analysis Pipeline for training LSA models using Scikit-Learn. Usage Instead of writing custom code for latent semantic analysis, you j

GPT-3: Language Models are Few-Shot Learners

GPT-3: Language Models are Few-Shot Learners arXiv link Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-trainin

Official implementations for various pre-training models of ERNIE-family, covering topics of Language Understanding & Generation, Multimodal Understanding & Generation, and beyond.

English|简体中文 ERNIE是百度开创性提出的基于知识增强的持续学习语义理解框架,该框架将大数据预训练与多源丰富知识相结合,通过持续学习技术,不断吸收海量文本数据中词汇、结构、语义等方面的知识,实现模型效果不断进化。ERNIE在累积 40 余个典型 NLP 任务取得 SOTA 效果,并在 G

Revisiting Pre-trained Models for Chinese Natural Language Processing (Findings of EMNLP 2020)

This repository contains the resources in our paper "Revisiting Pre-trained Models for Chinese Natural Language Processing", which will be published i

Optimus: the first large-scale pre-trained VAE language model

Optimus: the first pre-trained Big VAE language model This repository contains source code necessary to reproduce the results presented in the EMNLP 2

(ACL-IJCNLP 2021) Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models.

BERT Convolutions Code for the paper Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models. Contains expe

Code for the ACL 2021 paper "Structural Guidance for Transformer Language Models"

Structural Guidance for Transformer Language Models This repository accompanies the paper, Structural Guidance for Transformer Language Models, publis

Research code for the paper "How Good is Your Tokenizer? On the Monolingual Performance of Multilingual Language Models"

Introduction This repository contains research code for the ACL 2021 paper "How Good is Your Tokenizer? On the Monolingual Performance of Multilingual

![[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations](https://github.com/kdexd/virtex/raw/master/docs/_static/system_figure.jpg)

[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations

VirTex: Learning Visual Representations from Textual Annotations Karan Desai and Justin Johnson University of Michigan CVPR 2021 arxiv.org/abs/2006.06

Dense Passage Retriever - is a set of tools and models for open domain Q&A task.

Dense Passage Retrieval Dense Passage Retrieval (DPR) - is a set of tools and models for state-of-the-art open-domain Q&A research. It is based on the

Simple Python library, distributed via binary wheels with few direct dependencies, for easily using wav2vec 2.0 models for speech recognition

Wav2Vec2 STT Python Beta Software Simple Python library, distributed via binary wheels with few direct dependencies, for easily using wav2vec 2.0 mode

Toolkit for developing and maintaining ML models

modelkit Python framework for production ML systems. modelkit is a minimalist yet powerful MLOps library for Python, built for people who want to depl

CoRe: Contrastive Recurrent State-Space Models

CoRe: Contrastive Recurrent State-Space Models This code implements the CoRe model and reproduces experimental results found in Robust Robotic Control

Milano is a tool for automating hyper-parameters search for your models on a backend of your choice.

Milano (This is a research project, not an official NVIDIA product.) Documentation https://nvidia.github.io/Milano Milano (Machine learning autotuner

Code & Models for Temporal Segment Networks (TSN) in ECCV 2016

Temporal Segment Networks (TSN) We have released MMAction, a full-fledged action understanding toolbox based on PyTorch. It includes implementation fo

DenseNet Implementation in Keras with ImageNet Pretrained Models

DenseNet-Keras with ImageNet Pretrained Models This is an Keras implementation of DenseNet with ImageNet pretrained weights. The weights are converted

Code and pre-trained models for "ReasonBert: Pre-trained to Reason with Distant Supervision", EMNLP'2021

ReasonBERT Code and pre-trained models for ReasonBert: Pre-trained to Reason with Distant Supervision, EMNLP'2021 Pretrained Models The pretrained mod

Implementation of the paper 'Sentence Bottleneck Autoencoders from Transformer Language Models'

Introduction This repository contains the code for the paper Sentence Bottleneck Autoencoders from Transformer Language Models by Ivan Montero, Nikola

Diagnostic tests for linguistic capacities in language models

LM diagnostics This repository contains the diagnostic datasets and experimental code for What BERT is not: Lessons from a new suite of psycholinguist

Code for Editing Factual Knowledge in Language Models

KnowledgeEditor Code for Editing Factual Knowledge in Language Models (https://arxiv.org/abs/2104.08164). @inproceedings{decao2021editing, title={Ed

Code for ACL2021 long paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases

LANKA This is the source code for paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases (ACL 2021, long paper) Referen

The official implementation of "BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Identify Analogies?, ACL 2021 main conference"

BERT is to NLP what AlexNet is to CV This is the official implementation of BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Iden

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

ACL'2021: LM-BFF: Better Few-shot Fine-tuning of Language Models

LM-BFF (Better Few-shot Fine-tuning of Language Models) This is the implementation of the paper Making Pre-trained Language Models Better Few-shot Lea

PyTorch source code of NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models"

This repository contains source code for NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models" (P

Source code for the ACL-IJCNLP 2021 paper entitled "T-DNA: Taming Pre-trained Language Models with N-gram Representations for Low-Resource Domain Adaptation" by Shizhe Diao et al.

T-DNA Source code for the ACL-IJCNLP 2021 paper entitled Taming Pre-trained Language Models with N-gram Representations for Low-Resource Domain Adapta

The code is the training example of AAAI2022 Security AI Challenger Program Phase 8: Data Centric Robot Learning on ML models.

Example code of [Tianchi AAAI2022 Security AI Challenger Program Phase 8]

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

Semi-automated vocabulary generation from semantic vector models

vec2word Semi-automated vocabulary generation from semantic vector models This script generates a list of potential conlang word forms along with asso

Dataset for the Research2Clinics @ NeurIPS 2021 Paper: What Do You See in this Patient? Behavioral Testing of Clinical NLP Models

Behavioral Testing of Clinical NLP Models This repository contains code for testing the behavior of clinical prediction models based on patient letter

Codes and models of NeurIPS2021 paper - DominoSearch: Find layer-wise fine-grained N:M sparse schemes from dense neural networks

DominoSearch This is repository for codes and models of NeurIPS2021 paper - DominoSearch: Find layer-wise fine-grained N:M sparse schemes from dense n

Official implementation of Representer Point Selection via Local Jacobian Expansion for Post-hoc Classifier Explanation of Deep Neural Networks and Ensemble Models at NeurIPS 2021

Representer Point Selection via Local Jacobian Expansion for Classifier Explanation of Deep Neural Networks and Ensemble Models This repository is the

Interpretable and Generalizable Person Re-Identification with Query-Adaptive Convolution and Temporal Lifting

QAConv Interpretable and Generalizable Person Re-Identification with Query-Adaptive Convolution and Temporal Lifting This PyTorch code is proposed in

Code for "On Memorization in Probabilistic Deep Generative Models"

On Memorization in Probabilistic Deep Generative Models This repository contains the code necessary to reproduce the experiments in On Memorization in

Deep Latent Force Models

Deep Latent Force Models This repository contains a PyTorch implementation of the deep latent force model (DLFM), presented in the paper, Compositiona

![[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️](https://github.com/sungyoon-lee/LossLandscapeMatters/raw/master/media/fig.png)

[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️

Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples This repository is the official implementation of "Tow

Auditing Black-Box Prediction Models for Data Minimization Compliance

Data-Minimization-Auditor An auditing tool for model-instability based data minimization that is introduced in "Auditing Black-Box Prediction Models f

Think Big, Teach Small: Do Language Models Distil Occam’s Razor?

Think Big, Teach Small: Do Language Models Distil Occam’s Razor? Software related to the paper "Think Big, Teach Small: Do Language Models Distil Occa

Code for models used in Bashiri et al., "A Flow-based latent state generative model of neural population responses to natural images".

A Flow-based latent state generative model of neural population responses to natural images Code for "A Flow-based latent state generative model of ne

Locally Most Powerful Bayesian Test for Out-of-Distribution Detection using Deep Generative Models

LMPBT Supplementary code for the Paper entitled ``Locally Most Powerful Bayesian Test for Out-of-Distribution Detection using Deep Generative Models"

Evolutionary Scale Modeling (esm): Pretrained language models for proteins

Evolutionary Scale Modeling This repository contains code and pre-trained weights for Transformer protein language models from Facebook AI Research, i

PipeTransformer: Automated Elastic Pipelining for Distributed Training of Large-scale Models

PipeTransformer: Automated Elastic Pipelining for Distributed Training of Large-scale Models This repository is the official implementation of the fol

A paper list of pre-trained language models (PLMs).

Large-scale pre-trained language models (PLMs) such as BERT and GPT have achieved great success and become a milestone in NLP.

PySlowFast: video understanding codebase from FAIR for reproducing state-of-the-art video models.

PySlowFast PySlowFast is an open source video understanding codebase from FAIR that provides state-of-the-art video classification models with efficie

Behavioral Testing of Clinical NLP Models

Behavioral Testing of Clinical NLP Models This repository contains code for testing the behavior of clinical prediction models based on patient letter

OpenL3: Open-source deep audio and image embeddings

OpenL3 OpenL3 is an open-source Python library for computing deep audio and image embeddings. Please refer to the documentation for detailed instructi

Datasets, Transforms and Models specific to Computer Vision

vision Datasets, Transforms and Models specific to Computer Vision Installation First install the nightly version of OneFlow python3 -m pip install on

Set of methods to ensemble boxes from different object detection models, including implementation of "Weighted boxes fusion (WBF)" method.

Set of methods to ensemble boxes from different object detection models, including implementation of "Weighted boxes fusion (WBF)" method.

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

The first GANs-based omics-to-omics translation framework

OmiTrans Please also have a look at our multi-omics multi-task DL freamwork 👀 : OmiEmbed The FIRST GANs-based omics-to-omics translation framework Xi

A benchmark dataset for emulating atmospheric radiative transfer in weather and climate models with machine learning (NeurIPS 2021 Datasets and Benchmarks Track)

ClimART - A Benchmark Dataset for Emulating Atmospheric Radiative Transfer in Weather and Climate Models Official PyTorch Implementation Using deep le

Molecular Sets (MOSES): A Benchmarking Platform for Molecular Generation Models

Molecular Sets (MOSES): A benchmarking platform for molecular generation models Deep generative models are rapidly becoming popular for the discovery

GraphRNN: Generating Realistic Graphs with Deep Auto-regressive Models

GraphRNN: Generating Realistic Graphs with Deep Auto-regressive Model This repository is the official PyTorch implementation of GraphRNN, a graph gene

Pre-trained Deep Learning models and demos (high quality and extremely fast)

OpenVINO™ Toolkit - Open Model Zoo repository This repository includes optimized deep learning models and a set of demos to expedite development of hi

An open-source Kazakh named entity recognition dataset (KazNERD), annotation guidelines, and baseline NER models.

Kazakh Named Entity Recognition This repository contains an open-source Kazakh named entity recognition dataset (KazNERD), named entity annotation gui

Simple command line tool to train and deploy your machine learning models with AWS SageMaker

metamaker Simple command line tool to train and deploy your machine learning models with AWS SageMaker Features metamaker enables you to: Build a dock

This repository provides an unified frameworks to train and test the state-of-the-art few-shot font generation (FFG) models.

FFG-benchmarks This repository provides an unified frameworks to train and test the state-of-the-art few-shot font generation (FFG) models. What is Fe

This repo contains simple to use, pretrained/training-less models for speaker diarization.

PyDiar This repo contains simple to use, pretrained/training-less models for speaker diarization. Supported Models Binary Key Speaker Modeling Based o

Official code for the paper "Why Do Self-Supervised Models Transfer? Investigating the Impact of Invariance on Downstream Tasks".

Why Do Self-Supervised Models Transfer? Investigating the Impact of Invariance on Downstream Tasks This repository contains the official code for the

Training open neural machine translation models

Train Opus-MT models This package includes scripts for training NMT models using MarianNMT and OPUS data for OPUS-MT. More details are given in the Ma

Real-time pose estimation accelerated with NVIDIA TensorRT

trt_pose Want to detect hand poses? Check out the new trt_pose_hand project for real-time hand pose and gesture recognition! trt_pose is aimed at enab

The lightweight PyTorch wrapper for high-performance AI research. Scale your models, not the boilerplate.

The lightweight PyTorch wrapper for high-performance AI research. Scale your models, not the boilerplate. Website • Key Features • How To Use • Docs •

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

Pytorch and Keras Implementations of Hyperspectral Image Classification -- Traditional to Deep Models: A Survey for Future Prospects.

The repository contains the implementations for Hyperspectral Image Classification -- Traditional to Deep Models: A Survey for Future Prospects. Model

Qlib is an AI-oriented quantitative investment platform

Qlib is an AI-oriented quantitative investment platform, which aims to realize the potential, empower the research, and create the value of AI technologies in quantitative investment.

High performance distributed framework for training deep learning recommendation models based on PyTorch.

PERSIA (Parallel rEcommendation tRaining System with hybrId Acceleration) is developed by AI platform@Kuaishou Technology, collaborating with ETH. It

The FIRST GANs-based omics-to-omics translation framework

OmiTrans Please also have a look at our multi-omics multi-task DL freamwork 👀 : OmiEmbed The FIRST GANs-based omics-to-omics translation framework Xi

Make differentially private training of transformers easy for everyone

private-transformers This codebase facilitates fast experimentation of differentially private training of Hugging Face transformers. What is this? Why

Pytorch library for end-to-end transformer models training and serving

Pytorch library for end-to-end transformer models training and serving

A curated list of awesome papers for Semantic Retrieval (TOIS Accepted: Semantic Models for the First-stage Retrieval: A Comprehensive Review).

A curated list of awesome papers for Semantic Retrieval (TOIS Accepted: Semantic Models for the First-stage Retrieval: A Comprehensive Review).

Codes for NeurIPS 2021 paper "Adversarial Neuron Pruning Purifies Backdoored Deep Models"

Adversarial Neuron Pruning Purifies Backdoored Deep Models Code for NeurIPS 2021 "Adversarial Neuron Pruning Purifies Backdoored Deep Models" by Dongx

In the case of your data having only 1 channel while want to use timm models

timm_custom Description In the case of your data having only 1 channel while want to use timm models (with or without pretrained weights), run the fol

A model checker for verifying properties in epistemic models

Epistemic Model Checker This is a model checker for verifying properties in epistemic models. The goal of the model checker is to check for Pluralisti

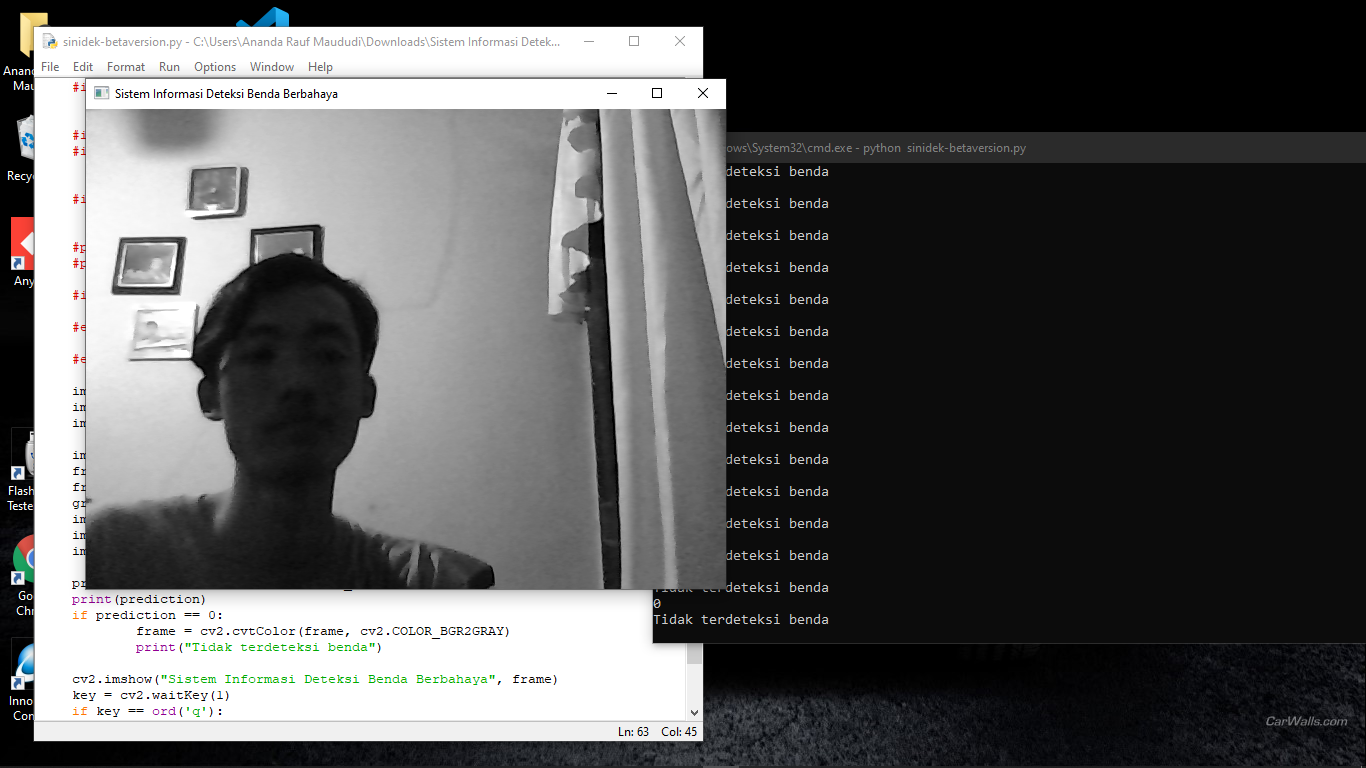

Si Adek Keras is software VR dangerous object detection.

Si Adek Python Keras Sistem Informasi Deteksi Benda Berbahaya Keras Python. Version 1.0 Developed by Ananda Rauf Maududi. Developed date: 24 November

This repository contains several image-to-image translation models, whcih were tested for RGB to NIR image generation. The models are Pix2Pix, Pix2PixHD, CycleGAN and PointWise.

RGB2NIR_Experimental This repository contains several image-to-image translation models, whcih were tested for RGB to NIR image generation. The models

Code for text augmentation method leveraging large-scale language models

HyperMix Code for our paper GPT3Mix and conducting classification experiments using GPT-3 prompt-based data augmentation. Getting Started Installing P

TensorFlow port of PyTorch Image Models (timm) - image models with pretrained weights.

TensorFlow-Image-Models Introduction Usage Models Profiling License Introduction TensorfFlow-Image-Models (tfimm) is a collection of image models with

Augmenting Physical Models with Deep Networks for Complex Dynamics Forecasting

Official code of APHYNITY Augmenting Physical Models with Deep Networks for Complex Dynamics Forecasting (ICLR 2021, Oral) Yuan Yin*, Vincent Le Guen*

Efficient semidefinite bounds for multi-label discrete graphical models.

Low rank solvers #################################### benchmark/ : folder with the random instances used in the paper. ############################

VGGVox models for Speaker Identification and Verification trained on the VoxCeleb (1 & 2) datasets

VGGVox models for speaker identification and verification This directory contains code to import and evaluate the speaker identification and verificat

Code for DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning

DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning Pytorch Implementation for DisCo: Remedy Self-supervi

Self-Supervised Document-to-Document Similarity Ranking via Contextualized Language Models and Hierarchical Inference

Self-Supervised Document Similarity Ranking (SDR) via Contextualized Language Models and Hierarchical Inference This repo is the implementation for SD

This is a Blender 2.9 script for importing mixamo Models to Godot-3

Mixamo-To-Godot This is a Blender 2.9 script for importing mixamo Models to Godot-3 The script does the following things Imports the mixamo models fro

FasterAI: A library to make smaller and faster models with FastAI.

Fasterai fasterai is a library created to make neural network smaller and faster. It essentially relies on common compression techniques for networks

An easy to use Natural Language Processing library and framework for predicting, training, fine-tuning, and serving up state-of-the-art NLP models.

Welcome to AdaptNLP A high level framework and library for running, training, and deploying state-of-the-art Natural Language Processing (NLP) models

A library that integrates huggingface transformers with the world of fastai, giving fastai devs everything they need to train, evaluate, and deploy transformer specific models.

blurr A library that integrates huggingface transformers with version 2 of the fastai framework Install You can now pip install blurr via pip install

Creating multimodal multitask models

Fusion Brain Challenge The English version of the document can be found here. Обновления 01.11 Мы выкладываем пример данных, аналогичных private test

Towards Improving Embedding Based Models of Social Network Alignment via Pseudo Anchors

PSML paper: Towards Improving Embedding Based Models of Social Network Alignment via Pseudo Anchors PSML_IONE,PSML_ABNE,PSML_DEEPLINK,PSML_SNNA: numpy

Finetune SSL models for MOS prediction

Finetune SSL models for MOS prediction This is code for our paper under review for ICASSP 2022: "Generalization Ability of MOS Prediction Networks" Er

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models.

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models. Feature-engine's transformers follow scikit-learn's functionality with fit() and transform() methods to first learn the transforming parameters from data and then transform the data.

apricot implements submodular optimization for the purpose of selecting subsets of massive data sets to train machine learning models quickly.

Please consider citing the manuscript if you use apricot in your academic work! You can find more thorough documentation here. apricot implements subm

Deep and online learning with spiking neural networks in Python

Introduction The brain is the perfect place to look for inspiration to develop more efficient neural networks. One of the main differences with modern

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models.

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models. Hyperactive: is very easy to lear

The purpose of this project is to share knowledge on how awesome Streamlit is and can be

Awesome Streamlit The fastest way to build Awesome Tools and Apps! Powered by Python! The purpose of this project is to share knowledge on how Awesome

Code to compute permutation and drop-column importances in Python scikit-learn models

Feature importances for scikit-learn machine learning models By Terence Parr and Kerem Turgutlu. See Explained.ai for more stuff. The scikit-learn Ran

A method that utilized Generative Adversarial Network (GAN) to interpret the black-box deep image classifier models by PyTorch.

A method that utilized Generative Adversarial Network (GAN) to interpret the black-box deep image classifier models by PyTorch.

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models.

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models. Solve a variety of tasks with pre-trained models or finetune them in

Resilience from Diversity: Population-based approach to harden models against adversarial attacks

Resilience from Diversity: Population-based approach to harden models against adversarial attacks Requirements To install requirements: pip install -r