1401 Repositories

Python video-swin-transformer Libraries

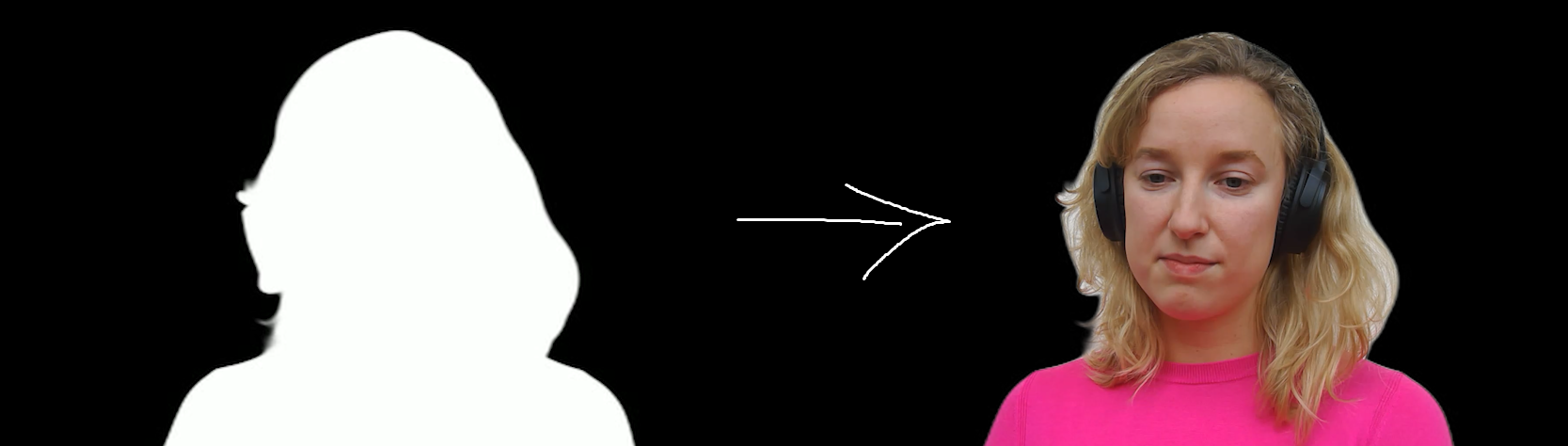

Rembg Video Virtual Green Screen Edition

Rembg Virtual Greenscreen Edition is a tool to create a green screen matte for videos

An easier way to build neural search on the cloud

An easier way to build neural search on the cloud Jina is a deep learning-powered search framework for building cross-/multi-modal search systems (e.g

git《Self-Attention Attribution: Interpreting Information Interactions Inside Transformer》(AAAI 2021) GitHub:

Self-Attention Attribution This repository contains the implementation for AAAI-2021 paper Self-Attention Attribution: Interpreting Information Intera

a general-purpose Transformer based vision backbone

Swin Transformer By Ze Liu*, Yutong Lin*, Yue Cao*, Han Hu*, Yixuan Wei, Zheng Zhang, Stephen Lin and Baining Guo. This repo is the official implement

Conversion of Image, video, text into ASCII format

asciju Python package that converts image to ascii Free software: MIT license

git git《Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking》(CVPR 2021) GitHub:git2] 《Masksembles for Uncertainty Estimation》(CVPR 2021) GitHub:git3]

Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking Ning Wang, Wengang Zhou, Jie Wang, and Houqiang Li Accepted by CVPR

Rotary Transformer

Rotary Transformer Rotary Transformer,简称RoFormer,是我们自研的语言模型之一,主要是为Transformer结构设计了新的旋转式位置编码(Rotary Position Embedding,RoPE)。RoPE具有良好的理论性质,且是目前唯一一种可以应用

Source code of our TPAMI'21 paper Dual Encoding for Video Retrieval by Text and CVPR'19 paper Dual Encoding for Zero-Example Video Retrieval.

Dual Encoding for Video Retrieval by Text Source code of our TPAMI'21 paper Dual Encoding for Video Retrieval by Text and CVPR'19 paper Dual Encoding

A collection of resources (including the papers and datasets) of OCR (Optical Character Recognition).

OCR Resources This repository contains a collection of resources (including the papers and datasets) of OCR (Optical Character Recognition). Contents

Adaptive Attention Span for Reinforcement Learning

Adaptive Transformers in RL Official implementation of Adaptive Transformers in RL In this work we replicate several results from Stabilizing Transfor

Code for SentiBERT: A Transferable Transformer-Based Architecture for Compositional Sentiment Semantics (ACL'2020).

SentiBERT Code for SentiBERT: A Transferable Transformer-Based Architecture for Compositional Sentiment Semantics (ACL'2020). https://arxiv.org/abs/20

Transformer Tracking (CVPR2021)

TransT - Transformer Tracking [CVPR2021] Official implementation of the TransT (CVPR2021) , including training code and trained models. We are revisin

About A python based Apple Quicktime protocol,you can record audio and video from real iOS devices

介绍 本应用程序使用 python 实现,可以通过 USB 连接 iOS 设备进行屏幕共享 高帧率(30〜60fps) 高画质 低延迟(200ms) 非侵入性 支持多设备并行 Mac OSX 安装 python =3.7 brew install libusb pkg-config 如需使用 g

[CVPR 2021] Modular Interactive Video Object Segmentation: Interaction-to-Mask, Propagation and Difference-Aware Fusion

[CVPR 2021] Modular Interactive Video Object Segmentation: Interaction-to-Mask, Propagation and Difference-Aware Fusion

Open source code for Paper "A Co-Interactive Transformer for Joint Slot Filling and Intent Detection"

A Co-Interactive Transformer for Joint Slot Filling and Intent Detection This repository contains the PyTorch implementation of the paper: A Co-Intera

Unofficial implementation of "TTNet: Real-time temporal and spatial video analysis of table tennis" (CVPR 2020)

TTNet-Pytorch The implementation for the paper "TTNet: Real-time temporal and spatial video analysis of table tennis" An introduction of the project c

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Simple screen recorder

Kooha Simple screen recorder Description Kooha is a simple screen recorder built with GTK. It allows you to record your screen and also audio from you

Model parallel transformers in Jax and Haiku

Mesh Transformer Jax A haiku library using the new(ly documented) xmap operator in Jax for model parallelism of transformers. See enwik8_example.py fo

FLAVR is a fast, flow-free frame interpolation method capable of single shot multi-frame prediction

FLAVR is a fast, flow-free frame interpolation method capable of single shot multi-frame prediction. It uses a customized encoder decoder architecture with spatio-temporal convolutions and channel gating to capture and interpolate complex motion trajectories between frames to generate realistic high frame rate videos. This repository contains original source code for the paper accepted to CVPR 2021.

![[CVPR2021 Oral] End-to-End Video Instance Segmentation with Transformers](https://user-images.githubusercontent.com/16319629/110786946-b99aa080-82a7-11eb-98e4-85478ca4eeac.png)

[CVPR2021 Oral] End-to-End Video Instance Segmentation with Transformers

VisTR: End-to-End Video Instance Segmentation with Transformers This is the official implementation of the VisTR paper: Installation We provide instru

This is the code for HOI Transformer

HOI Transformer Code for CVPR 2021 accepted paper End-to-End Human Object Interaction Detection with HOI Transformer. Reproduction We recomend you to

CoTr: Efficiently Bridging CNN and Transformer for 3D Medical Image Segmentation

CoTr: Efficient 3D Medical Image Segmentation by bridging CNN and Transformer This is the official pytorch implementation of the CoTr: Paper: CoTr: Ef

![[CVPR2021] The source code for our paper 《Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Learning》.](https://github.com/FingerRec/TBE/raw/main/figures/be_visualization.png)

[CVPR2021] The source code for our paper 《Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Learning》.

TBE The source code for our paper "Removing the Background by Adding the Background: Towards Background Robust Self-supervised Video Representation Le

This Bot can extract audios and subtitles from video files

Send any valid video file and the bot shows you available streams in it that can be extracted!!

This repo provides the official code for TransBTS: Multimodal Brain Tumor Segmentation Using Transformer (https://arxiv.org/pdf/2103.04430.pdf).

TransBTS: Multimodal Brain Tumor Segmentation Using Transformer This repo is the official implementation for TransBTS: Multimodal Brain Tumor Segmenta

本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。

【关于 NLP】那些你不知道的事 作者:杨夕、芙蕖、李玲、陈海顺、twilight、LeoLRH、JimmyDU、艾春辉、张永泰、金金金 介绍 本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。 目录架构 一、【

Code associated with the "Data Augmentation using Pre-trained Transformer Models" paper

Data Augmentation using Pre-trained Transformer Models Code associated with the Data Augmentation using Pre-trained Transformer Models paper Code cont

Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image classification, in Pytorch

Transformer in Transformer Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image c

Implementation of E(n)-Transformer, which extends the ideas of Welling's E(n)-Equivariant Graph Neural Network to attention

E(n)-Equivariant Transformer (wip) Implementation of E(n)-Equivariant Transformer, which extends the ideas from Welling's E(n)-Equivariant G

plumi video sharing

December 2017 update We are moving tickets from the Plumi tracker (trac.plumi.org) here, for historical reasons. Plumi video sharing system Plumi is a

Automatic Video Library Manager for TV Shows. It watches for new episodes of your favorite shows, and when they are posted it does its magic.

Automatic Video Library Manager for TV Shows. It watches for new episodes of your favorite shows, and when they are posted it does its magic. Exclusiv

Been busy guys, will be reviewing and integrating pull requests shortly. Thanks to all contributors! LATEST RELEASE: 6.0.0 - flatpak @ https://flathub.org/apps/details/com.ozmartians.VidCutter - snap @ https://snapcraft.io/vidcutter - see https://github.com/ozmartian/vidcutter/releases for more details...

VidCutter 6 released on Flathub! VidCutter is now available as a flatpak at Flathub and is the most reliable option for Linux. All dependencies come b

plumi video sharing

December 2017 update We are moving tickets from the Plumi tracker (trac.plumi.org) here, for historical reasons. Plumi video sharing system Plumi is a

OpenShot Video Editor is an award-winning free and open-source video editor for Linux, Mac, and Windows, and is dedicated to delivering high quality video editing and animation solutions to the world.

OpenShot Video Editor is an award-winning free and open-source video editor for Linux, Mac, and Windows, and is dedicated to delivering high quality v

Video Editor for Linux

Project on break until late March. NEW RELEASE 2.8 IS OUT NOW. INSTALLING: see here. RELEASE NOTES AVAILABLE here. Introduction Features Releases Inst

Pytorch Code for "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation"

Medical-Transformer Pytorch Code for the paper "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation" About this repo: This repo

Repository to create Ascii art in CMD based on video file.

Made to take any file format, and transform it into ascii art, displayed as a video in the cmd. If the cmd formatting is wrong, try zooming a little and remember to make cmd fullscreen. I made my cmd fullscreen, and zoomed out one tic. Written in Python 3.9

Todos os exercícios do Curso de Python, do canal Curso em Vídeo, resolvidos em Python, Javascript, Java, C++, C# e mais...

Exercícios - CeV Oferecido por Linguagens utilizadas atualmente O que vai encontrar aqui? 👀 Esse repositório é dedicado a armazenar todos os enunciad

Implementation of SETR model, Original paper: Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers.

SETR - Pytorch Since the original paper (Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers.) has no official

A multi-entity Transformer for multi-agent spatiotemporal modeling.

baller2vec This is the repository for the paper: Michael A. Alcorn and Anh Nguyen. baller2vec: A Multi-Entity Transformer For Multi-Agent Spatiotempor

Implementation of TimeSformer, a pure attention-based solution for video classification

TimeSformer - Pytorch Implementation of TimeSformer, a pure and simple attention-based solution for reaching SOTA on video classification.

Official PyTorch code for ClipBERT, an efficient framework for end-to-end learning on image-text and video-text tasks

Official PyTorch code for ClipBERT, an efficient framework for end-to-end learning on image-text and video-text tasks. It takes raw videos/images + text as inputs, and outputs task predictions. ClipBERT is designed based on 2D CNNs and transformers, and uses a sparse sampling strategy to enable efficient end-to-end video-and-language learning.

This program is to make a video based on Deep Dream

This program is to make a video based on Deep Dream. The program is modified from DeepDreamAnim and DeepDreamVideo with additional functions for bleding two frames based on the optical flows. It also supports the image division to apply the Deep Dream algorithm to a large image.

Implementation of self-attention mechanisms for general purpose. Focused on computer vision modules. Ongoing repository.

Self-attention building blocks for computer vision applications in PyTorch Implementation of self attention mechanisms for computer vision in PyTorch

Neural Re-rendering for Full-frame Video Stabilization

NeRViS: Neural Re-rendering for Full-frame Video Stabilization Project Page | Video | Paper | Google Colab Setup Setup environment for [Yu and Ramamoo

NeRViS: Neural Re-rendering for Full-frame Video Stabilization

Neural Re-rendering for Full-frame Video Stabilization

Summarization, translation, sentiment-analysis, text-generation and more at blazing speed using a T5 version implemented in ONNX.

Summarization, translation, Q&A, text generation and more at blazing speed using a T5 version implemented in ONNX. This package is still in alpha stag

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Code for the paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer"

T5: Text-To-Text Transfer Transformer The t5 library serves primarily as code for reproducing the experiments in Exploring the Limits of Transfer Lear

An easier way to build neural search on the cloud

An easier way to build neural search on the cloud Jina is a deep learning-powered search framework for building cross-/multi-modal search systems (e.g

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

TransGAN: Two Transformers Can Make One Strong GAN

[Preprint] "TransGAN: Two Transformers Can Make One Strong GAN", Yifan Jiang, Shiyu Chang, Zhangyang Wang

Open source repository for the code accompanying the paper 'Non-Rigid Neural Radiance Fields Reconstruction and Novel View Synthesis of a Deforming Scene from Monocular Video'.

Non-Rigid Neural Radiance Fields This is the official repository for the project "Non-Rigid Neural Radiance Fields: Reconstruction and Novel View Synt

Implementation of TabTransformer, attention network for tabular data, in Pytorch

Tab Transformer Implementation of Tab Transformer, attention network for tabular data, in Pytorch. This simple architecture came within a hair's bread

Efficient 3D Backbone Network for Temporal Modeling

VoV3D is an efficient and effective 3D backbone network for temporal modeling implemented on top of PySlowFast. Diverse Temporal Aggregation and

Authors implementation of LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant self-at

This is a Pytorch implementation of the paper: Self-Supervised Graph Transformer on Large-Scale Molecular Data.

This is a Pytorch implementation of the paper: Self-Supervised Graph Transformer on Large-Scale Molecular Data.

Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series Forecasting.

Non-AR Spatial-Temporal Transformer Introduction Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series For

Download courses from khanacademy.org

khan-dl A python script to download courses from Khan Academy using youtube-dl and beautifulsoup4.

Real-time video and audio streams over the network, with Streamlit.

streamlit-webrtc Example You can try out the sample app using the following commands.

Summarization, translation, sentiment-analysis, text-generation and more at blazing speed using a T5 version implemented in ONNX.

Summarization, translation, Q&A, text generation and more at blazing speed using a T5 version implemented in ONNX. This package is still in alpha stag

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Code for the paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer"

T5: Text-To-Text Transfer Transformer The t5 library serves primarily as code for reproducing the experiments in Exploring the Limits of Transfer Lear

An easier way to build neural search on the cloud

An easier way to build neural search on the cloud Jina is a deep learning-powered search framework for building cross-/multi-modal search systems (e.g

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

Script for downloading Coursera.org videos and naming them.

Coursera Downloader Coursera Downloader Introduction Features Disclaimer Installation instructions Recommended installation method for all Operating S

Command-line program to download videos from YouTube.com and other video sites

youtube-dl - download videos from youtube.com or other video platforms INSTALLATION DESCRIPTION OPTIONS CONFIGURATION OUTPUT TEMPLATE FORMAT SELECTION

NLP Core Library and Model Zoo based on PaddlePaddle 2.0

PaddleNLP 2.0拥有丰富的模型库、简洁易用的API与高性能的分布式训练的能力,旨在为飞桨开发者提升文本建模效率,并提供基于PaddlePaddle 2.0的NLP领域最佳实践。

Implementation of Feedback Transformer in Pytorch

Feedback Transformer - Pytorch Simple implementation of Feedback Transformer in Pytorch. They improve on Transformer-XL by having each token have acce

Transformer-based Text Auto-encoder (T-TA) using TensorFlow 2.

T-TA (Transformer-based Text Auto-encoder) This repository contains codes for Transformer-based Text Auto-encoder (T-TA, paper: Fast and Accurate Deep

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

DALL-E in Pytorch Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch. It will also contain CLIP for ranking the ge

Boltstream Live Video Streaming Website + Backend

Boltstream Self-hosted Live Video Streaming Website + Backend Reference

a pull switch (or BYO button) that gets you out of video calls, quick

zoomout a pull switch (or BYO button) that gets you out of video calls, quick. As seen on Twitter System compatibility Tested on macOS Catalina (10.15

Implementation of Bottleneck Transformer in Pytorch

Bottleneck Transformer - Pytorch Implementation of Bottleneck Transformer, SotA visual recognition model with convolution + attention that outperforms

Jittor implementation of PCT:Point Cloud Transformer

PCT: Point Cloud Transformer This is a Jittor implementation of PCT: Point Cloud Transformer.

Trankit is a Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing

Trankit: A Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing Trankit is a light-weight Transformer-based Pyth

Multiple-Object Tracking with Transformer

TransTrack: Multiple-Object Tracking with Transformer Introduction TransTrack: Multiple-Object Tracking with Transformer Models Training data Training

Chinese NewsTitle Generation Project by GPT2.带有超级详细注释的中文GPT2新闻标题生成项目。

GPT2-NewsTitle 带有超详细注释的GPT2新闻标题生成项目 UpDate 01.02.2021 从网上收集数据,将清华新闻数据、搜狗新闻数据等新闻数据集,以及开源的一些摘要数据进行整理清洗,构建一个较完善的中文摘要数据集。 数据集清洗时,仅进行了简单地规则清洗。

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting This is the origin Pytorch implementation of Informer in the followin

Implementation of the Point Transformer layer, in Pytorch

Point Transformer - Pytorch Implementation of the Point Transformer self-attention layer, in Pytorch. The simple circuit above seemed to have allowed

Python script to generate a visualization of various sorting algorithms, image or video.

sorting_algo_visualizer Python script to generate a visualization of various sorting algorithms, image or video.

Graph Transformer Architecture. Source code for

Graph Transformer Architecture Source code for the paper "A Generalization of Transformer Networks to Graphs" by Vijay Prakash Dwivedi and Xavier Bres

Big Bird: Transformers for Longer Sequences

BigBird, is a sparse-attention based transformer which extends Transformer based models, such as BERT to much longer sequences. Moreover, BigBird comes along with a theoretical understanding of the capabilities of a complete transformer that the sparse model can handle.

Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

Segmentation Transformer Implementation of Segmentation Transformer in PyTorch, a new model to achieve SOTA in semantic segmentation while using trans

Explainability for Vision Transformers (in PyTorch)

Explainability for Vision Transformers (in PyTorch) This repository implements methods for explainability in Vision Transformers

Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch.

SE3 Transformer - Pytorch Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch. May be needed for replicating Alphafold2 resu

Playable Video Generation

Playable Video Generation Playable Video Generation Willi Menapace, Stéphane Lathuilière, Sergey Tulyakov, Aliaksandr Siarohin, Elisa Ricci Paper: ArX

Implementation of Lie Transformer, Equivariant Self-Attention, in Pytorch

Lie Transformer - Pytorch (wip) Implementation of Lie Transformer, Equivariant Self-Attention, in Pytorch. Only the SE3 version will be present in thi

Code for "Layered Neural Rendering for Retiming People in Video."

Layered Neural Rendering in PyTorch This repository contains training code for the examples in the SIGGRAPH Asia 2020 paper "Layered Neural Rendering

TDN: Temporal Difference Networks for Efficient Action Recognition

TDN: Temporal Difference Networks for Efficient Action Recognition Overview We release the PyTorch code of the TDN(Temporal Difference Networks).

Pytorch implementation of PCT: Point Cloud Transformer

PCT: Point Cloud Transformer This is a Pytorch implementation of PCT: Point Cloud Transformer.

When doing audio and video sentiment recognition, I found that a lot of code is duplicated, often a function in different time debugging for a long time, based on this problem, I want to manage all the previous work, organized into an open source library can be iterative. For their own use and others.

FastAudioVisual Our project is developed here. The goal finish time is March 01, 2021 What is FastAudioVisual? FastAudioVisual is a tool that allows u

Automatically erase objects in the video, such as logo, text, etc.

Video-Auto-Wipe Read English Introduction:Here 本人不定期的基于生成技术制作一些好玩有趣的算法模型,这次带来的作品是“视频擦除”方向的应用模型,它实现的功能是自动感知到视频中我们不想看见的部分(譬如广告、水印、字幕、图标等等)然后进行擦除。由于图标擦

PyTorch implementation of "Conformer: Convolution-augmented Transformer for Speech Recognition" (INTERSPEECH 2020)

PyTorch implementation of Conformer: Convolution-augmented Transformer for Speech Recognition. Transformer models are good at capturing content-based

Graph neural network message passing reframed as a Transformer with local attention

Adjacent Attention Network An implementation of a simple transformer that is equivalent to graph neural network where the message passing is done with

Game Agent Framework. Helping you create AIs / Bots that learn to play any game you own!

Serpent.AI - Game Agent Framework (Python) Update: Revival (May 2020) Development work has resumed on the framework with the aim of bringing it into 2

High-performance cross-platform Video Processing Python framework powerpacked with unique trailblazing features :fire:

Releases | Gears | Documentation | Installation | License VidGear is a High-Performance Video Processing Python Library that provides an easy-to-use,

Video processing routines for SciPy

scikit-video Video Processing SciKit BETA Video processing algorithms, including I/O, quality metrics, temporal filtering, motion/object detection, mo