943 Repositories

Python vision-longformer Libraries

Implement object segmentation on images using HOG algorithm proposed in CVPR 2005

HOG Algorithm Implementation Description HOG (Histograms of Oriented Gradients) Algorithm is an algorithm aiming to realize object segmentation (edge

A collection of resources on neural rendering.

awesome neural rendering A collection of resources on neural rendering. Contributing If you think I have missed out on something (or) have any suggest

![[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation](https://github.com/Ha0Tang/SelectionGAN/raw/master/imgs/motivation.jpg)

[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation

SelectionGAN for Guided Image-to-Image Translation CVPR Paper | Extended Paper | Guided-I2I-Translation-Papers Citation If you use this code for your

Adversarial Texture Optimization from RGB-D Scans (CVPR 2020).

AdversarialTexture Adversarial Texture Optimization from RGB-D Scans (CVPR 2020). Scanning Data Download Please refer to data directory for details. B

This repository contains the source codes for the paper AtlasNet V2 - Learning Elementary Structures.

AtlasNet V2 - Learning Elementary Structures This work was build upon Thibault Groueix's AtlasNet and 3D-CODED projects. (you might want to have a loo

AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation

AtlasNet [Project Page] [Paper] [Talk] AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation Thibault Groueix, Matthew Fisher, Vladimir

A simple baseline for 3d human pose estimation in PyTorch.

3d_pose_baseline_pytorch A PyTorch implementation of a simple baseline for 3d human pose estimation. You can check the original Tensorflow implementat

A simple baseline for 3d human pose estimation in tensorflow. Presented at ICCV 17.

3d-pose-baseline This is the code for the paper Julieta Martinez, Rayat Hossain, Javier Romero, James J. Little. A simple yet effective baseline for 3

Computer vision - fun segmentation experience using classic and deep tools :)

Computer_Vision_Segmentation_Fun Segmentation of Images and Video. Tools: pytorch Models: Classic model - GrabCut Deep model - Deeplabv3_resnet101 Flo

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Automatically remove the mosaics in images and videos, or add mosaics to them.

Automatically remove the mosaics in images and videos, or add mosaics to them.

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t

Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources.

Illumination_Decomposition Code for TIP 2017 paper --- Illumination Decomposition for Photograph with Multiple Light Sources. This code implements the

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

This repository builds a basic vision transformer from scratch so that one beginner can understand the theory of vision transformer.

vision-transformer-from-scratch This repository includes several kinds of vision transformers from scratch so that one beginner can understand the the

Collection of common code that's shared among different research projects in FAIR computer vision team.

fvcore fvcore is a light-weight core library that provides the most common and essential functionality shared in various computer vision frameworks de

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

Longformer: The Long-Document Transformer

Longformer Longformer and LongformerEncoderDecoder (LED) are pretrained transformer models for long documents. ***** New December 1st, 2020: Longforme

Code for the paper "VisualBERT: A Simple and Performant Baseline for Vision and Language"

This repository contains code for the following two papers: VisualBERT: A Simple and Performant Baseline for Vision and Language (arxiv) with a short

Multi Task Vision and Language

12-in-1: Multi-Task Vision and Language Representation Learning Please cite the following if you use this code. Code and pre-trained models for 12-in-

Pytorch implementation of the paper "Class-Balanced Loss Based on Effective Number of Samples"

Class-balanced-loss-pytorch Pytorch implementation of the paper Class-Balanced Loss Based on Effective Number of Samples presented at CVPR'19. Yin Cui

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning. CVPR 2018

Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning Tensorflow code and models for the paper: Large Scale Fine-Grained Categ

Learn the Deep Learning for Computer Vision in three steps: theory from base to SotA, code in PyTorch, and space-repetition with Anki

DeepCourse: Deep Learning for Computer Vision arthurdouillard.com/deepcourse/ This is a course I'm giving to the French engineering school EPITA each

💃 VALSE: A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena

💃 VALSE: A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena.

MinkLoc3D-SI: 3D LiDAR place recognition with sparse convolutions,spherical coordinates, and intensity

MinkLoc3D-SI: 3D LiDAR place recognition with sparse convolutions,spherical coordinates, and intensity Introduction The 3D LiDAR place recognition aim

BOVText: A Large-Scale, Multidimensional Multilingual Dataset for Video Text Spotting

BOVText: A Large-Scale, Bilingual Open World Dataset for Video Text Spotting Updated on December 10, 2021 (Release all dataset(2021 videos)) Updated o

Code basis for the paper "Camera Condition Monitoring and Readjustment by means of Noise and Blur" (2021)

Camera Condition Monitoring and Readjustment by means of Noise and Blur This repository contains the source code of the paper: Wischow, M., Gallego, G

Get 2D point positions (e.g., facial landmarks) projected on 3D mesh

points2d_projection_mesh Input 2D points (e.g. facial landmarks) on an image Camera parameters (extrinsic and intrinsic) of the image Aligned 3D mesh

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

SRA's seminar on Introduction to Computer Vision Fundamentals

Introduction to Computer Vision This repository includes basics to : Python Numpy: A python library Git Computer Vision. The aim of this repository is

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

Python scripts for performing object detection with the 1000 labels of the ImageNet dataset in ONNX.

Python scripts for performing object detection with the 1000 labels of the ImageNet dataset in ONNX. The repository combines a class agnostic object localizer to first detect the objects in the image, and next a ResNet50 model trained on ImageNet is used to label each box.

Computer Vision Script to recognize first person motion, developed as final project for the course "Machine Learning and Deep Learning"

Overview of The Code BaseColab/MLDL_FPAR.pdf: it contains the full explanation of our work Base Colab: it contains the base colab used to perform all

BOVText: A Large-Scale, Multidimensional Multilingual Dataset for Video Text Spotting

BOVText: A Large-Scale, Bilingual Open World Dataset for Video Text Spotting Updated on December 10, 2021 (Release all dataset(2021 videos)) Updated o

Official code of IterMVS

IterMVS official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo' Introduction IterMVS is a novel lear

Re-implememtation of MAE (Masked Autoencoders Are Scalable Vision Learners) using PyTorch.

mae-repo PyTorch re-implememtation of "masked autoencoders are scalable vision learners". In this repo, it heavily borrows codes from codebase https:/

Official Implementation for the paper DeepFace-EMD: Re-ranking Using Patch-wise Earth Mover’s Distance Improves Out-Of-Distribution Face Identification

DeepFace-EMD: Re-ranking Using Patch-wise Earth Mover’s Distance Improves Out-Of-Distribution Face Identification Official Implementation for the pape

This is the official code for the paper "Learning with Nested Scene Modeling and Cooperative Architecture Search for Low-Light Vision"

RUAS This is the official code for the paper "Learning with Nested Scene Modeling and Cooperative Architecture Search for Low-Light Vision" A prelimin

Official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo'

IterMVS official source code of paper 'IterMVS: Iterative Probability Estimation for Efficient Multi-View Stereo' Introduction IterMVS is a novel lear

Supplemental learning materials for "Fourier Feature Networks and Neural Volume Rendering"

Fourier Feature Networks and Neural Volume Rendering This repository is a companion to a lecture given at the University of Cambridge Engineering Depa

Class-Balanced Loss Based on Effective Number of Samples. CVPR 2019

Class-Balanced Loss Based on Effective Number of Samples Tensorflow code for the paper: Class-Balanced Loss Based on Effective Number of Samples Yin C

Conceptual 12M is a dataset containing (image-URL, caption) pairs collected for vision-and-language pre-training.

Conceptual 12M We introduce the Conceptual 12M (CC12M), a dataset with ~12 million image-text pairs meant to be used for vision-and-language pre-train

Python library for computer vision labeling tasks. The core functionality is to translate bounding box annotations between different formats-for example, from coco to yolo.

PyLabel pip install pylabel PyLabel is a Python package to help you prepare image datasets for computer vision models including PyTorch and YOLOv5. I

A simple rest api that classifies pneumonia infection weather it is Normal, Pneumonia Virus or Pneumonia Bacteria from a chest-x-ray image.

This is a simple rest api that classifies pneumonia infection weather it is Normal, Pneumonia Virus or Pneumonia Bacteria from a chest-x-ray image.

Localized representation learning from Vision and Text (LoVT)

Localized Vision-Text Pre-Training Contrastive learning has proven effective for pre- training image models on unlabeled data and achieved great resul

CALVIN - A benchmark for Language-Conditioned Policy Learning for Long-Horizon Robot Manipulation Tasks

CALVIN CALVIN - A benchmark for Language-Conditioned Policy Learning for Long-Horizon Robot Manipulation Tasks Oier Mees, Lukas Hermann, Erick Rosete,

Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal"

Patch-wise Adversarial Removal Implementation of paper "Decision-based Black-box Attack Against Vision Transformers via Patch-wise Adversarial Removal

Repository aimed at compiling code, papers, demos etc.. related to my PhD on 3D vision and machine learning for fruit detection and shape estimation at the university of Lincoln

PhD_3DPerception Repository aimed at compiling code, papers, demos etc.. related to my PhD on 3D vision and machine learning for fruit detection and s

MLPs for Vision and Langauge Modeling (Coming Soon)

MLP Architectures for Vision-and-Language Modeling: An Empirical Study MLP Architectures for Vision-and-Language Modeling: An Empirical Study (Code wi

Post-Training Quantization for Vision transformers.

PTQ4ViT Post-Training Quantization Framework for Vision Transformers. We use the twin uniform quantization method to reduce the quantization error on

MapReader: A computer vision pipeline for the semantic exploration of maps at scale

MapReader A computer vision pipeline for the semantic exploration of maps at scale MapReader is an end-to-end computer vision (CV) pipeline designed b

The implementation code for "DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruction"

DAGAN This is the official implementation code for DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruct

Lip Reading - Cross Audio-Visual Recognition using 3D Convolutional Neural Networks

Lip Reading - Cross Audio-Visual Recognition using 3D Convolutional Neural Networks - Official Project Page This repository contains the code develope

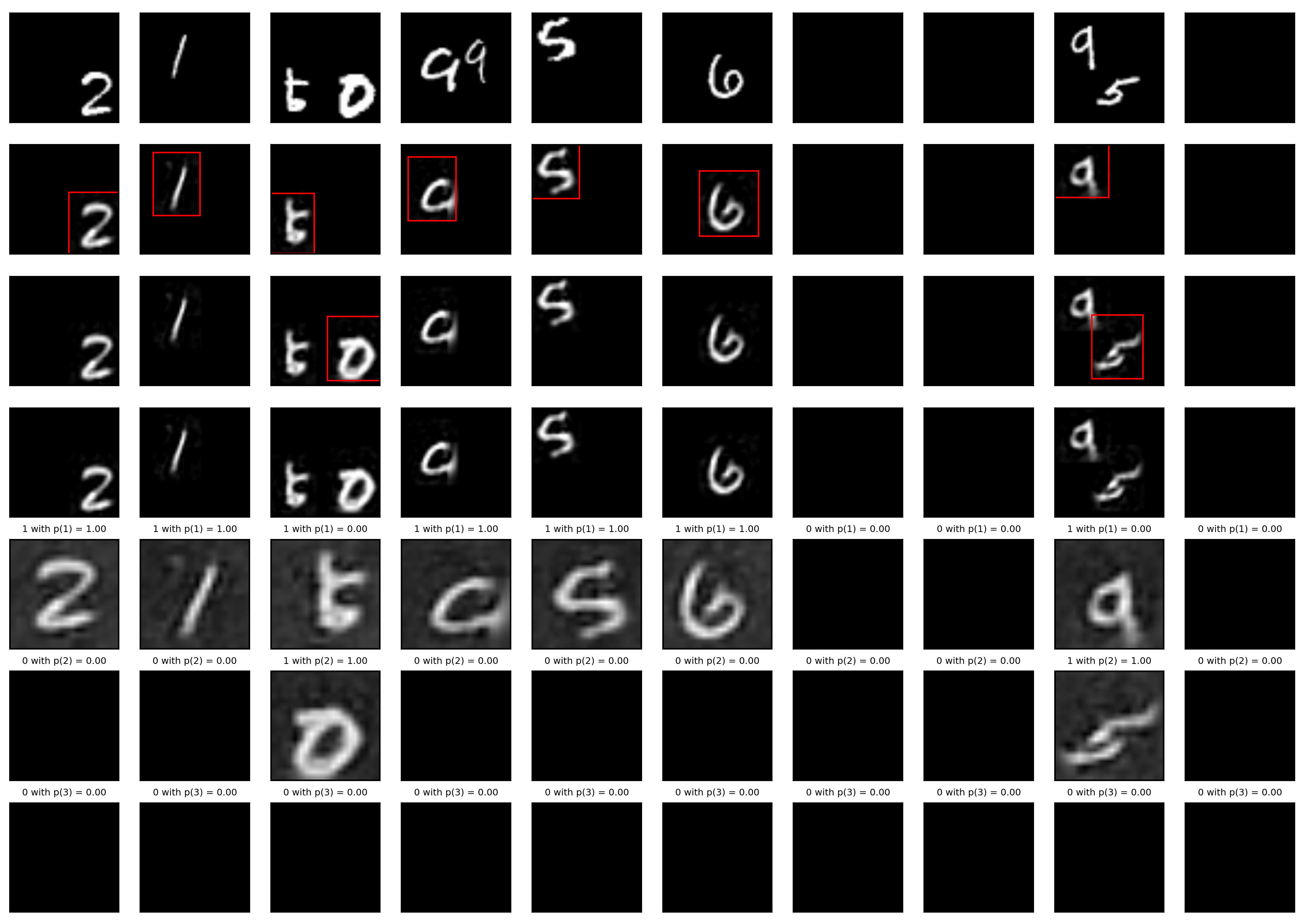

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

![[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation](https://user-images.githubusercontent.com/65257938/145016835-3c4be820-c55d-4eb4-b7f5-b8a012ee0f8c.png)

[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation

Reliable Propagation-Correction Modulation for Video Object Segmentation (AAAI22) Preview version paper of this work is available at: https://arxiv.or

PyTorch implementation of the paper Dynamic Token Normalization Improves Vision Transfromers.

Dynamic Token Normalization Improves Vision Transformers This is the PyTorch implementation of the paper Dynamic Token Normalization Improves Vision T

![[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation](https://user-images.githubusercontent.com/65257938/145016835-3c4be820-c55d-4eb4-b7f5-b8a012ee0f8c.png)

[AAAI22] Reliable Propagation-Correction Modulation for Video Object Segmentation

Reliable Propagation-Correction Modulation for Video Object Segmentation (AAAI22) Preview version paper of this work is available at: https://arxiv.or

Reproduction of Vision Transformer in Tensorflow2. Train from scratch and Finetune.

Vision Transformer(ViT) in Tensorflow2 Tensorflow2 implementation of the Vision Transformer(ViT). This repository is for An image is worth 16x16 words

Spiking Neural Network for Computer Vision using SpikingJelly framework and Pytorch-Lightning

Spiking Neural Network for Computer Vision using SpikingJelly framework and Pytorch-Lightning

Code repository for "Reducing Underflow in Mixed Precision Training by Gradient Scaling" presented at IJCAI '20

Reducing Underflow in Mixed Precision Training by Gradient Scaling This project implements the gradient scaling method to improve the performance of m

Official Pytorch implementation for 2021 ICCV paper "Learning Motion Priors for 4D Human Body Capture in 3D Scenes" and trained models / data

Learning Motion Priors for 4D Human Body Capture in 3D Scenes (LEMO) Official Pytorch implementation for 2021 ICCV (oral) paper "Learning Motion Prior

Single-Stage Instance Shadow Detection with Bidirectional Relation Learning (CVPR 2021 Oral)

Single-Stage Instance Shadow Detection with Bidirectional Relation Learning (CVPR 2021 Oral) Tianyu Wang*, Xiaowei Hu*, Chi-Wing Fu, and Pheng-Ann Hen

Code for ICLR 2020 paper "VL-BERT: Pre-training of Generic Visual-Linguistic Representations".

VL-BERT By Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, Jifeng Dai. This repository is an official implementation of the paper VL-BERT:

Vision-Language Pre-training for Image Captioning and Question Answering

VLP This repo hosts the source code for our AAAI2020 work Vision-Language Pre-training (VLP). We have released the pre-trained model on Conceptual Cap

Research code for ECCV 2020 paper "UNITER: UNiversal Image-TExt Representation Learning"

UNITER: UNiversal Image-TExt Representation Learning This is the official repository of UNITER (ECCV 2020). This repository currently supports finetun

Oscar and VinVL

Oscar: Object-Semantics Aligned Pre-training for Vision-and-Language Tasks VinVL: Revisiting Visual Representations in Vision-Language Models Updates

Research Code for NeurIPS 2020 Spotlight paper "Large-Scale Adversarial Training for Vision-and-Language Representation Learning": UNITER adversarial training part

VILLA: Vision-and-Language Adversarial Training This is the official repository of VILLA (NeurIPS 2020 Spotlight). This repository currently supports

A light weight data augmentation tool for training CNNs and Viola Jones detectors

hey-daug A light weight data augmentation tool for training CNNs and Viola Jones detectors (Haar Cascades). This tool inflates your data by up to six

Label Studio is a multi-type data labeling and annotation tool with standardized output format

Website • Docs • Twitter • Join Slack Community What is Label Studio? Label Studio is an open source data labeling tool. It lets you label data types

UnFlow: Unsupervised Learning of Optical Flow with a Bidirectional Census Loss

UnFlow: Unsupervised Learning of Optical Flow with a Bidirectional Census Loss This repository contains the TensorFlow implementation of the paper UnF

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

MapReader: A computer vision pipeline for the semantic exploration of maps at scale

MapReader A computer vision pipeline for the semantic exploration of maps at scale MapReader is an end-to-end computer vision (CV) pipeline designed b

A Simple Long-Tailed Rocognition Baseline via Vision-Language Model

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

Official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

Datasets, Transforms and Models specific to Computer Vision

vision Datasets, Transforms and Models specific to Computer Vision Installation First install the nightly version of OneFlow python3 -m pip install on

This is a collection of our NAS and Vision Transformer work.

This is a collection of our NAS and Vision Transformer work.

2021 National Underwater Robotics Vision Optics

2021-National-Underwater-Robotics-Vision-Optics 2021年全国水下机器人算法大赛-光学赛道-B榜精度第18名 (Kilian_Di的团队:A榜map@50:95 56.36 B榜map@50:95 56.7) 2021年全国水下机器人算法大赛-声学赛道

The official implementation for "FQ-ViT: Fully Quantized Vision Transformer without Retraining".

FQ-ViT [arXiv] This repo contains the official implementation of "FQ-ViT: Fully Quantized Vision Transformer without Retraining". Table of Contents In

This is a collection of our NAS and Vision Transformer work.

AutoML - Neural Architecture Search This is a collection of our AutoML-NAS work iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vi

This repository contains the source code of our work on designing efficient CNNs for computer vision

Efficient networks for Computer Vision This repo contains source code of our work on designing efficient networks for different computer vision tasks:

Code repository for the paper Computer Vision User Entity Behavior Analytics

Computer Vision User Entity Behavior Analytics Code repository for "Computer Vision User Entity Behavior Analytics" Code Description dataset.csv As di

Official Pytorch Code for the paper TransWeather

TransWeather Official Code for the paper TransWeather, Arxiv Tech Report 2021 Paper | Website About this repo: This repo hosts the implentation code,

Experimenting with computer vision techniques to generate annotated image datasets from gameplay recordings automatically.

Experimenting with computer vision techniques to generate annotated image datasets from gameplay recordings automatically. The collected data will then be used to train a deep neural network that can detect enemy player models in real time, during gameplay. Finally, a virtual input device will adjust the player's crosshair based on live detections for greater accuracy.

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

Monk is a low code Deep Learning tool and a unified wrapper for Computer Vision.

Monk - A computer vision toolkit for everyone Why use Monk Issue: Want to begin learning computer vision Solution: Start with Monk's hands-on study ro

We are building an open database of COVID-19 cases with chest X-ray or CT images.

🛑 Note: please do not claim diagnostic performance of a model without a clinical study! This is not a kaggle competition dataset. Please read this pa

HybVIO visual-inertial odometry and SLAM system

HybVIO A visual-inertial odometry system with an optional SLAM module. This is a research-oriented codebase, which has been published for the purposes

labelpix is a graphical image labeling interface for drawing bounding boxes

Welcome to labelpix 👋 labelpix is a graphical image labeling interface for drawing bounding boxes. 🏠 Homepage Install pip install -r requirements.tx

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

Official repository for the paper F, B, Alpha Matting

FBA Matting Official repository for the paper F, B, Alpha Matting. This paper and project is under heavy revision for peer reviewed publication, and s

The code for the NeurIPS 2021 paper "A Unified View of cGANs with and without Classifiers".

Energy-based Conditional Generative Adversarial Network (ECGAN) This is the code for the NeurIPS 2021 paper "A Unified View of cGANs with and without

Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation

TVT Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation Datasets: Digit: MNIST, SVHN, USPS Object: Office, Office-Home, Vi

Source code for CVPR 2020 paper "Learning to Forget for Meta-Learning"

L2F - Learning to Forget for Meta-Learning Sungyong Baik, Seokil Hong, Kyoung Mu Lee Source code for CVPR 2020 paper "Learning to Forget for Meta-Lear

🔥RandLA-Net in Tensorflow (CVPR 2020, Oral & IEEE TPAMI 2021)

RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds (CVPR 2020) This is the official implementation of RandLA-Net (CVPR2020, Oral

OpenMMLab Computer Vision Foundation

English | 简体中文 Introduction MMCV is a foundational library for computer vision research and supports many research projects as below: MMCV: OpenMMLab

A minimal solution to hand motion capture from a single color camera at over 100fps. Easy to use, plug to run.

Minimal Hand A minimal solution to hand motion capture from a single color camera at over 100fps. Easy to use, plug to run. This project provides the

YOLOv4 / Scaled-YOLOv4 / YOLO - Neural Networks for Object Detection (Windows and Linux version of Darknet )

Yolo v4, v3 and v2 for Windows and Linux (neural networks for object detection) Paper YOLO v4: https://arxiv.org/abs/2004.10934 Paper Scaled YOLO v4:

A hybrid SOTA solution of LiDAR panoptic segmentation with C++ implementations of point cloud clustering algorithms. ICCV21, Workshop on Traditional Computer Vision in the Age of Deep Learning

ICCVW21-TradiCV-Survey-of-LiDAR-Cluster Motivation In contrast to popular end-to-end deep learning LiDAR panoptic segmentation solutions, we propose a

A TensorFlow 2.x implementation of Masked Autoencoders Are Scalable Vision Learners

Masked Autoencoders Are Scalable Vision Learners A TensorFlow implementation of Masked Autoencoders Are Scalable Vision Learners [1]. Our implementati

Official PyTorch implementation of "Meta-Learning with Task-Adaptive Loss Function for Few-Shot Learning" (ICCV2021 Oral)

MeTAL - Meta-Learning with Task-Adaptive Loss Function for Few-Shot Learning (ICCV2021 Oral) Sungyong Baik, Janghoon Choi, Heewon Kim, Dohee Cho, Jaes