2415 Repositories

Python Image-Fusion-Transformer Libraries

本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。

【关于 NLP】那些你不知道的事 作者:杨夕、芙蕖、李玲、陈海顺、twilight、LeoLRH、JimmyDU、艾春辉、张永泰、金金金 介绍 本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。 目录架构 一、【

CLIP+FFT text-to-image

Aphantasia This is a text-to-image tool, part of the artwork of the same name. Based on CLIP model, with FFT parameterizer from Lucent library as a ge

Ready-to-use code and tutorial notebooks to boost your way into few-shot image classification.

Easy Few-Shot Learning Ready-to-use code and tutorial notebooks to boost your way into few-shot image classification. This repository is made for you

a morph transfer UGATIT for image translation.

Morph-UGATIT a morph transfer UGATIT for image translation. Introduction 中文技术文档 This is Pytorch implementation of UGATIT, paper "U-GAT-IT: Unsupervise

Code associated with the "Data Augmentation using Pre-trained Transformer Models" paper

Data Augmentation using Pre-trained Transformer Models Code associated with the Data Augmentation using Pre-trained Transformer Models paper Code cont

Fast image augmentation library and easy to use wrapper around other libraries. Documentation: https://albumentations.ai/docs/ Paper about library: https://www.mdpi.com/2078-2489/11/2/125

Albumentations Albumentations is a Python library for image augmentation. Image augmentation is used in deep learning and computer vision tasks to inc

Image augmentation library in Python for machine learning.

Augmentor is an image augmentation library in Python for machine learning. It aims to be a standalone library that is platform and framework independe

Image augmentation for machine learning experiments.

imgaug This python library helps you with augmenting images for your machine learning projects. It converts a set of input images into a new, much lar

Image processing in Python

scikit-image: Image processing in Python Website (including documentation): https://scikit-image.org/ Mailing list: https://mail.python.org/mailman3/l

Open Source Computer Vision Library

OpenCV: Open Source Computer Vision Library Resources Homepage: https://opencv.org Courses: https://opencv.org/courses Docs: https://docs.opencv.org/m

Gluon CV Toolkit

Gluon CV Toolkit | Installation | Documentation | Tutorials | GluonCV provides implementations of the state-of-the-art (SOTA) deep learning models in

ICRA 2021 "Towards Precise and Efficient Image Guided Depth Completion"

PENet: Precise and Efficient Depth Completion This repo is the PyTorch implementation of our paper to appear in ICRA2021 on "Towards Precise and Effic

com_media allowed paths that are not intended for image uploads to RCE

CVE-2021-23132 com_media allowed paths that are not intended for image uploads to RCE. CVE-2020-24597 Directory traversal in com_media to RCE Two CVEs

python library for invisible image watermark (blind image watermark)

invisible-watermark invisible-watermark is a python library and command line tool for creating invisible watermark over image.(aka. blink image waterm

Deep Illuminator is a data augmentation tool designed for image relighting.

Deep Illuminator Deep Illuminator is a data augmentation tool designed for image relighting. It can be used to easily and efficiently genera

GANsformer: Generative Adversarial Transformers Drew A

GANsformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick *I wish to thank Christopher D. Manning for the fruitf

Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image classification, in Pytorch

Transformer in Transformer Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image c

Demo programs for the Talking Head Anime from a Single Image 2: More Expressive project.

Demo Code for "Talking Head Anime from a Single Image 2: More Expressive" This repository contains demo programs for the Talking Head Anime

Official pytorch implementation of paper "Image-to-image Translation via Hierarchical Style Disentanglement".

HiSD: Image-to-image Translation via Hierarchical Style Disentanglement Official pytorch implementation of paper "Image-to-image Translation

An open source image editor which can manipulate an image in many ways!

Image Editor - An open source image editor which can manipulate an image in many ways! If you need any more modes in repo or I

Implementation of E(n)-Transformer, which extends the ideas of Welling's E(n)-Equivariant Graph Neural Network to attention

E(n)-Equivariant Transformer (wip) Implementation of E(n)-Equivariant Transformer, which extends the ideas from Welling's E(n)-Equivariant G

An open-source application for biological image analysis

CellProfiler is a free open-source software designed to enable biologists without training in computer vision or programming to quantitatively measure

StudioGAN is a Pytorch library providing implementations of representative Generative Adversarial Networks (GANs) for conditional/unconditional image generation.

StudioGAN is a Pytorch library providing implementations of representative Generative Adversarial Networks (GANs) for conditional/unconditional image generation.

The open-source core of Pinry, a tiling image board system for people who want to save, tag, and share images, videos and webpages in an easy to skim through format.

The open-source core of Pinry, a tiling image board system for people who want to save, tag, and share images, videos and webpages in an easy to skim

Official implementation of the paper Image Generators with Conditionally-Independent Pixel Synthesis https://arxiv.org/abs/2011.13775

CIPS -- Official Pytorch Implementation of the paper Image Generators with Conditionally-Independent Pixel Synthesis Requirements pip install -r requi

Pytorch Code for "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation"

Medical-Transformer Pytorch Code for the paper "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation" About this repo: This repo

CLOCs: Camera-LiDAR Object Candidates Fusion for 3D Object Detection

CLOCs is a novel Camera-LiDAR Object Candidates fusion network. It provides a low-complexity multi-modal fusion framework that improves the performance of single-modality detectors. CLOCs operates on the combined output candidates of any 3D and any 2D detector, and is trained to produce more accurate 3D and 2D detection results.

Implementation of SETR model, Original paper: Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers.

SETR - Pytorch Since the original paper (Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers.) has no official

Dynamic image server for web and print

Quru Image Server - dynamic imaging for web and print QIS is a high performance web server for creating and delivering dynamic images. It is ideal for

This is a new web-based photo management application. Run it on your home server and it will let you find the right photo from your collection on any device. Smart filtering is made possible by object recognition, location awareness, color analysis and other ML algorithms.

Photonix Photo Manager This is a photo management application based on web technologies. Run it on your home server and it will let you find what you

3D Reconstruction Software

Meshroom is a free, open-source 3D Reconstruction Software based on the AliceVision Photogrammetric Computer Vision framework. Learn more details abou

A multi-entity Transformer for multi-agent spatiotemporal modeling.

baller2vec This is the repository for the paper: Michael A. Alcorn and Anh Nguyen. baller2vec: A Multi-Entity Transformer For Multi-Agent Spatiotempor

This repo holds code for TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation

TransUNet This repo holds code for TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation Usage

Official PyTorch code for ClipBERT, an efficient framework for end-to-end learning on image-text and video-text tasks

Official PyTorch code for ClipBERT, an efficient framework for end-to-end learning on image-text and video-text tasks. It takes raw videos/images + text as inputs, and outputs task predictions. ClipBERT is designed based on 2D CNNs and transformers, and uses a sparse sampling strategy to enable efficient end-to-end video-and-language learning.

Hide secret texts inside an image, optionally encrypt them with a password using AES-256.

Hide secret texts/messages inside an image. You can optionally encrypt your texts with a password using AES-256 before encoding into the image.

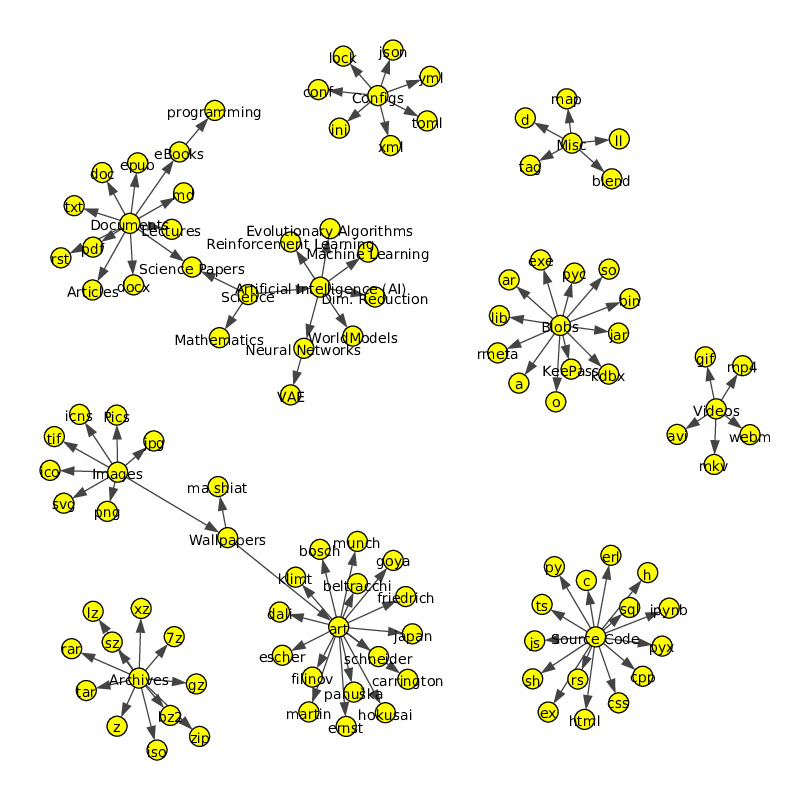

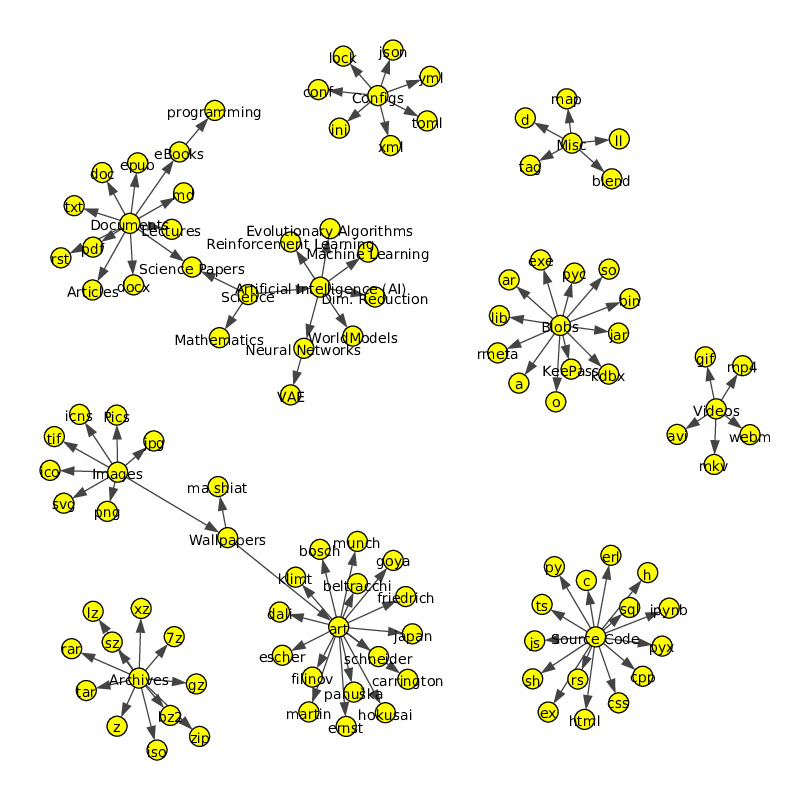

Knowledge Management for Humans using Machine Learning & Tags

HyperTag HyperTag helps humans intuitively express how they think about their files using tags and machine learning.

Implementation of self-attention mechanisms for general purpose. Focused on computer vision modules. Ongoing repository.

Self-attention building blocks for computer vision applications in PyTorch Implementation of self attention mechanisms for computer vision in PyTorch

一键翻译各类图片内文字

一键翻译各类图片内文字 针对群内、各个图站上大量不太可能会有人去翻译的图片设计,让我这种日语小白能够勉强看懂图片 主要支持日语,不过也能识别汉语和小写英文 支持简单的涂白和嵌字

Knowledge Management for Humans using Machine Learning & Tags

HyperTag helps humans intuitively express how they think about their files using tags and machine learning. Represent how you think using tags. Find what you look for using semantic search for your text documents (yes, even PDF's) and images.

Docker image with Uvicorn managed by Gunicorn for high-performance FastAPI web applications in Python 3.6 and above with performance auto-tuning. Optionally with Alpine Linux.

Supported tags and respective Dockerfile links python3.8, latest (Dockerfile) python3.7, (Dockerfile) python3.6 (Dockerfile) python3.8-slim (Dockerfil

Summarization, translation, sentiment-analysis, text-generation and more at blazing speed using a T5 version implemented in ONNX.

Summarization, translation, Q&A, text generation and more at blazing speed using a T5 version implemented in ONNX. This package is still in alpha stag

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Code for the paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer"

T5: Text-To-Text Transfer Transformer The t5 library serves primarily as code for reproducing the experiments in Exploring the Limits of Transfer Lear

An easier way to build neural search on the cloud

An easier way to build neural search on the cloud Jina is a deep learning-powered search framework for building cross-/multi-modal search systems (e.g

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

Pyroomacoustics is a package for audio signal processing for indoor applications. It was developed as a fast prototyping platform for beamforming algorithms in indoor scenarios.

Summary Pyroomacoustics is a software package aimed at the rapid development and testing of audio array processing algorithms. The content of the pack

TransGAN: Two Transformers Can Make One Strong GAN

[Preprint] "TransGAN: Two Transformers Can Make One Strong GAN", Yifan Jiang, Shiyu Chang, Zhangyang Wang

Official implementation of the ICLR 2021 paper

You Only Need Adversarial Supervision for Semantic Image Synthesis Official PyTorch implementation of the ICLR 2021 paper "You Only Need Adversarial S

Implementation of TabTransformer, attention network for tabular data, in Pytorch

Tab Transformer Implementation of Tab Transformer, attention network for tabular data, in Pytorch. This simple architecture came within a hair's bread

Exploring Cross-Image Pixel Contrast for Semantic Segmentation

Exploring Cross-Image Pixel Contrast for Semantic Segmentation Exploring Cross-Image Pixel Contrast for Semantic Segmentation, Wenguan Wang, Tianfei Z

A vision library for performing sliced inference on large images/small objects

SAHI: Slicing Aided Hyper Inference A vision library for performing sliced inference on large images/small objects Overview Object detection and insta

Authors implementation of LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant self-at

This is a Pytorch implementation of the paper: Self-Supervised Graph Transformer on Large-Scale Molecular Data.

This is a Pytorch implementation of the paper: Self-Supervised Graph Transformer on Large-Scale Molecular Data.

NFNets and Adaptive Gradient Clipping for SGD implemented in PyTorch

PyTorch implementation of Normalizer-Free Networks and SGD - Adaptive Gradient Clipping Paper: https://arxiv.org/abs/2102.06171.pdf Original code: htt

Python implementation of Wu et al (2018)'s registration fusion

reg-fusion Projection of a central sulcus probability map using the RF-ANTs approach (right hemisphere shown). This is a Python implementation of Wu e

Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series Forecasting.

Non-AR Spatial-Temporal Transformer Introduction Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series For

Multi-Stage Progressive Image Restoration

Multi-Stage Progressive Image Restoration Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan, Ming-Hsuan Yang, and Ling Sh

Summarization, translation, sentiment-analysis, text-generation and more at blazing speed using a T5 version implemented in ONNX.

Summarization, translation, Q&A, text generation and more at blazing speed using a T5 version implemented in ONNX. This package is still in alpha stag

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Code for the paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer"

T5: Text-To-Text Transfer Transformer The t5 library serves primarily as code for reproducing the experiments in Exploring the Limits of Transfer Lear

An easier way to build neural search on the cloud

An easier way to build neural search on the cloud Jina is a deep learning-powered search framework for building cross-/multi-modal search systems (e.g

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

Automated image processing for Django. Currently v4.0

ImageKit is a Django app for processing images. Need a thumbnail? A black-and-white version of a user-uploaded image? ImageKit will make them for you.

Python Script to download hundreds of images from 'Google Images'. It is a ready-to-run code!

Google Images Download Python Script for 'searching' and 'downloading' hundreds of Google images to the local hard disk! Documentation Documentation H

Command-line program to download image galleries and collections from several image hosting sites

gallery-dl gallery-dl is a command-line program to download image galleries and collections from several image hosting sites (see Supported Sites). It

NLP Core Library and Model Zoo based on PaddlePaddle 2.0

PaddleNLP 2.0拥有丰富的模型库、简洁易用的API与高性能的分布式训练的能力,旨在为飞桨开发者提升文本建模效率,并提供基于PaddlePaddle 2.0的NLP领域最佳实践。

Implementation of Feedback Transformer in Pytorch

Feedback Transformer - Pytorch Simple implementation of Feedback Transformer in Pytorch. They improve on Transformer-XL by having each token have acce

PyTorch code for the paper: FeatMatch: Feature-Based Augmentation for Semi-Supervised Learning

FeatMatch: Feature-Based Augmentation for Semi-Supervised Learning This is the PyTorch implementation of our paper: FeatMatch: Feature-Based Augmentat

Transformer-based Text Auto-encoder (T-TA) using TensorFlow 2.

T-TA (Transformer-based Text Auto-encoder) This repository contains codes for Transformer-based Text Auto-encoder (T-TA, paper: Fast and Accurate Deep

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

DALL-E in Pytorch Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch. It will also contain CLIP for ranking the ge

DeiT: Data-efficient Image Transformers

DeiT: Data-efficient Image Transformers This repository contains PyTorch evaluation code, training code and pretrained models for DeiT (Data-Efficient

Learning Continuous Image Representation with Local Implicit Image Function

LIIF This repository contains the official implementation for LIIF introduced in the following paper: Learning Continuous Image Representation with Lo

Implementation of Bottleneck Transformer in Pytorch

Bottleneck Transformer - Pytorch Implementation of Bottleneck Transformer, SotA visual recognition model with convolution + attention that outperforms

Jittor implementation of PCT:Point Cloud Transformer

PCT: Point Cloud Transformer This is a Jittor implementation of PCT: Point Cloud Transformer.

Trankit is a Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing

Trankit: A Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing Trankit is a light-weight Transformer-based Pyth

Multiple-Object Tracking with Transformer

TransTrack: Multiple-Object Tracking with Transformer Introduction TransTrack: Multiple-Object Tracking with Transformer Models Training data Training

Image morphing without reference points by applying warp maps and optimizing over them.

Differentiable Morphing Image morphing without reference points by applying warp maps and optimizing over them. Differentiable Morphing is machine lea

Open-AI's DALL-E for large scale training in mesh-tensorflow.

DALL-E in Mesh-Tensorflow [WIP] Open-AI's DALL-E in Mesh-Tensorflow. If this is similarly efficient to GPT-Neo, this repo should be able to train mode

Chinese NewsTitle Generation Project by GPT2.带有超级详细注释的中文GPT2新闻标题生成项目。

GPT2-NewsTitle 带有超详细注释的GPT2新闻标题生成项目 UpDate 01.02.2021 从网上收集数据,将清华新闻数据、搜狗新闻数据等新闻数据集,以及开源的一些摘要数据进行整理清洗,构建一个较完善的中文摘要数据集。 数据集清洗时,仅进行了简单地规则清洗。

Simple command line tool for text to image generation using OpenAI's CLIP and Siren (Implicit neural representation network)

Deep Daze mist over green hills shattered plates on the grass cosmic love and attention a time traveler in the crowd life during the plague meditative

A simple command line tool for text to image generation, using OpenAI's CLIP and a BigGAN

artificial intelligence cosmic love and attention fire in the sky a pyramid made of ice a lonely house in the woods marriage in the mountains lantern

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting This is the origin Pytorch implementation of Informer in the followin

Implementation of the Point Transformer layer, in Pytorch

Point Transformer - Pytorch Implementation of the Point Transformer self-attention layer, in Pytorch. The simple circuit above seemed to have allowed

Python script to generate a visualization of various sorting algorithms, image or video.

sorting_algo_visualizer Python script to generate a visualization of various sorting algorithms, image or video.

Graph Transformer Architecture. Source code for

Graph Transformer Architecture Source code for the paper "A Generalization of Transformer Networks to Graphs" by Vijay Prakash Dwivedi and Xavier Bres

Big Bird: Transformers for Longer Sequences

BigBird, is a sparse-attention based transformer which extends Transformer based models, such as BERT to much longer sequences. Moreover, BigBird comes along with a theoretical understanding of the capabilities of a complete transformer that the sparse model can handle.

Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

Segmentation Transformer Implementation of Segmentation Transformer in PyTorch, a new model to achieve SOTA in semantic segmentation while using trans

Bottleneck Transformers for Visual Recognition

Bottleneck Transformers for Visual Recognition Experiments Model Params (M) Acc (%) ResNet50 baseline (ref) 23.5M 93.62 BoTNet-50 18.8M 95.11% BoTNet-

Explainability for Vision Transformers (in PyTorch)

Explainability for Vision Transformers (in PyTorch) This repository implements methods for explainability in Vision Transformers

Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch.

SE3 Transformer - Pytorch Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch. May be needed for replicating Alphafold2 resu

Implementation of Lie Transformer, Equivariant Self-Attention, in Pytorch

Lie Transformer - Pytorch (wip) Implementation of Lie Transformer, Equivariant Self-Attention, in Pytorch. Only the SE3 version will be present in thi

Pytorch implementation of PCT: Point Cloud Transformer

PCT: Point Cloud Transformer This is a Pytorch implementation of PCT: Point Cloud Transformer.

PyTorch implementation of "A Full-Band and Sub-Band Fusion Model for Real-Time Single-Channel Speech Enhancement."

FullSubNet This Git repository for the official PyTorch implementation of "A Full-Band and Sub-Band Fusion Model for Real-Time Single-Channel Speech E

A framework for joint super-resolution and image synthesis, without requiring real training data

SynthSR This repository contains code to train a Convolutional Neural Network (CNN) for Super-resolution (SR), or joint SR and data synthesis. The met

PyTorch implementation of "Conformer: Convolution-augmented Transformer for Speech Recognition" (INTERSPEECH 2020)

PyTorch implementation of Conformer: Convolution-augmented Transformer for Speech Recognition. Transformer models are good at capturing content-based

Graph neural network message passing reframed as a Transformer with local attention

Adjacent Attention Network An implementation of a simple transformer that is equivalent to graph neural network where the message passing is done with

Accelerated deep learning R&D

Accelerated deep learning R&D PyTorch framework for Deep Learning research and development. It focuses on reproducibility, rapid experimentation, and

Toolbox of models, callbacks, and datasets for AI/ML researchers.

Pretrained SOTA Deep Learning models, callbacks and more for research and production with PyTorch Lightning and PyTorch Website • Installation • Main

Image-to-Image Translation with Conditional Adversarial Networks (Pix2pix) implementation in keras

pix2pix-keras Pix2pix implementation in keras. Original paper: Image-to-Image Translation with Conditional Adversarial Networks (pix2pix) Paper Author

Lightweight, Python library for fast and reproducible experimentation :microscope:

Steppy What is Steppy? Steppy is a lightweight, open-source, Python 3 library for fast and reproducible experimentation. Steppy lets data scientist fo