972 Repositories

Python Physics-Aware-Training Libraries

An open source Python package for plasma science that is under development

PlasmaPy PlasmaPy is an open source, community-developed Python 3.7+ package for plasma science. PlasmaPy intends to be for plasma science what Astrop

PyCharge is an open-source computational electrodynamics Python simulator

PyCharge PyCharge is an open-source computational electrodynamics Python simulator that can calculate the electromagnetic fields and potentials genera

🔀 Visual Room Rearrangement

AI2-THOR Rearrangement Challenge Welcome to the 2021 AI2-THOR Rearrangement Challenge hosted at the CVPR'21 Embodied-AI Workshop. The goal of this cha

General purpose Slater-Koster tight-binding code for electronic structure calculations

tight-binder Introduction General purpose tight-binding code for electronic structure calculations based on the Slater-Koster approximation. The code

Reproduce results and replicate training fo T0 (Multitask Prompted Training Enables Zero-Shot Task Generalization)

T-Zero This repository serves primarily as codebase and instructions for training, evaluation and inference of T0. T0 is the model developed in Multit

Align and Prompt: Video-and-Language Pre-training with Entity Prompts

ALPRO Align and Prompt: Video-and-Language Pre-training with Entity Prompts [Paper] Dongxu Li, Junnan Li, Hongdong Li, Juan Carlos Niebles, Steven C.H

Official Pytorch Implementation of 3DV2021 paper: SAFA: Structure Aware Face Animation.

SAFA: Structure Aware Face Animation (3DV2021) Official Pytorch Implementation of 3DV2021 paper: SAFA: Structure Aware Face Animation. Getting Started

Pre-Training Graph Neural Networks for Cold-Start Users and Items Representation.

Pretrain-Recsys This is our Tensorflow implementation for our WSDM 2021 paper: Bowen Hao, Jing Zhang, Hongzhi Yin, Cuiping Li, Hong Chen. Pre-Training

Pre-training of Graph Augmented Transformers for Medication Recommendation

G-Bert Pre-training of Graph Augmented Transformers for Medication Recommendation Intro G-Bert combined the power of Graph Neural Networks and BERT (B

Code for KDD'20 "Generative Pre-Training of Graph Neural Networks"

GPT-GNN: Generative Pre-Training of Graph Neural Networks GPT-GNN is a pre-training framework to initialize GNNs by generative pre-training. It can be

code for "Self-supervised edge features for improved Graph Neural Network training", arxivlink

Self-supervised edge features for improved Graph Neural Network training Data availability: Here is a link to the raw data for the organoids dataset.

![[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages](https://github.com/michiyasunaga/DrRepair/raw/master/DrRepair-overview.png)

[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages

DrRepair: Learning to Repair Programs from Error Messages This repo provides the source code & data of our paper: Graph-based, Self-Supervised Program

Autoregressive Predictive Coding: An unsupervised autoregressive model for speech representation learning

Autoregressive Predictive Coding This repository contains the official implementation (in PyTorch) of Autoregressive Predictive Coding (APC) proposed

Code and training data for our ECCV 2016 paper on Unsupervised Learning

Shuffle and Learn (Shuffle Tuple) Created by Ishan Misra Based on the ECCV 2016 Paper - "Shuffle and Learn: Unsupervised Learning using Temporal Order

![[NeurIPS'20] Self-supervised Co-Training for Video Representation Learning. Tengda Han, Weidi Xie, Andrew Zisserman.](https://github.com/TengdaHan/CoCLR/raw/main/asset/teaser.png)

[NeurIPS'20] Self-supervised Co-Training for Video Representation Learning. Tengda Han, Weidi Xie, Andrew Zisserman.

CoCLR: Self-supervised Co-Training for Video Representation Learning This repository contains the implementation of: InfoNCE (MoCo on videos) UberNCE

Geometry-Aware Learning of Maps for Camera Localization (CVPR2018)

Geometry-Aware Learning of Maps for Camera Localization This is the PyTorch implementation of our CVPR 2018 paper "Geometry-Aware Learning of Maps for

PyTorch code for training MM-DistillNet for multimodal knowledge distillation

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge MM-DistillNet is a

AdaFocus V2: End-to-End Training of Spatial Dynamic Networks for Video Recognition

AdaFocusV2 This repo contains the official code and pre-trained models for AdaFo

Deep Learning Training Scripts With Python

Deep Learning Training Scripts DNN Frameworks Caffe PyTorch Tensorflow CNN Models VGG ResNet DenseNet Inception Language Modeling GatedCNN-LM Attentio

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size.

Hub is a dataset format with a simple API for creating, storing, and collaborating on AI datasets of any size. The hub data layout enables rapid transformations and streaming of data while training models at scale. Hub is used by Google, Waymo, Red Cross, Oxford University, and Omdena.

Characterizing possible failure modes in physics-informed neural networks.

Characterizing possible failure modes in physics-informed neural networks This repository contains the PyTorch source code for the experiments in the

Open-source library for analyzing the results produced by ABINIT

Package Continuous Integration Documentation About AbiPy is a python library to analyze the results produced by Abinit, an open-source program for the

Code to use Augmented Shapiro Wilks Stopping, as well as code for the paper "Statistically Signifigant Stopping of Neural Network Training"

This codebase is being actively maintained, please create and issue if you have issues using it Basics All data files are included under losses and ea

PyTorch implementation of Rethinking Positional Encoding in Language Pre-training

TUPE PyTorch implementation of Rethinking Positional Encoding in Language Pre-training. Quickstart Clone this repository. git clone https://github.com

Code release for SLIP Self-supervision meets Language-Image Pre-training

SLIP: Self-supervision meets Language-Image Pre-training What you can find in this repo: Pre-trained models (with ViT-Small, Base, Large) and code to

VolumeGAN - 3D-aware Image Synthesis via Learning Structural and Textural Representations

VolumeGAN - 3D-aware Image Synthesis via Learning Structural and Textural Representations 3D-aware Image Synthesis via Learning Structural and Textura

NumPy aware dynamic Python compiler using LLVM

Numba A Just-In-Time Compiler for Numerical Functions in Python Numba is an open source, NumPy-aware optimizing compiler for Python sponsored by Anaco

Turn any live video stream or locally stored video into a dataset of interesting samples for ML training, or any other type of analysis.

Sieve Video Data Collection Example Find samples that are interesting within hours of raw video, for free and completely automatically using Sieve API

Implementation of our paper "DMT: Dynamic Mutual Training for Semi-Supervised Learning"

DMT: Dynamic Mutual Training for Semi-Supervised Learning This repository contains the code for our paper DMT: Dynamic Mutual Training for Semi-Superv

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

Introduction This is a Python package available on PyPI for NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pyto

ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

ONNX Runtime is a cross-platform inference and training machine-learning accelerator. ONNX Runtime inference can enable faster customer experiences an

Python SDK for building, training, and deploying ML models

Overview of Kubeflow Fairing Kubeflow Fairing is a Python package that streamlines the process of building, training, and deploying machine learning (

Official code repository for ICCV 2021 paper: Gravity-Aware Monocular 3D Human Object Reconstruction

GraviCap Official code repository for ICCV 2021 paper: Gravity-Aware Monocular 3D Human Object Reconstruction. Gravity-Aware Monocular 3D Human-Object

Machine learning algorithms for many-body quantum systems

NetKet NetKet is an open-source project delivering cutting-edge methods for the study of many-body quantum systems with artificial neural networks and

Integrated physics-based and ligand-based modeling.

ComBind ComBind integrates data-driven modeling and physics-based docking for improved binding pose prediction and binding affinity prediction. Given

Simulate & classify transient absorption spectroscopy (TAS) spectral features for bulk semiconducting materials (Post-DFT)

PyTASER PyTASER is a Python (3.9+) library and set of command-line tools for classifying spectral features in bulk materials, post-DFT. The goal of th

Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021).

STAR-pytorch Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021). CVF (pdf) STAR-DC

Dynamics-aware Adversarial Attack of 3D Sparse Convolution Network

Leaded Gradient Method (LGM) This repository contains the PyTorch implementation for paper Dynamics-aware Adversarial Attack of 3D Sparse Convolution

Training deep models using anime, illustration images.

animeface deep models for anime images. Datasets anime-face-dataset Anime faces collected from Getchu.com. Based on Mckinsey666's dataset. 63.6K image

learned_optimization: Training and evaluating learned optimizers in JAX

learned_optimization: Training and evaluating learned optimizers in JAX learned_optimization is a research codebase for training learned optimizers. I

A full pipeline AutoML tool for tabular data

HyperGBM Doc | 中文 We Are Hiring! Dear folks,we are offering challenging opportunities located in Beijing for both professionals and students who are k

Official implementation of "Articulation Aware Canonical Surface Mapping"

Articulation-Aware Canonical Surface Mapping Nilesh Kulkarni, Abhinav Gupta, David F. Fouhey, Shubham Tulsiani Paper Project Page Requirements Python

Official Pytorch implementation of Scene Representation Networks: Continuous 3D-Structure-Aware Neural Scene Representations

Scene Representation Networks This is the official implementation of the NeurIPS submission "Scene Representation Networks: Continuous 3D-Structure-Aw

Training PyTorch models with differential privacy

Opacus is a library that enables training PyTorch models with differential privacy. It supports training with minimal code changes required on the cli

Computational Methods Course at UdeA. Forked and size reduced from:

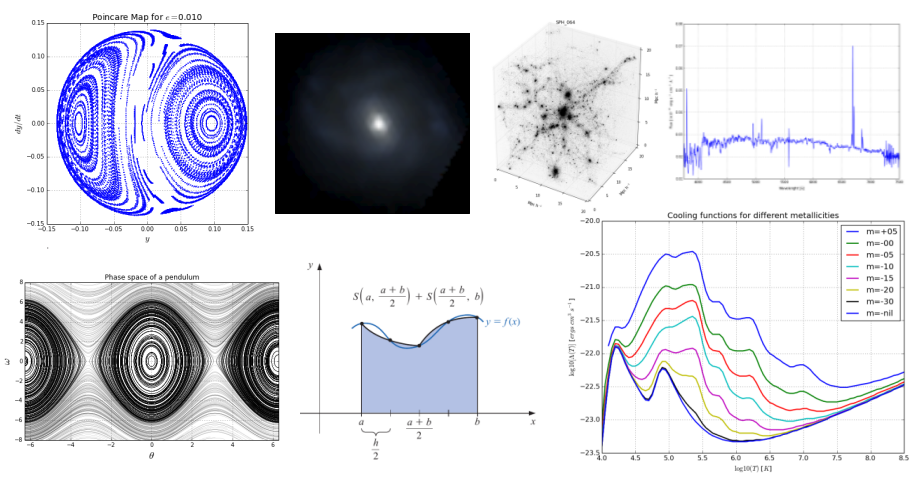

Computational Methods for Physics & Astronomy Book version at: https://restrepo.github.io/ComputationalMethods by: Sebastian Bustamante 2014/2015 Dieg

Codes for building and training the neural network model described in Domain-informed neural networks for interaction localization within astroparticle experiments.

Domain-informed Neural Networks Codes for building and training the neural network model described in Domain-informed neural networks for interaction

On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021))

PTvsBT On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021) Citation Please cite a

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t

Code for the ICCV'21 paper "Context-aware Scene Graph Generation with Seq2Seq Transformers"

ICCV'21 Context-aware Scene Graph Generation with Seq2Seq Transformers Authors: Yichao Lu*, Himanshu Rai*, Cheng Chang*, Boris Knyazev†, Guangwei Yu,

Fine-grained Post-training for Improving Retrieval-based Dialogue Systems - NAACL 2021

Fine-grained Post-training for Multi-turn Response Selection Implements the model described in the following paper Fine-grained Post-training for Impr

Training and Evaluation Code for Neural Volumes

Neural Volumes This repository contains training and evaluation code for the paper Neural Volumes. The method learns a 3D volumetric representation of

MakeItTalk: Speaker-Aware Talking-Head Animation

MakeItTalk: Speaker-Aware Talking-Head Animation This is the code repository implementing the paper: MakeItTalk: Speaker-Aware Talking-Head Animation

Ensembling Off-the-shelf Models for GAN Training

Data-Efficient GANs with DiffAugment project | paper | datasets | video | slides Generated using only 100 images of Obama, grumpy cats, pandas, the Br

The official repository for ROOT: analyzing, storing and visualizing big data, scientifically

About The ROOT system provides a set of OO frameworks with all the functionality needed to handle and analyze large amounts of data in a very efficien

Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition

SEW (Squeezed and Efficient Wav2vec) The repo contains the code of the paper "Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speec

Code for CodeT5: a new code-aware pre-trained encoder-decoder model.

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation This is the official PyTorch implementation

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite

Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models

PEGASUS library Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models, or PEGASUS, uses self-supervised

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Hiring We are hiring at all levels (including FTE researchers and interns)! If you are interested in working with us on NLP and large-scale pre-traine

Unsupervised Language Model Pre-training for French

FlauBERT and FLUE FlauBERT is a French BERT trained on a very large and heterogeneous French corpus. Models of different sizes are trained using the n

Code for EMNLP20 paper: "ProphetNet: Predicting Future N-gram for Sequence-to-Sequence Pre-training"

ProphetNet-X This repo provides the code for reproducing the experiments in ProphetNet. In the paper, we propose a new pre-trained language model call

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

ELECTRA Introduction ELECTRA is a method for self-supervised language representation learning. It can be used to pre-train transformer networks using

A Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Training Data》

RangeLoss Pytorch This is a Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Trai

Deep learning for Engineers - Physics Informed Deep Learning

SciANN: Neural Networks for Scientific Computations SciANN is a Keras wrapper for scientific computations and physics-informed deep learning. New to S

Efficient and Scalable Physics-Informed Deep Learning and Scientific Machine Learning on top of Tensorflow for multi-worker distributed computing

Notice: Support for Python 3.6 will be dropped in v.0.2.1, please plan accordingly! Efficient and Scalable Physics-Informed Deep Learning Collocation-

Physics-Informed Neural Networks (PINN) and Deep BSDE Solvers of Differential Equations for Scientific Machine Learning (SciML) accelerated simulation

NeuralPDE NeuralPDE.jl is a solver package which consists of neural network solvers for partial differential equations using scientific machine learni

Sparse Physics-based and Interpretable Neural Networks

Sparse Physics-based and Interpretable Neural Networks for PDEs This repository contains the code and manuscript for research done on Sparse Physics-b

The implementation of Learning Instance and Task-Aware Dynamic Kernels for Few Shot Learning

INSTA: Learning Instance and Task-Aware Dynamic Kernels for Few Shot Learning This repository provides the implementation and demo of Learning Instanc

Official implementation for Scale-Aware Neural Architecture Search for Multivariate Time Series Forecasting

1 SNAS4MTF This repo is the official implementation for Scale-Aware Neural Architecture Search for Multivariate Time Series Forecasting. 1.1 The frame

Cognition-aware Cognate Detection

Cognition-aware Cognate Detection The repository which contains our code for our EACL 2021 paper titled, "Cognition-aware Cognate Detection". This wor

Using CNN to mimic the driver based on training data from Torcs

Behavioural-Cloning-in-autonomous-driving Using CNN to mimic the driver based on training data from Torcs. Approach First, the data was collected from

Galactic and gravitational dynamics in Python

Gala is a Python package for Galactic and gravitational dynamics. Documentation The documentation for Gala is hosted on Read the docs. Installation an

A simple program for training and testing vit

Vit This is a simple program for training and testing vit. Key requirements: torch, torchvision and timm. Dataset I put 5 categories of the cub classi

PantheonRL is a package for training and testing multi-agent reinforcement learning environments.

PantheonRL is a package for training and testing multi-agent reinforcement learning environments. PantheonRL supports cross-play, fine-tuning, ad-hoc coordination, and more.

A high-performance distributed deep learning system targeting large-scale and automated distributed training.

HETU Documentation | Examples Hetu is a high-performance distributed deep learning system targeting trillions of parameters DL model training, develop

The source code of the paper "SHGNN: Structure-Aware Heterogeneous Graph Neural Network"

SHGNN: Structure-Aware Heterogeneous Graph Neural Network The source code and dataset of the paper: SHGNN: Structure-Aware Heterogeneous Graph Neural

GebPy is a Python-based, open source tool for the generation of geological data of minerals, rocks and complete lithological sequences.

GebPy is a Python-based, open source tool for the generation of geological data of minerals, rocks and complete lithological sequences. The data can be generated randomly or with respect to user-defined constraints, for example a specific element concentration within minerals and rocks or the order of units within a complete lithological profile.

A python interface for training Reinforcement Learning bots to battle on pokemon showdown

The pokemon showdown Python environment A Python interface to create battling pokemon agents. poke-env offers an easy-to-use interface for creating ru

PyGAD, a Python 3 library for building the genetic algorithm and training machine learning algorithms (Keras & PyTorch).

PyGAD: Genetic Algorithm in Python PyGAD is an open-source easy-to-use Python 3 library for building the genetic algorithm and optimizing machine lear

Codes for AAAI 2022 paper: Context-aware Health Event Prediction via Transition Functions on Dynamic Disease Graphs

Context-Aware-Healthcare Codes for AAAI 2022 paper: Context-aware Health Event Prediction via Transition Functions on Dynamic Disease Graphs Download

Thread-safe asyncio-aware queue for Python

Mixed sync-async queue, supposed to be used for communicating between classic synchronous (threaded) code and asynchronous

Atari2600 Training / Evaluation with RLlib

Training Atari2600 by Reinforcement Learning Train Atari2600 and check how it works! How to Setup You can setup packages on your local env. $ make set

A lightweight library designed to accelerate the process of training PyTorch models by providing a minimal

A lightweight library designed to accelerate the process of training PyTorch models by providing a minimal, but extensible training loop which is flexible enough to handle the majority of use cases, and capable of utilizing different hardware options with no code changes required.

A system for quickly generating training data with weak supervision

Programmatically Build and Manage Training Data Announcement The Snorkel team is now focusing their efforts on Snorkel Flow, an end-to-end AI applicat

Larch: Applications and Python Library for Data Analysis of X-ray Absorption Spectroscopy (XAS, XANES, XAFS, EXAFS), X-ray Fluorescence (XRF) Spectroscopy and Imaging

Larch: Data Analysis Tools for X-ray Spectroscopy and More Documentation: http://xraypy.github.io/xraylarch Code: http://github.com/xraypy/xraylarch L

Null safe support for Python

Null Safe Python Null safe support for Python. Installation pip install nullsafe Quick Start Dummy Class class Dummy: pass Normal Python code: o =

The Pytorch implementation for "Video-Text Pre-training with Learned Regions"

Region_Learner The Pytorch implementation for "Video-Text Pre-training with Learned Regions" (arxiv) We are still cleaning up the code further and pre

Code for ShadeGAN (NeurIPS2021) A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis

A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis Project Page | Paper A Shading-Guided Generative Implicit Model

The codebase for our paper "Generative Occupancy Fields for 3D Surface-Aware Image Synthesis" (NeurIPS 2021)

Generative Occupancy Fields for 3D Surface-Aware Image Synthesis (NeurIPS 2021) Project Page | Paper Xudong Xu, Xingang Pan, Dahua Lin and Bo Dai GOF

Official code for "Maximum Likelihood Training of Score-Based Diffusion Models", NeurIPS 2021 (spotlight)

Maximum Likelihood Training of Score-Based Diffusion Models This repo contains the official implementation for the paper Maximum Likelihood Training o

rastrainer is a QGIS plugin to training remote sensing semantic segmentation model based on PaddlePaddle.

rastrainer rastrainer is a QGIS plugin to training remote sensing semantic segmentation model based on PaddlePaddle. UI TODO Init UI. Add Block. Add l

We tried to recreate this classic game using python physics libraries.

We tried to recreate this classic game using python physics libraries. The result is certainly hilarious but enjoyable. One of my very first physics application.

Unimodal Face Classification with Multimodal Training

Unimodal Face Classification with Multimodal Training This is a PyTorch implementation of the following paper: Unimodal Face Classification with Multi

Official Implementation of SimIPU: Simple 2D Image and 3D Point Cloud Unsupervised Pre-Training for Spatial-Aware Visual Representations

Official Implementation of SimIPU SimIPU: Simple 2D Image and 3D Point Cloud Unsupervised Pre-Training for Spatial-Aware Visual Representations Since

Simple Tensorflow implementation of "Adaptive Convolutions for Structure-Aware Style Transfer" (CVPR 2021)

AdaConv — Simple TensorFlow Implementation [Paper] : Adaptive Convolutions for Structure-Aware Style Transfer (CVPR 2021) Note This repository does no

VarCLR: Variable Semantic Representation Pre-training via Contrastive Learning

VarCLR: Variable Representation Pre-training via Contrastive Learning New: Paper accepted by ICSE 2022. Preprint at arXiv! This repository contain

iBOT: Image BERT Pre-Training with Online Tokenizer

Image BERT Pre-Training with iBOT Official PyTorch implementation and pretrained models for paper iBOT: Image BERT Pre-Training with Online Tokenizer.

Code for CVPR2019 paper《Unequal Training for Deep Face Recognition with Long Tailed Noisy Data》

Unequal-Training-for-Deep-Face-Recognition-with-Long-Tailed-Noisy-Data. This is the code of CVPR 2019 paper《Unequal Training for Deep Face Recognition

[NeurIPS 2019] Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss

Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, Tengyu Ma This is the offi

![[ICLR 2021 Spotlight] Pytorch implementation for](https://github.com/frank-xwang/RIDE-LongTailRecognition/raw/main/title-img.png)

[ICLR 2021 Spotlight] Pytorch implementation for "Long-tailed Recognition by Routing Diverse Distribution-Aware Experts."

RIDE: Long-tailed Recognition by Routing Diverse Distribution-Aware Experts. by Xudong Wang, Long Lian, Zhongqi Miao, Ziwei Liu and Stella X. Yu at UC