972 Repositories

Python Physics-Aware-Training Libraries

PyTorch implementation of "Representing Shape Collections with Alignment-Aware Linear Models" paper.

deep-linear-shapes PyTorch implementation of "Representing Shape Collections with Alignment-Aware Linear Models" paper. If you find this code useful i

Generate text line images for training deep learning OCR model (e.g. CRNN)

Generate text line images for training deep learning OCR model (e.g. CRNN)

Demonstration of the Model Training as a CI/CD System in Vertex AI

Model Training as a CI/CD System This project demonstrates the machine model training as a CI/CD system in GCP platform. You will see more detailed wo

Codes for CIKM'21 paper 'Self-Supervised Graph Co-Training for Session-based Recommendation'.

COTREC Codes for CIKM'21 paper 'Self-Supervised Graph Co-Training for Session-based Recommendation'. Requirements: Python 3.7, Pytorch 1.6.0 Best Hype

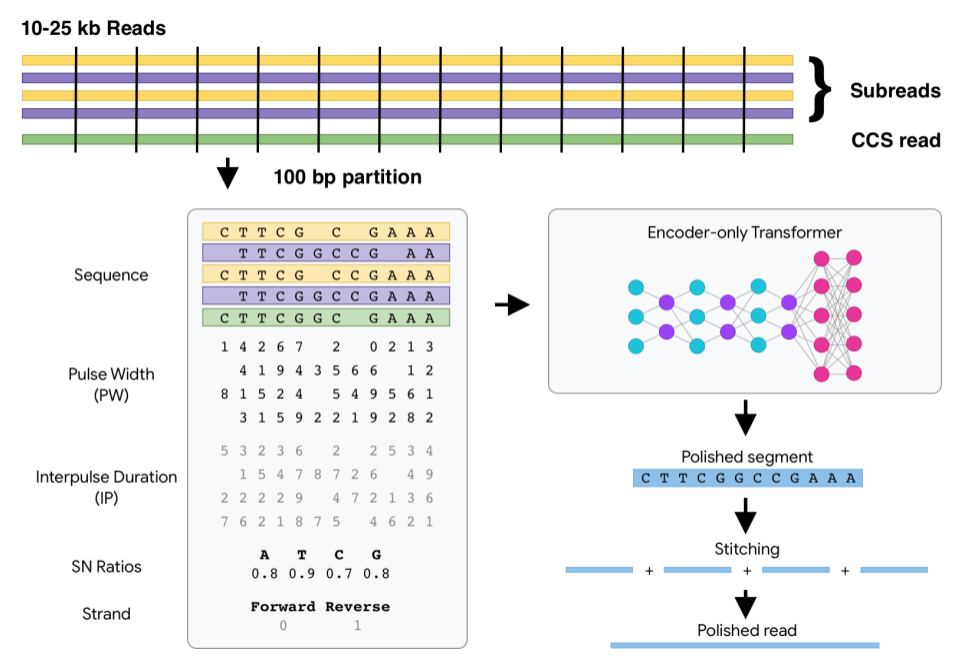

DeepConsensus uses gap-aware sequence transformers to correct errors in Pacific Biosciences (PacBio) Circular Consensus Sequencing (CCS) data.

DeepConsensus DeepConsensus uses gap-aware sequence transformers to correct errors in Pacific Biosciences (PacBio) Circular Consensus Sequencing (CCS)

TAPEX: Table Pre-training via Learning a Neural SQL Executor

TAPEX: Table Pre-training via Learning a Neural SQL Executor The official repository which contains the code and pre-trained models for our paper TAPE

CAPRI: Context-Aware Interpretable Point-of-Interest Recommendation Framework

CAPRI: Context-Aware Interpretable Point-of-Interest Recommendation Framework This repository contains a framework for Recommender Systems (RecSys), a

Ongoing research training transformer language models at scale, including: BERT & GPT-2

Megatron (1 and 2) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA.

ICCV2021 Paper: AutoShape: Real-Time Shape-Aware Monocular 3D Object Detection

ICCV2021 Paper: AutoShape: Real-Time Shape-Aware Monocular 3D Object Detection

![[ICCV'21] Official implementation for the paper Social NCE: Contrastive Learning of Socially-aware Motion Representations](https://github.com/vita-epfl/social-nce-crowdnav/raw/main/docs/illustration.png)

[ICCV'21] Official implementation for the paper Social NCE: Contrastive Learning of Socially-aware Motion Representations

CrowdNav with Social-NCE This is an official implementation for the paper Social NCE: Contrastive Learning of Socially-aware Motion Representations by

A pytorch implementation of Paper "Improved Training of Wasserstein GANs"

WGAN-GP An pytorch implementation of Paper "Improved Training of Wasserstein GANs". Prerequisites Python, NumPy, SciPy, Matplotlib A recent NVIDIA GPU

Code used to generate the results appearing in "Train longer, generalize better: closing the generalization gap in large batch training of neural networks"

Train longer, generalize better - Big batch training This is a code repository used to generate the results appearing in "Train longer, generalize bet

Accelerate Neural Net Training by Progressively Freezing Layers

FreezeOut A simple technique to accelerate neural net training by progressively freezing layers. This repository contains code for the extended abstra

Pytorch implementation of "Forward Thinking: Building and Training Neural Networks One Layer at a Time"

forward-thinking-pytorch Pytorch implementation of Forward Thinking: Building and Training Neural Networks One Layer at a Time Requirements Python 2.7

The official implementation of ICCV paper "Box-Aware Feature Enhancement for Single Object Tracking on Point Clouds".

Box-Aware Tracker (BAT) Pytorch-Lightning implementation of the Box-Aware Tracker. Box-Aware Feature Enhancement for Single Object Tracking on Point C

Unofficial Alias-Free GAN implementation. Based on rosinality's version with expanded training and inference options.

Alias-Free GAN An unofficial version of Alias-Free Generative Adversarial Networks (https://arxiv.org/abs/2106.12423). This repository was heavily bas

This is an official implementation of the paper "Distance-aware Quantization", accepted to ICCV2021.

PyTorch implementation of DAQ This is an official implementation of the paper "Distance-aware Quantization", accepted to ICCV2021. For more informatio

![[ICCV 2021 Oral] PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers](https://github.com/yuxumin/PoinTr/raw/master/fig/pointr.gif)

[ICCV 2021 Oral] PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers

PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers Created by Xumin Yu*, Yongming Rao*, Ziyi Wang, Zuyan Liu, Jiwen Lu, Jie Zhou

![[Preprint]](https://github.com/VITA-Group/Deep_GCN_Benchmarking/raw/main/figs/combo.png)

[Preprint] "Bag of Tricks for Training Deeper Graph Neural Networks A Comprehensive Benchmark Study" by Tianlong Chen*, Kaixiong Zhou*, Keyu Duan, Wenqing Zheng, Peihao Wang, Xia Hu, Zhangyang Wang

Bag of Tricks for Training Deeper Graph Neural Networks: A Comprehensive Benchmark Study Codes for [Preprint] Bag of Tricks for Training Deeper Graph

Experiments on Flood Segmentation on Sentinel-1 SAR Imagery with Cyclical Pseudo Labeling and Noisy Student Training

Flood Detection Challenge This repository contains code for our submission to the ETCI 2021 Competition on Flood Detection (Winning Solution #2). Acco

ST++: Make Self-training Work Better for Semi-supervised Semantic Segmentation

ST++ This is the official PyTorch implementation of our paper: ST++: Make Self-training Work Better for Semi-supervised Semantic Segmentation. Lihe Ya

![[ICCV 2021] Group-aware Contrastive Regression for Action Quality Assessment](https://github.com/yuxumin/CoRe/raw/master/fig/CoRe_pipeline.png)

[ICCV 2021] Group-aware Contrastive Regression for Action Quality Assessment

CoRe Created by Xumin Yu*, Yongming Rao*, Wenliang Zhao, Jiwen Lu, Jie Zhou This is the PyTorch implementation for ICCV paper Group-aware Contrastive

We present a framework for training multi-modal deep learning models on unlabelled video data by forcing the network to learn invariances to transformations applied to both the audio and video streams.

Multi-Modal Self-Supervision using GDT and StiCa This is an official pytorch implementation of papers: Multi-modal Self-Supervision from Generalized D

Empower Sequence Labeling with Task-Aware Language Model

LM-LSTM-CRF Check Our New NER Toolkit 🚀 🚀 🚀 Inference: LightNER: inference w. models pre-trained / trained w. any following tools, efficiently. Tra

PyTorch DepthNet Training on Still Box dataset

DepthNet training on Still Box Project page This code can replicate the results of our paper that was published in UAVg-17. If you use this repo in yo

zoofs is a Python library for performing feature selection using an variety of nature inspired wrapper algorithms. The algorithms range from swarm-intelligence to physics based to Evolutionary. It's easy to use ,flexible and powerful tool to reduce your feature size.

zoofs is a Python library for performing feature selection using a variety of nature-inspired wrapper algorithms. The algorithms range from swarm-intelligence to physics-based to Evolutionary. It's easy to use , flexible and powerful tool to reduce your feature size.

Part-Aware Data Augmentation for 3D Object Detection in Point Cloud

Part-Aware Data Augmentation for 3D Object Detection in Point Cloud This repository contains a reference implementation of our Part-Aware Data Augment

Official implementation of the ICCV 2021 paper "Conditional DETR for Fast Training Convergence".

The DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we handle the critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by that the cross-attention in DETR relies highly on the content embeddings and that the spatial embeddings make minor contributions, increasing the need for high-quality content embeddings and thus increasing the training difficulty.

Reference code for the paper CAMS: Color-Aware Multi-Style Transfer.

CAMS: Color-Aware Multi-Style Transfer Mahmoud Afifi1, Abdullah Abuolaim*1, Mostafa Hussien*2, Marcus A. Brubaker1, Michael S. Brown1 1York University

Dynamic Divide-and-Conquer Adversarial Training for Robust Semantic Segmentation (ICCV2021)

Dynamic Divide-and-Conquer Adversarial Training for Robust Semantic Segmentation This is a pytorch project for the paper Dynamic Divide-and-Conquer Ad

Official Implementation of Domain-Aware Universal Style Transfer

Domain Aware Universal Style Transfer Official Pytorch Implementation of 'Domain Aware Universal Style Transfer' (ICCV 2021) Domain Aware Universal St

Unofficial pytorch implementation for Self-critical Sequence Training for Image Captioning. and others.

An Image Captioning codebase This is a codebase for image captioning research. It supports: Self critical training from Self-critical Sequence Trainin

IAST: Instance Adaptive Self-training for Unsupervised Domain Adaptation (ECCV 2020)

This repo is the official implementation of our paper "Instance Adaptive Self-training for Unsupervised Domain Adaptation". The purpose of this repo is to better communicate with you and respond to your questions. This repo is almost the same with Another-Version, and you can also refer to that version.

Ongoing research training transformer language models at scale, including: BERT & GPT-2

What is this fork of Megatron-LM and Megatron-DeepSpeed This is a detached fork of https://github.com/microsoft/Megatron-DeepSpeed, which in itself is

We evaluate our method on different datasets (including ShapeNet, CUB-200-2011, and Pascal3D+) and achieve state-of-the-art results, outperforming all the other supervised and unsupervised methods and 3D representations, all in terms of performance, accuracy, and training time.

An Effective Loss Function for Generating 3D Models from Single 2D Image without Rendering Papers with code | Paper Nikola Zubić Pietro Lio University

PyTorch implementation of ShapeConv: Shape-aware Convolutional Layer for RGB-D Indoor Semantic Segmentation.

Shape-aware Convolutional Layer (ShapeConv) PyTorch implementation of ShapeConv: Shape-aware Convolutional Layer for RGB-D Indoor Semantic Segmentatio

(CVPR2021) Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Kaleido-BERT: Vision-Language Pre-training on Fashion Domain Mingchen Zhuge*, Dehong Gao*, Deng-Ping Fan#, Linbo Jin, Ben Chen, Haoming Zhou, Minghui

Code of PVTv2 is released! PVTv2 largely improves PVTv1 and works better than Swin Transformer with ImageNet-1K pre-training.

Updates (2020/06/21) Code of PVTv2 is released! PVTv2 largely improves PVTv1 and works better than Swin Transformer with ImageNet-1K pre-training. Pyr

LightSeq is a high performance training and inference library for sequence processing and generation implemented in CUDA

LightSeq: A High Performance Library for Sequence Processing and Generation

Partial implementation of ODE-GAN technique from the paper Training Generative Adversarial Networks by Solving Ordinary Differential Equations

ODE GAN (Prototype) in PyTorch Partial implementation of ODE-GAN technique from the paper Training Generative Adversarial Networks by Solving Ordinary

Hierarchical Metadata-Aware Document Categorization under Weak Supervision (WSDM'21)

Hierarchical Metadata-Aware Document Categorization under Weak Supervision This project provides a weakly supervised framework for hierarchical metada

ManiSkill-Learn is a framework for training agents on SAPIEN Open-Source Manipulation Skill Challenge (ManiSkill Challenge), a large-scale learning-from-demonstrations benchmark for object manipulation.

ManiSkill-Learn ManiSkill-Learn is a framework for training agents on SAPIEN Open-Source Manipulation Skill Challenge, a large-scale learning-from-dem

Code for paper "ASAP-Net: Attention and Structure Aware Point Cloud Sequence Segmentation"

ASAP-Net This project implements ASAP-Net of paper ASAP-Net: Attention and Structure Aware Point Cloud Sequence Segmentation (BMVC2020). Overview We i

Implementation of the paper All Labels Are Not Created Equal: Enhancing Semi-supervision via Label Grouping and Co-training

SemCo The official pytorch implementation of the paper All Labels Are Not Created Equal: Enhancing Semi-supervision via Label Grouping and Co-training

Official PyTorch implementation of "VITON-HD: High-Resolution Virtual Try-On via Misalignment-Aware Normalization" (CVPR 2021)

VITON-HD — Official PyTorch Implementation VITON-HD: High-Resolution Virtual Try-On via Misalignment-Aware Normalization Seunghwan Choi*1, Sunghyun Pa

A library for uncertainty representation and training in neural networks.

Epistemic Neural Networks A library for uncertainty representation and training in neural networks. Introduction Many applications in deep learning re

Implementation of ICCV2021(Oral) paper - VMNet: Voxel-Mesh Network for Geodesic-aware 3D Semantic Segmentation

VMNet: Voxel-Mesh Network for Geodesic-Aware 3D Semantic Segmentation Created by Zeyu HU Introduction This work is based on our paper VMNet: Voxel-Mes

Object-aware Contrastive Learning for Debiased Scene Representation

Object-aware Contrastive Learning Official PyTorch implementation of "Object-aware Contrastive Learning for Debiased Scene Representation" by Sangwoo

Universal End2End Training Platform, including pre-training, classification tasks, machine translation, and etc.

背景 安装教程 快速上手 (一)预训练模型 (二)机器翻译 (三)文本分类 TenTrans 进阶 1. 多语言机器翻译 2. 跨语言预训练 背景 TrenTrans是一个统一的端到端的多语言多任务预训练平台,支持多种预训练方式,以及序列生成和自然语言理解任务。 安装教程 git clone git

A collection of interactive machine-learning experiments: 🏋️models training + 🎨models demo

🤖 Interactive Machine Learning experiments: 🏋️models training + 🎨models demo

MASS: Masked Sequence to Sequence Pre-training for Language Generation

MASS: Masked Sequence to Sequence Pre-training for Language Generation

In-Place Activated BatchNorm for Memory-Optimized Training of DNNs

In-Place Activated BatchNorm In-Place Activated BatchNorm for Memory-Optimized Training of DNNs In-Place Activated BatchNorm (InPlace-ABN) is a novel

Pytorch Implementation of Interaction Networks for Learning about Objects, Relations and Physics

Interaction-Network-Pytorch Pytorch Implementraion of Interaction Networks for Learning about Objects, Relations and Physics. Interaction Network is a

This's an implementation of deepmind Visual Interaction Networks paper using pytorch

Visual-Interaction-Networks An implementation of Deepmind visual interaction networks in Pytorch. Introduction For the purpose of understanding the ch

Proximal Backpropagation - a neural network training algorithm that takes implicit instead of explicit gradient steps

Proximal Backpropagation Proximal Backpropagation (ProxProp) is a neural network training algorithm that takes implicit instead of explicit gradient s

A pytorch implementation of Detectron. Both training from scratch and inferring directly from pretrained Detectron weights are available.

Use this instead: https://github.com/facebookresearch/maskrcnn-benchmark A Pytorch Implementation of Detectron Example output of e2e_mask_rcnn-R-101-F

Code for paper "Which Training Methods for GANs do actually Converge? (ICML 2018)"

GAN stability This repository contains the experiments in the supplementary material for the paper Which Training Methods for GANs do actually Converg

Code for the ICML 2021 paper "Bridging Multi-Task Learning and Meta-Learning: Towards Efficient Training and Effective Adaptation", Haoxiang Wang, Han Zhao, Bo Li.

Bridging Multi-Task Learning and Meta-Learning Code for the ICML 2021 paper "Bridging Multi-Task Learning and Meta-Learning: Towards Efficient Trainin

IDRLnet, a Python toolbox for modeling and solving problems through Physics-Informed Neural Network (PINN) systematically.

IDRLnet IDRLnet is a machine learning library on top of PyTorch. Use IDRLnet if you need a machine learning library that solves both forward and inver

Deduplicating Training Data Makes Language Models Better

Deduplicating Training Data Makes Language Models Better This repository contains code to deduplicate language model datasets as descrbed in the paper

Reference implementation of code generation projects from Facebook AI Research. General toolkit to apply machine learning to code, from dataset creation to model training and evaluation. Comes with pretrained models.

This repository is a toolkit to do machine learning for programming languages. It implements tokenization, dataset preprocessing, model training and m

A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers.

ViTGAN: Training GANs with Vision Transformers A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers. Refer

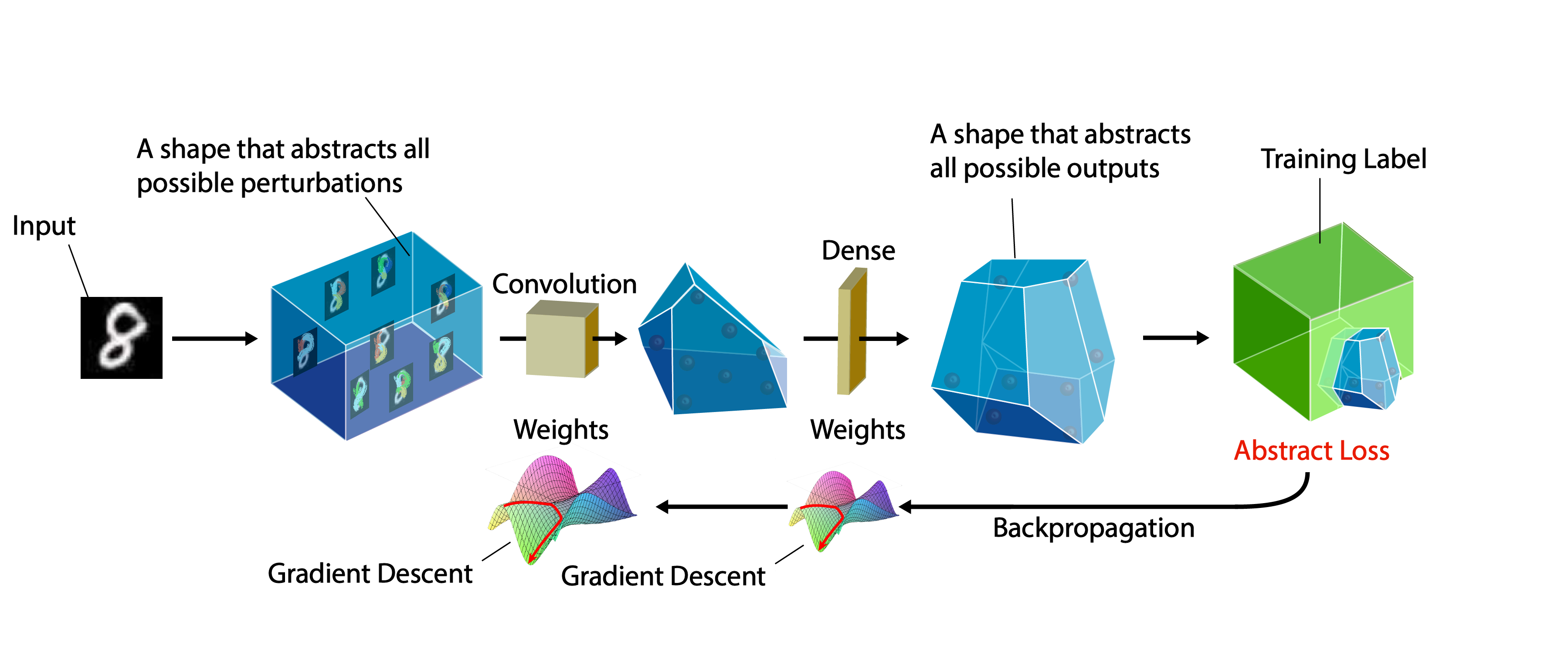

A certifiable defense against adversarial examples by training neural networks to be provably robust

DiffAI v3 DiffAI is a system for training neural networks to be provably robust and for proving that they are robust. The system was developed for the

A PyTorch implementation of "Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks" (KDD 2019).

ClusterGCN ⠀⠀ A PyTorch implementation of "Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks" (KDD 2019). A

![[RSS 2021] An End-to-End Differentiable Framework for Contact-Aware Robot Design](https://github.com/eanswer/DiffHand/raw/master/demos/representative_image.jpg)

[RSS 2021] An End-to-End Differentiable Framework for Contact-Aware Robot Design

DiffHand This repository contains the implementation for the paper An End-to-End Differentiable Framework for Contact-Aware Robot Design (RSS 2021). I

Self-training for Few-shot Transfer Across Extreme Task Differences

Self-training for Few-shot Transfer Across Extreme Task Differences (STARTUP) Introduction This repo contains the official implementation of the follo

PyTorch implementation of our Adam-NSCL algorithm from our CVPR2021 (oral) paper "Training Networks in Null Space for Continual Learning"

Adam-NSCL This is a PyTorch implementation of Adam-NSCL algorithm for continual learning from our CVPR2021 (oral) paper: Title: Training Networks in N

StackRec: Efficient Training of Very Deep Sequential Recommender Models by Iterative Stacking

StackRec: Efficient Training of Very Deep Sequential Recommender Models by Iterative Stacking Datasets You can download datasets that have been pre-pr

Official repository for the paper, MidiBERT-Piano: Large-scale Pre-training for Symbolic Music Understanding.

MidiBERT-Piano Authors: Yi-Hui (Sophia) Chou, I-Chun (Bronwin) Chen Introduction This is the official repository for the paper, MidiBERT-Piano: Large-

AlphaNet Improved Training of Supernet with Alpha-Divergence

AlphaNet: Improved Training of Supernet with Alpha-Divergence This repository contains our PyTorch training code, evaluation code and pretrained model

ONNX Runtime for PyTorch accelerates PyTorch model training using ONNX Runtime.

Accelerate PyTorch models with ONNX Runtime

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators. It's also a suite of learning algorithms to train agents to operate in these environments (PPO, SAC, evolutionary strategy, and direct trajectory optimization are implemented).

Решения, подсказки, тесты и утилиты для тренировки по алгоритмам от Яндекса.

Решения и подсказки к тренировке по алгоритмам от Яндекса Что есть внутри Решения с подсказками и комментариями; рекомендую сначала смотреть md файл п

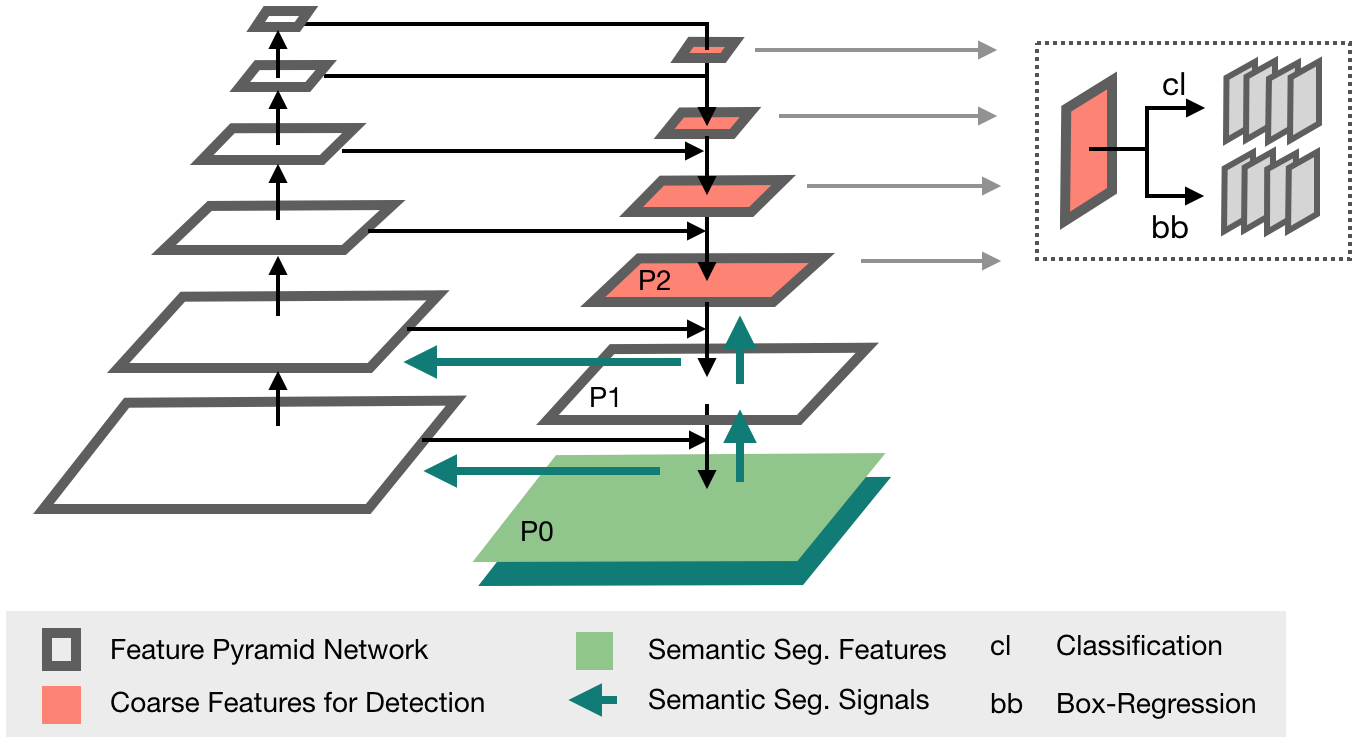

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

Official PyTorch implementation of UACANet: Uncertainty Aware Context Attention for Polyp Segmentation

UACANet: Uncertainty Aware Context Attention for Polyp Segmentation Official pytorch implementation of UACANet: Uncertainty Aware Context Attention fo

HyperPose is a library for building high-performance custom pose estimation applications.

HyperPose is a library for building high-performance custom pose estimation applications.

Code release for BlockGAN: Learning 3D Object-aware Scene Representations from Unlabelled Images

BlockGAN Code release for BlockGAN: Learning 3D Object-aware Scene Representations from Unlabelled Images BlockGAN: Learning 3D Object-aware Scene Rep

Piotr - IoT firmware emulation instrumentation for training and research

Piotr: Pythonic IoT exploitation and Research Introduction to Piotr Piotr is an emulation helper for Qemu that provides a convenient way to create, sh

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

WAGMA-SGD is a decentralized asynchronous SGD for distributed deep learning training based on model averaging.

WAGMA-SGD is a decentralized asynchronous SGD based on wait-avoiding group model averaging. The synchronization is relaxed by making the collectives externally-triggerable, namely, a collective can be initiated without requiring that all the processes enter it. It partially reduces the data within non-overlapping groups of process, improving the parallel scalability.

Bagua is a flexible and performant distributed training algorithm development framework.

Bagua is a flexible and performant distributed training algorithm development framework.

Heimdall watchtower automatically sends you emails to notify you of the latest progress of your deep learning programs.

This software automatically sends you emails to notify you of the latest progress of your deep learning programs.

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Official PyTorch implementation of "Physics-aware Difference Graph Networks for Sparsely-Observed Dynamics".

Physics-aware Difference Graph Networks for Sparsely-Observed Dynamics This repository is the official PyTorch implementation of "Physics-aware Differ

Code for the paper "Balancing Training for Multilingual Neural Machine Translation, ACL 2020"

Balancing Training for Multilingual Neural Machine Translation Implementation of the paper Balancing Training for Multilingual Neural Machine Translat

Shared code for training sentence embeddings with Flax / JAX

flax-sentence-embeddings This repository will be used to share code for the Flax / JAX community event to train sentence embeddings on 1B+ training pa

Source code for the paper "PLOME: Pre-training with Misspelled Knowledge for Chinese Spelling Correction" in ACL2021

PLOME:Pre-training with Misspelled Knowledge for Chinese Spelling Correction (ACL2021) This repository provides the code and data of the work in ACL20

This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code for training a DPR model then continuing training with RAG.

KGI (Knowledge Graph Induction) for slot filling This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code fo

Official code of paper "PGT: A Progressive Method for Training Models on Long Videos" on CVPR2021

PGT Code for paper PGT: A Progressive Method for Training Models on Long Videos. Install Run pip install -r requirements.txt. Run python setup.py buil

This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transformations at CVPR'21. According to some product reasons, we are not planning to release the training/testing codes and models. However, we will release the dataset and the scripts to prepare the dataset.

TransFill-Reference-Inpainting This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transf

The source codes for ACL 2021 paper 'BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data'

BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data This repository provides the implementation details for

YoloV5 implemented by TensorFlow2 , with support for training, evaluation and inference.

Efficient implementation of YOLOV5 in TensorFlow2

PyMedPhys is an open-source Medical Physics python library

PyMedPhys is an open-source Medical Physics python library built by an open community that values and prioritises code sharing, review, improvement, and learning from each other. I

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators

Brax is a differentiable physics engine that simulates environments made up of rigid bodies, joints, and actuators. It's also a suite of learning algorithms to train agents to operate in these environments (PPO, SAC, evolutionary strategy, and direct trajectory optimization are implemented).

Orthogonal Over-Parameterized Training

The inductive bias of a neural network is largely determined by the architecture and the training algorithm. To achieve good generalization, how to effectively train a neural network is of great importance. We propose a novel orthogonal over-parameterized training (OPT) framework that can provably minimize the hyperspherical energy which characterizes the diversity of neurons on a hypersphere. See our previous work -- MHE for an in-depth introduction.

quantize aware training package for NCNN on pytorch

ncnnqat ncnnqat is a quantize aware training package for NCNN on pytorch. Table of Contents ncnnqat Table of Contents Installation Usage Code Examples

Code for PhySG: Inverse Rendering with Spherical Gaussians for Physics-based Relighting and Material Editing

PhySG: Inverse Rendering with Spherical Gaussians for Physics-based Relighting and Material Editing CVPR 2021. Project page: https://kai-46.github.io/

[CVPR'21] Locally Aware Piecewise Transformation Fields for 3D Human Mesh Registration

Locally Aware Piecewise Transformation Fields for 3D Human Mesh Registration This repository contains the implementation of our paper Locally Aware Pi

TAP: Text-Aware Pre-training for Text-VQA and Text-Caption, CVPR 2021 (Oral)

TAP: Text-Aware Pre-training TAP: Text-Aware Pre-training for Text-VQA and Text-Caption by Zhengyuan Yang, Yijuan Lu, Jianfeng Wang, Xi Yin, Dinei Flo

Code for the paper One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation, CVPR 2021.

One Thing One Click One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation (CVPR2021) Code for the paper One Thi