34 Repositories

Python acceleration Libraries

Sionna: An Open-Source Library for Next-Generation Physical Layer Research

Sionna: An Open-Source Library for Next-Generation Physical Layer Research Sionna™ is an open-source Python library for link-level simulations of digi

GenGNN: A Generic FPGA Framework for Graph Neural Network Acceleration

GenGNN: A Generic FPGA Framework for Graph Neural Network Acceleration Stefan Abi-Karam*, Yuqi He*, Rishov Sarkar*, Lakshmi Sathidevi, Zihang Qiao, Co

Orchestrating Distributed Materials Acceleration Platform Tutorial

Orchestrating Distributed Materials Acceleration Platform Tutorial This tutorial for orchestrating distributed materials acceleration platform was pre

PyTorch implementation of federated learning framework based on the acceleration of global momentum

Federated Learning with Acceleration of Global Momentum PyTorch implementation of federated learning framework based on the acceleration of global mom

Collection of machine learning related notebooks to share.

ML_Notebooks Collection of machine learning related notebooks to share. Notebooks GAN_distributed_training.ipynb In this Notebook, TensorFlow's tutori

ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

ONNX Runtime is a cross-platform inference and training machine-learning accelerator. ONNX Runtime inference can enable faster customer experiences an

Efficient and Scalable Physics-Informed Deep Learning and Scientific Machine Learning on top of Tensorflow for multi-worker distributed computing

Notice: Support for Python 3.6 will be dropped in v.0.2.1, please plan accordingly! Efficient and Scalable Physics-Informed Deep Learning Collocation-

A python package simulating the quasi-2D pseudospin-1/2 Gross-Pitaevskii equation with NVIDIA GPU acceleration.

A python package simulating the quasi-2D pseudospin-1/2 Gross-Pitaevskii equation with NVIDIA GPU acceleration. Introduction spinor-gpe is high-level,

A C-like hardware description language (HDL) adding high level synthesis(HLS)-like automatic pipelining as a language construct/compiler feature.

██████╗ ██╗██████╗ ███████╗██╗ ██╗███╗ ██╗███████╗ ██████╗ ██╔══██╗██║██╔══██╗██╔════╝██║ ██║████╗ ██║██╔════╝██╔════╝ ██████╔╝██║██████╔╝█

A library for researching neural networks compression and acceleration methods.

A library for researching neural networks compression and acceleration methods.

SAMO: Streaming Architecture Mapping Optimisation

SAMO: Streaming Architecture Mapping Optimiser The SAMO framework provides a method of optimising the mapping of a Convolutional Neural Network model

Deploying a Text Summarization NLP use case on Docker Container Utilizing Nvidia GPU

GPU Docker NLP Application Deployment Deploying a Text Summarization NLP use case on Docker Container Utilizing Nvidia GPU, to setup the enviroment on

⚡️Optimizing einsum functions in NumPy, Tensorflow, Dask, and more with contraction order optimization.

Optimized Einsum Optimized Einsum: A tensor contraction order optimizer Optimized einsum can significantly reduce the overall execution time of einsum

GNNAdvisor: An Efficient Runtime System for GNN Acceleration on GPUs

GNNAdvisor: An Efficient Runtime System for GNN Acceleration on GPUs [Paper, Slides, Video Talk] at USENIX OSDI'21 @inproceedings{GNNAdvisor, title=

Calculates JMA (Japan Meteorological Agency) seismic intensity (shindo) scale from acceleration data recorded in NumPy array

shindo.py Calculates JMA (Japan Meteorological Agency) seismic intensity (shindo) scale from acceleration data stored in NumPy array Introduction Japa

Anderson Acceleration for Deep Learning

Anderson Accelerated Deep Learning (AADL) AADL is a Python package that implements the Anderson acceleration to speed-up the training of deep learning

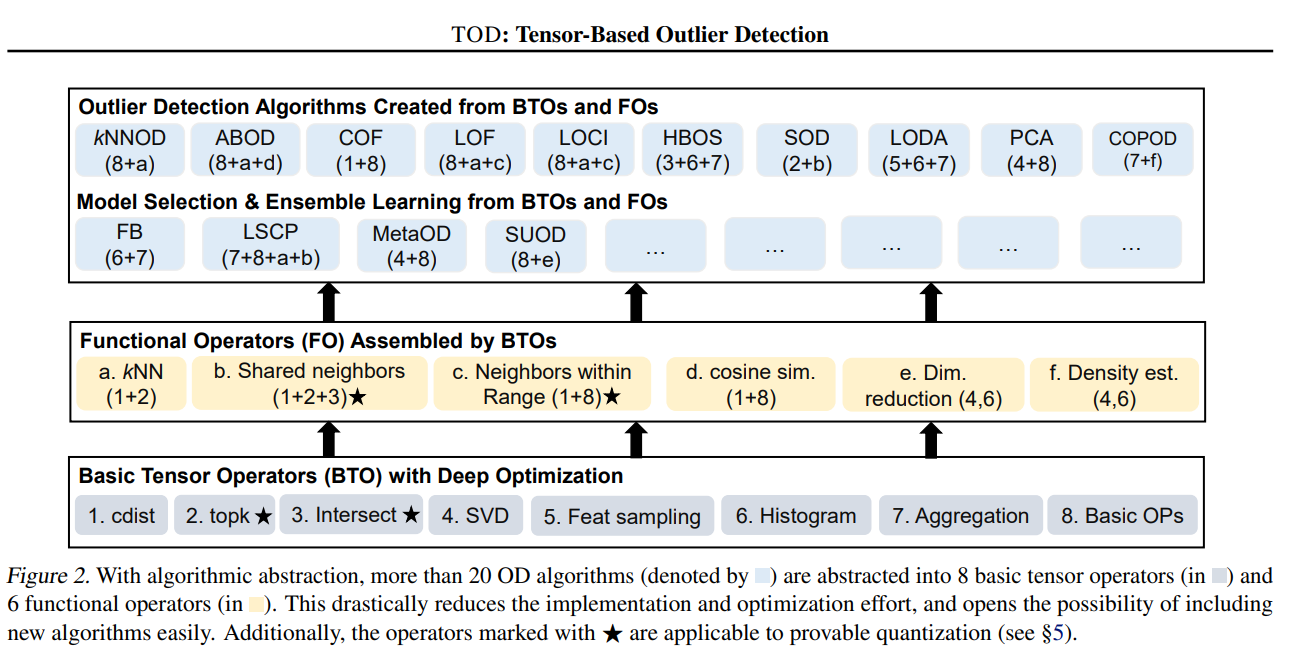

(Py)TOD: Tensor-based Outlier Detection, A General GPU-Accelerated Framework

(Py)TOD: Tensor-based Outlier Detection, A General GPU-Accelerated Framework Background: Outlier detection (OD) is a key data mining task for identify

Joint Channel and Weight Pruning for Model Acceleration on Mobile Devices

Joint Channel and Weight Pruning for Model Acceleration on Mobile Devices Abstract For practical deep neural network design on mobile devices, it is e

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

Neural Fixed-Point Acceleration for Convex Optimization

Licensing The majority of neural-scs is licensed under the CC BY-NC 4.0 License, however, portions of the project are available under separate license

Convert Python 3 code to CUDA code.

Py2CUDA Convert python code to CUDA. Usage To convert a python file say named py_file.py to CUDA, run python generate_cuda.py --file py_file.py --arch

This is the unofficial code of Deep Dual-resolution Networks for Real-time and Accurate Semantic Segmentation of Road Scenes. which achieve state-of-the-art trade-off between accuracy and speed on cityscapes and camvid, without using inference acceleration and extra data

Deep Dual-resolution Networks for Real-time and Accurate Semantic Segmentation of Road Scenes Introduction This is the unofficial code of Deep Dual-re

DI-HPC is an acceleration operator component for general algorithm modules in reinforcement learning algorithms

DI-HPC: Decision Intelligence - High Performance Computation DI-HPC is an acceleration operator component for general algorithm modules in reinforceme

Complete U-net Implementation with keras

U Net Lowered with Keras Complete U-net Implementation with keras Original Paper Link : https://arxiv.org/abs/1505.04597 Special Implementations : The

Sharpness-Aware Minimization for Efficiently Improving Generalization

Sharpness-Aware-Minimization-TensorFlow This repository provides a minimal implementation of sharpness-aware minimization (SAM) (Sharpness-Aware Minim

MASA-SR: Matching Acceleration and Spatial Adaptation for Reference-Based Image Super-Resolution (CVPR2021)

MASA-SR Official PyTorch implementation of our CVPR2021 paper MASA-SR: Matching Acceleration and Spatial Adaptation for Reference-Based Image Super-Re

SLIDE : In Defense of Smart Algorithms over Hardware Acceleration for Large-Scale Deep Learning Systems

The SLIDE package contains the source code for reproducing the main experiments in this paper. Dataset The Datasets can be downloaded in Amazon-

A highly efficient and modular implementation of Gaussian Processes in PyTorch

GPyTorch GPyTorch is a Gaussian process library implemented using PyTorch. GPyTorch is designed for creating scalable, flexible, and modular Gaussian

BlazingSQL is a lightweight, GPU accelerated, SQL engine for Python. Built on RAPIDS cuDF.

A lightweight, GPU accelerated, SQL engine built on the RAPIDS.ai ecosystem. Get Started on app.blazingsql.com Getting Started | Documentation | Examp

A curated list of neural network pruning resources.

A curated list of neural network pruning and related resources. Inspired by awesome-deep-vision, awesome-adversarial-machine-learning, awesome-deep-learning-papers and Awesome-NAS.

A highly efficient and modular implementation of Gaussian Processes in PyTorch

GPyTorch GPyTorch is a Gaussian process library implemented using PyTorch. GPyTorch is designed for creating scalable, flexible, and modular Gaussian

QKeras: a quantization deep learning library for Tensorflow Keras

QKeras github.com/google/qkeras QKeras 0.8 highlights: Automatic quantization using QKeras; Stochastic behavior (including stochastic rouding) is disa

Tensors and Dynamic neural networks in Python with strong GPU acceleration

PyTorch is a Python package that provides two high-level features: Tensor computation (like NumPy) with strong GPU acceleration Deep neural networks b

Tensors and Dynamic neural networks in Python with strong GPU acceleration

PyTorch is a Python package that provides two high-level features: Tensor computation (like NumPy) with strong GPU acceleration Deep neural networks b