860 Repositories

Python attention-feature-distillation Libraries

Sparse Progressive Distillation: Resolving Overfitting under Pretrain-and-Finetune Paradigm

Sparse Progressive Distillation: Resolving Overfitting under Pretrain-and-Finetu

AlexaUsingPython - Alexa will pay attention to your order, as: Hello Alexa, play music, Hello Alexa

AlexaUsingPython - Alexa will pay attention to your order, as: Hello Alexa, play music, Hello Alexa, what's the time? Alexa will pay attention to your order, get it, and afterward do some activity as indicated by your order.

Awesome Graph Classification - A collection of important graph embedding, classification and representation learning papers with implementations.

A collection of graph classification methods, covering embedding, deep learning, graph kernel and factorization papers

Transformer - A TensorFlow Implementation of the Transformer: Attention Is All You Need

[UPDATED] A TensorFlow Implementation of Attention Is All You Need When I opened this repository in 2017, there was no official code yet. I tried to i

50-days-of-Statistics-for-Data-Science - This repository consist of a 50-day program

50-days-of-Statistics-for-Data-Science - This repository consist of a 50-day program. All the statistics required for the complete understanding of data science will be uploaded in this repository.

Mortgage-loan-prediction - Show how to perform advanced Analytics and Machine Learning in Python using a full complement of PyData utilities

Mortgage-loan-prediction - Show how to perform advanced Analytics and Machine Learning in Python using a full complement of PyData utilities. This is aimed at those looking to get into the field of Data Science or those who are already in the field and looking to solve a real-world project with python.

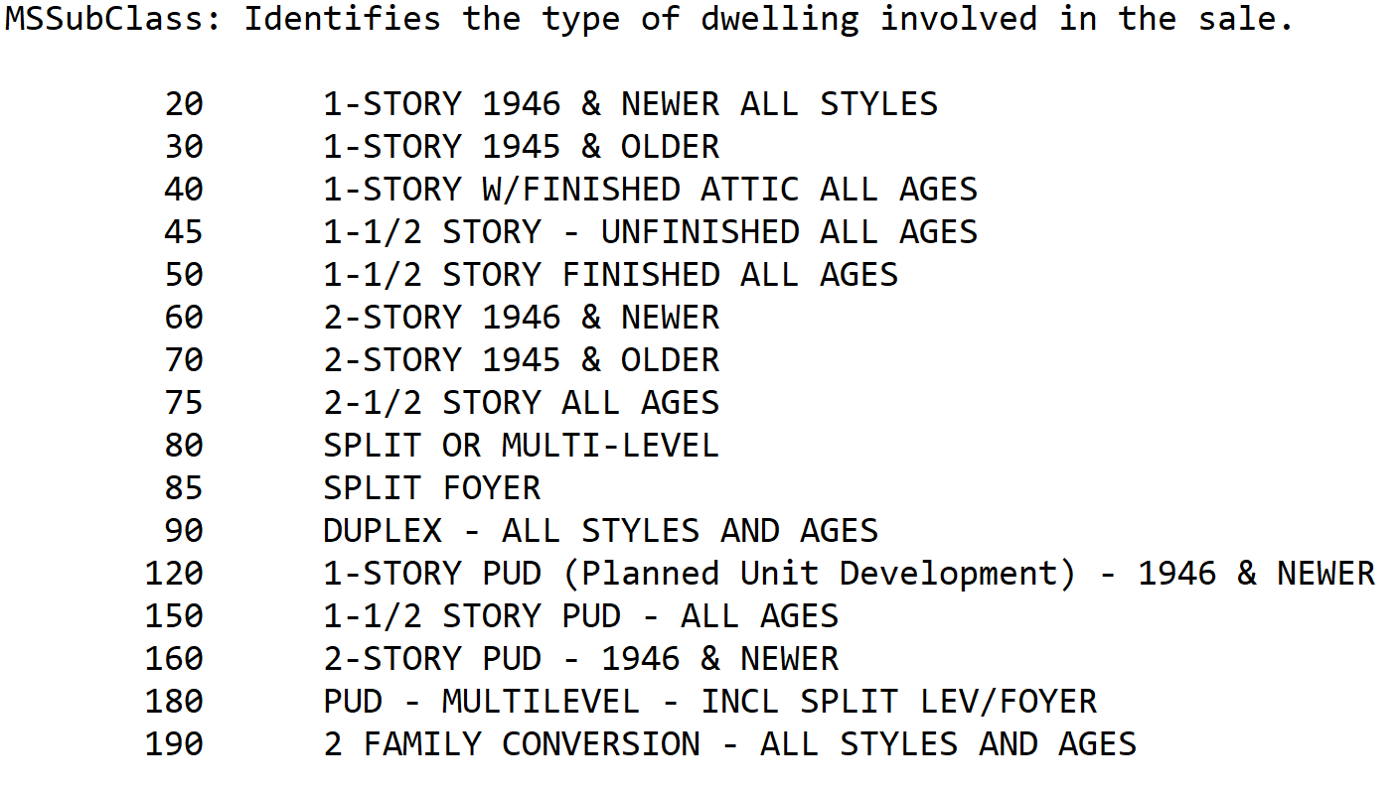

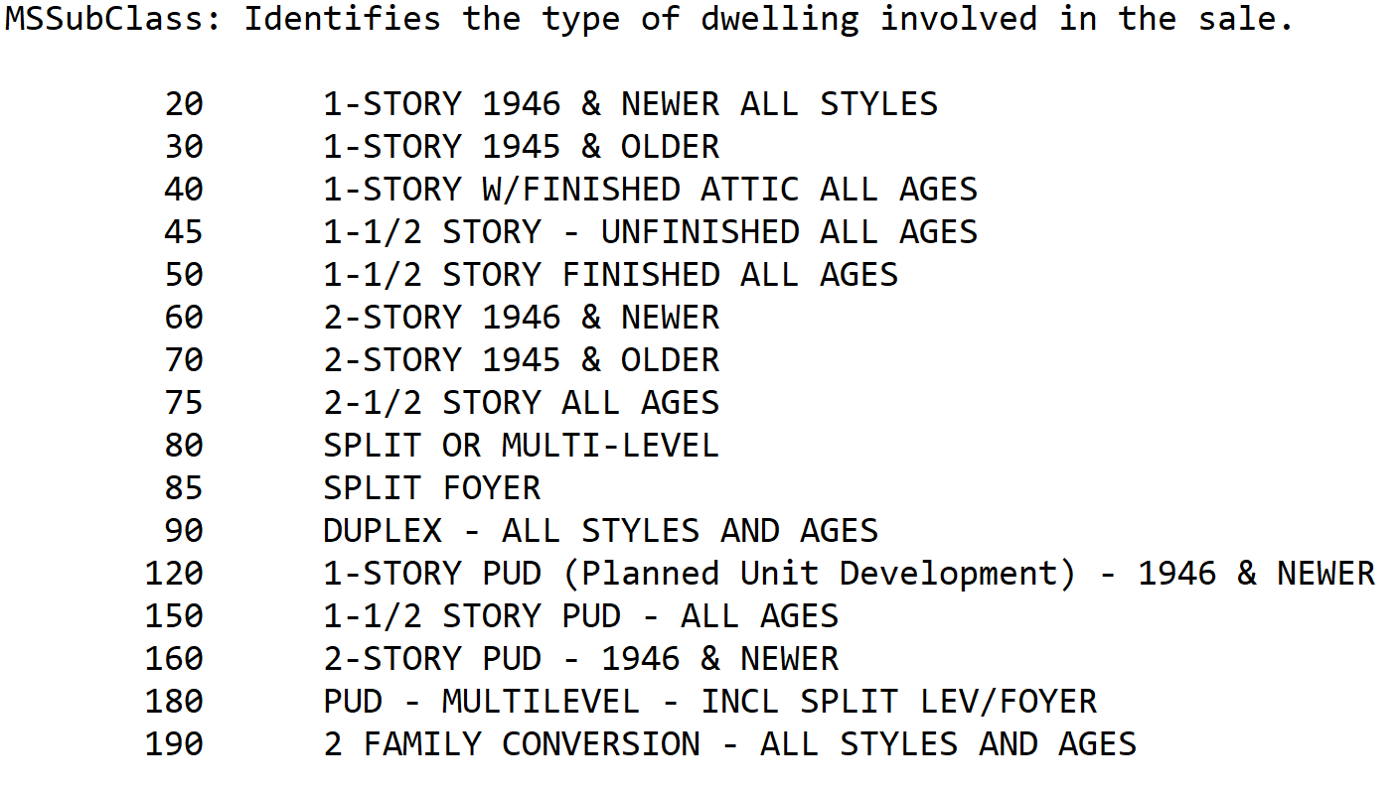

House_prices_kaggle - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

Houseprices - Predict sales prices and practice feature engineering, RFs, and gradient boosting

House Prices - Advanced Regression Techniques Predicting House Prices with Machine Learning This project is build to enhance my knowledge about machin

Roadster - Distance to Closest Road Feature Server

Roadster: Distance to Closest Road Feature Server Milliarium Aerum, the zero of

PyTorch Lightning + Hydra. A feature-rich template for rapid, scalable and reproducible ML experimentation with best practices. ⚡🔥⚡

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentation, CVPR 2020 (Oral)

SEAM The implementation of Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentaion. You can also download the repos

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

A PyTorch implementation of "SelfGNN: Self-supervised Graph Neural Networks without explicit negative sampling"

SelfGNN A PyTorch implementation of "SelfGNN: Self-supervised Graph Neural Networks without explicit negative sampling" paper, which will appear in Th

Source code of the "Graph-Bert: Only Attention is Needed for Learning Graph Representations" paper

Graph-Bert Source code of "Graph-Bert: Only Attention is Needed for Learning Graph Representations". Please check the script.py as the entry point. We

PyTorch code for training MM-DistillNet for multimodal knowledge distillation

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge MM-DistillNet is a

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

The code for SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network.

SAG-DTA The code is the implementation for the paper 'SAG-DTA: Prediction of Drug–Target Affinity Using Self-Attention Graph Network'. Requirements py

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

Attention for PyTorch with Linear Memory Footprint

Attention for PyTorch with Linear Memory Footprint Unofficially implements https://arxiv.org/abs/2112.05682 to get Linear Memory Cost on Attention (+

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

Learning hierarchical attention for weakly-supervised chest X-ray abnormality localization and diagnosis

Hierarchical Attention Mining (HAM) for weakly-supervised abnormality localization This is the official PyTorch implementation for the HAM method. Pap

Multi-Probe Attention for Semantic Indexing

Multi-Probe Attention for Semantic Indexing About This project is developed for the topic of COVID-19 semantic indexing. Directories & files A. The di

Low-level, feature rich and easy to use discord python wrapper

PWRCord Low-level, feature rich and easy to use discord python wrapper Important Note: At this point, this library API is considered unstable and can

A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory"

memory_efficient_attention.pytorch A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory" (Rabe&Staats'21). def effic

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation Introduction WAKD is a PyTorch implementation for our ICPR-2022 pap

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python)

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python) 日本語は以下に続きます (Japanese follows) English: This book is written in Japanese and primaril

Source code of D-HAN: Dynamic News Recommendation with Hierarchical Attention Network

D-HAN The source code of D-HAN This is the source code of D-HAN: Dynamic News Recommendation with Hierarchical Attention Network. However, only the co

This repository contains a CBIR system that uses swin transformer to extract image's feature.

Swin-transformer based CBIR This repository contains a CBIR(content-based image retrieval) system. Here we use Swin-transformer to extract query image

Codes for “A Deeply Supervised Attention Metric-Based Network and an Open Aerial Image Dataset for Remote Sensing Change Detection”

DSAMNet The pytorch implementation for "A Deeply-supervised Attention Metric-based Network and an Open Aerial Image Dataset for Remote Sensing Change

CoANet: Connectivity Attention Network for Road Extraction From Satellite Imagery

CoANet: Connectivity Attention Network for Road Extraction From Satellite Imagery This paper (CoANet) has been published in IEEE TIP 2021. This code i

Official Pytorch implementation of the paper: "Locally Shifted Attention With Early Global Integration"

Locally-Shifted-Attention-With-Early-Global-Integration Pretrained models You can download all the models from here. Training Imagenet python -m torch

A-ESRGAN aims to provide better super-resolution images by using multi-scale attention U-net discriminators.

A-ESRGAN: Training Real-World Blind Super-Resolution with Attention-based U-net Discriminators The authors are hidden for the purpose of double blind

A PyTorch version of You Only Look at One-level Feature object detector

PyTorch_YOLOF A PyTorch version of You Only Look at One-level Feature object detector. The input image must be resized to have their shorter side bein

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition"

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition" Pre-trained Deep Convo

Using computer vision method to recognize and calcutate the features of the architecture.

building-feature-recognition In this repository, we accomplished building feature recognition using traditional/dl-assisted computer vision method. Th

This code provides a PyTorch implementation for OTTER (Optimal Transport distillation for Efficient zero-shot Recognition), as described in the paper.

Data Efficient Language-Supervised Zero-Shot Recognition with Optimal Transport Distillation This repository contains PyTorch evaluation code, trainin

A wrapper around SageMaker ML Lineage Tracking extending ML Lineage to end-to-end ML lifecycles, including additional capabilities around Feature Store groups, queries, and other relevant artifacts.

ML Lineage Helper This library is a wrapper around the SageMaker SDK to support ease of lineage tracking across the ML lifecycle. Lineage artifacts in

TensorFlow Implementation of "Show, Attend and Tell"

Show, Attend and Tell Update (December 2, 2016) TensorFlow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attent

Feature rich robust FastAPI template.

Flexible and Lightweight general-purpose template for FastAPI. Usage ⚠️ Git, Python and Poetry must be installed and accessible ⚠️ Poetry version must

Feature Store for Machine Learning

Overview Feast is an open source feature store for machine learning. Feast is the fastest path to productionizing analytic data for model training and

![[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation](https://github.com/Ha0Tang/SelectionGAN/raw/master/imgs/motivation.jpg)

[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation

SelectionGAN for Guided Image-to-Image Translation CVPR Paper | Extended Paper | Guided-I2I-Translation-Papers Citation If you use this code for your

Memory Efficient Attention (O(sqrt(n)) for Jax and PyTorch

Memory Efficient Attention This is unofficial implementation of Self-attention Does Not Need O(n^2) Memory for Jax and PyTorch. Implementation is almo

scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms.

Sklearn-genetic-opt scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms. This is meant to be an alternativ

DeepVoxels is an object-specific, persistent 3D feature embedding.

DeepVoxels is an object-specific, persistent 3D feature embedding. It is found by globally optimizing over all available 2D observations of

Code for the paper Progressive Pose Attention for Person Image Generation in CVPR19 (Oral).

Pose-Transfer Code for the paper Progressive Pose Attention for Person Image Generation in CVPR19(Oral). The paper is available here. Video generation

Industrial Image Anomaly Localization Based on Gaussian Clustering of Pre-trained Feature

Industrial Image Anomaly Localization Based on Gaussian Clustering of Pre-trained Feature Q. Wan, L. Gao, X. Li and L. Wen, "Industrial Image Anomaly

Working demo of the Multi-class and Anomaly classification model using the CLIP feature space

👁️ Hindsight AI: Crime Classification With Clip About For Educational Purposes Only This is a recursive neural net trained to classify specific crime

Virtual Dance Reality Stage: a feature that offers you to share a stage with another user virtually

Portrait Segmentation using Tensorflow This script removes the background from an input image. You can read more about segmentation here Setup The scr

A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization

University1652-Baseline [Paper] [Slide] [Explore Drone-view Data] [Explore Satellite-view Data] [Explore Street-view Data] [Video Sample] [中文介绍] This

The official implementation of the Hybrid Self-Attention NEAT algorithm

PUREPLES - Pure Python Library for ES-HyperNEAT About This is a library of evolutionary algorithms with a focus on neuroevolution, implemented in pure

GANformer: Generative Adversarial Transformers

GANformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick Update: We released the new GANformer2 paper! *I wish to thank Ch

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Nystromformer: A Nystrom-based Algorithm for Approximating Self-Attention

Nystromformer: A Nystrom-based Algorithm for Approximating Self-Attention April 6, 2021 We extended segment-means to compute landmarks without requiri

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

An implementation of the Pay Attention when Required transformer

Pay Attention when Required (PAR) Transformer-XL An implementation of the Pay Attention when Required transformer from the paper: https://arxiv.org/pd

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

Reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer: Self-Attention with Linear Complexity)

Linear Multihead Attention (Linformer) PyTorch Implementation of reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer:

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Examples of using sparse attention, as in "Generating Long Sequences with Sparse Transformers"

Status: Archive (code is provided as-is, no updates expected) Update August 2020: For an example repository that achieves state-of-the-art modeling pe

Code for Crowd counting via unsupervised cross-domain feature adaptation.

CDFA-pytorch Code for Unsupervised crowd counting via cross-domain feature adaptation. Pre-trained models Google Drive Baidu Cloud : t4qc Environment

Pytorch codes for Feature Transfer Learning for Face Recognition with Under-Represented Data

FTLNet_Pytorch Pytorch codes for Feature Transfer Learning for Face Recognition with Under-Represented Data 1. Introduction This repo is an unofficial

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Shuffle and add items from jellyfin to mpd (use in tandem with jellyfin-mopidy and mpd-mopidy). Similar to ncmpcpp's "Add random" feature..

jellyshuf Essentially implements ncmpcpp's add random feature (default hotkey: `) through a script which grabs info from jellyfin api itself. jellyfin

[ACM MM 2021] Yes, "Attention is All You Need", for Exemplar based Colorization

Transformer for Image Colorization This is an implemention for Yes, "Attention Is All You Need", for Exemplar based Colorization, and the current soft

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2 Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexan

Virtual Dance Reality Stage is a feature that offers you to share a stage with another user virtually.

Virtual Dance Reality Stage is a feature that offers you to share a stage with another user virtually. It uses the concept of Image Background Removal using DeepLab Architecture (based on Semantic Segmentation), which is a state-of-art DL model from Google Brain.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm. It contains the code to reproduce the results presented in the original paper: https://arxiv.org/abs/2112.03670

TRACER: Extreme Attention Guided Salient Object Tracing Network implementation in PyTorch

TRACER: Extreme Attention Guided Salient Object Tracing Network This paper was accepted at AAAI 2022 SA poster session. Datasets All datasets are avai

DeepDiffusion: Unsupervised Learning of Retrieval-adapted Representations via Diffusion-based Ranking on Latent Feature Manifold

DeepDiffusion Introduction This repository provides the code of the DeepDiffusion algorithm for unsupervised learning of retrieval-adapted representat

Sleep staging from ECG, assisted with EEG

Sleep_Staging_Knowledge Distillation This codebase implements knowledge distillation approach for ECG based sleep staging assisted by EEG based sleep

Official implementation of the paper "Lightweight Deep CNN for Natural Image Matting via Similarity Preserving Knowledge Distillation"

Lightweight-Deep-CNN-for-Natural-Image-Matting-via-Similarity-Preserving-Knowledge-Distillation Introduction Accepted at IEEE Signal Processing Letter

A repository with exploration into using transformers to predict DNA ↔ transcription factor binding

Transcription Factor binding predictions with Attention and Transformers A repository with exploration into using transformers to predict DNA ↔ transc

Mask-invariant Face Recognition through Template-level Knowledge Distillation

Mask-invariant Face Recognition through Template-level Knowledge Distillation This is the official repository of "Mask-invariant Face Recognition thro

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

A C-like hardware description language (HDL) adding high level synthesis(HLS)-like automatic pipelining as a language construct/compiler feature.

██████╗ ██╗██████╗ ███████╗██╗ ██╗███╗ ██╗███████╗ ██████╗ ██╔══██╗██║██╔══██╗██╔════╝██║ ██║████╗ ██║██╔════╝██╔════╝ ██████╔╝██║██████╔╝█

Implementation of Heterogeneous Graph Attention Network

HetGAN Implementation of Heterogeneous Graph Attention Network This is the code repository of paper "Prediction of Metro Ridership During the COVID-19

a practicable framework used in Deep Learning. So far UDL only provide DCFNet implementation for the ICCV paper (Dynamic Cross Feature Fusion for Remote Sensing Pansharpening)

UDL UDL is a practicable framework used in Deep Learning (computer vision). Benchmark codes, results and models are available in UDL, please contact @

Simplest QRGenerator with a cool feature (-sh=True :D)

Simple QR-Codes Generator :D Generates QR-codes, nothing more and nothing less . How to use Just run ./install.sh to set all the dependencies up, th

Official implementation of the paper Do pedestrians pay attention? Eye contact detection for autonomous driving

Do pedestrians pay attention? Eye contact detection for autonomous driving Official implementation of the paper Do pedestrians pay attention? Eye cont

A Dynamic Residual Self-Attention Network for Lightweight Single Image Super-Resolution

DRSAN A Dynamic Residual Self-Attention Network for Lightweight Single Image Super-Resolution Karam Park, Jae Woong Soh, and Nam Ik Cho Environments U

Implementation of the paper ''Implicit Feature Refinement for Instance Segmentation''.

Implicit Feature Refinement for Instance Segmentation This repository is an official implementation of the ACM Multimedia 2021 paper Implicit Feature

Codes for TIM2021 paper "Anchor-Based Spatio-Temporal Attention 3-D Convolutional Networks for Dynamic 3-D Point Cloud Sequences"

Codes for TIM2021 paper "Anchor-Based Spatio-Temporal Attention 3-D Convolutional Networks for Dynamic 3-D Point Cloud Sequences"

Supplemental learning materials for "Fourier Feature Networks and Neural Volume Rendering"

Fourier Feature Networks and Neural Volume Rendering This repository is a companion to a lecture given at the University of Cambridge Engineering Depa

Code for "Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification", ECCV 2020 Spotlight

Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification Implementation of "Learning From Multiple Experts: Se

Pytorch Implementations of large number classical backbone CNNs, data enhancement, torch loss, attention, visualization and some common algorithms.

Torch-template-for-deep-learning Pytorch implementations of some **classical backbone CNNs, data enhancement, torch loss, attention, visualization and

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

Does MAML Only Work via Feature Re-use? A Data Set Centric Perspective

Does-MAML-Only-Work-via-Feature-Re-use-A-Data-Set-Centric-Perspective Does MAML Only Work via Feature Re-use? A Data Set Centric Perspective Installin

Markov Attention Models

Introduction This repo contains code for reproducing the results in the paper Graphical Models with Attention for Context-Specific Independence and an

SHRIMP: Sparser Random Feature Models via Iterative Magnitude Pruning

SHRIMP: Sparser Random Feature Models via Iterative Magnitude Pruning This repository is the official implementation of "SHRIMP: Sparser Random Featur

Python implementation of ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images, AAAI2022.

ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images Binh M. Le & Simon S. Woo, "ADD:

Bayesian Deep Learning and Deep Reinforcement Learning for Object Shape Error Response and Correction of Manufacturing Systems

Bayesian Deep Learning for Manufacturing 2.0 (dlmfg) Object Shape Error Response (OSER) Digital Lifecycle Management - In Process Quality Improvement

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design"

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design". CoordAttention tensorflow slim

Robust Lane Detection via Expanded Self Attention (WACV 2022)

Robust Lane Detection via Expanded Self Attention (WACV 2022) Minhyeok Lee, Junhyeop Lee, Dogyoon Lee, Woojin Kim, Sangwon Hwang, Sangyoun Lee Overvie

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

Perturbed Self-Distillation: Weakly Supervised Large-Scale Point Cloud Semantic Segmentation (ICCV2021)

Perturbed Self-Distillation: Weakly Supervised Large-Scale Point Cloud Semantic Segmentation (ICCV2021) This is the implementation of PSD (ICCV 2021),

Neural Caption Generator with Attention

Neural Caption Generator with Attention Tensorflow implementation of "Show

TensorFlow implementation of the paper "Hierarchical Attention Networks for Document Classification"

Hierarchical Attention Networks for Document Classification This is an implementation of the paper Hierarchical Attention Networks for Document Classi

Hierarchical Attentive Recurrent Tracking

Hierarchical Attentive Recurrent Tracking This is an official Tensorflow implementation of single object tracking in videos by using hierarchical atte