860 Repositories

Python attention-feature-distillation Libraries

A Tensorfflow implementation of Attend, Infer, Repeat

Attend, Infer, Repeat: Fast Scene Understanding with Generative Models This is an unofficial Tensorflow implementation of Attend, Infear, Repeat (AIR)

The implementation of the paper "A Deep Feature Aggregation Network for Accurate Indoor Camera Localization".

A Deep Feature Aggregation Network for Accurate Indoor Camera Localization This is the PyTorch implementation of our paper "A Deep Feature Aggregation

Official Pytorch Implementation of Relational Self-Attention: What's Missing in Attention for Video Understanding

Relational Self-Attention: What's Missing in Attention for Video Understanding This repository is the official implementation of "Relational Self-Atte

This is Official implementation for "Pose-guided Feature Disentangling for Occluded Person Re-Identification Based on Transformer" in AAAI2022

PFD:Pose-guided Feature Disentangling for Occluded Person Re-identification based on Transformer This repo is the official implementation of "Pose-gui

The Codebase for Causal Distillation for Language Models.

Causal Distillation for Language Models Zhengxuan Wu*,Atticus Geiger*, Josh Rozner, Elisa Kreiss, Hanson Lu, Thomas Icard, Christopher Potts, Noah D.

Code of Puregaze: Purifying gaze feature for generalizable gaze estimation, AAAI 2022.

PureGaze: Purifying Gaze Feature for Generalizable Gaze Estimation Description Our work is accpeted by AAAI 2022. Picture: We propose a domain-general

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds

PCAM: Product of Cross-Attention Matrices for Rigid Registration of Point Clouds PCAM: Product of Cross-Attention Matrices for Rigid Registration of P

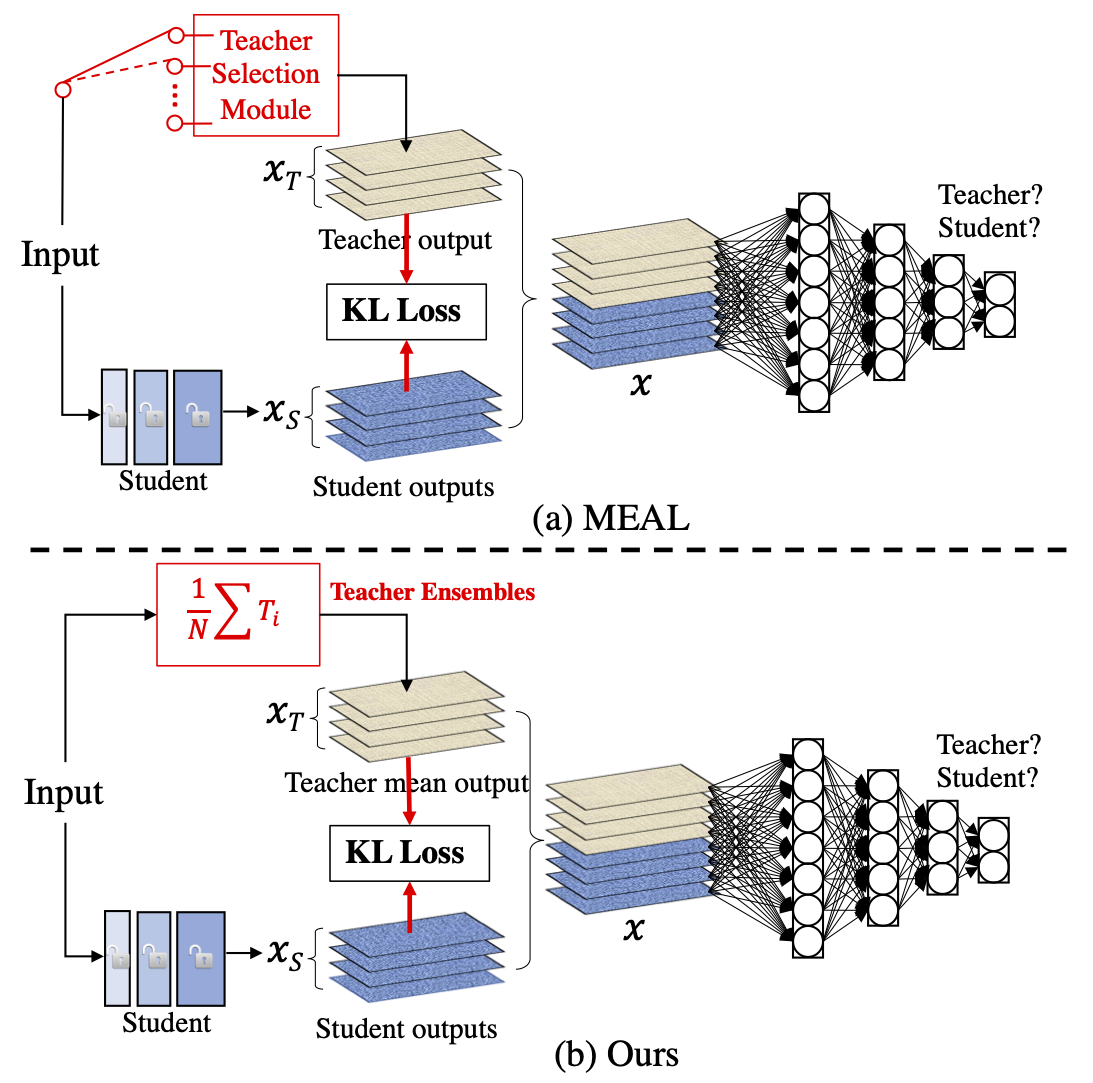

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

The functions we created are included in a script. The necessary parts for pre-processing were taken. Analysis complete.

Feature-Engineering The functions we created are included in a script. The necessary parts for pre-processing were taken. Analysis complete. Business

Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch

NÜWA - Pytorch (wip) Implementation of NÜWA, state of the art attention network for text to video synthesis, in Pytorch. This repository will be popul

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Joint Detection and Identification Feature Learning for Person Search

Person Search Project This repository hosts the code for our paper Joint Detection and Identification Feature Learning for Person Search. The code is

(ACL-IJCNLP 2021) Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models.

BERT Convolutions Code for the paper Convolutions and Self-Attention: Re-interpreting Relative Positions in Pre-trained Language Models. Contains expe

A Fast Knowledge Distillation Framework for Visual Recognition

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

A PyTorch Implementation of PGL-SUM from "Combining Global and Local Attention with Positional Encoding for Video Summarization", Proc. IEEE ISM 2021

PGL-SUM: Combining Global and Local Attention with Positional Encoding for Video Summarization PyTorch Implementation of PGL-SUM From "PGL-SUM: Combin

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving

GSAN Introduction Code for paper GSAN: Graph Self-Attention Network for Learning Spatial-Temporal Interaction Representation in Autonomous Driving, wh

PyTorch implementation of paper A Fast Knowledge Distillation Framework for Visual Recognition.

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

BERT Attention Analysis

BERT Attention Analysis This repository contains code for What Does BERT Look At? An Analysis of BERT's Attention. It includes code for getting attent

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

EasyTransfer is designed to make the development of transfer learning in NLP applications easier.

EasyTransfer is designed to make the development of transfer learning in NLP applications easier. The literature has witnessed the success of applying

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

Pytorch implementation of Feature Pyramid Network (FPN) for Object Detection

fpn.pytorch Pytorch implementation of Feature Pyramid Network (FPN) for Object Detection Introduction This project inherits the property of our pytorc

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

The implementation for "Comprehensive Knowledge Distillation with Causal Intervention".

Comprehensive Knowledge Distillation with Causal Intervention This repository is a PyTorch implementation of "Comprehensive Knowledge Distillation wit

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

PyTorch implementation of "Debiased Visual Question Answering from Feature and Sample Perspectives" (NeurIPS 2021)

D-VQA We provide the PyTorch implementation for Debiased Visual Question Answering from Feature and Sample Perspectives (NeurIPS 2021). Dependencies P

Implementation of Pooling by Sliced-Wasserstein Embedding (NeurIPS 2021)

PSWE: Pooling by Sliced-Wasserstein Embedding (NeurIPS 2021) PSWE is a permutation-invariant feature aggregation/pooling method based on sliced-Wasser

TAUFE: Task-Agnostic Undesirable Feature DeactivationUsing Out-of-Distribution Data

A deep neural network (DNN) has achieved great success in many machine learning tasks by virtue of its high expressive power. However, its prediction can be easily biased to undesirable features, which are not essential for solving the target task and are even imperceptible to a human, thereby resulting in poor generalization

Official PyTorch implementation of Data-free Knowledge Distillation for Object Detection, WACV 2021.

Introduction This repository is the official PyTorch implementation of Data-free Knowledge Distillation for Object Detection, WACV 2021. Data-free Kno

This repository contains the implementation of the paper: Federated Distillation of Natural Language Understanding with Confident Sinkhorns

Federated Distillation of Natural Language Understanding with Confident Sinkhorns This repository provides an alternative method for ensembled distill

Flexible time series feature extraction & processing

tsflex is a toolkit for flexible time series processing & feature extraction, that is efficient and makes few assumptions about sequence data. Useful

Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust.

Subspace Adversarial Training Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust. However,

Unsupervised Feature Ranking via Attribute Networks.

FRANe Unsupervised Feature Ranking via Attribute Networks (FRANe) converts a dataset into a network (graph) with nodes that correspond to the features

Image Captioning using CNN ,LSTM and Attention

Image Captioning using CNN ,LSTM and Attention This is a deeplearning model which tries to summarize an image into a text . Installation Install this

Pytorch implementation for Patient Knowledge Distillation for BERT Model Compression

Patient Knowledge Distillation for BERT Model Compression Knowledge distillation for BERT model Installation Run command below to install the environm

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Towards Open-World Feature Extrapolation: An Inductive Graph Learning Approach

This repository holds the implementation for paper Towards Open-World Feature Extrapolation: An Inductive Graph Learning Approach Download our preproc

Resources related to EMNLP 2021 paper "FAME: Feature-Based Adversarial Meta-Embeddings for Robust Input Representations"

FAME: Feature-based Adversarial Meta-Embeddings This is the companion code for the experiments reported in the paper "FAME: Feature-Based Adversarial

Official repository for Natural Image Matting via Guided Contextual Attention

GCA-Matting: Natural Image Matting via Guided Contextual Attention The source codes and models of Natural Image Matting via Guided Contextual Attentio

Prototypical Cross-Attention Networks for Multiple Object Tracking and Segmentation, NeurIPS 2021 Spotlight

PCAN for Multiple Object Tracking and Segmentation This is the offical implementation of paper PCAN for MOTS. We also present a trailer that consists

Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2

CoaDTI Multi-modal co-attention for drug-target interaction annotation and Its Application to SARS-CoV-2 Abstract Environment The test was conducted i

Revisiting Video Saliency: A Large-scale Benchmark and a New Model (CVPR18, PAMI19)

DHF1K =========================================================================== Wenguan Wang, J. Shen, M.-M Cheng and A. Borji, Revisiting Video Sal

NVTabular is a feature engineering and preprocessing library for tabular data designed to quickly and easily manipulate terabyte scale datasets used to train deep learning based recommender systems.

NVTabular is a feature engineering and preprocessing library for tabular data designed to quickly and easily manipulate terabyte scale datasets used to train deep learning based recommender systems.

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis, accepted at ACMMM 2021.

EvDistill: Asynchronous Events to End-task Learning via Bidirectional Reconstruction-guided Cross-modal Knowledge Distillation (CVPR'21)

EvDistill: Asynchronous Events to End-task Learning via Bidirectional Reconstruction-guided Cross-modal Knowledge Distillation (CVPR'21) Citation If y

Official Codes for Graph Modularity:Towards Understanding the Cross-Layer Transition of Feature Representations in Deep Neural Networks.

Dynamic-Graphs-Construction Official Codes for Graph Modularity:Towards Understanding the Cross-Layer Transition of Feature Representations in Deep Ne

tsflex - feature-extraction benchmarking

tsflex - feature-extraction benchmarking This repository withholds the benchmark results and visualization code of the tsflex paper and toolkit. Flow

Code for DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning

DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning Pytorch Implementation for DisCo: Remedy Self-supervi

FasterAI: A library to make smaller and faster models with FastAI.

Fasterai fasterai is a library created to make neural network smaller and faster. It essentially relies on common compression techniques for networks

Deep Reinforced Attention Regression for Partial Sketch Based Image Retrieval.

DARP-SBIR Intro This repository contains the source code implementation for ICDM submission paper Deep Reinforced Attention Regression for Partial Ske

Kaggler is a Python package for lightweight online machine learning algorithms and utility functions for ETL and data analysis.

Kaggler is a Python package for lightweight online machine learning algorithms and utility functions for ETL and data analysis. It is distributed under the MIT License.

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models.

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models. Feature-engine's transformers follow scikit-learn's functionality with fit() and transform() methods to first learn the transforming parameters from data and then transform the data.

Open-source python package for the extraction of Radiomics features from 2D and 3D images and binary masks.

pyradiomics v3.0.1 Build Status Linux macOS Windows Radiomics feature extraction in Python This is an open-source python package for the extraction of

Python implementation of "Elliptic Fourier Features of a Closed Contour"

PyEFD An Python/NumPy implementation of a method for approximating a contour with a Fourier series, as described in [1]. Installation pip install pyef

A Guide for Feature Engineering and Feature Selection, with implementations and examples in Python.

Feature Engineering & Feature Selection A comprehensive guide [pdf] [markdown] for Feature Engineering and Feature Selection, with implementations and

stability-selection - A scikit-learn compatible implementation of stability selection

stability-selection - A scikit-learn compatible implementation of stability selection stability-selection is a Python implementation of the stability

A fast, flexible, and performant feature selection package for python.

linselect A fast, flexible, and performant feature selection package for python. Package in a nutshell It's built on stepwise linear regression When p

Focal and Global Knowledge Distillation for Detectors

FGD Paper: Focal and Global Knowledge Distillation for Detectors Install MMDetection and MS COCO2017 Our codes are based on MMDetection. Please follow

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models.

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models. Hyperactive: is very easy to lear

Bringing emacs' greatest feature to neovim - Tetris!

nvim-tetris Bringing emacs' greatest feature to neovim - Tetris! This plugin is written in Fennel using Olical's project Aniseed for creating the proj

Quantization library for PyTorch. Support low-precision and mixed-precision quantization, with hardware implementation through TVM.

HAWQ: Hessian AWare Quantization HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform

Source code for our Paper "Learning in High-Dimensional Feature Spaces Using ANOVA-Based Matrix-Vector Multiplication"

NFFT4ANOVA Source code for our Paper "Learning in High-Dimensional Feature Spaces Using ANOVA-Based Matrix-Vector Multiplication" This package uses th

DA2Lite is an automated model compression toolkit for PyTorch.

DA2Lite (Deep Architecture to Lite) is a toolkit to compress and accelerate deep network models. ⭐ Star us on GitHub — it helps!! Frameworks & Librari

Knowledge Distillation Toolbox for Semantic Segmentation

SegDistill: Toolbox for Knowledge Distillation on Semantic Segmentation Networks This repo contains the supported code and configuration files for Seg

Codebase for "ProtoAttend: Attention-Based Prototypical Learning."

Codebase for "ProtoAttend: Attention-Based Prototypical Learning." Authors: Sercan O. Arik and Tomas Pfister Paper: Sercan O. Arik and Tomas Pfister,

Predicting the usefulness of reviews given the review text and metadata surrounding the reviews.

Predicting Yelp Review Quality Table of Contents Introduction Motivation Goal and Central Questions The Data Data Storage and ETL EDA Data Pipeline Da

A denoising autoencoder + adversarial losses and attention mechanisms for face swapping.

faceswap-GAN Adding Adversarial loss and perceptual loss (VGGface) to deepfakes'(reddit user) auto-encoder architecture. Updates Date Update 2018-08-2

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch. Most of the models in NLP were implemented with less than 100 lines of code.(except comments or blank lines)

Automated Machine Learning Pipeline for tabular data. Designed for predictive maintenance applications, failure identification, failure prediction, condition monitoring, etc.

Automated Machine Learning Pipeline for tabular data. Designed for predictive maintenance applications, failure identification, failure prediction, condition monitoring, etc.

PyTorch implementation of Hierarchical Multi-label Text Classification: An Attention-based Recurrent Network

hierarchical-multi-label-text-classification-pytorch Hierarchical Multi-label Text Classification: An Attention-based Recurrent Network Approach This

Surrogate-Assisted Genetic Algorithm for Wrapper Feature Selection

SAGA Surrogate-Assisted Genetic Algorithm for Wrapper Feature Selection Please refer to the Jupyter notebook (Example.ipynb) for an example of using t

You Only Sample (Almost) Once: Linear Cost Self-Attention Via Bernoulli Sampling

You Only Sample (Almost) Once: Linear Cost Self-Attention Via Bernoulli Sampling Transformer-based models are widely used in natural language processi

RAANet: Range-Aware Attention Network for LiDAR-based 3D Object Detection with Auxiliary Density Level Estimation

RAANet: Range-Aware Attention Network for LiDAR-based 3D Object Detection with Auxiliary Density Level Estimation Anonymous submission Abstract 3D obj

The implementation of DeBERTa

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Self-attentive task GAN for space domain awareness data augmentation.

SATGAN TODO: update the article URL once published. Article about this implemention The self-attentive task generative adversarial network (SATGAN) le

A PyTorch implementation of "CoAtNet: Marrying Convolution and Attention for All Data Sizes".

CoAtNet Overview This is a PyTorch implementation of CoAtNet specified in "CoAtNet: Marrying Convolution and Attention for All Data Sizes", arXiv 2021

Contrastive Feature Loss for Image Prediction

Contrastive Feature Loss for Image Prediction We provide a PyTorch implementation of our contrastive feature loss presented in: Contrastive Feature Lo

Feature Detection Based Template Matching

Feature Detection Based Template Matching The classification of the photos was made using the OpenCv template Matching method. Installation Use the pa

![[CVPR 2016] Unsupervised Feature Learning by Image Inpainting using GANs](https://github.com/pathak22/context-encoder/raw/master/images/teaser.jpg)

[CVPR 2016] Unsupervised Feature Learning by Image Inpainting using GANs

Context Encoders: Feature Learning by Inpainting CVPR 2016 [Project Website] [Imagenet Results] Sample results on held-out images: This is the trainin

A data preprocessing and feature engineering script for a machine learning pipeline is prepared.

FEATURE ENGINEERING Business Problem: A data preprocessing and feature engineering script for a machine learning pipeline needs to be prepared. It is

Required for a machine learning pipeline data preprocessing and variable engineering script needs to be prepared

Feature-Engineering Required for a machine learning pipeline data preprocessing and variable engineering script needs to be prepared. When the dataset

Sky attention heatmap of submissions to astrometry.net

astroheat Installation Requires Python 3.6+, Tested with Python 3.9.5 Install library dependencies pip install -r requirements.txt The program require

🛠️ Tools for Transformers compression using Lightning ⚡

Bert-squeeze is a repository aiming to provide code to reduce the size of Transformer-based models or decrease their latency at inference time.

Robust and Accurate Object Detection via Self-Knowledge Distillation

Robust and Accurate Object Detection via Self-Knowledge Distillation paper:https://arxiv.org/abs/2111.07239 Environments Python 3.7 Cuda 10.1 Prepare

A Robust Unsupervised Ensemble of Feature-Based Explanations using Restricted Boltzmann Machines

A Robust Unsupervised Ensemble of Feature-Based Explanations using Restricted Boltzmann Machines Understanding the results of deep neural networks is

Local Multi-Head Channel Self-Attention for FER2013

LHC-Net Local Multi-Head Channel Self-Attention This repository is intended to provide a quick implementation of the LHC-Net and to replicate the resu

Summary of related papers on visual attention

This repo is built for paper: Attention Mechanisms in Computer Vision: A Survey paper Vision-Attention-Papers Channel attention Spatial attention Temp

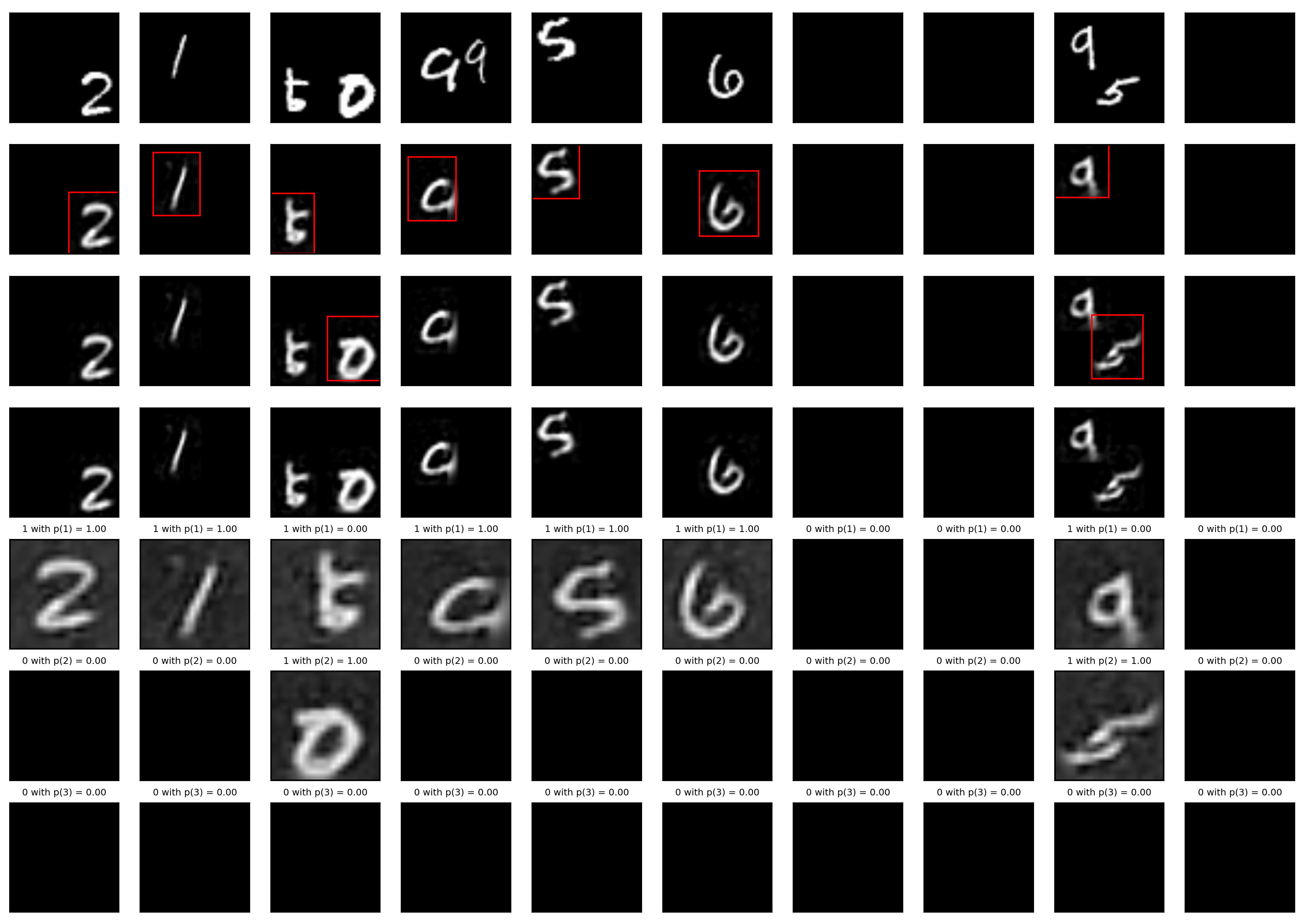

Official code for: A Probabilistic Hard Attention Model For Sequentially Observed Scenes

"A Probabilistic Hard Attention Model For Sequentially Observed Scenes" Authors: Samrudhdhi Rangrej, James Clark Accepted to: BMVC'21 A recurrent atte

Code repo for "FASA: Feature Augmentation and Sampling Adaptation for Long-Tailed Instance Segmentation" (ICCV 2021)

FASA: Feature Augmentation and Sampling Adaptation for Long-Tailed Instance Segmentation (ICCV 2021) This repository contains the implementation of th

kitty - the fast, feature-rich, cross-platform, GPU based terminal

kitty - the fast, feature-rich, cross-platform, GPU based terminal

LOFO (Leave One Feature Out) Importance calculates the importances of a set of features based on a metric of choice,

LOFO (Leave One Feature Out) Importance calculates the importances of a set of features based on a metric of choice, for a model of choice, by iteratively removing each feature from the set, and evaluating the performance of the model, with a validation scheme of choice, based on the chosen metric.