219 Repositories

Python gym-environment Libraries

High-quality single file implementation of Deep Reinforcement Learning algorithms with research-friendly features

CleanRL (Clean Implementation of RL Algorithms) CleanRL is a Deep Reinforcement Learning library that provides high-quality single-file implementation

Basic code and description for GoBigger challenge 2021.

GoBigger Challenge 2021 en / 中文 Challenge Description 2021.11.13 We are holding a competition —— Go-Bigger: Multi-Agent Decision Intelligence Challeng

An example project which contains the Unity components necessary to complete Navigation2's SLAM tutorial with a Turtlebot3, using a custom Unity environment in place of Gazebo.

Navigation 2 SLAM Example This example provides a Unity Project and a colcon workspace that, when used together, allows a user to substitute Unity as

A minimalist environment for decision-making in autonomous driving

highway-env A collection of environments for autonomous driving and tactical decision-making tasks An episode of one of the environments available in

xkeysnail is yet another keyboard remapping tool for X environment written in Python

xkeysnail is yet another keyboard remapping tool for X environment written in Python. It's like xmodmap but allows more flexible remappings.

Bio-OFC gym implementation and Gym-Fly environment

Bio-OFC gym implementation and Gym-Fly environment This repository includes the gym compatible implementation of the Bio-OFC algorithm from the paper

Doom o’clock is a website/project that features a countdown of “when will the earth end” and a greenhouse gas effect emission prediction that’s predicted

Doom o’clock is a website/project that features a countdown of “when will the earth end” and a greenhouse gas effect emission prediction that’s predicted

Isaac Gym Reinforcement Learning Environments

Isaac Gym Reinforcement Learning Environments

C++ Environment InitiatorVisual Studio Code C / C++ Environment Initiator

Visual Studio Code C / C++ Environment Initiator Latest Version : v 1.0.1(2021/11/08) .exe link here About : Visual Studio Code에서 C/C++환경을 MinGW GCC/G

Investigating automatic navigation towards standard US views integrating MARL with the virtual US environment developed in CT2US simulation

AutomaticUSnavigation Investigating automatic navigation towards standard US views integrating MARL with the virtual US environment developed in CT2US

Gym environments used in the paper: "Developmental Reinforcement Learning of Control Policy of a Quadcopter UAV with Thrust Vectoring Rotors"

gym_multirotor Gym to train reinforcement learning agents on UAV platforms Quadrotor Tiltrotor Requirements This package has been tested on Ubuntu 18.

A turtlebot auto controller allows robot to autonomously explore environment.

A turtlebot auto controller allows robot to autonomously explore environment.

Isaac Gym Environments for Legged Robots

Isaac Gym Environments for Legged Robots This repository provides the environment used to train ANYmal (and other robots) to walk on rough terrain usi

A package for "Procedural Content Generation via Reinforcement Learning" OpenAI Gym interface.

Readme: Illuminating Diverse Neural Cellular Automata for Level Generation This is the codebase used to generate the results presented in the paper av

Data-Driven Operational Space Control for Adaptive and Robust Robot Manipulation

OSCAR Project Page | Paper This repository contains the codebase used in OSCAR: Data-Driven Operational Space Control for Adaptive and Robust Robot Ma

A Simulated Optimal Intrusion Response Game

Optimal Intrusion Response An OpenAI Gym interface to a MDP/Markov Game model for optimal intrusion response of a realistic infrastructure simulated u

💻VIEN is a command-line tool for managing Python Virtual Environments.

vien VIEN is a command-line tool for managing Python Virtual Environments. It provides one-line shortcuts for: creating and deleting environments runn

This is a simple python flask web app that implements geometric calculations for three shapes given the user's input for radius and height. It is recommended this app be run using a python virtual environment, but not necessary for success. Unit tests are also included.

Geometry Calculator Web The is a simple Flask-based web application that uses a Geometry Calculator Tool created out of assignments from my Intro to P

DocumentPy is a Python application that runs in a command-line interface environment, made for creating HTML documents.

DocumentPy DocumentPy is a Python application that runs in a command-line interface environment, made for creating HTML documents. Usage DocumentPy, a

A command line tool to query source code from your current Python env

wxc wxc (pronounced "which") allows you to inspect source code in your Python environment from the command line. It is based on the inspect module fro

Launch a ready-to-code Wagtail Live development environment with a single click.

Wagtail Live Gitpod Launch a ready-to-code Wagtail Live development environment with a single click. Steps: Click the Open in Gitpod button. Relax: a

Predicting path with preference based on user demonstration using Maximum Entropy Deep Inverse Reinforcement Learning in a continuous environment

Preference-Planning-Deep-IRL Introduction Check my portfolio post Dependencies Gym stable-baselines3 PyTorch Usage Take Demonstration python3 record.

Exercise to teach a newcomer to the CLSP grid to set up their environment and run jobs

Exercise to teach a newcomer to the CLSP grid to set up their environment and run jobs

Jiminy Cricket Environment (NeurIPS 2021)

Jiminy Cricket This is the repository for "What Would Jiminy Cricket Do? Towards Agents That Behave Morally" by Dan Hendrycks*, Mantas Mazeika*, Andy

wger Workout Manager is a free, open source web application that helps you manage your personal workouts, weight and diet plans and can also be used as a simple gym management utility.

wger (ˈvɛɡɐ) Workout Manager is a free, open source web application that helps you manage your personal workouts, weight and diet plans and can also be used as a simple gym management utility.

Helper script to bootstrap a Python environment with the tools required to build and install packages.

python-bootstrap Helper script to bootstrap a Python environment with the tools required to build and install packages. Usage $ python -m bootstrap.bu

Rotazioni: a linear programming workout split optimizer

Rotazioni: a linear programming workout split optimizer Dependencies Dependencies for the frontend and backend are respectively listed in client/packa

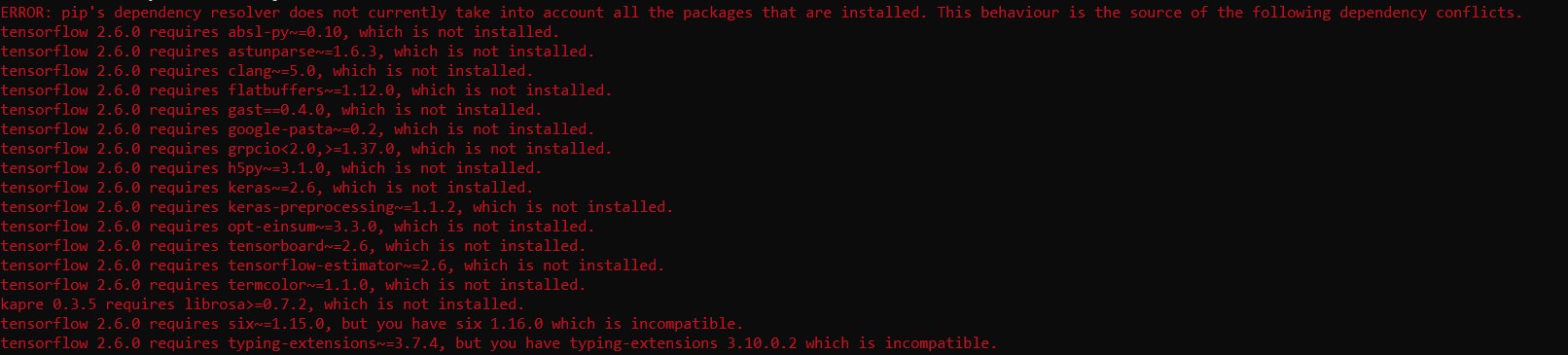

Data Science Environment Setup in single line

datascienv is package that helps your to setup your environment in single line of code with all dependency and it is also include pyforest that provide single line of import all required ml libraries

Setup a flask project using a single command, right from creating virtual environment to creating Procfile for deployment.

AutoFlask-Setup About AutoFlask-Setup can help you set up a new Flask Project, right from creating virtual environment to creating Procfile for deploy

DEEPAGÉ: Answering Questions in Portuguese about the Brazilian Environment

DEEPAGÉ: Answering Questions in Portuguese about the Brazilian Environment This repository is related to the paper DEEPAGÉ: Answering Questions in Por

A StarkNet project template based on a Pythonic environment

StarkNet Project Template This is an opinionated StarkNet project template. It is based around the Python's ecosystem and best practices. tox to manag

A bare-bones Python library for quality diversity optimization.

pyribs Website Source PyPI Conda CI/CD Docs Docs Status Twitter pyribs.org GitHub docs.pyribs.org A bare-bones Python library for quality diversity op

Reinforcement Learning with Q-Learning Algorithm on gym's frozen lake environment implemented in python

Reinforcement Learning with Q Learning Algorithm Q learning algorithm is trained on the gym's frozen lake environment. Libraries Used gym Numpy tqdm P

AEI: Actors-Environment Interaction with Adaptive Attention for Temporal Action Proposals Generation

AEI: Actors-Environment Interaction with Adaptive Attention for Temporal Action Proposals Generation A pytorch-version implementation codes of paper:

Motion planning environment for Sampling-based Planners

Sampling-Based Motion Planners' Testing Environment Sampling-based motion planners' testing environment (sbp-env) is a full feature framework to quick

An OpenAI Gym environment for multi-agent car racing based on Gym's original car racing environment.

Multi-Car Racing Gym Environment This repository contains MultiCarRacing-v0 a multiplayer variant of Gym's original CarRacing-v0 environment. This env

Code for Environment Inference for Invariant Learning (ICML 2020 UDL Workshop Paper)

Environment Inference for Invariant Learning This code accompanies the paper Environment Inference for Invariant Learning, which appears at ICML 2021.

Minimalistic Gridworld Environment (MiniGrid)

Minimalistic Gridworld Environment (MiniGrid) There are other gridworld Gym environments out there, but this one is designed to be particularly simple

The Multi-Mission Maximum Likelihood framework (3ML)

PyPi Conda The Multi-Mission Maximum Likelihood framework (3ML) A framework for multi-wavelength/multi-messenger analysis for astronomy/astrophysics.

Python-based Space Physics Environment Data Analysis Software

pySPEDAS pySPEDAS is an implementation of the SPEDAS framework for Python. The Space Physics Environment Data Analysis Software (SPEDAS) framework is

Deep Sea Treasure Environment for Multi-Objective Optimization Research

DeepSeaTreasure Environment Installation In order to get started with this environment, you can install it using the following command: python3 -m pip

TEACh is a dataset of human-human interactive dialogues to complete tasks in a simulated household environment.

TEACh is a dataset of human-human interactive dialogues to complete tasks in a simulated household environment.

Yadl - it is a simple library for working with both dotenv files and environment variables.

Yadl Yadl - it is a simple library for working with both dotenv files and environment variables. Features Validation of whitespaces. Validation of num

TEACh is a dataset of human-human interactive dialogues to complete tasks in a simulated household environment.

TEACh Task-driven Embodied Agents that Chat Aishwarya Padmakumar*, Jesse Thomason*, Ayush Shrivastava, Patrick Lange, Anjali Narayan-Chen, Spandana Ge

Phoenix Framework is an environment for writing, testing and using exploit code.

Phoenix-Framework Phoenix Framework is an environment for writing, testing and using exploit code. 🖼 Screenshots 🎪 Community PwnWiki Forums 🔑 Licen

Spark development environment for k8s

Local Spark Dev Env with Docker Development environment for k8s. Using the spark-operator image to ensure it will be the same environment. Start conta

Open source guides/codes for mastering deep learning to deploying deep learning in production in PyTorch, Python, C++ and more.

Deep Learning Materials by Deep Learning Wizard Start Learning Now Please head to www.deeplearningwizard.com to start learning! It is mobile/tablet fr

PowerGym is a Gym-like environment for Volt-Var control in power distribution systems.

Overview PowerGym is a Gym-like environment for Volt-Var control in power distribution systems. The Volt-Var control targets minimizing voltage violat

Python library which makes it possible to dynamically mask/anonymize data using JSON string or python dict rules in a PySpark environment.

pyspark-anonymizer Python library which makes it possible to dynamically mask/anonymize data using JSON string or python dict rules in a PySpark envir

Reinforcement learning models in ViZDoom environment

DoomNet DoomNet is a ViZDoom agent trained by reinforcement learning. The agent is a neural network that outputs a probability of actions given only p

Strict separation of config from code.

Python Decouple: Strict separation of settings from code Decouple helps you to organize your settings so that you can change parameters without having

Load Django Settings from Environmental Variables with One Magical Line of Code

DjEnv: Django + Environment Load Django Settings Directly from Environmental Variables features modify django configuration without modifying source c

Lux AI environment interface for RLlib multi-agents

Lux AI interface to RLlib MultiAgentsEnv For Lux AI Season 1 Kaggle competition. LuxAI repo RLlib-multiagents docs Kaggle environments repo Please let

A general-purpose, flexible, and easy-to-use simulator alongside an OpenAI Gym trading environment for MetaTrader 5 trading platform (Approved by OpenAI Gym)

gym-mtsim: OpenAI Gym - MetaTrader 5 Simulator MtSim is a simulator for the MetaTrader 5 trading platform alongside an OpenAI Gym environment for rein

PyBullet CartPole and Quadrotor environments—with CasADi symbolic a priori dynamics—for learning-based control and reinforcement learning

safe-control-gym Physics-based CartPole and Quadrotor Gym environments (using PyBullet) with symbolic a priori dynamics (using CasADi) for learning-ba

pydock - Docker-based environment manager for Python

pydock - Docker-based environment manager for Python ⚠️ pydock is still in beta mode, and very unstable. It is not recommended for anything serious. p

A3C LSTM Atari with Pytorch plus A3G design

NEWLY ADDED A3G A NEW GPU/CPU ARCHITECTURE OF A3C FOR SUBSTANTIALLY ACCELERATED TRAINING!! RL A3C Pytorch NEWLY ADDED A3G!! New implementation of A3C

EMBArk - The firmware security scanning environment

Embark is being developed to provide the firmware security analyzer emba as a containerized service and to ease accessibility to emba regardless of system and operating system.

Customizable RecSys Simulator for OpenAI Gym

gym-recsys: Customizable RecSys Simulator for OpenAI Gym Installation | How to use | Examples | Citation This package describes an OpenAI Gym interfac

A "gym" style toolkit for building lightweight Neural Architecture Search systems

A "gym" style toolkit for building lightweight Neural Architecture Search systems

Phoenix Framework is an environment for writing, testing and using exploit code.

Phoenix Framework is an environment for writing, testing and using exploit code. 🖼 Screenshots 🎪 Community PwnWiki Forums 🔑 Licen

Brandnew-flask is a CLI tool used to generate a powerful and mordern flask-app that supports the production environment.

Brandnew-flask is still in the initial stage and needs to be updated and improved continuously. Everyone is welcome to maintain and improve this CLI.

Train an RL agent to execute natural language instructions in a 3D Environment (PyTorch)

Gated-Attention Architectures for Task-Oriented Language Grounding This is a PyTorch implementation of the AAAI-18 paper: Gated-Attention Architecture

The Hailo Model Zoo includes pre-trained models and a full building and evaluation environment

Hailo Model Zoo The Hailo Model Zoo provides pre-trained models for high-performance deep learning applications. Using the Hailo Model Zoo you can mea

Implement A3C for Mujoco gym envs

pytorch-a3c-mujoco Disclaimer: my implementation right now is unstable (you ca refer to the learning curve below), I'm not sure if it's my problems. A

CL-Gym: Full-Featured PyTorch Library for Continual Learning

CL-Gym: Full-Featured PyTorch Library for Continual Learning CL-Gym is a small yet very flexible library for continual learning research and developme

A simple Django dev environment setup with docker for demo purposes for GalsenDev community

GalsenDEV Docker Demo This is a basic Django dev environment setup with docker and docker-compose for a GalsenDev Meetup. The main purposes was to mak

Visual Python is a GUI-based Python code generator, developed on the Jupyter Notebook environment as an extension.

Visual Python is a GUI-based Python code generator, developed on the Jupyter Notebook environment as an extension.

gym-anm is a framework for designing reinforcement learning (RL) environments that model Active Network Management (ANM) tasks in electricity distribution networks.

gym-anm is a framework for designing reinforcement learning (RL) environments that model Active Network Management (ANM) tasks in electricity distribution networks. It is built on top of the OpenAI Gym toolkit.

BridgeWalk is a partially-observed reinforcement learning environment with dynamics of varying stochasticity.

BridgeWalk is a partially-observed reinforcement learning environment with dynamics of varying stochasticity. The player needs to walk along a bridge to reach a goal location. When the player walks off the bridge into the water, the current will move it randomly until it gets washed back on the shore. A good agent in this environment avoids this stochastic trap

This websocket program is for data transmission between server and client. Data transmission is for Federated Learning in Edge computing environment.

websocket-for-data-transmission This websocket program is for data transmission between server and client. Data transmission is for Federated Learning

CowHerd is a partially-observed reinforcement learning environment

CowHerd is a partially-observed reinforcement learning environment, where the player walks around an area and is rewarded for milking cows. The cows try to escape and the player can place fences to help capture them. The implementation of CowHerd is based on the Crafter environment.

A step-by-step tutorial for how to work with some of the most basic features of Nav2 using a Jupyter Notebook in a warehouse environment to create a basic application.

This project has a step-by-step tutorial for how to work with some of the most basic features of Nav2 using a Jupyter Notebook in a warehouse environment to create a basic application.

render sprites into your desktop environment as shaped windows using GTK

spritegtk render static or animated sprites into your desktop environment as dynamic shaped windows using GTK requires pycairo and PYGobject: pip inst

JAX code for the paper "Control-Oriented Model-Based Reinforcement Learning with Implicit Differentiation"

Optimal Model Design for Reinforcement Learning This repository contains JAX code for the paper Control-Oriented Model-Based Reinforcement Learning wi

MasTrade is a trading bot in baselines3,pytorch,gym

mastrade MasTrade is a trading bot in baselines3,pytorch,gym idea we have for example 1 btc and we buy a crypto with it with market option to trade in

My solution for a MARL problem on a Grid Environment with Q-tables.

To run the project, run: conda create --name env python=3.7 pip install -r requirements.txt python run.py To-do: Add direction to the state space Take

This is code to fit per-pixel environment map with spherical Gaussian lobes, using LBFGS optimization

Spherical Gaussian Optimization This is code to fit per-pixel environment map with spherical Gaussian lobes, using LBFGS optimization. This code has b

AndroidEnv is a Python library that exposes an Android device as a Reinforcement Learning (RL) environment.

AndroidEnv is a Python library that exposes an Android device as a Reinforcement Learning (RL) environment.

This repository contains free labs for setting up an entire workflow and DevOps environment from a real-world perspective in AWS

DevOps-The-Hard-Way-AWS This tutorial contains a full, real-world solution for setting up an environment that is using DevOps technologies and practic

leafmap - A Python package for geospatial analysis and interactive mapping in a Jupyter environment.

A Python package for geospatial analysis and interactive mapping with minimal coding in a Jupyter environment

A collection of various RL algorithms like policy gradients, DQN and PPO. The goal of this repo will be to make it a go-to resource for learning about RL. How to visualize, debug and solve RL problems. I've additionally included playground.py for learning more about OpenAI gym, etc.

Reinforcement Learning (PyTorch) 🤖 + 🍰 = ❤️ This repo will contain PyTorch implementation of various fundamental RL algorithms. It's aimed at making

Transparently load variables from environment or JSON/YAML file.

A thin wrapper over Pydantic's settings management. Allows you to define configuration variables and load them from environment or JSON/YAML file. Also generates initial configuration files and documentation for your defined configuration.

Django-environ allows you to utilize 12factor inspired environment variables to configure your Django application.

Django-environ django-environ allows you to use Twelve-factor methodology to configure your Django application with environment variables. import envi

ManipulaTHOR, a framework that facilitates visual manipulation of objects using a robotic arm

ManipulaTHOR: A Framework for Visual Object Manipulation Kiana Ehsani, Winson Han, Alvaro Herrasti, Eli VanderBilt, Luca Weihs, Eric Kolve, Aniruddha

Plug-n-Play Reinforcement Learning in Python with OpenAI Gym and JAX

coax is built on top of JAX, but it doesn't have an explicit dependence on the jax python package. The reason is that your version of jaxlib will depend on your CUDA version.

Proto-RL: Reinforcement Learning with Prototypical Representations

Proto-RL: Reinforcement Learning with Prototypical Representations This is a PyTorch implementation of Proto-RL from Reinforcement Learning with Proto

This project provides a stock market environment using OpenGym with Deep Q-learning and Policy Gradient.

Stock Trading Market OpenAI Gym Environment with Deep Reinforcement Learning using Keras Overview This project provides a general environment for stoc

Scalable, event-driven, deep-learning-friendly backtesting library

...Minimizing the mean square error on future experience. - Richard S. Sutton BTGym Scalable event-driven RL-friendly backtesting library. Build on

Trading Gym is an open source project for the development of reinforcement learning algorithms in the context of trading.

Trading Gym Trading Gym is an open-source project for the development of reinforcement learning algorithms in the context of trading. It is currently

Trading and Backtesting environment for training reinforcement learning agent or simple rule base algo.

TradingGym TradingGym is a toolkit for training and backtesting the reinforcement learning algorithms. This was inspired by OpenAI Gym and imitated th

BlazingSQL is a lightweight, GPU accelerated, SQL engine for Python. Built on RAPIDS cuDF.

A lightweight, GPU accelerated, SQL engine built on the RAPIDS.ai ecosystem. Get Started on app.blazingsql.com Getting Started | Documentation | Examp

PyTorch version of Stable Baselines, reliable implementations of reinforcement learning algorithms.

PyTorch version of Stable Baselines, reliable implementations of reinforcement learning algorithms.

Open world survival environment for reinforcement learning

Crafter Open world survival environment for reinforcement learning. Highlights Crafter is a procedurally generated 2D world, where the agent finds foo

All-in-one Docker container that allows a user to explore Nautobot in a lab environment.

Nautobot Lab This container is not for production use! Nautobot Lab is an all-in-one Docker container that allows a user to quickly get an instance of

A fork of OpenAI Baselines, implementations of reinforcement learning algorithms

Stable Baselines Stable Baselines is a set of improved implementations of reinforcement learning algorithms based on OpenAI Baselines. You can read a

Directions overlay for working with pandas in an analysis environment

dovpanda Directions OVer PANDAs Directions are hints and tips for using pandas in an analysis environment. dovpanda is an overlay companion for workin

RELATE is an Environment for Learning And TEaching

RELATE Relate is an Environment for Learning And TEaching RELATE is a web-based courseware package. It is set apart by the following features: Focus o

Train robotic agents to learn pick and place with deep learning for vision-based manipulation in PyBullet.

Ravens is a collection of simulated tasks in PyBullet for learning vision-based robotic manipulation, with emphasis on pick and place. It features a Gym-like API with 10 tabletop rearrangement tasks, each with (i) a scripted oracle that provides expert demonstrations (for imitation learning), and (ii) reward functions that provide partial credit (for reinforcement learning).

DeepMind Alchemy task environment: a meta-reinforcement learning benchmark

The DeepMind Alchemy environment is a meta-reinforcement learning benchmark that presents tasks sampled from a task distribution with deep underlying structure.