2259 Repositories

Python multi-scale-model Libraries

WhatsApp Multi Device Client

WhatsApp Multi Device Client

Simple bots or Simbots is a library designed to create simple bots using the power of python. This library utilises Intent, Entity, Relation and Context model to create bots .

Simple bots or Simbots is a library designed to create simple chat bots using the power of python. This library utilises Intent, Entity, Relation and

Self-adjusting, auto-compounding multi-pair DCA crypto trading bot using Python, AWS Lambda & 3Commas API

Self-adjusting, auto-compounding multi-pair DCA crypto trading bot using Python, AWS Lambda & 3Commas API The following code describes how we can leve

Convert ONNX model graph to Keras model format.

Convert ONNX model graph to Keras model format.

This repo provides function call to track multi-objects in videos

Custom Object Tracking Introduction This repo provides function call to track multi-objects in videos with a given trained object detection model and

Yas CRNN model training - Yet Another Genshin Impact Scanner

Yas-Train Yet Another Genshin Impact Scanner 又一个原神圣遗物导出器 介绍 该仓库为 Yas 的模型训练程序 相关资料 MobileNetV3 CRNN 使用 假设你会设置基本的pytorch环境。 生成数据集 python main.py gen 训练

Multi-agent reinforcement learning algorithm and environment

Multi-agent reinforcement learning algorithm and environment [en/cn] Pytorch implements multi-agent reinforcement learning algorithms including IQL, Q

A High-Performance Distributed Library for Large-Scale Bundle Adjustment

MegBA: A High-Performance and Distributed Library for Large-Scale Bundle Adjustment This repo contains an official implementation of MegBA. MegBA is a

Deep learning model for EEG artifact removal

DeepSeparator Introduction Electroencephalogram (EEG) recordings are often contaminated with artifacts. Various methods have been developed to elimina

PyTorch implementation of MICCAI 2018 paper "Liver Lesion Detection from Weakly-labeled Multi-phase CT Volumes with a Grouped Single Shot MultiBox Detector"

Grouped SSD (GSSD) for liver lesion detection from multi-phase CT Note: the MICCAI 2018 paper only covers the multi-phase lesion detection part of thi

Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fusion Framework via Self-Supervised Multi-Task Learning. Code will be available soon.

Official-PyTorch-Implementation-of-TransMEF Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fu

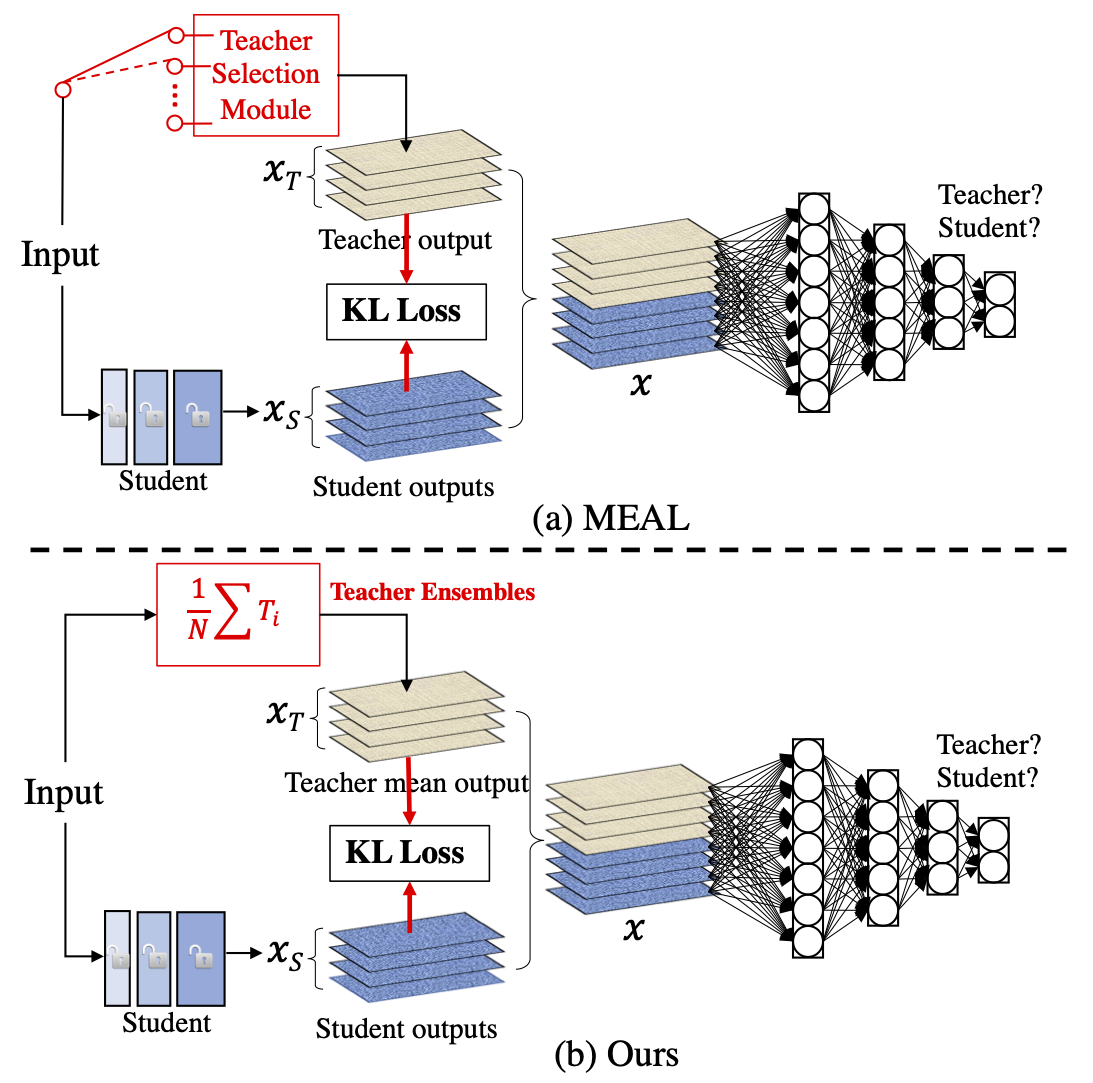

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

Machine learning model evaluation made easy: plots, tables, HTML reports, experiment tracking and Jupyter notebook analysis.

sklearn-evaluation Machine learning model evaluation made easy: plots, tables, HTML reports, experiment tracking, and Jupyter notebook analysis. Suppo

A comprehensive set of fairness metrics for datasets and machine learning models, explanations for these metrics, and algorithms to mitigate bias in datasets and models.

AI Fairness 360 (AIF360) The AI Fairness 360 toolkit is an extensible open-source library containg techniques developed by the research community to h

OpenDILab Multi-Agent Environment

Go-Bigger: Multi-Agent Decision Intelligence Environment GoBigger Doc (中文版) Ongoing 2021.11.13 We are holding a competition —— Go-Bigger: Multi-Agent

Tsunami-Fi is simple multi-tool bash application for Wi-Fi attacks

🪴 Tsunami-Fi 🪴 Русская версия README 🌿 Description 🌿 Tsunami-Fi is simple multi-tool bash application for Wi-Fi WPS PixieDust and NullPIN attack,

A Chinese to English Neural Model Translation Project

ZH-EN NMT Chinese to English Neural Machine Translation This project is inspired by Stanford's CS224N NMT Project Dataset used in this project: News C

Contra is a lightweight, production ready Tensorflow alternative for solving time series prediction challenges with AI

Contra AI Engine A lightweight, production ready Tensorflow alternative developed by Styvio styvio.com » How to Use · Report Bug · Request Feature Tab

Multi-purpose bot made with discord.py

PizzaHat Discord Bot A multi-purpose bot for your server! ℹ️ • Info PizzaHat is a multi-purpose bot, made to satisfy your needs, as well as your serve

Diffusion Probabilistic Models for 3D Point Cloud Generation (CVPR 2021)

Diffusion Probabilistic Models for 3D Point Cloud Generation [Paper] [Code] The official code repository for our CVPR 2021 paper "Diffusion Probabilis

Website for D2C paper

D2C This is the repository that contains source code for the D2C Website. If you find D2C useful for your work please cite: @article{sinha2021d2c au

A collection of resources and papers on Diffusion Models, a darkhorse in the field of Generative Models

This repository contains a collection of resources and papers on Diffusion Models and Score-based Models. If there are any missing valuable resources

A Loss Function for Generative Neural Networks Based on Watson’s Perceptual Model

This repository contains the similarity metrics designed and evaluated in the paper, and instructions and code to re-run the experiments. Implementation in the deep-learning framework PyTorch

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

Model-based Reinforcement Learning Improves Autonomous Racing Performance

Racing Dreamer: Model-based versus Model-free Deep Reinforcement Learning for Autonomous Racing Cars In this work, we propose to learn a racing contro

ROMP: Monocular, One-stage, Regression of Multiple 3D People, ICCV21

Monocular, One-stage, Regression of Multiple 3D People ROMP, accepted by ICCV 2021, is a concise one-stage network for multi-person 3D mesh recovery f

Quick program made to generate alpha and delta tables for Hidden Markov Models

HMM_Calc Functions for generating Alpha and Delta tables from a Hidden Markov Model. Parameters: a: Matrix of transition probabilities. a[i][j] = a_{i

Sample Prior Guided Robust Model Learning to Suppress Noisy Labels

PGDF This repo is the official implementation of our paper "Sample Prior Guided Robust Model Learning to Suppress Noisy Labels ". Citation If you use

Tensorflow implementation of Semi-supervised Sequence Learning (https://arxiv.org/abs/1511.01432)

Transfer Learning for Text Classification with Tensorflow Tensorflow implementation of Semi-supervised Sequence Learning(https://arxiv.org/abs/1511.01

torchbearer: A model fitting library for PyTorch

Note: We're moving to PyTorch Lightning! Read about the move here. From the end of February, torchbearer will no longer be actively maintained. We'll

Recurrent Scale Approximation (RSA) for Object Detection

Recurrent Scale Approximation (RSA) for Object Detection Codebase for Recurrent Scale Approximation for Object Detection in CNN published at ICCV 2017

BERT has a Mouth, and It Must Speak: BERT as a Markov Random Field Language Model

BERT has a Mouth, and It Must Speak: BERT as a Markov Random Field Language Model

Revisiting Pre-trained Models for Chinese Natural Language Processing (Findings of EMNLP 2020)

This repository contains the resources in our paper "Revisiting Pre-trained Models for Chinese Natural Language Processing", which will be published i

Optimus: the first large-scale pre-trained VAE language model

Optimus: the first pre-trained Big VAE language model This repository contains source code necessary to reproduce the results presented in the EMNLP 2

Source code for TACL paper "KEPLER: A Unified Model for Knowledge Embedding and Pre-trained Language Representation".

KEPLER: A Unified Model for Knowledge Embedding and Pre-trained Language Representation Source code for TACL 2021 paper KEPLER: A Unified Model for Kn

Phrase-BERT: Improved Phrase Embeddings from BERT with an Application to Corpus Exploration

Phrase-BERT: Improved Phrase Embeddings from BERT with an Application to Corpus Exploration This is the official repository for the EMNLP 2021 long pa

A single model that parses Universal Dependencies across 75 languages.

A single model that parses Universal Dependencies across 75 languages. Given a sentence, jointly predicts part-of-speech tags, morphology tags, lemmas, and dependency trees.

Research Code for NeurIPS 2020 Spotlight paper "Large-Scale Adversarial Training for Vision-and-Language Representation Learning": UNITER adversarial training part

VILLA: Vision-and-Language Adversarial Training This is the official repository of VILLA (NeurIPS 2020 Spotlight). This repository currently supports

![[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations](https://github.com/kdexd/virtex/raw/master/docs/_static/system_figure.jpg)

[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations

VirTex: Learning Visual Representations from Textual Annotations Karan Desai and Justin Johnson University of Michigan CVPR 2021 arxiv.org/abs/2006.06

OpenMMLab 3D Human Parametric Model Toolbox and Benchmark

Introduction English | 简体中文 MMHuman3D is an open source PyTorch-based codebase for the use of 3D human parametric models in computer vision and comput

Label-Free Model Evaluation with Semi-Structured Dataset Representations

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

![Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]](https://github.com/Fangyin1994/KCL/raw/main/fig/overview.png)

Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]

Knowledge-enhanced Contrastive Learning (KCL) Molecular Contrastive Learning with Chemical Element Knowledge Graph [ AAAI 2022 ]. We construct a Chemi

PyTorch trainer and model for Sequence Classification

PyTorch-trainer-and-model-for-Sequence-Classification After cloning the repository, modify your training data so that the training data is a .csv file

Implementation of "Unsupervised Domain Adaptive 3D Detection with Multi-Level Consistency"

Unsupervised Domain Adaptive 3D Detection with Multi-Level Consistency (ICCV2021) Paper Link: https://arxiv.org/abs/2107.11355 This implementation bui

Fuck - Multi Brute Force 🚶♂

f-mbf Fuck - Multi Brute Force 🚶♂ Install Script $ pkg update && pkg upgrade $ pkg install python2 $ pkg install git $ pip2 install requests $ pip2

Built on python (Mathematical straight fit line coordinates error predictor machine learning foundational model)

Sum-Square_Error-Business-Analytical-Tool- Built on python (Mathematical straight fit line coordinates error predictor machine learning foundational m

Multi-Process / Censorship Detection

Multi-Process / Censorship Detection

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

Core ML tools contain supporting tools for Core ML model conversion, editing, and validation.

Core ML Tools Use coremltools to convert machine learning models from third-party libraries to the Core ML format. The Python package contains the sup

Convenient script for trading with python.

Convenient script for trading with python.

MAME is a multi-purpose emulation framework.

MAME's purpose is to preserve decades of software history. As electronic technology continues to rush forward, MAME prevents this important "vintage" software from being lost and forgotten.

Multi-user server for Jupyter notebooks

Technical Overview | Installation | Configuration | Docker | Contributing | License | Help and Resources Please note that this repository is participa

Tools for curating biomedical training data for large-scale language modeling

Tools for curating biomedical training data for large-scale language modeling

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network)

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network) BERTAC is a framework that combines a

Language model Prompt And Query Archive

LPAQA: Language model Prompt And Query Archive This repository contains data and code for the paper How Can We Know What Language Models Know? Install

A Model for Natural Language Attack on Text Classification and Inference

TextFooler A Model for Natural Language Attack on Text Classification and Inference This is the source code for the paper: Jin, Di, et al. "Is BERT Re

The official implementation of "BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Identify Analogies?, ACL 2021 main conference"

BERT is to NLP what AlexNet is to CV This is the official implementation of BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Iden

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed+Megatron trained the world's most powerful language model: MT-530B DeepSpeed is hiring, come join us! DeepSpeed is a deep learning optimizat

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

Code associated with the Don't Stop Pretraining ACL 2020 paper

dont-stop-pretraining Code associated with the Don't Stop Pretraining ACL 2020 paper Citation @inproceedings{dontstoppretraining2020, author = {Suchi

Multi-Task Deep Neural Networks for Natural Language Understanding

New Release We released Adversarial training for both LM pre-training/finetuning and f-divergence. Large-scale Adversarial training for LMs: ALUM code

Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP 2021.

The Stem Cell Hypothesis Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP

PyTorch implementation of the ACL, 2021 paper Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks.

Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks This repo contains the PyTorch implementation of the ACL, 2021 pa

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

Revisiting Self-Training for Few-Shot Learning of Language Model.

SFLM This is the implementation of the paper Revisiting Self-Training for Few-Shot Learning of Language Model. SFLM is short for self-training for few

Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF shows significant improvements over baseline fine-tuning without data filtration.

Information Gain Filtration Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF sho

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

A kedro-plugin to serve Kedro Pipelines as API

General informations Software repository Latest release Total downloads Pypi Code health Branch Tests Coverage Links Documentation Deployment Activity

TensorFlow (Python) implementation of DeepTCN model for multivariate time series forecasting.

DeepTCN TensorFlow TensorFlow (Python) implementation of multivariate time series forecasting model introduced in Chen, Y., Kang, Y., Chen, Y., & Wang

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Cooperative Driving Dataset: a dataset for multi-agent driving scenarios

Cooperative Driving Dataset (CODD) The Cooperative Driving dataset is a synthetic dataset generated using CARLA that contains lidar data from multiple

Label Studio is a multi-type data labeling and annotation tool with standardized output format

Website • Docs • Twitter • Join Slack Community What is Label Studio? Label Studio is an open source data labeling tool. It lets you label data types

Label-Free Model Evaluation with Semi-Structured Dataset Representations

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

Multi-path load balancing is a method used by most of the real-time network to split the packets into different paths rather than transferring it through a single path

Multipath-Load-Balancing Method of managing incoming traffic by distributing and sharing load fairly among multiple routes from source to destination

A simple image-level annotation tool supporting multi-channel images for napari.

napari-labelimg4classification A simple image-level annotation tool supporting multi-channel images for napari. This napari plugin was generated with

Train the HRNet model on ImageNet

High-resolution networks (HRNets) for Image classification News [2021/01/20] Add some stronger ImageNet pretrained models, e.g., the HRNet_W48_C_ssld_

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

EdiBERT, a generative model for image editing

EdiBERT, a generative model for image editing EdiBERT is a generative model based on a bi-directional transformer, suited for image manipulation. The

CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer

CycleTransGAN-EVC CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer Demo emotion CycleTransGAN CycleTransGAN Cycle

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

MapReader: A computer vision pipeline for the semantic exploration of maps at scale

MapReader A computer vision pipeline for the semantic exploration of maps at scale MapReader is an end-to-end computer vision (CV) pipeline designed b

Multi-Template Mouse Brain MRI Atlas (MBMA): both in-vivo and ex-vivo

Multi-template MRI mouse brain atlas (both in vivo and ex vivo) Mouse Brain MRI atlas (both in-vivo and ex-vivo) (repository relocated from the origin

Code for Estimating Multi-cause Treatment Effects via Single-cause Perturbation (NeurIPS 2021)

Estimating Multi-cause Treatment Effects via Single-cause Perturbation (NeurIPS 2021) Single-cause Perturbation (SCP) is a framework to estimate the m

This is the code repository for the paper "Identification of the Generalized Condorcet Winner in Multi-dueling Bandits" (NeurIPS 2021).

Code Repository for the Paper "Identification of the Generalized Condorcet Winner in Multi-dueling Bandits" (To appear in: Proceedings of NeurIPS20

This repository is for the preprint "A generative nonparametric Bayesian model for whole genomes"

BEAR Overview This repository contains code associated with the preprint A generative nonparametric Bayesian model for whole genomes (2021), which pro

Code for "On Memorization in Probabilistic Deep Generative Models"

On Memorization in Probabilistic Deep Generative Models This repository contains the code necessary to reproduce the experiments in On Memorization in

Scalable Multi-Agent Reinforcement Learning

Scalable Multi-Agent Reinforcement Learning 1. Featured algorithms: Value Function Factorization with Variable Agent Sub-Teams (VAST) [1] 2. Implement

Toolbox to analyze temporal context invariance of deep neural networks

PyTCI A toolbox that estimates the integration window of a sensory response using the "Temporal Context Invariance" paradigm (TCI). The TCI method Int

Sample Code for "Pessimism Meets Invariance: Provably Efficient Offline Mean-Field Multi-Agent RL"

Sample Code for "Pessimism Meets Invariance: Provably Efficient Offline Mean-Field Multi-Agent RL" This is the official codebase for Pessimism Meets I

Code for 2021 NeurIPS --- Towards Multi-Grained Explainability for Graph Neural Networks

ReFine: Multi-Grained Explainability for GNNs We are trying hard to update the code, but it may take a while to complete due to our tight schedule rec

Code for models used in Bashiri et al., "A Flow-based latent state generative model of neural population responses to natural images".

A Flow-based latent state generative model of neural population responses to natural images Code for "A Flow-based latent state generative model of ne

This repository is an implementation of our NeurIPS 2021 paper (Stylized Dialogue Generation with Multi-Pass Dual Learning) in PyTorch.

MPDL---TODO This repository is an implementation of our NeurIPS 2021 paper (Stylized Dialogue Generation with Multi-Pass Dual Learning) in PyTorch. Ci

Evolutionary Scale Modeling (esm): Pretrained language models for proteins

Evolutionary Scale Modeling This repository contains code and pre-trained weights for Transformer protein language models from Facebook AI Research, i

This is the repository of the NeurIPS 2021 paper "Curriculum Disentangled Recommendation withNoisy Multi-feedback"

Curriculum_disentangled_recommendation This is the repository of the NeurIPS 2021 paper "Curriculum Disentangled Recommendation with Noisy Multi-feedb

[NeurIPS 2021] "G-PATE: Scalable Differentially Private Data Generator via Private Aggregation of Teacher Discriminators"

G-PATE This is the official code base for our NeurIPS 2021 paper: "G-PATE: Scalable Differentially Private Data Generator via Private Aggregation of T

Mouse Brain in the Model Zoo

Deep Neural Mouse Brain Modeling This is the repository for the ongoing deep neural mouse modeling project, an attempt to characterize the representat

Official Implementation for Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation

Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation We present a generic image-to-image translation framework, pixel2style2pixel (pSp