1136 Repositories

Python non-self-attention Libraries

Pytorch implementation of the paper "Enhancing Content Preservation in Text Style Transfer Using Reverse Attention and Conditional Layer Normalization"

Pytorch implementation of the paper "Enhancing Content Preservation in Text Style Transfer Using Reverse Attention and Conditional Layer Normalization"

Ssl-tool - A simple interactive CLI wrapper around openssl to make creation and installation of self-signed certs easy

What's this? A simple interactive CLI wrapper around openssl to make self-signin

Convolutional neural network that analyzes self-generated images in a variety of languages to find etymological similarities

This project is a convolutional neural network (CNN) that analyzes self-generated images in a variety of languages to find etymological similarities. Specifically, the goal is to prove that computer vision can be used to identify cognates known to exist, and perhaps lead linguists to evidence of unknown cognates.

Mae segmentation - Reproduction of semantic segmentation using masked autoencoder (mae)

ADE20k Semantic segmentation with MAE Getting started Install the mmsegmentation

Real-time domain adaptation for semantic segmentation

Advanced-Machine-Learning This repository contains the code for the project Real

A simple non-official manager interface I'm using for my Raspberry Pis.

My Raspberry Pi Manager Overview I have two Raspberry Pi 4 Model B devices that I hooked up to my two TVs (one in my bedroom and the other in my new g

PyTorch implementation of DirectCLR from paper Understanding Dimensional Collapse in Contrastive Self-supervised Learning

DirectCLR DirectCLR is a simple contrastive learning model for visual representation learning. It does not require a trainable projector as SimCLR. It

An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax

Simple Transformer An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax. Note: The only ex

Pytorch Implementation of Neural Analysis and Synthesis: Reconstructing Speech from Self-Supervised Representations

NANSY: Unofficial Pytorch Implementation of Neural Analysis and Synthesis: Reconstructing Speech from Self-Supervised Representations Notice Papers' D

Self-supervised learning algorithms provide a way to train Deep Neural Networks in an unsupervised way using contrastive losses

Self-supervised learning Self-supervised learning algorithms provide a way to train Deep Neural Networks in an unsupervised way using contrastive loss

CAST: Character labeling in Animation using Self-supervision by Tracking

CAST: Character labeling in Animation using Self-supervision by Tracking (Published as a conference paper at EuroGraphics 2022) Note: The CAST paper c

Using Self-Supervised Pretext Tasks for Active Learning - Official Pytorch Implementation

Using Self-Supervised Pretext Tasks for Active Learning - Official Pytorch Implementation Experiment Setting: CIFAR10 (downloaded and saved in ./DATA

Self-Supervised Deep Blind Video Super-Resolution

Self-Blind-VSR Paper | Discussion Self-Supervised Deep Blind Video Super-Resolution By Haoran Bai and Jinshan Pan Abstract Existing deep learning-base

PyTorch implementation for the paper Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime

Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime Created by Prarthana Bhattacharyya. Disclaimer: This is n

PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks

AttentionHTR PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks. Scene Text

Framework for training options with different attention mechanism and using them to solve downstream tasks.

Using Attention in HRL Framework for training options with different attention mechanism and using them to solve downstream tasks. Requirements GPU re

Official code of Team Yao at Multi-Modal-Fact-Verification-2022

Official code of Team Yao at Multi-Modal-Fact-Verification-2022 A Multi-Modal Fact Verification dataset released as part of the De-Factify workshop in

This repository is for Contrastive Embedding Distribution Refinement and Entropy-Aware Attention Network (CEDR)

CEDR This repository is for Contrastive Embedding Distribution Refinement and Entropy-Aware Attention Network (CEDR) introduced in the following paper

This tool is created by Shahzain and is one of the best self bots out there!

Shahzain SelfBot This tool is created by Shahzain and is one of the best self bots out there! Features Token Destroyer! Server Nuker(50-100 Bans Per S

A script to find the people whom you follow, but they don't follow you back

insta-non-followers A script to find the people whom you follow, but they don't follow you back Dependencies: python3 libraries - instaloader, getpass

Innocent-Bot - A Discord client self-bot for destroying, nuking and causing mischief in servers

Innocent-bot A Discord client self-bot for destroying, nuking and causing mischi

OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive models

OptiPLANT OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive mod

SAS: Self-Augmentation Strategy for Language Model Pre-training

SAS: Self-Augmentation Strategy for Language Model Pre-training This repository

Sinkformers: Transformers with Doubly Stochastic Attention

Code for the paper : "Sinkformers: Transformers with Doubly Stochastic Attention" Paper You will find our paper here. Compat This package has been dev

Cycle Self-Training for Domain Adaptation (NeurIPS 2021)

CST Code release for "Cycle Self-Training for Domain Adaptation" (NeurIPS 2021) Prerequisites torch=1.7.0 torchvision qpsolvers numpy prettytable tqd

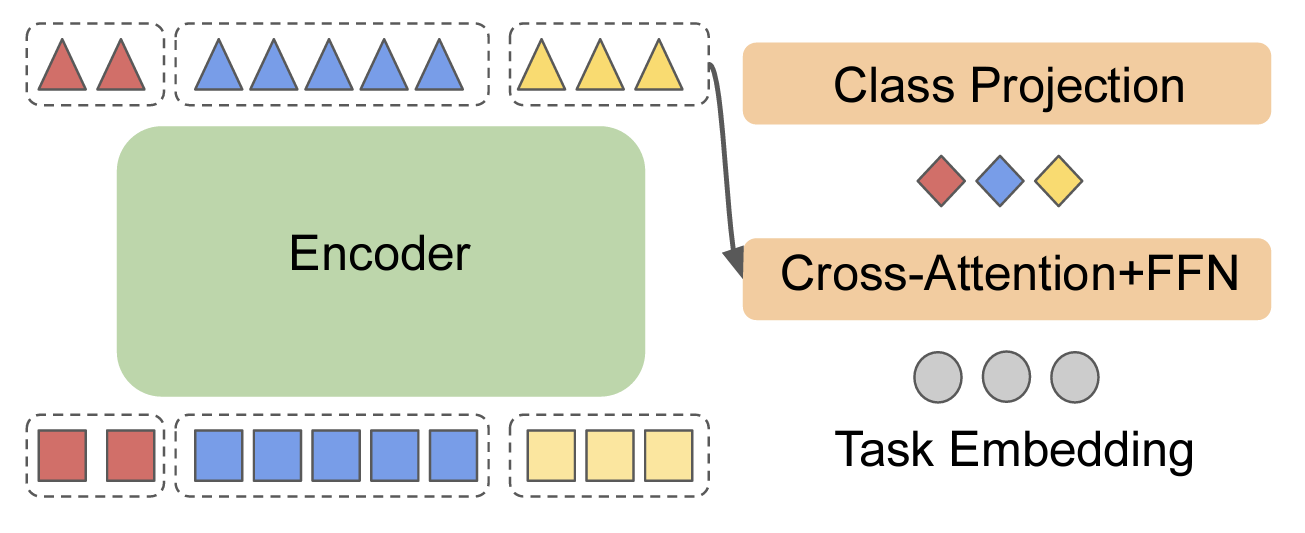

EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks

EncT5 (Unofficial) Pytorch Implementation of EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks About Finetune T5 model for classification & r

This is the repo of the manuscript "Dual-branch Attention-In-Attention Transformer for speech enhancement"

DB-AIAT: A Dual-branch attention-in-attention transformer for single-channel SE

Self-supervised learning (SSL) is a method of machine learning

Self-supervised learning (SSL) is a method of machine learning. It learns from unlabeled sample data. It can be regarded as an intermediate form between supervised and unsupervised learning.

My self-hosting infrastructure, fully automated from empty disk to operating services

Khue's Homelab Current status: ALPHA This project utilizes Infrastructure as Code to automate provisioning, operating, and updating self-hosted servic

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images

Multimodal Co-Attention Transformer (MCAT) for Survival Prediction in Gigapixel Whole Slide Images [ICCV 2021] © Mahmood Lab - This code is made avail

Source code of all the projects of Udacity Self-Driving Car Engineer Nanodegree.

self-driving-car In this repository I will share the source code of all the projects of Udacity Self-Driving Car Engineer Nanodegree. Hope this might

Static Features Classifier - A static features classifier for Point-Could clusters using an Attention-RNN model

Static Features Classifier This is a static features classifier for Point-Could

Built a deep neural network (DNN) that functions as an end-to-end machine translation pipeline

Built a deep neural network (DNN) that functions as an end-to-end machine translation pipeline. The pipeline accepts english text as input and returns the French translation.

This project is the implementation template for HW 0 and HW 1 for both the programming and non-programming tracks

This project is the implementation template for HW 0 and HW 1 for both the programming and non-programming tracks

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

Pytorch implementation of ICASSP 2022 paper Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

MODSKIN-LOLPRO-updater: The mod is fkn 10y old and has'nt a self-updater

The mod is fkn 10y old and has'nt a self-updater. To use it just run the exec, wait some seconds, and it will run the new modsk

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images Histological Image Segmentation This

BioPy is a collection (in-progress) of biologically-inspired algorithms written in Python

BioPy is a collection (in-progress) of biologically-inspired algorithms written in Python. Some of the algorithms included are mor

PyToch implementation of A Novel Self-supervised Learning Task Designed for Anomaly Segmentation

Self-Supervised Anomaly Segmentation Intorduction This is a PyToch implementation of A Novel Self-supervised Learning Task Designed for Anomaly Segmen

Transformer in Vision

Transformer-in-Vision Recent Transformer-based CV and related works. Welcome to comment/contribute! Keep updated. Resource SCENIC: A JAX Library for C

A curated list of efficient attention modules

awesome-fast-attention A curated list of efficient attention modules

GalaXC: Graph Neural Networks with Labelwise Attention for Extreme Classification

GalaXC GalaXC: Graph Neural Networks with Labelwise Attention for Extreme Classification @InProceedings{Saini21, author = {Saini, D. and Jain,

Meta Self-learning for Multi-Source Domain Adaptation: A Benchmark

Meta Self-Learning for Multi-Source Domain Adaptation: A Benchmark Project | Arxiv | YouTube | | Abstract In recent years, deep learning-based methods

Fuzzware is a project for automated, self-configuring fuzzing of firmware images

Fuzzware Fuzzware is a project for automated, self-configuring fuzzing of firmware images. The idea of this project is to configure the memory ranges

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers

Pose Transformers: Human Motion Prediction with Non-Autoregressive Transformers This is the repo used for human motion prediction with non-autoregress

Seq2seq attn - Use the Seq2Seq method to implement machine translation and introduce Attention mechanism to improve the results

Seq2seq_attn Use the Seq2Seq method to implement machine translation and use the

A complete, self-contained example for training ImageNet at state-of-the-art speed with FFCV

ffcv ImageNet Training A minimal, single-file PyTorch ImageNet training script designed for hackability. Run train_imagenet.py to get... ...high accur

Unofficial PyTorch Implementation of "Augmenting Convolutional networks with attention-based aggregation"

Pytorch Implementation of Augmenting Convolutional networks with attention-based aggregation This is the unofficial PyTorch Implementation of "Augment

No-Reference Image Quality Assessment via Transformers, Relative Ranking, and Self-Consistency

This repository contains the implementation for the paper: No-Reference Image Quality Assessment via Transformers, Relative Ranking, and Self-Consiste

Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechanism

Period-alternatives-of-Softmax Experimental Demo for our paper 'Escaping the Gradient Vanishing: Periodic Alternatives of Softmax in Attention Mechani

Official implementation of "Refiner: Refining Self-attention for Vision Transformers".

RefinerViT This repo is the official implementation of "Refiner: Refining Self-attention for Vision Transformers". The repo is build on top of timm an

RETRO-pytorch - Implementation of RETRO, Deepmind's Retrieval based Attention net, in Pytorch

RETRO - Pytorch (wip) Implementation of RETRO, Deepmind's Retrieval based Attent

An official PyTorch Implementation of Boundary-aware Self-supervised Learning for Video Scene Segmentation (BaSSL)

An official PyTorch Implementation of Boundary-aware Self-supervised Learning for Video Scene Segmentation (BaSSL)

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

NeWT: Natural World Tasks

NeWT: Natural World Tasks This repository contains resources for working with the NeWT dataset. ❗ At this time the binary tasks are not publicly avail

Implementation of Memory-Compressed Attention, from the paper "Generating Wikipedia By Summarizing Long Sequences"

Memory Compressed Attention Implementation of the Self-Attention layer of the proposed Memory-Compressed Attention, in Pytorch. This repository offers

Official PyTorch code for "BAM: Bottleneck Attention Module (BMVC2018)" and "CBAM: Convolutional Block Attention Module (ECCV2018)"

BAM and CBAM Official PyTorch code for "BAM: Bottleneck Attention Module (BMVC2018)" and "CBAM: Convolutional Block Attention Module (ECCV2018)" Updat

An implementation of the efficient attention module.

Efficient Attention An implementation of the efficient attention module. Description Efficient attention is an attention mechanism that substantially

GCNet: Non-local Networks Meet Squeeze-Excitation Networks and Beyond

GCNet for Object Detection By Yue Cao, Jiarui Xu, Stephen Lin, Fangyun Wei, Han Hu. This repo is a official implementation of "GCNet: Non-local Networ

This repository contains an implementation of the Permutohedral Attention Module in Pytorch

Permutohedral_attention_module This repository contains an implementation of the Permutohedral Attention Module

The code for Expectation-Maximization Attention Networks for Semantic Segmentation (ICCV'2019 Oral)

EMANet News The bug in loading the pretrained model is now fixed. I have updated the .pth. To use it, download it again. EMANet-101 gets 80.99 on the

Pytorch implementation of Compressive Transformers, from Deepmind

Compressive Transformer in Pytorch Pytorch implementation of Compressive Transformers, a variant of Transformer-XL with compressed memory for long-ran

Implementation of Axial attention - attending to multi-dimensional data efficiently

Axial Attention Implementation of Axial attention in Pytorch. A simple but powerful technique to attend to multi-dimensional data efficiently. It has

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch

Implementing SYNTHESIZER: Rethinking Self-Attention in Transformer Models using Pytorch Reference Paper URL Author: Yi Tay, Dara Bahri, Donald Metzler

My take on a practical implementation of Linformer for Pytorch.

Linformer Pytorch Implementation A practical implementation of the Linformer paper. This is attention with only linear complexity in n, allowing for v

Implementation of Kronecker Attention in Pytorch

Kronecker Attention Pytorch Implementation of Kronecker Attention in Pytorch. Results look less than stellar, but if someone found some context where

Implementation of Memformer, a Memory-augmented Transformer, in Pytorch

Memformer - Pytorch Implementation of Memformer, a Memory-augmented Transformer, in Pytorch. It includes memory slots, which are updated with attentio

![[NeurIPS 2020] Official Implementation:](https://github.com/giannisdaras/smyrf/raw/master/visuals/quality_degradation.png)

[NeurIPS 2020] Official Implementation: "SMYRF: Efficient Attention using Asymmetric Clustering".

SMYRF: Efficient attention using asymmetric clustering Get started: Abstract We propose a novel type of balanced clustering algorithm to approximate a

Action Recognition for Self-Driving Cars

Action Recognition for Self-Driving Cars This repo contains the codes for the 2021 Fall semester project "Action Recognition for Self-Driving Cars" at

Self-Supervised Methods for Noise-Removal

SSMNR | Self-Supervised Methods for Noise Removal Image denoising is the task of removing noise from an image, which can be formulated as the task of

![[ACM MM 2021] TSA-Net: Tube Self-Attention Network for Action Quality Assessment](https://github.com/Shunli-Wang/TSA-Net/raw/main/fig/TSA-Net.jpg)

[ACM MM 2021] TSA-Net: Tube Self-Attention Network for Action Quality Assessment

Tube Self-Attention Network (TSA-Net) This repository contains the PyTorch implementation for paper TSA-Net: Tube Self-Attention Network for Action Qu

Source code for the BMVC-2021 paper "SimReg: Regression as a Simple Yet Effective Tool for Self-supervised Knowledge Distillation".

SimReg: A Simple Regression Based Framework for Self-supervised Knowledge Distillation Source code for the paper "SimReg: Regression as a Simple Yet E

Predicts an answer in yes or no.

Oui-ou-non-prediction Predicts an answer in 'yes' or 'no'. It is based on the game 'effeuiller la marguerite' in which the person plucks flower petals

Non-stationary GP package written from scratch in PyTorch

NSGP-Torch Examples gpytorch model with skgpytorch # Import packages import torch from regdata import NonStat2D from gpytorch.kernels import RBFKernel

LightNet++: Boosted Light-weighted Networks for Real-time Semantic Segmentation

LightNet++ !!!New Repo.!!! ⇒ EfficientNet.PyTorch: Concise, Modular, Human-friendly PyTorch implementation of EfficientNet with Pre-trained Weights !!

The official implementation of ELSA: Enhanced Local Self-Attention for Vision Transformer

ELSA: Enhanced Local Self-Attention for Vision Transformer By Jingkai Zhou, Pich

Calibre Libgen Non-fiction / Sci-tech store plugin

CalibreLibgenSci A Libgen Non-Fiction/Sci-tech store plugin for Calibre Installation Download the latest zip file release from here Open Calibre Navig

Self-supervised spatio-spectro-temporal represenation learning for EEG analysis

EEG-Oriented Self-Supervised Learning and Cluster-Aware Adaptation This repository provides a tensorflow implementation of a submitted paper: EEG-Orie

Code repository for Self-supervised Structure-sensitive Learning, CVPR'17

Self-supervised Structure-sensitive Learning (SSL) Ke Gong, Xiaodan Liang, Xiaohui Shen, Liang Lin, "Look into Person: Self-supervised Structure-sensi

Course on computational design, non-linear optimization, and dynamics of soft systems at UIUC.

Computational Design and Dynamics of Soft Systems · This is a repository that contains the source code for generating the lecture notes, handouts, exe

A car learns to drive in a small 2D environment using the NEAT-Algorithm with Pygame

Self-driving-car-with-Pygame A car learns to drive in a small 2D environment using the NEAT-Algorithm with Pygame Description A car has 5 sensors ("ey

Repository of Vision Transformer with Deformable Attention

Vision Transformer with Deformable Attention This repository contains the code for the paper Vision Transformer with Deformable Attention [arXiv]. Int

We present a regularized self-labeling approach to improve the generalization and robustness properties of fine-tuning.

Overview This repository provides the implementation for the paper "Improved Regularization and Robustness for Fine-tuning in Neural Networks", which

This repository provides the official implementation of 'Learning to ignore: rethinking attention in CNNs' accepted in BMVC 2021.

inverse_attention This repository provides the official implementation of 'Learning to ignore: rethinking attention in CNNs' accepted in BMVC 2021. Le

Scenarios, tutorials and demos for Autonomous Driving

The Autonomous Driving Cookbook (Preview) NOTE: This project is developed and being maintained by Project Road Runner at Microsoft Garage. This is cur

Face Depixelizer based on "PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models" repository.

NOTE We have noticed a lot of concern that PULSE will be used to identify individuals whose faces have been blurred out. We want to emphasize that thi

A self-bot for discord, written in Python, which will send you notifications to your desktop if it detects an intruder on your discord server

A self-bot for discord, written in Python, which will send you notifications to your desktop if it detects an intruder on your discord server

The self-hostable proxy tunnel

TTUN Server The self-hostable proxy tunnel. Running Running: docker run -e TUNNEL_DOMAIN=Your tunnel domain -e SECURE=True if using SSL ghcr.io/to

Unofficial PyTorch reimplementation of the paper Swin Transformer V2: Scaling Up Capacity and Resolution

PyTorch reimplementation of the paper Swin Transformer V2: Scaling Up Capacity and Resolution [arXiv 2021].

The official TensorFlow implementation of the paper Action Transformer: A Self-Attention Model for Short-Time Pose-Based Human Action Recognition

Action Transformer A Self-Attention Model for Short-Time Human Action Recognition This repository contains the official TensorFlow implementation of t

Alignment Attention Fusion framework for Few-Shot Object Detection

AAF framework Framework generalities This repository contains the code of the AAF framework proposed in this paper. The main idea behind this work is

The dynamics of representation learning in shallow, non-linear autoencoders

The dynamics of representation learning in shallow, non-linear autoencoders The package is written in python and uses the pytorch implementation to ML

Towards Boosting the Accuracy of Non-Latin Scene Text Recognition

Convolutional Recurrent Neural Network + CTCLoss | STAR-Net Code for paper "Towards Boosting the Accuracy of Non-Latin Scene Text Recognition" Depende

Implementation of a Transformer using ReLA (Rectified Linear Attention)

ReLA (Rectified Linear Attention) Transformer Implementation of a Transformer using ReLA (Rectified Linear Attention). It will also contain an attempt

Gathers machine learning and Tensorflow deep learning models for NLP problems, 1.13 Tensorflow 2.0

NLP-Models-Tensorflow, Gathers machine learning and tensorflow deep learning models for NLP problems, code simplify inside Jupyter Notebooks 100%. Tab

MVS2D: Efficient Multi-view Stereo via Attention-Driven 2D Convolutions

MVS2D: Efficient Multi-view Stereo via Attention-Driven 2D Convolutions Project Page | Paper If you find our work useful for your research, please con

Official PyTorch implementation of "The Center of Attention: Center-Keypoint Grouping via Attention for Multi-Person Pose Estimation" (ICCV 21).

CenterGroup This the official implementation of our ICCV 2021 paper The Center of Attention: Center-Keypoint Grouping via Attention for Multi-Person P

Code for "Multi-Time Attention Networks for Irregularly Sampled Time Series", ICLR 2021.

Multi-Time Attention Networks (mTANs) This repository contains the PyTorch implementation for the paper Multi-Time Attention Networks for Irregularly