778 Repositories

Python post-training-quantization Libraries

Reference implementation of code generation projects from Facebook AI Research. General toolkit to apply machine learning to code, from dataset creation to model training and evaluation. Comes with pretrained models.

This repository is a toolkit to do machine learning for programming languages. It implements tokenization, dataset preprocessing, model training and m

A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers.

ViTGAN: Training GANs with Vision Transformers A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers. Refer

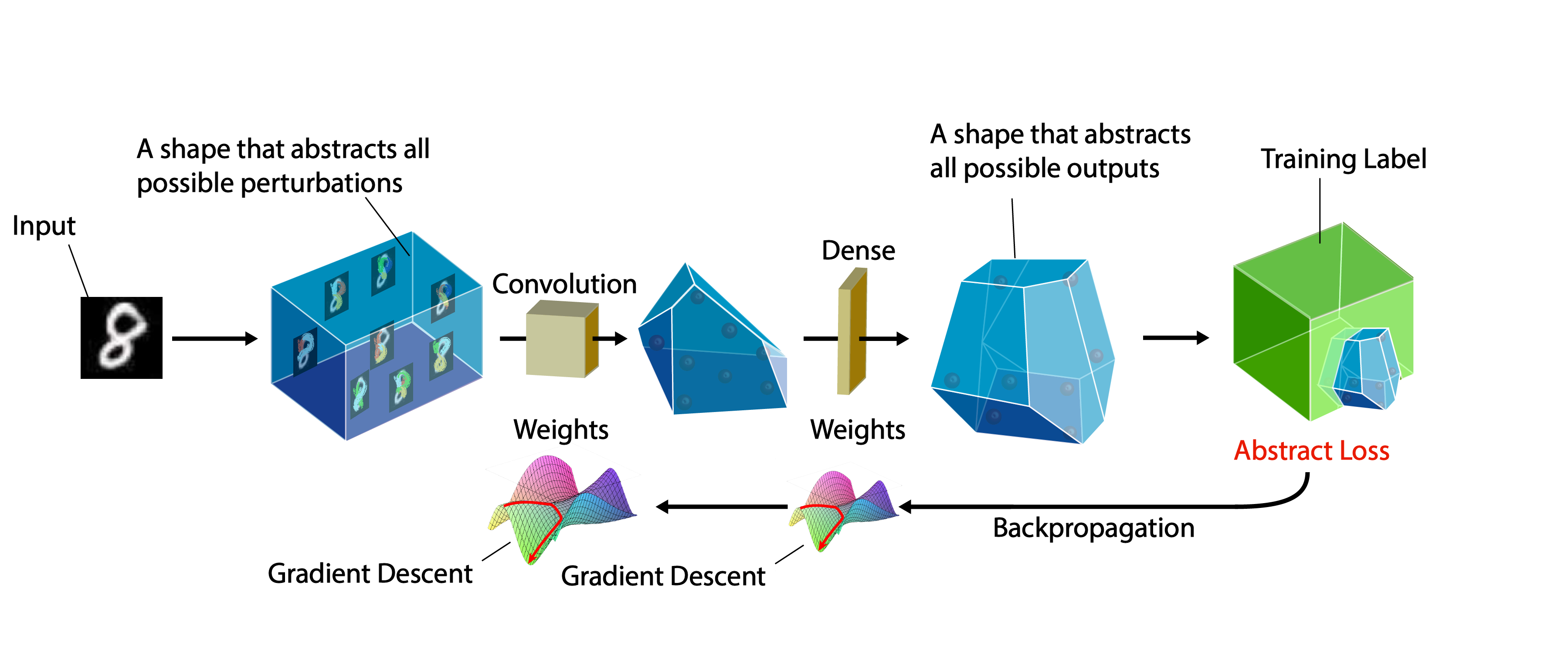

A certifiable defense against adversarial examples by training neural networks to be provably robust

DiffAI v3 DiffAI is a system for training neural networks to be provably robust and for proving that they are robust. The system was developed for the

The Hailo Model Zoo includes pre-trained models and a full building and evaluation environment

Hailo Model Zoo The Hailo Model Zoo provides pre-trained models for high-performance deep learning applications. Using the Hailo Model Zoo you can mea

A PyTorch implementation of "Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks" (KDD 2019).

ClusterGCN ⠀⠀ A PyTorch implementation of "Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks" (KDD 2019). A

Self-training for Few-shot Transfer Across Extreme Task Differences

Self-training for Few-shot Transfer Across Extreme Task Differences (STARTUP) Introduction This repo contains the official implementation of the follo

PyTorch implementation of our Adam-NSCL algorithm from our CVPR2021 (oral) paper "Training Networks in Null Space for Continual Learning"

Adam-NSCL This is a PyTorch implementation of Adam-NSCL algorithm for continual learning from our CVPR2021 (oral) paper: Title: Training Networks in N

StackRec: Efficient Training of Very Deep Sequential Recommender Models by Iterative Stacking

StackRec: Efficient Training of Very Deep Sequential Recommender Models by Iterative Stacking Datasets You can download datasets that have been pre-pr

Official repository for the paper, MidiBERT-Piano: Large-scale Pre-training for Symbolic Music Understanding.

MidiBERT-Piano Authors: Yi-Hui (Sophia) Chou, I-Chun (Bronwin) Chen Introduction This is the official repository for the paper, MidiBERT-Piano: Large-

AlphaNet Improved Training of Supernet with Alpha-Divergence

AlphaNet: Improved Training of Supernet with Alpha-Divergence This repository contains our PyTorch training code, evaluation code and pretrained model

ONNX Runtime for PyTorch accelerates PyTorch model training using ONNX Runtime.

Accelerate PyTorch models with ONNX Runtime

Решения, подсказки, тесты и утилиты для тренировки по алгоритмам от Яндекса.

Решения и подсказки к тренировке по алгоритмам от Яндекса Что есть внутри Решения с подсказками и комментариями; рекомендую сначала смотреть md файл п

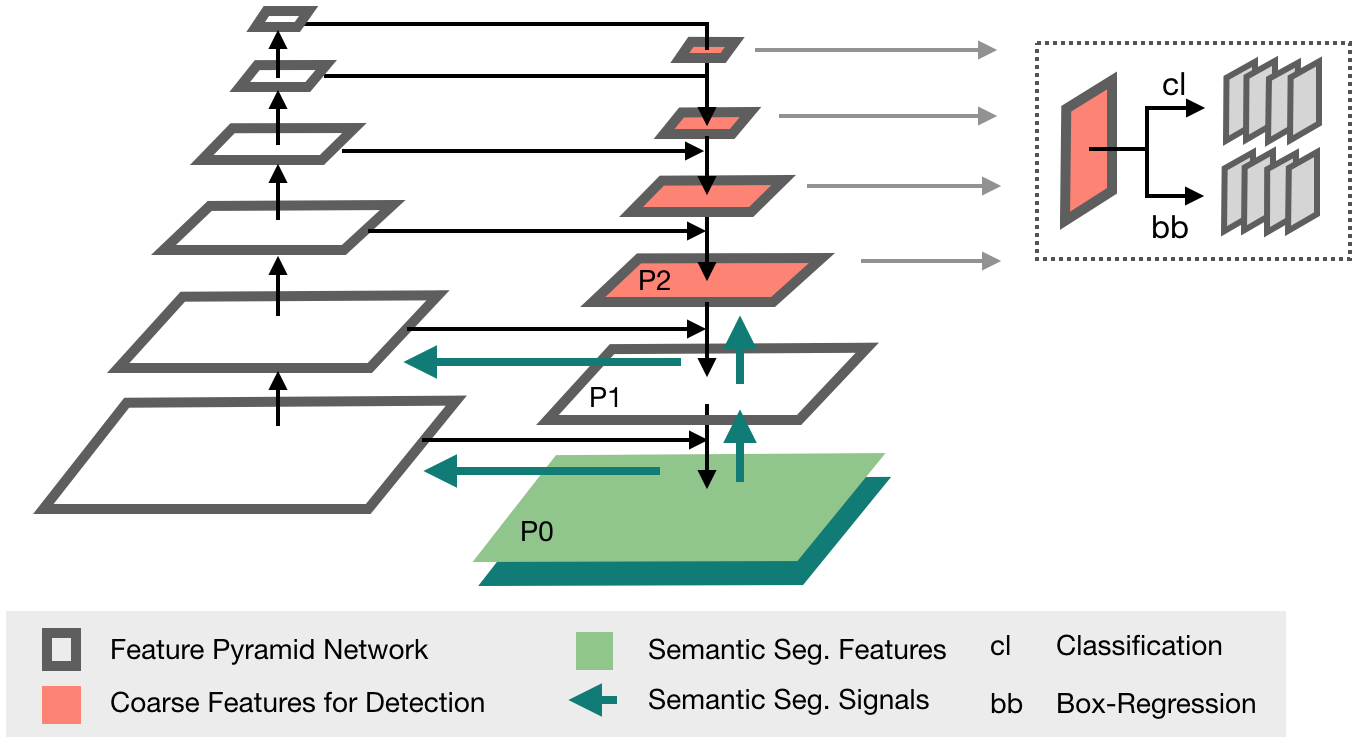

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

HyperPose is a library for building high-performance custom pose estimation applications.

HyperPose is a library for building high-performance custom pose estimation applications.

Piotr - IoT firmware emulation instrumentation for training and research

Piotr: Pythonic IoT exploitation and Research Introduction to Piotr Piotr is an emulation helper for Qemu that provides a convenient way to create, sh

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

WAGMA-SGD is a decentralized asynchronous SGD for distributed deep learning training based on model averaging.

WAGMA-SGD is a decentralized asynchronous SGD based on wait-avoiding group model averaging. The synchronization is relaxed by making the collectives externally-triggerable, namely, a collective can be initiated without requiring that all the processes enter it. It partially reduces the data within non-overlapping groups of process, improving the parallel scalability.

Bagua is a flexible and performant distributed training algorithm development framework.

Bagua is a flexible and performant distributed training algorithm development framework.

Heimdall watchtower automatically sends you emails to notify you of the latest progress of your deep learning programs.

This software automatically sends you emails to notify you of the latest progress of your deep learning programs.

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Code for the paper "Balancing Training for Multilingual Neural Machine Translation, ACL 2020"

Balancing Training for Multilingual Neural Machine Translation Implementation of the paper Balancing Training for Multilingual Neural Machine Translat

This is a cryptocurrency trading bot that analyses Reddit sentiment and places trades on Binance based on reddit post and comment sentiment. If you like this project please consider donating via brave. Thanks.

This is a cryptocurrency trading bot that analyses Reddit sentiment and places trades on Binance based on reddit post and comment sentiment. The bot f

Shared code for training sentence embeddings with Flax / JAX

flax-sentence-embeddings This repository will be used to share code for the Flax / JAX community event to train sentence embeddings on 1B+ training pa

Source code for the paper "PLOME: Pre-training with Misspelled Knowledge for Chinese Spelling Correction" in ACL2021

PLOME:Pre-training with Misspelled Knowledge for Chinese Spelling Correction (ACL2021) This repository provides the code and data of the work in ACL20

This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code for training a DPR model then continuing training with RAG.

KGI (Knowledge Graph Induction) for slot filling This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code fo

Official code of paper "PGT: A Progressive Method for Training Models on Long Videos" on CVPR2021

PGT Code for paper PGT: A Progressive Method for Training Models on Long Videos. Install Run pip install -r requirements.txt. Run python setup.py buil

This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transformations at CVPR'21. According to some product reasons, we are not planning to release the training/testing codes and models. However, we will release the dataset and the scripts to prepare the dataset.

TransFill-Reference-Inpainting This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transf

The source codes for ACL 2021 paper 'BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data'

BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data This repository provides the implementation details for

YoloV5 implemented by TensorFlow2 , with support for training, evaluation and inference.

Efficient implementation of YOLOV5 in TensorFlow2

Bifrost C2. Open-source post-exploitation using Discord API

Bifrost Command and Control What's Bifrost? Bifrost is an open-source Discord BOT that works as Command and Control (C2). This C2 uses Discord API for

Orthogonal Over-Parameterized Training

The inductive bias of a neural network is largely determined by the architecture and the training algorithm. To achieve good generalization, how to effectively train a neural network is of great importance. We propose a novel orthogonal over-parameterized training (OPT) framework that can provably minimize the hyperspherical energy which characterizes the diversity of neurons on a hypersphere. See our previous work -- MHE for an in-depth introduction.

quantize aware training package for NCNN on pytorch

ncnnqat ncnnqat is a quantize aware training package for NCNN on pytorch. Table of Contents ncnnqat Table of Contents Installation Usage Code Examples

TAP: Text-Aware Pre-training for Text-VQA and Text-Caption, CVPR 2021 (Oral)

TAP: Text-Aware Pre-training TAP: Text-Aware Pre-training for Text-VQA and Text-Caption by Zhengyuan Yang, Yijuan Lu, Jianfeng Wang, Xi Yin, Dinei Flo

Code for the paper One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation, CVPR 2021.

One Thing One Click One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation (CVPR2021) Code for the paper One Thi

CVPR 2021 Official Pytorch Code for UC2: Universal Cross-lingual Cross-modal Vision-and-Language Pre-training

UC2 UC2: Universal Cross-lingual Cross-modal Vision-and-Language Pre-training Mingyang Zhou, Luowei Zhou, Shuohang Wang, Yu Cheng, Linjie Li, Zhou Yu,

Unconstrained Text Detection with Box Supervisionand Dynamic Self-Training

SelfText Beyond Polygon: Unconstrained Text Detection with Box Supervisionand Dynamic Self-Training Introduction This is a PyTorch implementation of "

How Do Adam and Training Strategies Help BNNs Optimization? In ICML 2021.

AdamBNN This is the pytorch implementation of our paper "How Do Adam and Training Strategies Help BNNs Optimization?", published in ICML 2021. In this

VIMPAC: Video Pre-Training via Masked Token Prediction and Contrastive Learning

This is a release of our VIMPAC paper to illustrate the implementations. The pretrained checkpoints and scripts will be soon open-sourced in HuggingFace transformers.

Secure Distributed Training at Scale

Secure Distributed Training at Scale This repository contains the implementation of experiments from the paper "Secure Distributed Training at Scale"

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation models. It contains 17 different amateur subjects performing 30 sports-related actions each, for a total of 510 action clips.

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation mode

🔮 Execution time predictions for deep neural network training iterations across different GPUs.

Habitat: A Runtime-Based Computational Performance Predictor for Deep Neural Network Training Habitat is a tool that predicts a deep neural network's

2021海华AI挑战赛·中文阅读理解·技术组·第三名

文字是人类用以记录和表达的最基本工具,也是信息传播的重要媒介。透过文字与符号,我们可以追寻人类文明的起源,可以传播知识与经验,读懂文字是认识与了解的第一步。对于人工智能而言,它的核心问题之一就是认知,而认知的核心则是语义理解。

Code for our paper "Transfer Learning for Sequence Generation: from Single-source to Multi-source" in ACL 2021.

TRICE: a task-agnostic transferring framework for multi-source sequence generation This is the source code of our work Transfer Learning for Sequence

The official PyTorch implementation of recent paper - SAINT: Improved Neural Networks for Tabular Data via Row Attention and Contrastive Pre-Training

This repository is the official PyTorch implementation of SAINT. Find the paper on arxiv SAINT: Improved Neural Networks for Tabular Data via Row Atte

Training data extraction on GPT-2

Training data extraction from GPT-2 This repository contains code for extracting training data from GPT-2, following the approach outlined in the foll

Degree-Quant: Quantization-Aware Training for Graph Neural Networks.

Degree-Quant This repo provides a clean re-implementation of the code associated with the paper Degree-Quant: Quantization-Aware Training for Graph Ne

![[CVPR 2021]](https://github.com/VITA-Group/CV_LTH_Pre-training/raw/main/Figs/Teaser.png)

[CVPR 2021] "The Lottery Tickets Hypothesis for Supervised and Self-supervised Pre-training in Computer Vision Models" Tianlong Chen, Jonathan Frankle, Shiyu Chang, Sijia Liu, Yang Zhang, Michael Carbin, Zhangyang Wang

The Lottery Tickets Hypothesis for Supervised and Self-supervised Pre-training in Computer Vision Models Codes for this paper The Lottery Tickets Hypo

A no-BS, dead-simple training visualizer for tf-keras

A no-BS, dead-simple training visualizer for tf-keras TrainingDashboard Plot inter-epoch and intra-epoch loss and metrics within a jupyter notebook wi

An Efficient Training Approach for Very Large Scale Face Recognition or F²C for simplicity.

Fast Face Classification (F²C) This is the code of our paper An Efficient Training Approach for Very Large Scale Face Recognition or F²C for simplicit

This is the research repository for Vid2Doppler: Synthesizing Doppler Radar Data from Videos for Training Privacy-Preserving Activity Recognition.

Vid2Doppler: Synthesizing Doppler Radar Data from Videos for Training Privacy-Preserving Activity Recognition This is the research repository for Vid2

simpleT5 is built on top of PyTorch-lightning⚡️ and Transformers🤗 that lets you quickly train your T5 models.

Quickly train T5 models in just 3 lines of code + ONNX support simpleT5 is built on top of PyTorch-lightning ⚡️ and Transformers 🤗 that lets you quic

FluxTraining.jl gives you an endlessly extensible training loop for deep learning

A flexible neural net training library inspired by fast.ai

(CVPR2021) Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Kaleido-BERT: Vision-Language Pre-training on Fashion Domain Mingchen Zhuge*, Dehong Gao*, Deng-Ping Fan#, Linbo Jin, Ben Chen, Haoming Zhou, Minghui

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

Proof-of-concept obfuscation toolkit for C# post-exploitation tools

InvisibilityCloak Proof-of-concept obfuscation toolkit for C# post-exploitation tools. This will perform the below actions for a C# visual studio proj

ActNN: Reducing Training Memory Footprint via 2-Bit Activation Compressed Training

ActNN : Activation Compressed Training This is the official project repository for ActNN: Reducing Training Memory Footprint via 2-Bit Activation Comp

![[CVPR'21 Oral] Seeing Out of tHe bOx: End-to-End Pre-training for Vision-Language Representation Learning](https://github.com/researchmm/soho/raw/master/resources/soho.png)

[CVPR'21 Oral] Seeing Out of tHe bOx: End-to-End Pre-training for Vision-Language Representation Learning

Seeing Out of tHe bOx: End-to-End Pre-training for Vision-Language Representation Learning [CVPR'21, Oral] By Zhicheng Huang*, Zhaoyang Zeng*, Yupan H

Unsupervised Pre-training for Person Re-identification (LUPerson)

LUPerson Unsupervised Pre-training for Person Re-identification (LUPerson). The repository is for our CVPR2021 paper Unsupervised Pre-training for Per

EfficientNetV2 implementation using PyTorch

EfficientNetV2-S implementation using PyTorch Train Steps Configure imagenet path by changing data_dir in train.py python main.py --benchmark for mode

Crowbar - A windows post exploitation tool

Crowbar - A windows post exploitation tool Status - ✔️ This project is now considered finished. Any updates from now on will most likely be new script

Self-training with Weak Supervision (NAACL 2021)

This repo holds the code for our weak supervision framework, ASTRA, described in our NAACL 2021 paper: "Self-Training with Weak Supervision"

Standalone pre-training recipe with JAX+Flax

Sabertooth Sabertooth is standalone pre-training recipe based on JAX+Flax, with data pipelines implemented in Rust. It runs on CPU, GPU, and/or TPU, b

A PyTorch Lightning solution to training OpenAI's CLIP from scratch.

train-CLIP 📎 A PyTorch Lightning solution to training CLIP from scratch. Goal ⚽ Our aim is to create an easy to use Lightning implementation of OpenA

(SIGIR2020) “Asymmetric Tri-training for Debiasing Missing-Not-At-Random Explicit Feedback’’

Asymmetric Tri-training for Debiasing Missing-Not-At-Random Explicit Feedback About This repository accompanies the real-world experiments conducted i

A general-purpose multi-agent training framework.

MALib A general-purpose multi-agent training framework. Installation step1: build environment conda create -n malib python==3.7 -y conda activate mali

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more than just a paraphrasing model.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

Physics-Aware Training (PAT) is a method to train real physical systems with backpropagation.

Physics-Aware Training (PAT) is a method to train real physical systems with backpropagation. It was introduced in Wright, Logan G. & Onodera, Tatsuhiro et al. (2021)1 to train Physical Neural Networks (PNNs) - neural networks whose building blocks are physical systems.

PyTorch code for Vision Transformers training with the Self-Supervised learning method DINO

Self-Supervised Vision Transformers with DINO PyTorch implementation and pretrained models for DINO. For details, see Emerging Properties in Self-Supe

Code to use Augmented Shapiro Wilks Stopping, as well as code for the paper "Statistically Signifigant Stopping of Neural Network Training"

This codebase is being actively maintained, please create and issue if you have issues using it Basics All data files are included under losses and ea

[CVPR 2021] MiVOS - Mask Propagation module. Reproduced STM (and better) with training code :star2:. Semi-supervised video object segmentation evaluation.

MiVOS (CVPR 2021) - Mask Propagation Ho Kei Cheng, Yu-Wing Tai, Chi-Keung Tang [arXiv] [Paper PDF] [Project Page] [Papers with Code] This repo impleme

DiffQ performs differentiable quantization using pseudo quantization noise. It can automatically tune the number of bits used per weight or group of weights, in order to achieve a given trade-off between model size and accuracy.

Differentiable Model Compression via Pseudo Quantization Noise DiffQ performs differentiable quantization using pseudo quantization noise. It can auto

TorchShard is a lightweight engine for slicing a PyTorch tensor into parallel shards

TorchShard is a lightweight engine for slicing a PyTorch tensor into parallel shards. It can reduce GPU memory and scale up the training when the model has massive linear layers (e.g., ViT, BERT and GPT) or huge classes (millions). It has the same API design as PyTorch.

A library for preparing, training, and evaluating scalable deep learning hybrid recommender systems using PyTorch.

collie_recs Collie is a library for preparing, training, and evaluating implicit deep learning hybrid recommender systems, named after the Border Coll

TorchPQ is a python library for Approximate Nearest Neighbor Search (ANNS) and Maximum Inner Product Search (MIPS) on GPU using Product Quantization (PQ) algorithm.

Efficient implementations of Product Quantization and its variants using Pytorch and CUDA

A Practical Debugging Tool for Training Deep Neural Networks

Cockpit is a visual and statistical debugger specifically designed for deep learning!

PlenOctrees: NeRF-SH Training & Conversion

PlenOctrees Official Repo: NeRF-SH training and conversion This repository contains code to train NeRF-SH and to extract the PlenOctree, constituting

Pytorch implementation of "Training a 85.4% Top-1 Accuracy Vision Transformer with 56M Parameters on ImageNet"

Token Labeling: Training an 85.4% Top-1 Accuracy Vision Transformer with 56M Parameters on ImageNet (arxiv) This is a Pytorch implementation of our te

FID calculation with proper image resizing and quantization steps

clean-fid: Fixing Inconsistencies in FID Project | Paper The FID calculation involves many steps that can produce inconsistencies in the final metric.

(CVPR 2021) ST3D: Self-training for Unsupervised Domain Adaptation on 3D Object Detection

ST3D Code release for the paper ST3D: Self-training for Unsupervised Domain Adaptation on 3D Object Detection, CVPR 2021 Authors: Jihan Yang*, Shaoshu

Coreference resolution for English, German and Polish, optimised for limited training data and easily extensible for further languages

Coreferee Author: Richard Paul Hudson, msg systems ag 1. Introduction 1.1 The basic idea 1.2 Getting started 1.2.1 English 1.2.2 German 1.2.3 Polish 1

Training code of Spatial Time Memory Network. Semi-supervised video object segmentation.

Training-code-of-STM This repository fully reproduces Space-Time Memory Networks Performance on Davis17 val set&Weights backbone training stage traini

Focus on Algorithm Design, Not on Data Wrangling

The dataTap Python library is the primary interface for using dataTap's rich data management tools. Create datasets, stream annotations, and analyze model performance all with one library.

skweak: A software toolkit for weak supervision applied to NLP tasks

Labelled data remains a scarce resource in many practical NLP scenarios. This is especially the case when working with resource-poor languages (or text domains), or when using task-specific labels without pre-existing datasets. The only available option is often to collect and annotate texts by hand, which is expensive and time-consuming.

Source code for AAAI20 "Generating Persona Consistent Dialogues by Exploiting Natural Language Inference".

Generating Persona Consistent Dialogues by Exploiting Natural Language Inference Source code for RCDG model in AAAI20 Generating Persona Consistent Di

![[CVPRW 21]](https://github.com/VITA-Group/BNN_NoBN/raw/main/Figs/res.png)

[CVPRW 21] "BNN - BN = ? Training Binary Neural Networks without Batch Normalization", Tianlong Chen, Zhenyu Zhang, Xu Ouyang, Zechun Liu, Zhiqiang Shen, Zhangyang Wang

BNN - BN = ? Training Binary Neural Networks without Batch Normalization Codes for this paper BNN - BN = ? Training Binary Neural Networks without Bat

This repository allows you to anonymize sensitive information in images/videos. The solution is fully compatible with the DL-based training/inference solutions that we already published/will publish for Object Detection and Semantic Segmentation.

BMW-Anonymization-Api Data privacy and individuals’ anonymity are and always have been a major concern for data-driven companies. Therefore, we design

Diffgram - Supervised Learning Data Platform

Data Annotation, Data Labeling, Annotation Tooling, Training Data for Machine Learning

Official code of our work, Unified Pre-training for Program Understanding and Generation [NAACL 2021].

PLBART Code pre-release of our work, Unified Pre-training for Program Understanding and Generation accepted at NAACL 2021. Note. A detailed documentat

Sandbox for training deep learning networks

Deep learning networks This repo is used to research convolutional networks primarily for computer vision tasks. For this purpose, the repo contains (

Provided is code that demonstrates the training and evaluation of the work presented in the paper: "On the Detection of Digital Face Manipulation" published in CVPR 2020.

FFD Source Code Provided is code that demonstrates the training and evaluation of the work presented in the paper: "On the Detection of Digital Face M

I-BERT: Integer-only BERT Quantization

I-BERT: Integer-only BERT Quantization HuggingFace Implementation I-BERT is also available in the master branch of HuggingFace! Visit the following li

Based on the paper "Geometry-aware Instance-reweighted Adversarial Training" ICLR 2021 oral

Geometry-aware Instance-reweighted Adversarial Training This repository provides codes for Geometry-aware Instance-reweighted Adversarial Training (ht

Trading environnement for RL agents, backtesting and training.

TradzQAI Trading environnement for RL agents, backtesting and training. Live session with coinbasepro-python is finaly arrived ! Available sessions: L

Trading and Backtesting environment for training reinforcement learning agent or simple rule base algo.

TradingGym TradingGym is a toolkit for training and backtesting the reinforcement learning algorithms. This was inspired by OpenAI Gym and imitated th

Learning recognition/segmentation models without end-to-end training. 40%-60% less GPU memory footprint. Same training time. Better performance.

InfoPro-Pytorch The Information Propagation algorithm for training deep networks with local supervision. (ICLR 2021) Revisiting Locally Supervised Lea

Code for pre-training CharacterBERT models (as well as BERT models).

Pre-training CharacterBERT (and BERT) This is a repository for pre-training BERT and CharacterBERT. DISCLAIMER: The code was largely adapted from an o

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.