2334 Repositories

Python pre-trained-language-models Libraries

VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training [Arxiv] VideoMAE: Masked Autoencoders are Data-Efficient Learne

Code and pre-trained models for MultiMAE: Multi-modal Multi-task Masked Autoencoders

MultiMAE: Multi-modal Multi-task Masked Autoencoders Roman Bachmann*, David Mizrahi*, Andrei Atanov, Amir Zamir Website | arXiv | BibTeX Official PyTo

BDDM: Bilateral Denoising Diffusion Models for Fast and High-Quality Speech Synthesis

Bilateral Denoising Diffusion Models (BDDMs) This is the official PyTorch implementation of the following paper: BDDM: BILATERAL DENOISING DIFFUSION M

Lyrics generation with GPT2-based Transformer

HuggingArtists - Train a model to generate lyrics Create AI-Artist in just 5 minutes! 🚀 Run the demo notebook to train 🚀 Run the GUI demo to test Di

I will implement Fastai in each projects present in this repository.

DEEP LEARNING FOR CODERS WITH FASTAI AND PYTORCH The repository contains a list of the projects which I have worked on while reading the book Deep Lea

Accelerated NLP pipelines for fast inference on CPU and GPU. Built with Transformers, Optimum and ONNX Runtime.

Optimum Transformers Accelerated NLP pipelines for fast inference 🚀 on CPU and GPU. Built with 🤗 Transformers, Optimum and ONNX runtime. Installatio

![[CVPR 2022 Oral] Versatile Multi-Modal Pre-Training for Human-Centric Perception](https://github.com/hongfz16/HCMoCo/raw/main/assets/teaser.png)

[CVPR 2022 Oral] Versatile Multi-Modal Pre-Training for Human-Centric Perception

Versatile Multi-Modal Pre-Training for Human-Centric Perception Fangzhou Hong1 Liang Pan1 Zhongang Cai1,2,3 Ziwei Liu1* 1S-Lab, Nanyang Technologic

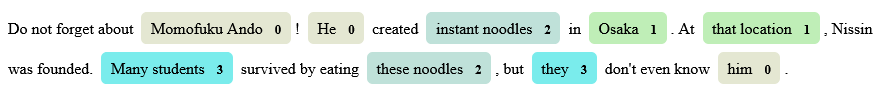

Entity Disambiguation as text extraction (ACL 2022)

ExtEnD: Extractive Entity Disambiguation This repository contains the code of ExtEnD: Extractive Entity Disambiguation, a novel approach to Entity Dis

A multi-lingual approach to AllenNLP CoReference Resolution along with a wrapper for spaCy.

Crosslingual Coreference Coreference is amazing but the data required for training a model is very scarce. In our case, the available training for non

ACL'22: Structured Pruning Learns Compact and Accurate Models

☕ CoFiPruning: Structured Pruning Learns Compact and Accurate Models This repository contains the code and pruned models for our ACL'22 paper Structur

![[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links](https://github.com/michiyasunaga/LinkBERT/raw/main/figs/overview.png)

[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links

LinkBERT: A Knowledgeable Language Model Pretrained with Document Links This repo provides the model, code & data of our paper: LinkBERT: Pretraining

Generate images from texts. In Russian

ruDALL-E Generate images from texts pip install rudalle==1.1.0rc0 🤗 HF Models: ruDALL-E Malevich (XL) ruDALL-E Emojich (XL) (readme here) ruDALL-E S

Lightning ⚡️ fast forecasting with statistical and econometric models.

Nixtla Statistical ⚡️ Forecast Lightning fast forecasting with statistical and econometric models StatsForecast offers a collection of widely used uni

Pre-crisis Risk Management for Personal Finance

Антикризисный риск-менеджмент личных финансов Риск-менеджмент личных финансов условиях санкций и/или финансового кризиса: делаем сегодня все, чтобы за

This repository contains the best Data Science free hand-picked resources to equip you with all the industry-driven skills and interview preparation kit.

Best Data Science Resources Hey, Data Enthusiasts out there! Finally, after lots of requests from the community I finally came up with the best free D

HugsVision is a easy to use huggingface wrapper for state-of-the-art computer vision

HugsVision is an open-source and easy to use all-in-one huggingface wrapper for computer vision. The goal is to create a fast, flexible and user-frien

A Python package develop for transportation spatio-temporal big data processing, analysis and visualization.

English 中文版 TransBigData Introduction TransBigData is a Python package developed for transportation spatio-temporal big data processing, analysis and

🙌Kart of 210+ projects based on machine learning, deep learning, computer vision, natural language processing and all. Show your support by ✨ this repository.

ML-ProjectKart 📌 Repository This kart showcases the finest collection of all projects based on machine learning, deep learning, computer vision, natu

Curso práctico: NLP de cero a cien 🤗

Curso Práctico: NLP de cero a cien Comprende todos los conceptos y arquitecturas clave del estado del arte del NLP y aplícalos a casos prácticos utili

This repository consists of a complete guide on natural language processing (NLP) in Python where we'll learn various techniques for implementing NLP including parsing & text processing and understand how to use NLP for text feature engineering.

Python_Natural_Language_Processing This repository contains tutorials on important topics related to Natural Language Processing (NPL). No. Name 01 01

Extracting Tables from Document Images using a Multi-stage Pipeline for Table Detection and Table Structure Recognition:

Multi-Type-TD-TSR Check it out on Source Code of our Paper: Multi-Type-TD-TSR Extracting Tables from Document Images using a Multi-stage Pipeline for

BigDetection: A Large-scale Benchmark for Improved Object Detector Pre-training

BigDetection: A Large-scale Benchmark for Improved Object Detector Pre-training By Likun Cai, Zhi Zhang, Yi Zhu, Li Zhang, Mu Li, Xiangyang Xue. This

StorSeismic: An approach to pre-train a neural network to store seismic data features

StorSeismic: An approach to pre-train a neural network to store seismic data features This repository contains codes and resources to reproduce experi

An easy-to-use framework for BERT models, with trainers, various NLP tasks and detailed annonations

FantasyBert English | 中文 Introduction An easy-to-use framework for BERT models, with trainers, various NLP tasks and detailed annonations. You can imp

RITA is a family of autoregressive protein models, developed by LightOn in collaboration with the OATML group at Oxford and the Debora Marks Lab at Harvard.

RITA: a Study on Scaling Up Generative Protein Sequence Models RITA is a family of autoregressive protein models, developed by a collaboration of Ligh

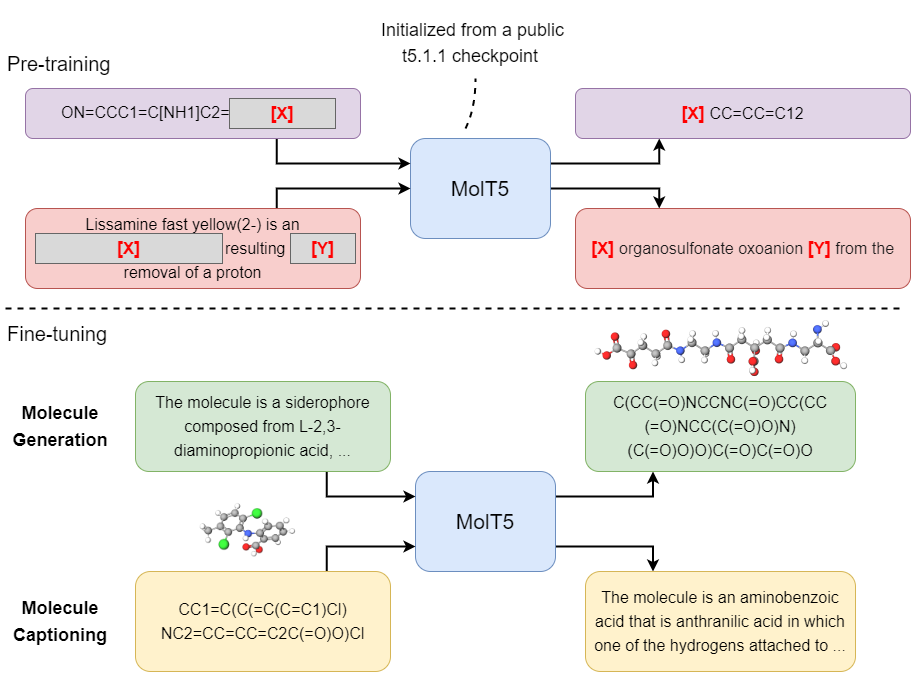

Associated Repository for "Translation between Molecules and Natural Language"

MolT5: Translation between Molecules and Natural Language Associated repository for "Translation between Molecules and Natural Language". Table of Con

Official repository for the paper "Self-Supervised Models are Continual Learners" (CVPR 2022)

Self-Supervised Models are Continual Learners This is the official repository for the paper: Self-Supervised Models are Continual Learners Enrico Fini

SeqTR: A Simple yet Universal Network for Visual Grounding

SeqTR This is the official implementation of SeqTR: A Simple yet Universal Network for Visual Grounding, which simplifies and unifies the modelling fo

An attempt to map the areas with active conflict in Ukraine using open source twitter data.

Live Action Map (LAM) An attempt to use open source data on Twitter to map areas with active conflict. Right now it is used for the Ukraine-Russia con

A Python library that enables ML teams to share, load, and transform data in a collaborative, flexible, and efficient way :chestnut:

Squirrel Core Share, load, and transform data in a collaborative, flexible, and efficient way What is Squirrel? Squirrel is a Python library that enab

Natural Language Processing - Sommer Semester 2022

Natural Language Processing (DIS25a/NLP) This course can be taken for the Bachelor Programm Data and Information Science (DIS25a) or the Master Progra

Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch

CoCa - Pytorch Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch. They were able to elegantly fit in contras

Unified API to facilitate usage of pre-trained "perceptor" models, a la CLIP

mmc installation git clone https://github.com/dmarx/Multi-Modal-Comparators cd 'Multi-Modal-Comparators' pip install poetry poetry build pip install d

Language Models Can See: Plugging Visual Controls in Text Generation

Language Models Can See: Plugging Visual Controls in Text Generation Authors: Yixuan Su, Tian Lan, Yahui Liu, Fangyu Liu, Dani Yogatama, Yan Wang, Lin

code for TCL: Vision-Language Pre-Training with Triple Contrastive Learning, CVPR 2022

Vision-Language Pre-Training with Triple Contrastive Learning, CVPR 2022 News (03/16/2022) upload retrieval checkpoints finetuned on COCO and Flickr T

Convert scikit-learn models to PyTorch modules

sk2torch sk2torch converts scikit-learn models into PyTorch modules that can be tuned with backpropagation and even compiled as TorchScript. Problems

SentimentArcs: a large ensemble of dozens of sentiment analysis models to analyze emotion in text over time

SentimentArcs - Emotion in Text An end-to-end pipeline based on Jupyter notebooks to detect, extract, process and anlayze emotion over time in text. E

Doing the asl sign language classification on static images using graph neural networks.

SignLangGNN When GNNs 💜 MediaPipe. This is a starter project where I tried to implement some traditional image classification problem i.e. the ASL si

Code for intrusion detection system (IDS) development using CNN models and transfer learning

Intrusion-Detection-System-Using-CNN-and-Transfer-Learning This is the code for the paper entitled "A Transfer Learning and Optimized CNN Based Intrus

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

DLO8012: Natural Language Processing & CSL804: Computational Lab - II Semester VIII

NATURAL-LANGUAGE-PROCESSING-AND-COMPUTATIONAL-LAB-II DLO8012: NLP & CSL804: CL-II [SEMESTER VIII] Syllabus NLP - Reference Books THE WALL MEGA SATISH

Sapiens is a human antibody language model based on BERT.

Sapiens: Human antibody language model ____ _ / ___| __ _ _ __ (_) ___ _ __ ___ \___ \ / _` | '_ \| |/ _ \ '

Implementation of GeoDiff: a Geometric Diffusion Model for Molecular Conformation Generation (ICLR 2022).

GeoDiff: a Geometric Diffusion Model for Molecular Conformation Generation [OpenReview] [arXiv] [Code] The official implementation of GeoDiff: A Geome

Process text, including tokenizing and representing sentences as vectors and Applying some concepts like RNN, LSTM and GRU to create a classifier can detect the language in which a sentence is written from among 17 languages.

Language Identifier What is this ? The goal of this project is to create a model that is able to predict a given sentence language through text proces

A Persian Image Captioning model based on Vision Encoder Decoder Models of the transformers🤗.

Persian-Image-Captioning We fine-tuning the Vision Encoder Decoder Model for the task of image captioning on the coco-flickr-farsi dataset. The implem

An official repository for tutorials of Probabilistic Modelling and Reasoning (2021/2022) - a University of Edinburgh master's course.

PMR computer tutorials on HMMs (2021-2022) This is a repository for computer tutorials of Probabilistic Modelling and Reasoning (2021/2022) - a Univer

The FLARE team's open-source library to disassemble Common Intermediate Language (CIL) instructions.

dncil is a Common Intermediate Language (CIL) disassembly library written in Python that supports parsing the header, instructions, and exception hand

PyTorch implementation of "data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language" from Meta AI

data2vec-pytorch PyTorch implementation of "data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language" from Meta AI (F

Guide to using pre-trained large language models of source code

Large Models of Source Code I occasionally train and publicly release large neural language models on programs, including PolyCoder. Here, I describe

AI Summer's complete catalog of articles

Learn Deep Learning with AI Summer A collection of all articles (almost 100) written for the AI Summer blog organized by topic. Deep Learning Theory M

Get started with Machine Learning with Python - An introduction with Python programming examples

Machine Learning With Python Get started with Machine Learning with Python An engaging introduction to Machine Learning with Python TL;DR Download all

Large-scale language modeling tutorials with PyTorch

Large-scale language modeling tutorials with PyTorch 안녕하세요. 저는 TUNiB에서 머신러닝 엔지니어로 근무 중인 고현웅입니다. 이 자료는 대규모 언어모델 개발에 필요한 여러가지 기술들을 소개드리기 위해 마련하였으며 기본적으로

CLIP (Contrastive Language–Image Pre-training) for Italian

Italian CLIP CLIP (Radford et al., 2021) is a multimodal model that can learn to represent images and text jointly in the same space. In this project,

Official repository of OFA. Paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

Paper | Blog OFA is a unified multimodal pretrained model that unifies modalities (i.e., cross-modality, vision, language) and tasks (e.g., image gene

Official code for the CVPR 2022 (oral) paper "Extracting Triangular 3D Models, Materials, and Lighting From Images".

nvdiffrec Joint optimization of topology, materials and lighting from multi-view image observations as described in the paper Extracting Triangular 3D

Applied Natural Language Processing in the Enterprise - An O'Reilly Media Publication

Applied Natural Language Processing in the Enterprise This is the companion repo for Applied Natural Language Processing in the Enterprise, an O'Reill

Includes PyTorch - Keras model porting code for ConvNeXt family of models with fine-tuning and inference notebooks.

ConvNeXt-TF This repository provides TensorFlow / Keras implementations of different ConvNeXt [1] variants. It also provides the TensorFlow / Keras mo

A benchmark for evaluation and comparison of various NLP tasks in Persian language.

Persian NLP Benchmark The repository aims to track existing natural language processing models and evaluate their performance on well-known datasets.

An ever-growing playground of notebooks showcasing CLIP's impressive zero-shot capabilities.

Playground for CLIP-like models Demo Colab Link GradCAM Visualization Naive Zero-shot Detection Smarter Zero-shot Detection Captcha Solver Changelog 2

In this tutorial, you will perform inference across 10 well-known pre-trained object detectors and fine-tune on a custom dataset. Design and train your own object detector.

Object Detection Object detection is a computer vision task for locating instances of predefined objects in images or videos. In this tutorial, you wi

The implementation our EMNLP 2021 paper "Enhanced Language Representation with Label Knowledge for Span Extraction".

LEAR The implementation our EMNLP 2021 paper "Enhanced Language Representation with Label Knowledge for Span Extraction". See below for an overview of

Repo for the Tutorials of Day1-Day3 of the Nordic Probabilistic AI School 2021 (https://probabilistic.ai/)

ProbAI 2021 - Probabilistic Programming and Variational Inference Tutorial with Pryo Day 1 (June 14) Slides Notebook: students_PPLs_Intro Notebook: so

This is a repo of basic Machine Learning!

Basic Machine Learning This repository contains a topic-wise curated list of Machine Learning and Deep Learning tutorials, articles and other resource

Fast SHAP value computation for interpreting tree-based models

FastTreeSHAP FastTreeSHAP package is built based on the paper Fast TreeSHAP: Accelerating SHAP Value Computation for Trees published in NeurIPS 2021 X

Make your first PR. A beginner friendly repository made specifically for open source beginners. Add any program under any language (it can be anything from a simple program to a complex data structure algorithm). Happy coding...

Hacktober Fest 2021 Upload Different Types of Programs in any Language Use this project to make your first contribution to an open source project on G

.png)

Contextual Attention Network: Transformer Meets U-Net

Contextual Attention Network: Transformer Meets U-Net Contexual attention network for medical image segmentation with state of the art results on skin

Package towards building Explainable Forecasting and Nowcasting Models with State-of-the-art Deep Neural Networks and Dynamic Factor Model on Time Series data sets with single line of code. Also, provides utilify facility for time-series signal similarities matching, and removing noise from timeseries signals.

DeepXF: Explainable Forecasting and Nowcasting with State-of-the-art Deep Neural Networks and Dynamic Factor Model Also, verify TS signal similarities

MAGMA - a GPT-style multimodal model that can understand any combination of images and language

MAGMA -- Multimodal Augmentation of Generative Models through Adapter-based Finetuning Authors repo (alphabetical) Constantin (CoEich), Mayukh (Mayukh

🚀 RocketQA, dense retrieval for information retrieval and question answering, including both Chinese and English state-of-the-art models.

In recent years, the dense retrievers based on pre-trained language models have achieved remarkable progress. To facilitate more developers using cutt

SciFive: a text-text transformer model for biomedical literature

SciFive SciFive provided a Text-Text framework for biomedical language and natural language in NLP. Under the T5's framework and desrbibed in the pape

GARCH and Multivariate LSTM forecasting models for Bitcoin realized volatility with potential applications in crypto options trading, hedging, portfolio management, and risk management

Bitcoin Realized Volatility Forecasting with GARCH and Multivariate LSTM Author: Chi Bui This Repository Repository Directory ├── README.md

Data and code to support "Applied Natural Language Processing" (INFO 256, Fall 2021, UC Berkeley)

anlp21 Course materials for "Applied Natural Language Processing" (INFO 256, Fall 2021, UC Berkeley) Syllabus: http://people.ischool.berkeley.edu/~dba

TorchMultimodal is a PyTorch library for training state-of-the-art multimodal multi-task models at scale.

TorchMultimodal (Alpha Release) Introduction TorchMultimodal is a PyTorch library for training state-of-the-art multimodal multi-task models at scale.

[CVPR 2022 Oral] TubeDETR: Spatio-Temporal Video Grounding with Transformers

TubeDETR: Spatio-Temporal Video Grounding with Transformers Website • STVG Demo • Paper This repository provides the code for our paper. This includes

UT-Sarulab MOS prediction system using SSL models

UTMOS: UTokyo-SaruLab MOS Prediction System Official implementation of "UTMOS: UTokyo-SaruLab System for VoiceMOS Challenge 2022" submitted to INTERSP

Code for CVPR'2022 paper ✨ "Predict, Prevent, and Evaluate: Disentangled Text-Driven Image Manipulation Empowered by Pre-Trained Vision-Language Model"

PPE ✨ Repository for our CVPR'2022 paper: Predict, Prevent, and Evaluate: Disentangled Text-Driven Image Manipulation Empowered by Pre-Trained Vision-

Code for our paper "Graph Pre-training for AMR Parsing and Generation" in ACL2022

AMRBART An implementation for ACL2022 paper "Graph Pre-training for AMR Parsing and Generation". You may find our paper here (Arxiv). Requirements pyt

Implementation of Retrieval-Augmented Denoising Diffusion Probabilistic Models in Pytorch

Retrieval-Augmented Denoising Diffusion Probabilistic Models (wip) Implementation of Retrieval-Augmented Denoising Diffusion Probabilistic Models in P

![[ICLR 2022] Pretraining Text Encoders with Adversarial Mixture of Training Signal Generators](https://github.com/microsoft/AMOS/raw/main/AMOS.png)

[ICLR 2022] Pretraining Text Encoders with Adversarial Mixture of Training Signal Generators

AMOS This repository contains the scripts for fine-tuning AMOS pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: Pretraining Text Encoders wi

ACL22 paper: Imputing Out-of-Vocabulary Embeddings with LOVE Makes Language Models Robust with Little Cost

Imputing Out-of-Vocabulary Embeddings with LOVE Makes Language Models Robust with Little Cost LOVE is accpeted by ACL22 main conference as a long pape

Code and data for ImageCoDe, a contextual vison-and-language benchmark

ImageCoDe This repository contains code and data for ImageCoDe: Image Retrieval from Contextual Descriptions. Data All collected descriptions for the

Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers

beyond masking Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers The code is coming Figure 1: Pipeline of token-based pre-

Large-Scale Pre-training for Person Re-identification with Noisy Labels (LUPerson-NL)

LUPerson-NL Large-Scale Pre-training for Person Re-identification with Noisy Labels (LUPerson-NL) The repository is for our CVPR2022 paper Large-Scale

Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capability)

Protein GLM (wip) Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capabil

Pretrained models for Jax/Haiku; MobileNet, ResNet, VGG, Xception.

Pre-trained image classification models for Jax/Haiku Jax/Haiku Applications are deep learning models that are made available alongside pre-trained we

Very simple NCHW and NHWC conversion tool for ONNX. Change to the specified input order for each and every input OP. Also, change the channel order of RGB and BGR. Simple Channel Converter for ONNX.

scc4onnx Very simple NCHW and NHWC conversion tool for ONNX. Change to the specified input order for each and every input OP. Also, change the channel

SIGIR'22 paper: Axiomatically Regularized Pre-training for Ad hoc Search

Introduction This codebase contains source-code of the Python-based implementation (ARES) of our SIGIR 2022 paper. Chen, Jia, et al. "Axiomatically Re

Repository for fine-tuning Transformers 🤗 based seq2seq speech models in JAX/Flax.

Seq2Seq Speech in JAX A JAX/Flax repository for combining a pre-trained speech encoder model (e.g. Wav2Vec2, HuBERT, WavLM) with a pre-trained text de

CLIP-GEN: Language-Free Training of a Text-to-Image Generator with CLIP

CLIP-GEN [简体中文][English] 本项目在萤火二号集群上用 PyTorch 实现了论文 《CLIP-GEN: Language-Free Training of a Text-to-Image Generator with CLIP》。 CLIP-GEN 是一个 Language-F

Cairo hooks for pre-commit

pre-commit-cairo Cairo hooks for pre-commit. See pre-commit for more details Using pre-commit-cairo with pre-commit Add this to your .pre-commit-confi

Classification models 1D Zoo - Keras and TF.Keras

Classification models 1D Zoo - Keras and TF.Keras This repository contains 1D variants of popular CNN models for classification like ResNets, DenseNet

Simple tool to combine(merge) onnx models. Simple Network Combine Tool for ONNX.

snc4onnx Simple tool to combine(merge) onnx models. Simple Network Combine Tool for ONNX. https://github.com/PINTO0309/simple-onnx-processing-tools 1.

DeepGNN is a framework for training machine learning models on large scale graph data.

DeepGNN Overview DeepGNN is a framework for training machine learning models on large scale graph data. DeepGNN contains all the necessary features in

Official codebase for ICLR oral paper Unsupervised Vision-Language Grammar Induction with Shared Structure Modeling

CLIORA This is the official codebase for ICLR oral paper: Unsupervised Vision-Language Grammar Induction with Shared Structure Modeling. We introduce

Aiming at the common training datsets split, spectrum preprocessing, wavelength select and calibration models algorithm involved in the spectral analysis process

Aiming at the common training datsets split, spectrum preprocessing, wavelength select and calibration models algorithm involved in the spectral analysis process, a complete algorithm library is established, which is named opensa (openspectrum analysis).

A very simple tool to rewrite parameters such as attributes and constants for OPs in ONNX models. Simple Attribute and Constant Modifier for ONNX.

sam4onnx A very simple tool to rewrite parameters such as attributes and constants for OPs in ONNX models. Simple Attribute and Constant Modifier for

Simple ONNX operation generator. Simple Operation Generator for ONNX.

sog4onnx Simple ONNX operation generator. Simple Operation Generator for ONNX. https://github.com/PINTO0309/simple-onnx-processing-tools Key concept V

Simple node deletion tool for onnx.

snd4onnx Simple node deletion tool for onnx. I only test very miscellaneous and limited patterns as a hobby. There are probably a large number of bugs

A multi-voice TTS system trained with an emphasis on quality

TorToiSe Tortoise is a text-to-speech program built with the following priorities: Strong multi-voice capabilities. Highly realistic prosody and inton

Princeton NLP's pre-training library based on fairseq with DeepSpeed kernel integration 🚃

This repository provides a library for efficient training of masked language models (MLM), built with fairseq. We fork fairseq to give researchers mor