1317 Repositories

Python prophet-model Libraries

This project deploys a yolo fastest model in the form of tflite on raspberry 3b+. The model is from another repository of mine called -Trash-Classification-Car

Deploy-yolo-fastest-tflite-on-raspberry 觉得有用的话可以顺手点个star嗷 这个项目将垃圾分类小车中的tflite模型移植到了树莓派3b+上面。 该项目主要是为了记录在树莓派部署yolo fastest tflite的流程 (之后有时间会尝试用C++部署来提升

PyTorch implementation of CVPR 2020 paper (Reference-Based Sketch Image Colorization using Augmented-Self Reference and Dense Semantic Correspondence) and pre-trained model on ImageNet dataset

Reference-Based-Sketch-Image-Colorization-ImageNet This is a PyTorch implementation of CVPR 2020 paper (Reference-Based Sketch Image Colorization usin

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Real-Time Multi-Contact Model Predictive Control via ADMM

Here, you can find the code for the paper 'Real-Time Multi-Contact Model Predictive Control via ADMM'. Code is currently being cleared up and optimize

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

PyTorch original implementation of Cross-lingual Language Model Pretraining.

XLM NEW: Added XLM-R model. PyTorch original implementation of Cross-lingual Language Model Pretraining. Includes: Monolingual language model pretrain

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

PyTorch implementation of Tacotron speech synthesis model.

tacotron_pytorch PyTorch implementation of Tacotron speech synthesis model. Inspired from keithito/tacotron. Currently not as much good speech quality

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

High level network definitions with pre-trained weights in TensorFlow

TensorNets High level network definitions with pre-trained weights in TensorFlow (tested with 2.1.0 = TF = 1.4.0). Guiding principles Applicability.

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

DeepLab resnet v2 model in pytorch

pytorch-deeplab-resnet DeepLab resnet v2 model implementation in pytorch. The architecture of deepLab-ResNet has been replicated exactly as it is from

CNNs for Sentence Classification in PyTorch

Introduction This is the implementation of Kim's Convolutional Neural Networks for Sentence Classification paper in PyTorch. Kim's implementation of t

PyTorch implementation of "Learning to Discover Cross-Domain Relations with Generative Adversarial Networks"

DiscoGAN in PyTorch PyTorch implementation of Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. * All samples in READM

Task-based end-to-end model learning in stochastic optimization

Task-based End-to-end Model Learning in Stochastic Optimization This repository is by Priya L. Donti, Brandon Amos, and J. Zico Kolter and contains th

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

TextAttack 🐙 is a Python framework for adversarial attacks, data augmentation, and model training in NLP

TextAttack 🐙 Generating adversarial examples for NLP models [TextAttack Documentation on ReadTheDocs] About • Setup • Usage • Design About TextAttack

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Model Agnostic Confidence Estimator (MACEST) - A Python library for calibrating Machine Learning models' confidence scores

Django model field that can hold a geoposition, and corresponding widget

django-geoposition A model field that can hold a geoposition (latitude/longitude), and corresponding admin/form widget. Prerequisites Starting with ve

Incorporating KenLM language model with HuggingFace implementation of Wav2Vec2CTC Model using beam search decoding

Wav2Vec2CTC With KenLM Using KenLM ARPA language model with beam search to decode audio files and show the most probable transcription. Assuming you'v

Replication attempt for the Protein Folding Model

RGN2-Replica (WIP) To eventually become an unofficial working Pytorch implementation of RGN2, an state of the art model for MSA-less Protein Folding f

A django model and form field for normalised phone numbers using python-phonenumbers

django-phonenumber-field A Django library which interfaces with python-phonenumbers to validate, pretty print and convert phone numbers. python-phonen

MAC address Model Field & Form Field for Django apps

django-macaddress MAC Address model and form fields for Django We use netaddr to parse and validate the MAC address. The tests aren't complete yet. Pa

This is a vision-based 3d model manipulation and control UI

Manipulation of 3D Models Using Hand Gesture This program allows user to manipulation 3D models (.obj format) with their hands. The project support bo

Use Django admin to manage drip campaign emails using querysets on Django's User model.

Django Drip Drip campaigns are pre-written sets of emails sent to customers or prospects over time. Django Drips lets you use the admin to manage drip

![[ICCV 2021] Official Tensorflow Implementation for](https://github.com/HyeongseokSon1/KPAC/raw/main/assets/KPAC.jpg)

[ICCV 2021] Official Tensorflow Implementation for "Single Image Defocus Deblurring Using Kernel-Sharing Parallel Atrous Convolutions"

KPAC: Kernel-Sharing Parallel Atrous Convolutional block This repository contains the official Tensorflow implementation of the following paper: Singl

IndoBERTweet is the first large-scale pretrained model for Indonesian Twitter. Published at EMNLP 2021 (main conference)

IndoBERTweet 🐦 🇮🇩 1. Paper Fajri Koto, Jey Han Lau, and Timothy Baldwin. IndoBERTweet: A Pretrained Language Model for Indonesian Twitter with Effe

![[EMNLP 2021] LM-Critic: Language Models for Unsupervised Grammatical Error Correction](https://github.com/michiyasunaga/LM-Critic/raw/main/figs/local_optimality.png)

[EMNLP 2021] LM-Critic: Language Models for Unsupervised Grammatical Error Correction

LM-Critic: Language Models for Unsupervised Grammatical Error Correction This repo provides the source code & data of our paper: LM-Critic: Language M

Code & Models for 3DETR - an End-to-end transformer model for 3D object detection

3DETR: An End-to-End Transformer Model for 3D Object Detection PyTorch implementation and models for 3DETR. 3DETR (3D DEtection TRansformer) is a simp

Code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

This repository contains the code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

The training code for the 4th place model at MDX 2021 leaderboard A.

The training code for the 4th place model at MDX 2021 leaderboard A.

Must-read papers on improving efficiency for pre-trained language models.

Must-read papers on improving efficiency for pre-trained language models.

DAFNe: A One-Stage Anchor-Free Deep Model for Oriented Object Detection

DAFNe: A One-Stage Anchor-Free Deep Model for Oriented Object Detection Code for our Paper DAFNe: A One-Stage Anchor-Free Deep Model for Oriented Obje

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

[EMNLP 2021] Distantly-Supervised Named Entity Recognition with Noise-Robust Learning and Language Model Augmented Self-Training

RoSTER The source code used for Distantly-Supervised Named Entity Recognition with Noise-Robust Learning and Language Model Augmented Self-Training, p

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt. This is done by

A custom DeepStack model for detecting 16 human actions.

DeepStack_ActionNET This repository provides a custom DeepStack model that has been trained and can be used for creating a new object detection API fo

Demonstrates how to divide a DL model into multiple IR model files (division) and introduce a simplest way to implement a custom layer works with OpenVINO IR models.

Demonstration of OpenVINO techniques - Model-division and a simplest-way to support custom layers Description: Model Optimizer in Intel(r) OpenVINO(tm

G-NIA model from "Single Node Injection Attack against Graph Neural Networks" (CIKM 2021)

Single Node Injection Attack against Graph Neural Networks This repository is our Pytorch implementation of our paper: Single Node Injection Attack ag

Demonstrates iterative FGSM on Apple's NeuralHash model.

apple-neuralhash-attack Demonstrates iterative FGSM on Apple's NeuralHash model. TL;DR: It is possible to apply noise to CSAM images and make them loo

UniLM AI - Large-scale Self-supervised Pre-training across Tasks, Languages, and Modalities

Pre-trained (foundation) models across tasks (understanding, generation and translation), languages (100+ languages), and modalities (language, image, audio, vision + language, audio + language, etc.)

Python scripts form performing stereo depth estimation using the HITNET model in ONNX.

ONNX-HITNET-Stereo-Depth-estimation Python scripts form performing stereo depth estimation using the HITNET model in ONNX. Stereo depth estimation on

Python scripts form performing stereo depth estimation using the HITNET model in Tensorflow Lite.

TFLite-HITNET-Stereo-depth-estimation Python scripts form performing stereo depth estimation using the HITNET model in Tensorflow Lite. Stereo depth e

This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced in the paper titled "BanglaBERT: Combating Embedding Barrier in Multilingual Models for Low-Resource Language Understanding".

BanglaBERT This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced i

A web app for presenting my research in BEM(building energy model) simulation

BEM(building energy model)-SIM-APP The is a web app presenting my research in BEM(building energy model) calibration. You can play around with some pa

Tutorial to pretrain & fine-tune a 🤗 Flax T5 model on a TPUv3-8 with GCP

Pretrain and Fine-tune a T5 model with Flax on GCP This tutorial details how pretrain and fine-tune a FlaxT5 model from HuggingFace using a TPU VM ava

Python scripts form performing stereo depth estimation using the high res stereo model in PyTorch .

PyTorch-High-Res-Stereo-Depth-Estimation Python scripts form performing stereo depth estimation using the high res stereo model in PyTorch. Stereo dep

Python scripts form performing stereo depth estimation using the CoEx model in ONNX.

ONNX-CoEx-Stereo-Depth-estimation Python scripts form performing stereo depth estimation using the CoEx model in ONNX. Stereo depth estimation on the

Model Quantization Benchmark

MQBench Update V0.0.2 Fix academic prepare setting. More deployable prepare process. Fix setup.py. Fix deploy on SNPE. Fix convert_deploy bug. Add Qua

Bridging Vision and Language Model

BriVL BriVL (Bridging Vision and Language Model) 是首个中文通用图文多模态大规模预训练模型。BriVL模型在图文检索任务上有着优异的效果,超过了同期其他常见的多模态预训练模型(例如UNITER、CLIP)。 BriVL论文:WenLan: Bridgi

Pathdreamer: A World Model for Indoor Navigation

Pathdreamer: A World Model for Indoor Navigation This repository hosts the open source code for Pathdreamer, to be presented at ICCV 2021. Paper | Pro

Example scripts for the detection of lanes using the ultra fast lane detection model in Tensorflow Lite.

TFlite Ultra Fast Lane Detection Inference Example scripts for the detection of lanes using the ultra fast lane detection model in Tensorflow Lite. So

Generate text line images for training deep learning OCR model (e.g. CRNN)

Generate text line images for training deep learning OCR model (e.g. CRNN)

Demonstration of the Model Training as a CI/CD System in Vertex AI

Model Training as a CI/CD System This project demonstrates the machine model training as a CI/CD system in GCP platform. You will see more detailed wo

MWPToolkit is a PyTorch-based toolkit for Math Word Problem (MWP) solving.

MWPToolkit is a PyTorch-based toolkit for Math Word Problem (MWP) solving. It is a comprehensive framework for research purpose that integrates popular MWP benchmark datasets and typical deep learning-based MWP algorithms.

Train GPT-3 model on V100(16GB Mem) Using improved Transformer.

GPT-X using transformer pytorch

Python scripts for performing stereo depth estimation using the HITNET Tensorflow model.

HITNET-Stereo-Depth-estimation Python scripts for performing stereo depth estimation using the HITNET Tensorflow model from Google Research. Stereo de

Collection of NLP model explanations and accompanying analysis tools

Thermostat is a large collection of NLP model explanations and accompanying analysis tools. Combines explainability methods from the captum library wi

Simple linear model implementations from scratch.

Hand Crafted Models Simple linear model implementations from scratch. Table of contents Overview Project Structure Getting started Citing this project

This repo uses a combination of logits and feature distillation method to teach the PSPNet model of ResNet18 backbone with the PSPNet model of ResNet50 backbone. All the models are trained and tested on the PASCAL-VOC2012 dataset.

PSPNet-logits and feature-distillation Introduction This repository is based on PSPNet and modified from semseg and Pixelwise_Knowledge_Distillation_P

🍅🍅🍅YOLOv5-Lite: lighter, faster and easier to deploy. Evolved from yolov5 and the size of model is only 1.7M (int8) and 3.3M (fp16). It can reach 10+ FPS on the Raspberry Pi 4B when the input size is 320×320~

YOLOv5-Lite:lighter, faster and easier to deploy Perform a series of ablation experiments on yolov5 to make it lighter (smaller Flops, lower memory, a

machine learning model deployment project of Iris classification model in a minimal UI using flask web framework and deployed it in Azure cloud using Azure app service

This is a machine learning model deployment project of Iris classification model in a minimal UI using flask web framework and deployed it in Azure cloud using Azure app service. We initially made this project as a requirement for an internship at Indian Servers. We are now making it open to contribution.

VIL-100: A New Dataset and A Baseline Model for Video Instance Lane Detection (ICCV 2021)

Preparation Please see dataset/README.md to get more details about our datasets-VIL100 Please see INSTALL.md to install environment and evaluation too

Voice of Pajlada with model and weights.

Pajlada TTS Stripped down version of ForwardTacotron (https://github.com/as-ideas/ForwardTacotron) with pretrained weights for Pajlada's (https://gith

An original implementation of "Noisy Channel Language Model Prompting for Few-Shot Text Classification"

Channel LM Prompting (and beyond) This includes an original implementation of Sewon Min, Mike Lewis, Hannaneh Hajishirzi, Luke Zettlemoyer. "Noisy Cha

Example scripts for the detection of lanes using the ultra fast lane detection model in ONNX.

Example scripts for the detection of lanes using the ultra fast lane detection model in ONNX.

The Official Implementation of the ICCV-2021 Paper: Semantically Coherent Out-of-Distribution Detection.

SCOOD-UDG (ICCV 2021) This repository is the official implementation of the paper: Semantically Coherent Out-of-Distribution Detection Jingkang Yang,

The official implementation of the Interspeech 2021 paper WSRGlow: A Glow-based Waveform Generative Model for Audio Super-Resolution.

WSRGlow The official implementation of the Interspeech 2021 paper WSRGlow: A Glow-based Waveform Generative Model for Audio Super-Resolution. Audio sa

CrossNorm and SelfNorm for Generalization under Distribution Shifts (ICCV 2021)

CrossNorm (CN) and SelfNorm (SN) (Accepted at ICCV 2021) This is the official PyTorch implementation of our CNSN paper, in which we propose CrossNorm

【ACMMM 2021】DSANet: Dynamic Segment Aggregation Network for Video-Level Representation Learning

DSANet: Dynamic Segment Aggregation Network for Video-Level Representation Learning (ACMMM 2021) Overview We release the code of the DSANet (Dynamic S

CrossNorm and SelfNorm for Generalization under Distribution Shifts (ICCV 2021)

CrossNorm (CN) and SelfNorm (SN) (Accepted at ICCV 2021) This is the official PyTorch implementation of our CNSN paper, in which we propose CrossNorm

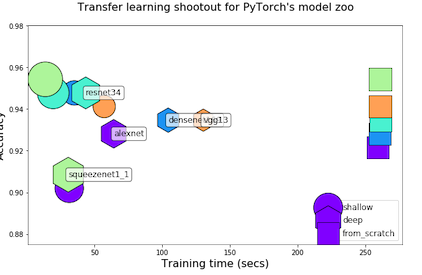

Transfer Learning Shootout for PyTorch's model zoo (torchvision)

pytorch-retraining Transfer Learning shootout for PyTorch's model zoo (torchvision). Load any pretrained model with custom final layer (num_classes) f

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

PyTorch implementation of SampleRNN: An Unconditional End-to-End Neural Audio Generation Model

samplernn-pytorch A PyTorch implementation of SampleRNN: An Unconditional End-to-End Neural Audio Generation Model. It's based on the reference implem

PyTorch implementation of the supervised learning experiments from the paper Model-Agnostic Meta-Learning (MAML)

pytorch-maml This is a PyTorch implementation of the supervised learning experiments from the paper Model-Agnostic Meta-Learning (MAML): https://arxiv

PyTorch implementation of Tacotron speech synthesis model.

tacotron_pytorch PyTorch implementation of Tacotron speech synthesis model. Inspired from keithito/tacotron. Currently not as much good speech quality

Implementation of Token Shift GPT - An autoregressive model that solely relies on shifting the sequence space for mixing

Token Shift GPT Implementation of Token Shift GPT - An autoregressive model that relies solely on shifting along the sequence dimension and feedforwar

Empower Sequence Labeling with Task-Aware Language Model

LM-LSTM-CRF Check Our New NER Toolkit 🚀 🚀 🚀 Inference: LightNER: inference w. models pre-trained / trained w. any following tools, efficiently. Tra

Evaluation suite for large-scale language models.

This repo contains code for running the evaluations and reproducing the results from the Jurassic-1 Technical Paper (see blog post), with current support for running the tasks through both the AI21 Studio API and OpenAI's GPT3 API.

Transformer model implemented with Pytorch

transformer-pytorch Transformer model implemented with Pytorch Attention is all you need-[Paper] Architecture Self-Attention self_attention.py class

Convert Apple NeuralHash model for CSAM Detection to ONNX.

Apple NeuralHash is a perceptual hashing method for images based on neural networks. It can tolerate image resize and compression.

In this project we investigate the performance of the SetCon model on realistic video footage. Therefore, we implemented the model in PyTorch and tested the model on two example videos.

Contrastive Learning of Object Representations Supervisor: Prof. Dr. Gemma Roig Institutions: Goethe University CVAI - Computational Vision & Artifici

LONG-TERM SERIES FORECASTING WITH QUERYSELECTOR – EFFICIENT MODEL OF SPARSEATTENTION

Query Selector Here you can find code and data loaders for the paper https://arxiv.org/pdf/2107.08687v1.pdf . Query Selector is a novel approach to sp

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

😇A pyTorch implementation of the DeepMoji model: state-of-the-art deep learning model for analyzing sentiment, emotion, sarcasm etc

------ Update September 2018 ------ It's been a year since TorchMoji and DeepMoji were released. We're trying to understand how it's being used such t

Learning and Building Convolutional Neural Networks using PyTorch

Image Classification Using Deep Learning Learning and Building Convolutional Neural Networks using PyTorch. Models, selected are based on number of ci

FedScale: Benchmarking Model and System Performance of Federated Learning

FedScale: Benchmarking Model and System Performance of Federated Learning (Paper) This repository contains scripts and instructions of building FedSca

SlotRefine: A Fast Non-Autoregressive Model forJoint Intent Detection and Slot Filling

SlotRefine: A Fast Non-Autoregressive Model for Joint Intent Detection and Slot Filling Reference Main paper to be cited (Di Wu et al., 2020) @article

A DNN inference latency prediction toolkit for accurately modeling and predicting the latency on diverse edge devices.

Note: This is an alpha (preview) version which is still under refining. nn-Meter is a novel and efficient system to accurately predict the inference l

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

The RWKV Language Model

RWKV-LM We propose the RWKV language model, with alternating time-mix and channel-mix layers: The R, K, V are generated by linear transforms of input,

How to Become More Salient? Surfacing Representation Biases of the Saliency Prediction Model

How to Become More Salient? Surfacing Representation Biases of the Saliency Prediction Model

nn-Meter is a novel and efficient system to accurately predict the inference latency of DNN models on diverse edge devices

A DNN inference latency prediction toolkit for accurately modeling and predicting the latency on diverse edge devices.

This is the pytorch implementation for the paper: *Learning Accurate Performance Predictors for Ultrafast Automated Model Compression*, which is in submission to TPAMI

SeerNet This is the pytorch implementation for the paper: Learning Accurate Performance Predictors for Ultrafast Automated Model Compression, which is

A Serverless Application Model stack that persists the $XRP price to the XRPL every minute as a TrustLine. There are no servers, it is effectively a "smart contract" in Python for the XRPL.

xrpl-price-persist-oracle-sam This is a XRPL Oracle that publishes external data into the XRPL. This Oracle was inspired by XRPL-Labs/XRPL-Persist-Pri