2006 Repositories

Python radio-transformer-networks Libraries

Semi-Autoregressive Transformer for Image Captioning

Semi-Autoregressive Transformer for Image Captioning Requirements Python 3.6 Pytorch 1.6 Prepare data Please use git clone --recurse-submodules to clo

EdMIPS: Rethinking Differentiable Search for Mixed-Precision Neural Networks

EdMIPS is an efficient algorithm to search the optimal mixed-precision neural network directly without proxy task on ImageNet given computation budgets. It can be applied to many popular network architectures, including ResNet, GoogLeNet, and Inception-V3.

CLIP: Connecting Text and Image (Learning Transferable Visual Models From Natural Language Supervision)

CLIP (Contrastive Language–Image Pre-training) Experiments (Evaluation) Model Dataset Acc (%) ViT-B/32 (Paper) CIFAR100 65.1 ViT-B/32 (Our) CIFAR100 6

Pytorch Implementation of Spiking Neural Networks Calibration, ICML 2021

SNN_Calibration Pytorch Implementation of Spiking Neural Networks Calibration, ICML 2021 Feature Comparison of SNN calibration: Features SNN Direct Tr

PyTorch implementation of Soft-DTW: a Differentiable Loss Function for Time-Series in CUDA

Soft DTW Loss Function for PyTorch in CUDA This is a Pytorch Implementation of Soft-DTW: a Differentiable Loss Function for Time-Series which is batch

Lyapunov-guided Deep Reinforcement Learning for Stable Online Computation Offloading in Mobile-Edge Computing Networks

PyTorch code to reproduce LyDROO algorithm [1], which is an online computation offloading algorithm to maximize the network data processing capability subject to the long-term data queue stability and average power constraints. It applies Lyapunov optimization to decouple the multi-stage stochastic MINLP into deterministic per-frame MINLP subproblems and solves each subproblem via DROO algorithm. It includes:

The implementation of "Shuffle Transformer: Rethinking Spatial Shuffle for Vision Transformer"

Shuffle Transformer The implementation of "Shuffle Transformer: Rethinking Spatial Shuffle for Vision Transformer" Introduction Very recently, window-

This is an official implementation for "Video Swin Transformers".

Video Swin Transformer By Ze Liu*, Jia Ning*, Yue Cao, Yixuan Wei, Zheng Zhang, Stephen Lin and Han Hu. This repo is the official implementation of "V

π-GAN: Periodic Implicit Generative Adversarial Networks for 3D-Aware Image Synthesis

π-GAN: Periodic Implicit Generative Adversarial Networks for 3D-Aware Image Synthesis Project Page | Paper | Data Eric Ryan Chan*, Marco Monteiro*, Pe

Spatially-Adaptive Pixelwise Networks for Fast Image Translation, CVPR 2021

Image Translation with ASAPNets Spatially-Adaptive Pixelwise Networks for Fast Image Translation, CVPR 2021 Webpage | Paper | Video Installation insta

MetaBalance: High-Performance Neural Networks for Class-Imbalanced Data

This repository is the official PyTorch implementation of Meta-Balance. Find the paper on arxiv MetaBalance: High-Performance Neural Networks for Clas

The official implementation of the CVPR2021 paper: Decoupled Dynamic Filter Networks

Decoupled Dynamic Filter Networks This repo is the official implementation of CVPR2021 paper: "Decoupled Dynamic Filter Networks". Introduction DDF is

Random Walk Graph Neural Networks

Random Walk Graph Neural Networks This repository is the official implementation of Random Walk Graph Neural Networks. Requirements Code is written in

The implementation of CVPR2021 paper Temporal Query Networks for Fine-grained Video Understanding, by Chuhan Zhang, Ankush Gupta and Andrew Zisserman.

Temporal Query Networks for Fine-grained Video Understanding 📋 This repository contains the implementation of CVPR2021 paper Temporal_Query_Networks

The `rtdl` library + The official implementation of the paper

The `rtdl` library + The official implementation of the paper "Revisiting Deep Learning Models for Tabular Data"

Speech Recognition for Uyghur using Speech transformer

Speech Recognition for Uyghur using Speech transformer Training: this model using CTC loss and Cross Entropy loss for training. Download pretrained mo

PyTorch implementation of "ContextNet: Improving Convolutional Neural Networks for Automatic Speech Recognition with Global Context" (INTERSPEECH 2020)

ContextNet ContextNet has CNN-RNN-transducer architecture and features a fully convolutional encoder that incorporates global context information into

A PyTorch Implementation of ViT (Vision Transformer)

ViT - Vision Transformer This is an implementation of ViT - Vision Transformer by Google Research Team through the paper "An Image is Worth 16x16 Word

Generating Anime Images by Implementing Deep Convolutional Generative Adversarial Networks paper

AnimeGAN - Deep Convolutional Generative Adverserial Network PyTorch implementation of DCGAN introduced in the paper: Unsupervised Representation Lear

This repository contains the code for the paper "SCANimate: Weakly Supervised Learning of Skinned Clothed Avatar Networks"

SCANimate: Weakly Supervised Learning of Skinned Clothed Avatar Networks (CVPR 2021 Oral) This repository contains the official PyTorch implementation

Episodic Transformer (E.T.) is a novel attention-based architecture for vision-and-language navigation. E.T. is based on a multimodal transformer that encodes language inputs and the full episode history of visual observations and actions.

Episodic Transformers (E.T.) Episodic Transformer for Vision-and-Language Navigation Alexander Pashevich, Cordelia Schmid, Chen Sun Episodic Transform

![A PyTorch implementation of EventProp [https://arxiv.org/abs/2009.08378], a method to train Spiking Neural Networks](https://github.com/lolemacs/pytorch-eventprop/raw/master/images/forward.png)

A PyTorch implementation of EventProp [https://arxiv.org/abs/2009.08378], a method to train Spiking Neural Networks

Spiking Neural Network training with EventProp This is an unofficial PyTorch implemenation of EventProp, a method to compute exact gradients for Spiki

LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant Self-At

![[ICML 2021]](https://github.com/Shen-Lab/GraphCL_Automated/raw/master/joao.png)

[ICML 2021] "Graph Contrastive Learning Automated" by Yuning You, Tianlong Chen, Yang Shen, Zhangyang Wang

Graph Contrastive Learning Automated PyTorch implementation for Graph Contrastive Learning Automated [talk] [poster] [appendix] Yuning You, Tianlong C

Official NumPy Implementation of Deep Networks from the Principle of Rate Reduction (2021)

Deep Networks from the Principle of Rate Reduction This repository is the official NumPy implementation of the paper Deep Networks from the Principle

Aggragrating Nested Transformer Official Jax Implementation

NesT is a simple method, which aggragrates nested local transformers on image blocks. The idea makes vision transformers attain better accuracy, data efficiency, and convergence on the ImageNet benchmark. NesT can be scaled to small datasets to match convnet accuracy.

PyTorch implementation and pretrained models for XCiT models. See XCiT: Cross-Covariance Image Transformer

Official code Cross-Covariance Image Transformer (XCiT)

Official pytorch implement for “Transformer-Based Source-Free Domain Adaptation”

Official implementation for TransDA Official pytorch implement for “Transformer-Based Source-Free Domain Adaptation”. Overview: Result: Prerequisites:

🔮 Execution time predictions for deep neural network training iterations across different GPUs.

Habitat: A Runtime-Based Computational Performance Predictor for Deep Neural Network Training Habitat is a tool that predicts a deep neural network's

PyTorch implementation and pretrained models for XCiT models. See XCiT: Cross-Covariance Image Transformer

Cross-Covariance Image Transformer (XCiT) PyTorch implementation and pretrained models for XCiT models. See XCiT: Cross-Covariance Image Transformer L

Implementation of Uformer, Attention-based Unet, in Pytorch

Uformer - Pytorch Implementation of Uformer, Attention-based Unet, in Pytorch. It will only offer the concat-cross-skip connection. This repository wi

Tensorflow implementation of Swin Transformer model.

Swin Transformer (Tensorflow) Tensorflow reimplementation of Swin Transformer model. Based on Official Pytorch implementation. Requirements tensorflow

This is the official repository of XVFI (eXtreme Video Frame Interpolation)

XVFI This is the official repository of XVFI (eXtreme Video Frame Interpolation), https://arxiv.org/abs/2103.16206 Last Update: 20210607 We provide th

Code for the CVPR 2021 paper: Understanding Failures of Deep Networks via Robust Feature Extraction

Welcome to Barlow Barlow is a tool for identifying the failure modes for a given neural network. To achieve this, Barlow first creates a group of imag

The codes and models in 'Gaze Estimation using Transformer'.

GazeTR We provide the code of GazeTR-Hybrid in "Gaze Estimation using Transformer". We recommend you to use data processing codes provided in GazeHub.

Official implementation of "SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers"

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

Code for STFT Transformer used in BirdCLEF 2021 competition.

STFT_Transformer Code for STFT Transformer used in BirdCLEF 2021 competition. The STFT Transformer is a new way to use Transformers similar to Vision

This repository implements and evaluates convolutional networks on the Möbius strip as toy model instantiations of Coordinate Independent Convolutional Networks.

Orientation independent Möbius CNNs This repository implements and evaluates convolutional networks on the Möbius strip as toy model instantiations of

This is the official implementation for "Do Transformers Really Perform Bad for Graph Representation?".

Graphormer By Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng*, Guolin Ke, Di He*, Yanming Shen and Tie-Yan Liu. This repo is the official impl

Official implement of "CAT: Cross Attention in Vision Transformer".

CAT: Cross Attention in Vision Transformer This is official implement of "CAT: Cross Attention in Vision Transformer". Abstract Since Transformer has

Implementation of the "Point 4D Transformer Networks for Spatio-Temporal Modeling in Point Cloud Videos" paper.

Point 4D Transformer Networks for Spatio-Temporal Modeling in Point Cloud Videos Introduction Point cloud videos exhibit irregularities and lack of or

Code for Deterministic Neural Networks with Appropriate Inductive Biases Capture Epistemic and Aleatoric Uncertainty

Deep Deterministic Uncertainty This repository contains the code for Deterministic Neural Networks with Appropriate Inductive Biases Capture Epistemic

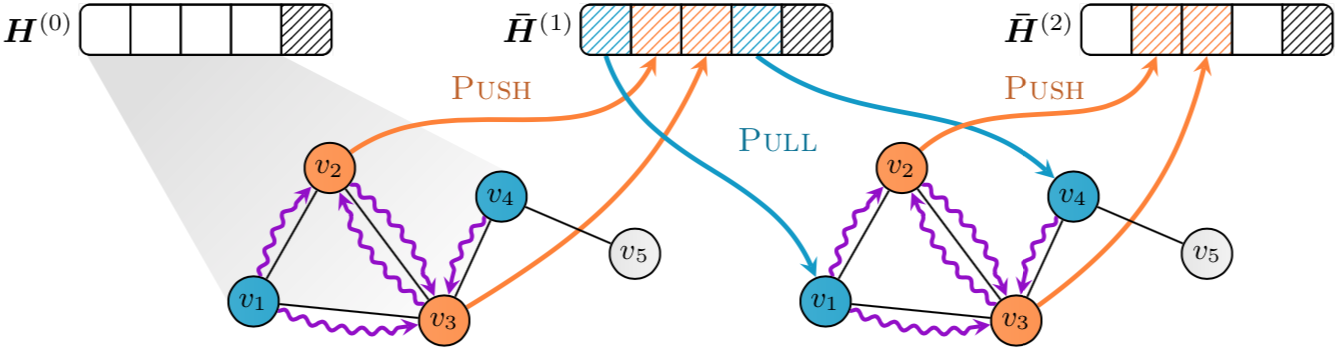

Implementation of "GNNAutoScale: Scalable and Expressive Graph Neural Networks via Historical Embeddings" in PyTorch

PyGAS: Auto-Scaling GNNs in PyG PyGAS is the practical realization of our G NN A uto S cale (GAS) framework, which scales arbitrary message-passing GN

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis Jungil Kong, Jaehyeon Kim, Jaekyoung Bae In our paper, we p

Rethinking Space-Time Networks with Improved Memory Coverage for Efficient Video Object Segmentation

STCN Rethinking Space-Time Networks with Improved Memory Coverage for Efficient Video Object Segmentation Ho Kei Cheng, Yu-Wing Tai, Chi-Keung Tang [a

The official PyTorch implementation of recent paper - SAINT: Improved Neural Networks for Tabular Data via Row Attention and Contrastive Pre-Training

This repository is the official PyTorch implementation of SAINT. Find the paper on arxiv SAINT: Improved Neural Networks for Tabular Data via Row Atte

banditml is a lightweight contextual bandit & reinforcement learning library designed to be used in production Python services.

banditml is a lightweight contextual bandit & reinforcement learning library designed to be used in production Python services. This library is developed by Bandit ML and ex-authors of Facebook's applied reinforcement learning platform, Reagent.

small collection of functions for neural networks

neurobiba other languages: RU small collection of functions for neural networks. very easy to use! Installation: pip install neurobiba See examples h

Unofficial TensorFlow implementation of Protein Interface Prediction using Graph Convolutional Networks.

[TensorFlow] Protein Interface Prediction using Graph Convolutional Networks Unofficial TensorFlow implementation of Protein Interface Prediction usin

The source code of the paper "Understanding Graph Neural Networks from Graph Signal Denoising Perspectives"

GSDN-F and GSDN-EF This repository provides a reference implementation of GSDN-F and GSDN-EF as described in the paper "Understanding Graph Neural Net

Sharpness-Aware Minimization for Efficiently Improving Generalization

Sharpness-Aware-Minimization-TensorFlow This repository provides a minimal implementation of sharpness-aware minimization (SAM) (Sharpness-Aware Minim

Pytorch implementation of MaskFlownet

MaskFlownet-Pytorch Unofficial PyTorch implementation of MaskFlownet (https://github.com/microsoft/MaskFlownet). Tested with: PyTorch 1.5.0 CUDA 10.1

Code for paper: Group-CAM: Group Score-Weighted Visual Explanations for Deep Convolutional Networks

Group-CAM By Zhang, Qinglong and Rao, Lu and Yang, Yubin [State Key Laboratory for Novel Software Technology at Nanjing University] This repo is the o

Benchmarks for semi-supervised domain generalization.

Semi-Supervised Domain Generalization This code is the official implementation of the following paper: Semi-Supervised Domain Generalization with Stoc

Unofficial PyTorch implementation of Attention Free Transformer (AFT) layers by Apple Inc.

aft-pytorch Unofficial PyTorch implementation of Attention Free Transformer's layers by Zhai, et al. [abs, pdf] from Apple Inc. Installation You can i

"SOLQ: Segmenting Objects by Learning Queries", SOLQ is an end-to-end instance segmentation framework with Transformer.

SOLQ: Segmenting Objects by Learning Queries This repository is an official implementation of the paper SOLQ: Segmenting Objects by Learning Queries.

Degree-Quant: Quantization-Aware Training for Graph Neural Networks.

Degree-Quant This repo provides a clean re-implementation of the code associated with the paper Degree-Quant: Quantization-Aware Training for Graph Ne

Subdivision-based Mesh Convolutional Networks

Subdivision-based Mesh Convolutional Networks The official implementation of SubdivNet in our paper, Subdivion-based Mesh Convolutional Networks Requi

Deep learning-based approach to discovering Granger causality networks in multivariate time series

Granger causality discovery for neural networks.

Code for the paper "How Attentive are Graph Attention Networks?"

How Attentive are Graph Attention Networks? This repository is the official implementation of How Attentive are Graph Attention Networks?. The PyTorch

Official codebase for Decision Transformer: Reinforcement Learning via Sequence Modeling.

Decision Transformer Lili Chen*, Kevin Lu*, Aravind Rajeswaran, Kimin Lee, Aditya Grover, Michael Laskin, Pieter Abbeel, Aravind Srinivas†, and Igor M

You Only 👀 One Sequence

You Only 👀 One Sequence TL;DR: We study the transferability of the vanilla ViT pre-trained on mid-sized ImageNet-1k to the more challenging COCO obje

MSG-Transformer: Exchanging Local Spatial Information by Manipulating Messenger Tokens

MSG-Transformer Official implementation of the paper MSG-Transformer: Exchanging Local Spatial Information by Manipulating Messenger Tokens, by Jiemin

This repo contains the official code and pre-trained models for the Dynamic Vision Transformer (DVT).

Dynamic-Vision-Transformer (Pytorch) This repo contains the official code and pre-trained models for the Dynamic Vision Transformer (DVT). Not All Ima

Deep Text Search is an AI-powered multilingual text search and recommendation engine with state-of-the-art transformer-based multilingual text embedding (50+ languages).

Deep Text Search - AI Based Text Search & Recommendation System Deep Text Search is an AI-powered multilingual text search and recommendation engine w

SparseML is a libraries for applying sparsification recipes to neural networks with a few lines of code, enabling faster and smaller models

SparseML is a toolkit that includes APIs, CLIs, scripts and libraries that apply state-of-the-art sparsification algorithms such as pruning and quantization to any neural network. General, recipe-driven approaches built around these algorithms enable the simplification of creating faster and smaller models for the ML performance community at large.

All course materials for the Zero to Mastery Deep Learning with TensorFlow course.

All course materials for the Zero to Mastery Deep Learning with TensorFlow course.

This is the repo for the paper `SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization'. (published in Bioinformatics'21)

SumGNN: Multi-typed Drug Interaction Prediction via Efficient Knowledge Graph Summarization This is the code for our paper ``SumGNN: Multi-typed Drug

Official code for "Mean Shift for Self-Supervised Learning"

MSF Official code for "Mean Shift for Self-Supervised Learning" Requirements Python = 3.7.6 PyTorch = 1.4 torchvision = 0.5.0 faiss-gpu = 1.6.1 In

A tensorflow implementation of GCN-LPA

GCN-LPA This repository is the implementation of GCN-LPA (arXiv): Unifying Graph Convolutional Neural Networks and Label Propagation Hongwei Wang, Jur

Implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT : Cross-Attention Multi-Scale Vision Transformer for Image Classification This is an unofficial PyTorch implementation of CrossViT: Cross-Att

This is an official implementation for "ResT: An Efficient Transformer for Visual Recognition".

ResT By Qing-Long Zhang and Yu-Bin Yang [State Key Laboratory for Novel Software Technology at Nanjing University] This repo is the official implement

A supplementary code for Editable Neural Networks, an ICLR 2020 submission.

Editable neural networks A supplementary code for Editable Neural Networks, an ICLR 2020 submission by Anton Sinitsin, Vsevolod Plokhotnyuk, Dmitry Py

NR-GAN: Noise Robust Generative Adversarial Networks

NR-GAN: Noise Robust Generative Adversarial Networks (CVPR 2020) This repository provides PyTorch implementation for noise robust GAN (NR-GAN). NR-GAN

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep

Unofficial TensorFlow implementation of the Keyword Spotting Transformer model

Keyword Spotting Transformer This is the unofficial TensorFlow implementation of the Keyword Spotting Transformer model. This model is used to train o

whm also known as wifi-heat-mapper is a Python library for benchmarking Wi-Fi networks and gather useful metrics that can be converted into meaningful easy-to-understand heatmaps.

whm also known as wifi-heat-mapper is a Python library for benchmarking Wi-Fi networks and gather useful metrics that can be converted into meaningful easy-to-understand heatmaps.

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Monitora la qualità della ricezione dei segnali radio nelle province siciliane.

FMap-server Monitora la qualità della ricezione dei segnali radio nelle province siciliane. Conversion data Frequency - StationName maps are stored in

Third party Pytorch implement of Image Processing Transformer (Pre-Trained Image Processing Transformer arXiv:2012.00364v2)

ImageProcessingTransformer Third party Pytorch implement of Image Processing Transformer (Pre-Trained Image Processing Transformer arXiv:2012.00364v2)

Official PyTorch implementation for "Mixed supervision for surface-defect detection: from weakly to fully supervised learning"

Mixed supervision for surface-defect detection: from weakly to fully supervised learning [Computers in Industry 2021] Official PyTorch implementation

Deep functional residue identification

DeepFRI Deep functional residue identification Citing @article {Gligorijevic2019, author = {Gligorijevic, Vladimir and Renfrew, P. Douglas and Koscio

Pytorch implementation of AngularGrad: A New Optimization Technique for Angular Convergence of Convolutional Neural Networks

AngularGrad Optimizer This repository contains the oficial implementation for AngularGrad: A New Optimization Technique for Angular Convergence of Con

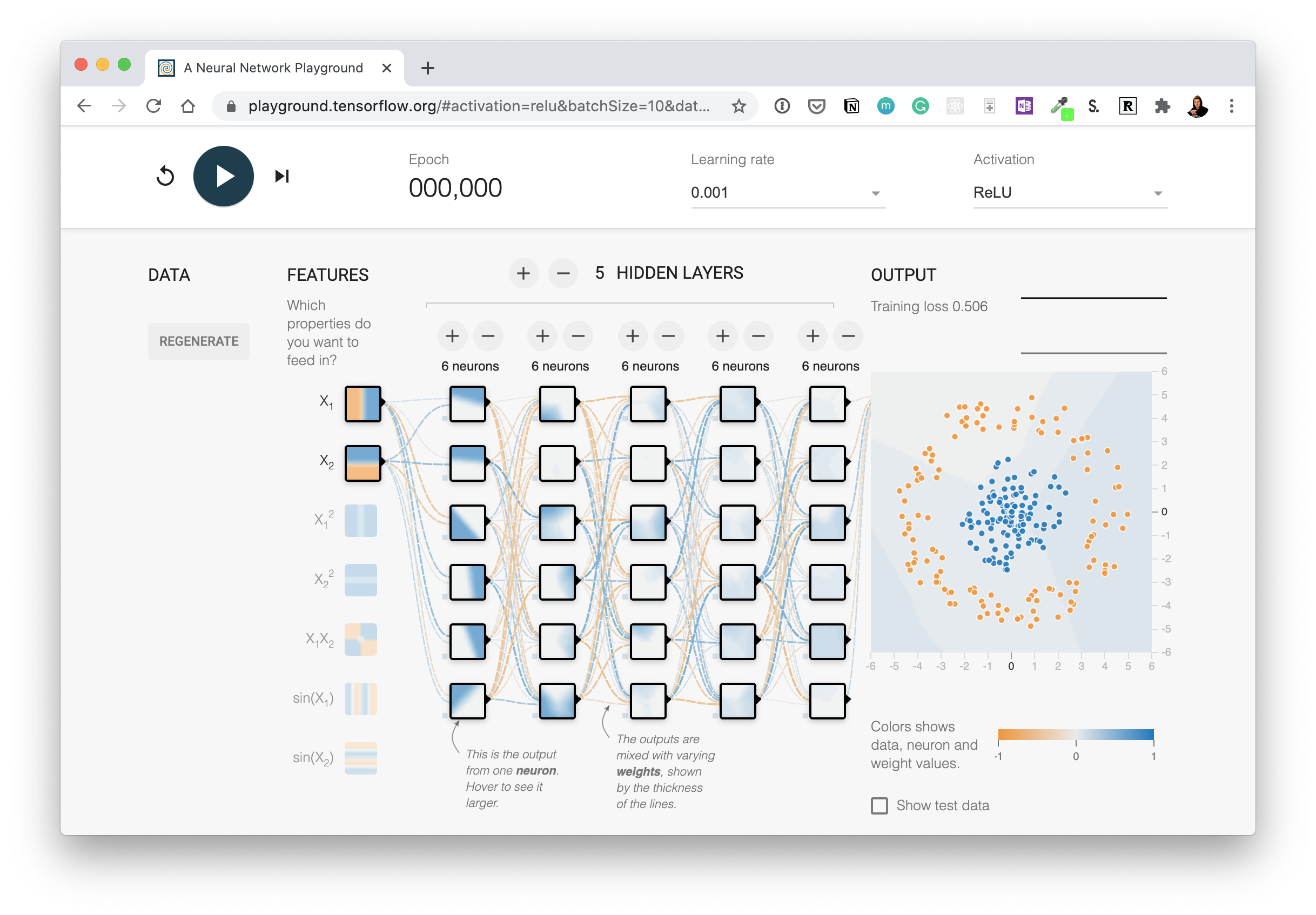

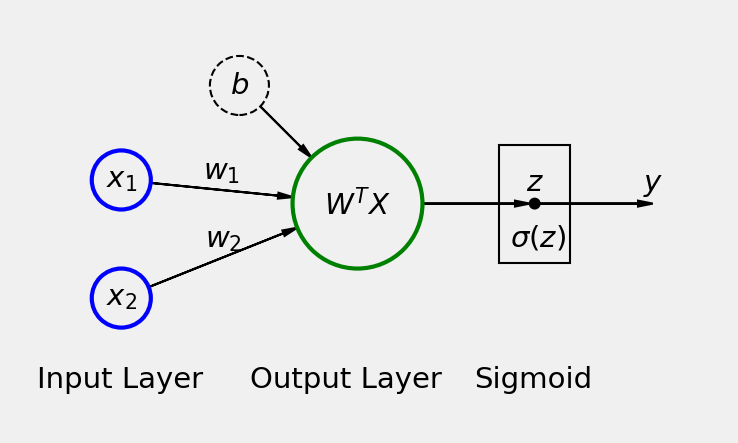

Deep Learning Visuals contains 215 unique images divided in 23 categories

Deep Learning Visuals contains 215 unique images divided in 23 categories (some images may appear in more than one category). All the images were originally published in my book "Deep Learning with PyTorch Step-by-Step: A Beginner's Guide".

This Is Advanced Version Of Old Radio Player, An Telegram Bot to Play Radio/Music in Channel or Group Voice Chats.

Telegram Radio Player V2 An Telegram Bot to Play Radio/Music in Channel or Group Voice Chats. This is also the source code of the bot which is being u

PyTorch implementation of some learning rate schedulers for deep learning researcher.

pytorch-lr-scheduler PyTorch implementation of some learning rate schedulers for deep learning researcher. Usage WarmupReduceLROnPlateauScheduler Visu

TrTr: Visual Tracking with Transformer

TrTr: Visual Tracking with Transformer We propose a novel tracker network based on a powerful attention mechanism called Transformer encoder-decoder a

PyTorch code of my ICDAR 2021 paper Vision Transformer for Fast and Efficient Scene Text Recognition (ViTSTR)

Vision Transformer for Fast and Efficient Scene Text Recognition (ICDAR 2021) ViTSTR is a simple single-stage model that uses a pre-trained Vision Tra

Code for Transformer Hawkes Process, ICML 2020.

Transformer Hawkes Process Source code for Transformer Hawkes Process (ICML 2020). Run the code Dependencies Python 3.7. Anaconda contains all the req

Implementation of Bidirectional Recurrent Independent Mechanisms (Learning to Combine Top-Down and Bottom-Up Signals in Recurrent Neural Networks with Attention over Modules)

BRIMs Bidirectional Recurrent Independent Mechanisms Implementation of the paper Learning to Combine Top-Down and Bottom-Up Signals in Recurrent Neura

Implementation of the paper "Shapley Explanation Networks"

Shapley Explanation Networks Implementation of the paper "Shapley Explanation Networks" at ICLR 2021. Note that this repo heavily uses the experimenta

We will release the code of "ConTNet: Why not use convolution and transformer at the same time?" in this repo

ConTNet Introduction ConTNet (Convlution-Tranformer Network) is proposed mainly in response to the following two issues: (1) ConvNets lack a large rec

This project demonstrates the use of neural networks and computer vision to create a classifier that interprets the Brazilian Sign Language.

LIBRAS-Image-Classifier This project demonstrates the use of neural networks and computer vision to create a classifier that interprets the Brazilian

Face Transformer for Recognition

Face-Transformer This is the code of Face Transformer for Recognition (https://arxiv.org/abs/2103.14803v2). Recently there has been great interests of

Repo for "Event-Stream Representation for Human Gaits Identification Using Deep Neural Networks"

Summary This is the code for the paper Event-Stream Representation for Human Gaits Identification Using Deep Neural Networks by Yanxiang Wang, Xian Zh

Pytorch code for ICRA'21 paper: "Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation"

Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation This repository is the pytorch implementation of our paper: Hierarchical Cr

SuperSDR: multiplatform KiwiSDR + CAT transceiver integrator

SuperSDR SuperSDR integrates a realtime spectrum waterfall and audio receive from any KiwiSDR around the world, together with a local (or remote) cont

Expressive Power of Invariant and Equivaraint Graph Neural Networks (ICLR 2021)

Expressive Power of Invariant and Equivaraint Graph Neural Networks In this repository, we show how to use powerful GNN (2-FGNN) to solve a graph alig

PyTorch implementation of the paper Deep Networks from the Principle of Rate Reduction

Deep Networks from the Principle of Rate Reduction This repository is the official PyTorch implementation of the paper Deep Networks from the Principl

A look-ahead multi-entity Transformer for modeling coordinated agents.

baller2vec++ This is the repository for the paper: Michael A. Alcorn and Anh Nguyen. baller2vec++: A Look-Ahead Multi-Entity Transformer For Modeling