197 Repositories

Python shared-memory Libraries

Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memories using approximate nearest neighbors, in Pytorch

Memorizing Transformers - Pytorch Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memori

Code for CVPR 2022 paper "Bailando: 3D dance generation via Actor-Critic GPT with Choreographic Memory"

Bailando Code for CVPR 2022 (oral) paper "Bailando: 3D dance generation via Actor-Critic GPT with Choreographic Memory" [Paper] | [Project Page] | [Vi

Shared, streaming Python dict

UltraDict Sychronized, streaming Python dictionary that uses shared memory as a backend Warning: This is an early hack. There are only few unit tests

Easy Parallel Library (EPL) is a general and efficient deep learning framework for distributed model training.

English | 简体中文 Easy Parallel Library Overview Easy Parallel Library (EPL) is a general and efficient library for distributed model training. Usability

Winner system (DAMO-NLP) of SemEval 2022 MultiCoNER shared task over 10 out of 13 tracks.

KB-NER: a Knowledge-based System for Multilingual Complex Named Entity Recognition The code is for the winner system (DAMO-NLP) of SemEval 2022 MultiC

Lowest memory consumption and second shortest runtime in NTIRE 2022 challenge on Efficient Super-Resolution

FMEN Lowest memory consumption and second shortest runtime in NTIRE 2022 on Efficient Super-Resolution. Our paper: Fast and Memory-Efficient Network T

Official codebase for ICLR oral paper Unsupervised Vision-Language Grammar Induction with Shared Structure Modeling

CLIORA This is the official codebase for ICLR oral paper: Unsupervised Vision-Language Grammar Induction with Shared Structure Modeling. We introduce

Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch

MeMOT - Pytorch (wip) Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch. This paper is just one in a line of work, but importan

Implementation of a memory efficient multi-head attention as proposed in the paper, "Self-attention Does Not Need O(n²) Memory"

Memory Efficient Attention Pytorch Implementation of a memory efficient multi-head attention as proposed in the paper, Self-attention Does Not Need O(

K2HASH Python library - NoSQL Key Value Store(KVS) library

k2hash_python Overview k2hash_python is an official python driver for k2hash. Install Firstly you must install the k2hash shared library: curl -o- htt

Episodic-memory - Ego4D Episodic Memory Benchmark

Ego4D Episodic Memory Benchmark EGO4D is the world's largest egocentric (first p

Django-shared-app-isolated-databases-example - Django - Shared App & Isolated Databases

Django - Shared App & Isolated Databases An app that demonstrates the implementa

CVE-2022-22536 - SAP memory pipes(MPI) desynchronization vulnerability CVE-2022-22536

CVE-2022-22536 SAP memory pipes desynchronization vulnerability(MPI) CVE-2022-22

This repository contains the code for: RerrFact model for SciVer shared task

RerrFact This repository contains the code for: RerrFact model for SciVer shared task. Setup for Inference 1. Download SciFact database Download the S

Memory Defense: More Robust Classificationvia a Memory-Masking Autoencoder

Memory Defense: More Robust Classificationvia a Memory-Masking Autoencoder Authors: - Eashan Adhikarla - Dan Luo - Dr. Brian D. Davison Abstract Many

Easily benchmark PyTorch model FLOPs, latency, throughput, max allocated memory and energy consumption

⏱ pytorch-benchmark Easily benchmark model inference FLOPs, latency, throughput, max allocated memory and energy consumption Install pip install pytor

FewBit — a library for memory efficient training of large neural networks

FewBit FewBit — a library for memory efficient training of large neural networks. Its efficiency originates from storage optimizations applied to back

This speeds up PyCharm's package index processes and avoids CPU & memory overloading

This speeds up PyCharm's package index processes and avoids CPU & memory overloading

Scripts to convert the Ted-MDB corpora into the formats for DISRPT shared task and the converted corpora

Scripts to convert the Ted-MDB corpora into the formats for DISRPT shared task and the converted corpora.

Decorators for maximizing memory utilization with PyTorch & CUDA

torch-max-mem This package provides decorators for memory utilization maximization with PyTorch and CUDA by starting with a maximum parameter size and

Rip Raw - a small tool to analyse the memory of compromised Linux systems

Rip Raw Rip Raw is a small tool to analyse the memory of compromised Linux systems. It is similar in purpose to Bulk Extractor, but particularly focus

The Dual Memory is build from a simple CNN for the deep memory and Linear Regression fro the fast Memory

Simple-DMA a simple Dual Memory Architecture for classifications. based on the paper Dual-Memory Deep Learning Architectures for Lifelong Learning of

Generating Radiology Reports via Memory-driven Transformer

R2Gen This is the implementation of Generating Radiology Reports via Memory-driven Transformer at EMNLP-2020. Citations If you use or extend our work,

PyTorchMemTracer - Depict GPU memory footprint during DNN training of PyTorch

A Memory Tracer For PyTorch OOM is a nightmare for PyTorch users. However, most

This project generates news headlines using a Long Short-Term Memory (LSTM) neural network.

News Headlines Generator bunnysaini/Generate-Headlines Goal This project aims to generate news headlines using a Long Short-Term Memory (LSTM) neural

GPU implementation of $k$-Nearest Neighbors and Shared-Nearest Neighbors

GPU implementation of kNN and SNN GPU implementation of $k$-Nearest Neighbors and Shared-Nearest Neighbors Supported by numba cuda and faiss library E

Visual Python and C++ nanosecond profiler, logger, tests enabler

Look into Palanteer and get an omniscient view of your program Palanteer is a set of lean and efficient tools to improve the quality of software, for

Holographic Declarative Memory for Python ACT-R

HDM This is the repository for the Holographic Declarative Memory (HDM) module for Python ACT-R. This repository contains: documentation: a paper, con

Implementation of Memory-Compressed Attention, from the paper "Generating Wikipedia By Summarizing Long Sequences"

Memory Compressed Attention Implementation of the Self-Attention layer of the proposed Memory-Compressed Attention, in Pytorch. This repository offers

The accompanying code for the paper "GMAT: Global Memory Augmentation for Transformers" (Ankit Gupta and Jonathan Berant).

GMAT: Global Memory Augmentation for Transformers This repository contains the accompanying code for the paper: "GMAT: Global Memory Augmentation for

Implementation of Memformer, a Memory-augmented Transformer, in Pytorch

Memformer - Pytorch Implementation of Memformer, a Memory-augmented Transformer, in Pytorch. It includes memory slots, which are updated with attentio

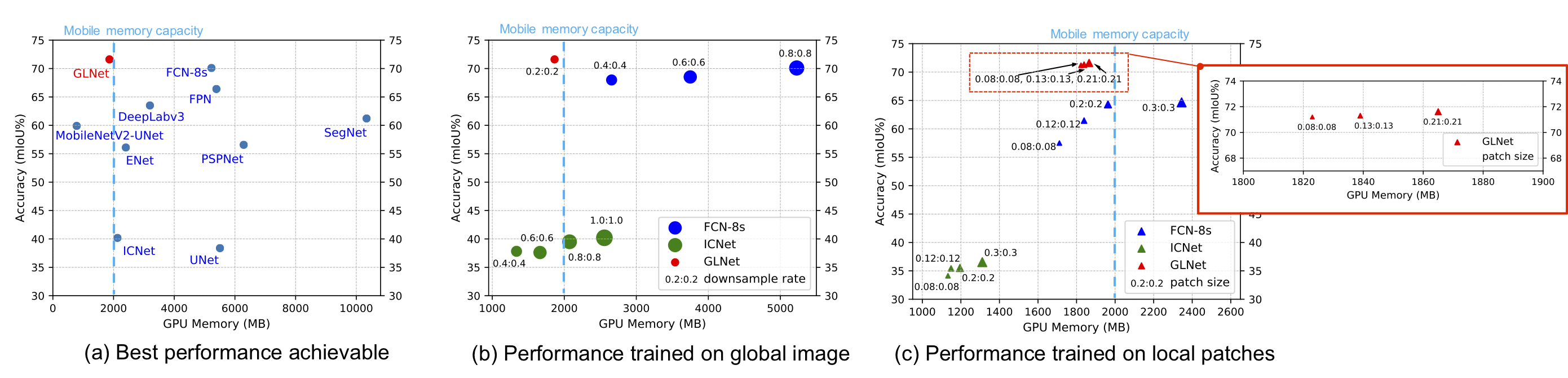

GLNet for Memory-Efficient Segmentation of Ultra-High Resolution Images

GLNet for Memory-Efficient Segmentation of Ultra-High Resolution Images Collaborative Global-Local Networks for Memory-Efficient Segmentation of Ultra-

Semi-Supervised Semantic Segmentation with Pixel-Level Contrastive Learning from a Class-wise Memory Bank

This repository provides the official code for replicating experiments from the paper: Semi-Supervised Semantic Segmentation with Pixel-Level Contrast

Implements a polyglot REPL which supports multiple languages and shared meta-object protocol scope between REPLs.

MetaCall Polyglot REPL Description This repository implements a Polyglot REPL which shares the state of the meta-object protocol between the REPLs. Us

A wrapper around ffmpeg to make it work in a concurrent and memory-buffered fashion.

Media Fixer Have you ever had a film or TV show that your TV wasn't able to play its audio? Well this program is for you. Media Fixer is a program whi

Sign Language Recognition service utilizing a deep learning model with Long Short-Term Memory to perform sign language recognition.

Sign Language Recognition Service This is a Sign Language Recognition service utilizing a deep learning model with Long Short-Term Memory to perform s

Import Python modules from dicts and JSON formatted documents.

Paker Paker is module for importing Python packages/modules from dictionaries and JSON formatted documents. It was inspired by httpimporter. Important

Module for remote in-memory Python package/module loading through HTTP/S

httpimport Python's missing feature! The feature has been suggested in Python Mailing List Remote, in-memory Python package/module importing through H

MIMIC Code Repository: Code shared by the research community for the MIMIC-III database

MIMIC Code Repository The MIMIC Code Repository is intended to be a central hub for sharing, refining, and reusing code used for analysis of the MIMIC

Scalene: a high-performance, high-precision CPU, GPU, and memory profiler for Python

Scalene: a high-performance CPU, GPU and memory profiler for Python by Emery Berger, Sam Stern, and Juan Altmayer Pizzorno. Scalene community Slack Ab

Content shared at DS-OX Meetup

Streamlit-Projects Streamlit projects available in this repo: An introduction to Streamlit presented at DS-OX (Feb 26, 2020) meetup Streamlit 101 - Ja

Meshed-Memory Transformer for Image Captioning. CVPR 2020

M²: Meshed-Memory Transformer This repository contains the reference code for the paper Meshed-Memory Transformer for Image Captioning (CVPR 2020). Pl

PyTorch code for MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning

MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning PyTorch code for our ACL 2020 paper "MART: Memory-Augmented Recur

Predictive Maintenance LSTM

Predictive-Maintenance-LSTM - Predictive maintenance study for Complex case study, we've obtained failure causes by operational error and more deeply by design mistakes.

Python function to construct an ODS spreadsheet on the fly - without having to store the entire file in memory or disk

stream-write-ods Python function to construct an ODS (OpenDocument Spreadsheet) on the fly - without having to store the entire file in memory or disk

Memory efficient transducer loss computation

Introduction This project implements the optimization techniques proposed in Improving RNN Transducer Modeling for End-to-End Speech Recognition to re

Memory-efficient optimum einsum using opt_einsum planning and PyTorch kernels.

opt-einsum-torch There have been many implementations of Einstein's summation. numpy's numpy.einsum is the least efficient one as it only runs in sing

Python function to construct a ZIP archive with on the fly - without having to store the entire ZIP in memory or disk

Python function to construct a ZIP archive with on the fly - without having to store the entire ZIP in memory or disk

Robust, highly tunable and easy-to-integrate in-memory cache solution written in pure Python, with no dependencies.

Omoide Cache Caching doesn't need to be hard anymore. With just a few lines of code Omoide Cache will instantly bring your Python services to the next

A small site to list shared directories

Nebula Server Directories This site can be used to list folder and subdirectories in your server : Python It's required to have Python 3.8 or more ins

Implementation of Memory-Efficient Neural Networks with Multi-Level Generation, ICCV 2021

Memory-Efficient Multi-Level In-Situ Generation (MLG) By Jiaqi Gu, Hanqing Zhu, Chenghao Feng, Mingjie Liu, Zixuan Jiang, Ray T. Chen and David Z. Pan

Attention for PyTorch with Linear Memory Footprint

Attention for PyTorch with Linear Memory Footprint Unofficially implements https://arxiv.org/abs/2112.05682 to get Linear Memory Cost on Attention (+

This script allows you to retrieve all functions / variables names of a Python code, and the variables values.

Memory Extractor This script allows you to retrieve all functions / variables names of a Python code, and the variables values. How to use it ? The si

A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory"

memory_efficient_attention.pytorch A human-readable PyTorch implementation of "Self-attention Does Not Need O(n^2) Memory" (Rabe&Staats'21). def effic

Python Implementation of Scalable In-Memory Updatable Bitmap Indexing

PyUpBit CS490 Large Scale Data Analytics — Implementation of Updatable Compressed Bitmap Indexing Paper Table of Contents About The Project Usage Cont

Simple embedded in memory json database

dbj dbj is a simple embedded in memory json database. It is easy to use, fast and has a simple query language. The code is fully documented, tested an

A low-impact profiler to figure out how much memory each task in Dask is using

dask-memusage If you're using Dask with tasks that use a lot of memory, RAM is your bottleneck for parallelism. That means you want to know how much m

![[NeurIPS-2020] Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID.](https://github.com/yxgeee/SpCL/raw/master/figs/framework.png)

[NeurIPS-2020] Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID.

Self-paced Contrastive Learning (SpCL) The official repository for Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID

Memory game in Python

Concentration - Memory Game Concentration is a memory game written in Python, inspired by memory-game. Description As stated in the introduction of th

Article Reranking by Memory-enhanced Key Sentence Matching for Detecting Previously Fact-checked Claims.

MTM This is the official repository of the paper: Article Reranking by Memory-enhanced Key Sentence Matching for Detecting Previously Fact-checked Cla

Library for Memory Trace Statistics in Python

Memory Search Library for Memory Trace Statistics in Python The library uses tracemalloc as a core module, which is why it is only available for Pytho

A universal memory dumper using Frida

Fridump Fridump (v0.1) is an open source memory dumping tool, primarily aimed to penetration testers and developers. Fridump is using the Frida framew

Memory Efficient Attention (O(sqrt(n)) for Jax and PyTorch

Memory Efficient Attention This is unofficial implementation of Self-attention Does Not Need O(n^2) Memory for Jax and PyTorch. Implementation is almo

BLEND: A Fast, Memory-Efficient, and Accurate Mechanism to Find Fuzzy Seed Matches

BLEND is a mechanism that can efficiently find fuzzy seed matches between sequences to significantly improve the performance and accuracy while reducing the memory space usage of two important applications: 1) finding overlapping reads and 2) read mapping. Described by Firtina et al.

A Python dictionary implementation designed to act as an in-memory cache for FaaS environments

faas-cache-dict A Python dictionary implementation designed to act as an in-memory cache for FaaS environments. Formally you would describe this a mem

Collection of common code that's shared among different research projects in FAIR computer vision team.

fvcore fvcore is a light-weight core library that provides the most common and essential functionality shared in various computer vision frameworks de

Memory-Augmented Model Predictive Control

Memory-Augmented Model Predictive Control This repository hosts the source code for the journal article "Composing MPC with LQR and Neural Networks fo

Asyncio cache manager for redis, memcached and memory

aiocache Asyncio cache supporting multiple backends (memory, redis and memcached). This library aims for simplicity over specialization. All caches co

Easily share folders between VMs.

This package aims to solve the problem of inter-VM file sharing (rather than manual copying) by allowing a VM to mount folders from any other VM's file system (or mounted network shares).

In-memory Graph Database and Knowledge Graph with Natural Language Interface, compatible with Pandas

CogniPy for Pandas - In-memory Graph Database and Knowledge Graph with Natural Language Interface Whats in the box Reasoning, exploration of RDF/OWL,

Official implementation of "A Shared Representation for Photorealistic Driving Simulators" in PyTorch.

A Shared Representation for Photorealistic Driving Simulators The official code for the paper: "A Shared Representation for Photorealistic Driving Sim

Official PyTorch implementation of RIO

Image-Level or Object-Level? A Tale of Two Resampling Strategies for Long-Tailed Detection Figure 1: Our proposed Resampling at image-level and obect-

End-To-End Memory Network using Tensorflow

MemN2N Implementation of End-To-End Memory Networks with sklearn-like interface using Tensorflow. Tasks are from the bAbl dataset. Get Started git clo

Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch

N-Grammer - Pytorch Implementation of N-Grammer, augmenting Transformers with latent n-grams, in Pytorch Install $ pip install n-grammer-pytorch Usage

This project is created to visualize the system statistics such as memory usage, CPU usage, memory accessible by process and much more using Kibana Dashboard with Elasticsearch.

System Stats Visualizer This project is created to visualize the system statistics such as memory usage, CPU usage, memory accessible by process and m

End-2-end speech synthesis with recurrent neural networks

Introduction New: Interactive demo using Google Colaboratory can be found here TTS-Cube is an end-2-end speech synthesis system that provides a full p

Train Dense Passage Retriever (DPR) with a single GPU

Gradient Cached Dense Passage Retrieval Gradient Cached Dense Passage Retrieval (GC-DPR) - is an extension of the original DPR library. We introduce G

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

PyTorch implementation of the ACL, 2021 paper Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks.

Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks This repo contains the PyTorch implementation of the ACL, 2021 pa

A library for low-memory inferencing in PyTorch.

Pylomin Pylomin (PYtorch LOw-Memory INference) is a library for low-memory inferencing in PyTorch. Installation ... Usage For example, the following c

A simple, lightweight Discord bot running with only 512 MB memory on Heroku

Haruka This used to be a music bot, but people keep using it for NSFW content. Can't everyone be less horny? Bot commands See the built-in help comman

Memory tests solver with using OpenCV

Human Benchmark project This project is OpenCV based programs which are puzzle solvers for 7 different games for https://humanbenchmark.com/. made as

Long Expressive Memory (LEM)

Long Expressive Memory for Sequence Modeling This repository contains the implementation to reproduce the numerical experiments of the paper Long Expr

Prevent `CUDA error: out of memory` in just 1 line of code.

🐨 Koila Koila solves CUDA error: out of memory error painlessly. Fix it with just one line of code, and forget it. 🚀 Features 🙅 Prevents CUDA error

Shared utility scripts for AI for Earth projects and team members

Overview Shared utilities developed by the Microsoft AI for Earth team The general convention in this repo is that users who want to consume these uti

![[IEEE Transactions on Computational Imaging] Self-Gated Memory Recurrent Network for Efficient Scalable HDR Deghosting](https://github.com/Susmit-A/HDRRNN/raw/master/github_images/overview.png)

[IEEE Transactions on Computational Imaging] Self-Gated Memory Recurrent Network for Efficient Scalable HDR Deghosting

Few-shot Deep HDR Deghosting This repository contains code and pretrained models for our paper: Self-Gated Memory Recurrent Network for Efficient Scal

Our implementation used for the MICCAI 2021 FLARE Challenge titled 'Efficient Multi-Organ Segmentation Using SpatialConfiguartion-Net with Low GPU Memory Requirements'.

Efficient Multi-Organ Segmentation Using SpatialConfiguartion-Net with Low GPU Memory Requirements Our implementation used for the MICCAI 2021 FLARE C

Source code for CsiNet and CRNet using Fully Connected Layer-Shared feedback architecture.

FCS-applications Source code for CsiNet and CRNet using the Fully Connected Layer-Shared feedback architecture. Introduction This repository contains

Turdshovel is an interactive CLI tool that allows users to dump objects from .NET memory dumps

Turdshovel Description Turdshovel is an interactive CLI tool that allows users to dump objects from .NET memory dumps without having to fully understa

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for Trans

A fast, efficient universal vector embedding utility package.

Magnitude: a fast, simple vector embedding utility library A feature-packed Python package and vector storage file format for utilizing vector embeddi

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

Mesa: A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for

This repo contains implementation of different architectures for emotion recognition in conversations.

Emotion Recognition in Conversations Updates 🔥 🔥 🔥 Date Announcements 03/08/2021 🎆 🎆 We have released a new dataset M2H2: A Multimodal Multiparty

ZipFly is a zip archive generator based on zipfile.py

ZipFly is a zip archive generator based on zipfile.py. It was created by Buzon.io to generate very large ZIP archives for immediate sending out to clients, or for writing large ZIP archives without memory inflation.

Like htop (CPU and memory usage), but for your case LEDs. 😄

Like htop (CPU and memory usage), but for your case LEDs. 😄

InvTorch: memory-efficient models with invertible functions

InvTorch: Memory-Efficient Invertible Functions This module extends the functionality of torch.utils.checkpoint.checkpoint to work with invertible fun

DrWhy is the collection of tools for eXplainable AI (XAI). It's based on shared principles and simple grammar for exploration, explanation and visualisation of predictive models.

Responsible Machine Learning With Great Power Comes Great Responsibility. Voltaire (well, maybe) How to develop machine learning models in a responsib

Code for the paper "Attention Approximates Sparse Distributed Memory"

Attention Approximates Sparse Distributed Memory - Codebase This is all of the code used to run analyses in the paper "Attention Approximates Sparse D

Demo of using DataLoader to prevent out of memory

Demo of using DataLoader to prevent out of memory

A customisable game where you have to quickly click on black tiles in order of appearance while avoiding clicking on white squares.

W.I.P-Aim-Memory-Game A customisable game where you have to quickly click on black tiles in order of appearance while avoiding clicking on white squar