1448 Repositories

Python tensorflow-training Libraries

Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow.

Generative Models Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow. Also present here are RBM and Helmholtz Machine. Note: Gen

An implementation of the AlphaZero algorithm for Gomoku (also called Gobang or Five in a Row)

AlphaZero-Gomoku This is an implementation of the AlphaZero algorithm for playing the simple board game Gomoku (also called Gobang or Five in a Row) f

Compare outputs between layers written in Tensorflow and layers written in Pytorch

Compare outputs of Wasserstein GANs between TensorFlow vs Pytorch This is our testing module for the implementation of improved WGAN in Pytorch Prereq

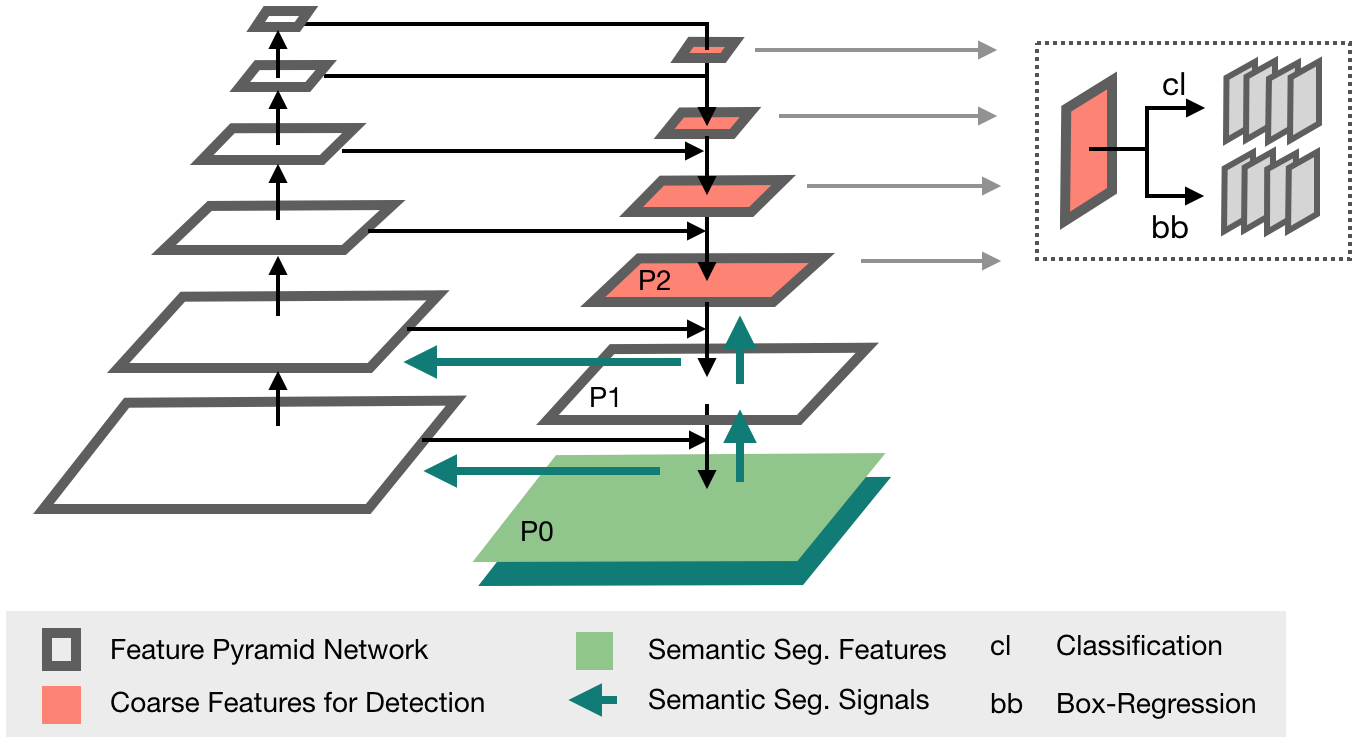

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

The Medical Detection Toolkit contains 2D + 3D implementations of prevalent object detectors such as Mask R-CNN, Retina Net, Retina U-Net, as well as a training and inference framework focused on dealing with medical images.

🛠 All-in-one web-based IDE specialized for machine learning and data science.

All-in-one web-based development environment for machine learning Getting Started • Features & Screenshots • Support • Report a Bug • FAQ • Known Issu

HyperPose is a library for building high-performance custom pose estimation applications.

HyperPose is a library for building high-performance custom pose estimation applications.

RetinaFace: Deep Face Detection Library in TensorFlow for Python

RetinaFace is a deep learning based cutting-edge facial detector for Python coming with facial landmarks.

Deep Learning and Logical Reasoning from Data and Knowledge

Logic Tensor Networks (LTN) Logic Tensor Network (LTN) is a neurosymbolic framework that supports querying, learning and reasoning with both rich data

Piotr - IoT firmware emulation instrumentation for training and research

Piotr: Pythonic IoT exploitation and Research Introduction to Piotr Piotr is an emulation helper for Qemu that provides a convenient way to create, sh

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

WAGMA-SGD is a decentralized asynchronous SGD for distributed deep learning training based on model averaging.

WAGMA-SGD is a decentralized asynchronous SGD based on wait-avoiding group model averaging. The synchronization is relaxed by making the collectives externally-triggerable, namely, a collective can be initiated without requiring that all the processes enter it. It partially reduces the data within non-overlapping groups of process, improving the parallel scalability.

Bagua is a flexible and performant distributed training algorithm development framework.

Bagua is a flexible and performant distributed training algorithm development framework.

Heimdall watchtower automatically sends you emails to notify you of the latest progress of your deep learning programs.

This software automatically sends you emails to notify you of the latest progress of your deep learning programs.

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Code for the paper "Balancing Training for Multilingual Neural Machine Translation, ACL 2020"

Balancing Training for Multilingual Neural Machine Translation Implementation of the paper Balancing Training for Multilingual Neural Machine Translat

Shared code for training sentence embeddings with Flax / JAX

flax-sentence-embeddings This repository will be used to share code for the Flax / JAX community event to train sentence embeddings on 1B+ training pa

Source code for the paper "PLOME: Pre-training with Misspelled Knowledge for Chinese Spelling Correction" in ACL2021

PLOME:Pre-training with Misspelled Knowledge for Chinese Spelling Correction (ACL2021) This repository provides the code and data of the work in ACL20

This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code for training a DPR model then continuing training with RAG.

KGI (Knowledge Graph Induction) for slot filling This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code fo

An official TensorFlow implementation of “CLCC: Contrastive Learning for Color Constancy” accepted at CVPR 2021.

CLCC: Contrastive Learning for Color Constancy (CVPR 2021) Yi-Chen Lo*, Chia-Che Chang*, Hsuan-Chao Chiu, Yu-Hao Huang, Chia-Ping Chen, Yu-Lin Chang,

Official code of paper "PGT: A Progressive Method for Training Models on Long Videos" on CVPR2021

PGT Code for paper PGT: A Progressive Method for Training Models on Long Videos. Install Run pip install -r requirements.txt. Run python setup.py buil

This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transformations at CVPR'21. According to some product reasons, we are not planning to release the training/testing codes and models. However, we will release the dataset and the scripts to prepare the dataset.

TransFill-Reference-Inpainting This is the official repo for TransFill: Reference-guided Image Inpainting by Merging Multiple Color and Spatial Transf

Unofficial implementation of "Swin Transformer: Hierarchical Vision Transformer using Shifted Windows" (https://arxiv.org/abs/2103.14030)

Swin-Transformer-Tensorflow A direct translation of the official PyTorch implementation of "Swin Transformer: Hierarchical Vision Transformer using Sh

The source codes for ACL 2021 paper 'BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data'

BoB: BERT Over BERT for Training Persona-based Dialogue Models from Limited Personalized Data This repository provides the implementation details for

YoloV5 implemented by TensorFlow2 , with support for training, evaluation and inference.

Efficient implementation of YOLOV5 in TensorFlow2

Complete U-net Implementation with keras

U Net Lowered with Keras Complete U-net Implementation with keras Original Paper Link : https://arxiv.org/abs/1505.04597 Special Implementations : The

Attention mechanism with MNIST dataset

[TensorFlow] Attention mechanism with MNIST dataset Usage $ python run.py Result Training Loss graph. Test Each figure shows input digit, attention ma

Streamlit component for TensorBoard, TensorFlow's visualization toolkit

streamlit-tensorboard This is a work-in-progress, providing a function to embed TensorBoard, TensorFlow's visualization toolkit, in Streamlit apps. In

Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis (SV2TTS)

This repository is an implementation of Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis (SV2TTS) with a vocoder that works in real-time. Feel free to check my thesis if you're curious or if you're looking for info I haven't documented. Mostly I would recommend giving a quick look to the figures beyond the introduction.

Orthogonal Over-Parameterized Training

The inductive bias of a neural network is largely determined by the architecture and the training algorithm. To achieve good generalization, how to effectively train a neural network is of great importance. We propose a novel orthogonal over-parameterized training (OPT) framework that can provably minimize the hyperspherical energy which characterizes the diversity of neurons on a hypersphere. See our previous work -- MHE for an in-depth introduction.

quantize aware training package for NCNN on pytorch

ncnnqat ncnnqat is a quantize aware training package for NCNN on pytorch. Table of Contents ncnnqat Table of Contents Installation Usage Code Examples

TAP: Text-Aware Pre-training for Text-VQA and Text-Caption, CVPR 2021 (Oral)

TAP: Text-Aware Pre-training TAP: Text-Aware Pre-training for Text-VQA and Text-Caption by Zhengyuan Yang, Yijuan Lu, Jianfeng Wang, Xi Yin, Dinei Flo

Code for the paper One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation, CVPR 2021.

One Thing One Click One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation (CVPR2021) Code for the paper One Thi

Tensorflow implementation of the paper "HumanGPS: Geodesic PreServing Feature for Dense Human Correspondences", CVPR 2021.

HumanGPS: Geodesic PreServing Feature for Dense Human Correspondences Tensorflow implementation of the paper "HumanGPS: Geodesic PreServing Feature fo

CVPR 2021 Official Pytorch Code for UC2: Universal Cross-lingual Cross-modal Vision-and-Language Pre-training

UC2 UC2: Universal Cross-lingual Cross-modal Vision-and-Language Pre-training Mingyang Zhou, Luowei Zhou, Shuohang Wang, Yu Cheng, Linjie Li, Zhou Yu,

Unconstrained Text Detection with Box Supervisionand Dynamic Self-Training

SelfText Beyond Polygon: Unconstrained Text Detection with Box Supervisionand Dynamic Self-Training Introduction This is a PyTorch implementation of "

SmartSim makes it easier to use common Machine Learning (ML) libraries like PyTorch and TensorFlow

SmartSim makes it easier to use common Machine Learning (ML) libraries like PyTorch and TensorFlow, in High Performance Computing (HPC) simulations and workloads.

How Do Adam and Training Strategies Help BNNs Optimization? In ICML 2021.

AdamBNN This is the pytorch implementation of our paper "How Do Adam and Training Strategies Help BNNs Optimization?", published in ICML 2021. In this

VIMPAC: Video Pre-Training via Masked Token Prediction and Contrastive Learning

This is a release of our VIMPAC paper to illustrate the implementations. The pretrained checkpoints and scripts will be soon open-sourced in HuggingFace transformers.

Secure Distributed Training at Scale

Secure Distributed Training at Scale This repository contains the implementation of experiments from the paper "Secure Distributed Training at Scale"

Tutorial to set up TensorFlow Object Detection API on the Raspberry Pi

A tutorial showing how to set up TensorFlow's Object Detection API on the Raspberry Pi

Python code for "Machine learning: a probabilistic perspective" (2nd edition)

Python code for "Machine learning: a probabilistic perspective" (2nd edition)

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

DeepLab2: A TensorFlow Library for Deep Labeling

DeepLab2 is a TensorFlow library for deep labeling, aiming to provide a unified and state-of-the-art TensorFlow codebase for dense pixel labeling tasks.

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation models. It contains 17 different amateur subjects performing 30 sports-related actions each, for a total of 510 action clips.

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation mode

M-LSDを用いて四角形を検出し、射影変換を行うサンプルプログラム

M-LSD-warpPerspective-Example M-LSDを用いて四角形を検出し、射影変換を行うサンプルプログラムです。 Requirements OpenCV 3.4.2 or Later tensorflow 2.4.1 or Later Usage 実行方法は以下です。 pytho

🔮 Execution time predictions for deep neural network training iterations across different GPUs.

Habitat: A Runtime-Based Computational Performance Predictor for Deep Neural Network Training Habitat is a tool that predicts a deep neural network's

Tensorflow implementation of Swin Transformer model.

Swin Transformer (Tensorflow) Tensorflow reimplementation of Swin Transformer model. Based on Official Pytorch implementation. Requirements tensorflow

Tensorflow python implementation of "Learning High Fidelity Depths of Dressed Humans by Watching Social Media Dance Videos"

Learning High Fidelity Depths of Dressed Humans by Watching Social Media Dance Videos This repository is the official tensorflow python implementation

2021海华AI挑战赛·中文阅读理解·技术组·第三名

文字是人类用以记录和表达的最基本工具,也是信息传播的重要媒介。透过文字与符号,我们可以追寻人类文明的起源,可以传播知识与经验,读懂文字是认识与了解的第一步。对于人工智能而言,它的核心问题之一就是认知,而认知的核心则是语义理解。

Build tensorflow keras model pipelines in a single line of code. Created by Ram Seshadri. Collaborators welcome. Permission granted upon request.

deep_autoviml Build keras pipelines and models in a single line of code! Table of Contents Motivation How it works Technology Install Usage API Image

Tensorflow implementation of MIRNet for Low-light image enhancement

MIRNet Tensorflow implementation of the MIRNet architecture as proposed by Learning Enriched Features for Real Image Restoration and Enhancement. Lanu

Aerial Imagery dataset for fire detection: classification and segmentation (Unmanned Aerial Vehicle (UAV))

Aerial Imagery dataset for fire detection: classification and segmentation using Unmanned Aerial Vehicle (UAV) Title FLAME (Fire Luminosity Airborne-b

Load What You Need: Smaller Multilingual Transformers for Pytorch and TensorFlow 2.0.

Smaller Multilingual Transformers This repository shares smaller versions of multilingual transformers that keep the same representations offered by t

The official PyTorch implementation of recent paper - SAINT: Improved Neural Networks for Tabular Data via Row Attention and Contrastive Pre-Training

This repository is the official PyTorch implementation of SAINT. Find the paper on arxiv SAINT: Improved Neural Networks for Tabular Data via Row Atte

验证码识别 深度学习 tensorflow 神经网络

captcha_tf2 验证码识别 深度学习 tensorflow 神经网络 使用卷积神经网络,对字符,数字类型验证码进行识别,tensorflow使用2.0以上 目前项目还在更新中,诸多bug,欢迎提出issue和PR, 希望和你一起共同完善项目。 实例demo 训练过程 优化器选择: Adam

KSAI Lite is a deep learning inference framework of kingsoft, based on tensorflow lite

KSAI Lite is a deep learning inference framework of kingsoft, based on tensorflow lite

Object tracking implemented with YOLOv4, DeepSort, and TensorFlow.

Object tracking implemented with YOLOv4, DeepSort, and TensorFlow. YOLOv4 is a state of the art algorithm that uses deep convolutional neural networks to perform object detections. We can take the output of YOLOv4 feed these object detections into Deep SORT (Simple Online and Realtime Tracking with a Deep Association Metric) in order to create a highly accurate object tracker.

Handwritten Text Recognition (HTR) system implemented with TensorFlow (TF) and trained on the IAM off-line HTR dataset. This Neural Network (NN) model recognizes the text contained in the images of segmented words.

Handwritten-Text-Recognition Handwritten Text Recognition (HTR) system implemented with TensorFlow (TF) and trained on the IAM off-line HTR dataset. T

Deep Learning Package based on TensorFlow

White-Box-Layer is a Python module for deep learning built on top of TensorFlow and is distributed under the MIT license. The project was started in M

Unofficial TensorFlow implementation of Protein Interface Prediction using Graph Convolutional Networks.

[TensorFlow] Protein Interface Prediction using Graph Convolutional Networks Unofficial TensorFlow implementation of Protein Interface Prediction usin

Sharpness-Aware Minimization for Efficiently Improving Generalization

Sharpness-Aware-Minimization-TensorFlow This repository provides a minimal implementation of sharpness-aware minimization (SAM) (Sharpness-Aware Minim

Training data extraction on GPT-2

Training data extraction from GPT-2 This repository contains code for extracting training data from GPT-2, following the approach outlined in the foll

Degree-Quant: Quantization-Aware Training for Graph Neural Networks.

Degree-Quant This repo provides a clean re-implementation of the code associated with the paper Degree-Quant: Quantization-Aware Training for Graph Ne

![[CVPR 2021]](https://github.com/VITA-Group/CV_LTH_Pre-training/raw/main/Figs/Teaser.png)

[CVPR 2021] "The Lottery Tickets Hypothesis for Supervised and Self-supervised Pre-training in Computer Vision Models" Tianlong Chen, Jonathan Frankle, Shiyu Chang, Sijia Liu, Yang Zhang, Michael Carbin, Zhangyang Wang

The Lottery Tickets Hypothesis for Supervised and Self-supervised Pre-training in Computer Vision Models Codes for this paper The Lottery Tickets Hypo

A no-BS, dead-simple training visualizer for tf-keras

A no-BS, dead-simple training visualizer for tf-keras TrainingDashboard Plot inter-epoch and intra-epoch loss and metrics within a jupyter notebook wi

An Efficient Training Approach for Very Large Scale Face Recognition or F²C for simplicity.

Fast Face Classification (F²C) This is the code of our paper An Efficient Training Approach for Very Large Scale Face Recognition or F²C for simplicit

This is the research repository for Vid2Doppler: Synthesizing Doppler Radar Data from Videos for Training Privacy-Preserving Activity Recognition.

Vid2Doppler: Synthesizing Doppler Radar Data from Videos for Training Privacy-Preserving Activity Recognition This is the research repository for Vid2

Official Tensorflow implementation of "M-LSD: Towards Light-weight and Real-time Line Segment Detection"

M-LSD: Towards Light-weight and Real-time Line Segment Detection Official Tensorflow implementation of "M-LSD: Towards Light-weight and Real-time Line

simpleT5 is built on top of PyTorch-lightning⚡️ and Transformers🤗 that lets you quickly train your T5 models.

Quickly train T5 models in just 3 lines of code + ONNX support simpleT5 is built on top of PyTorch-lightning ⚡️ and Transformers 🤗 that lets you quic

tf2onnx - Convert TensorFlow, Keras and Tflite models to ONNX.

tf2onnx converts TensorFlow (tf-1.x or tf-2.x), tf.keras and tflite models to ONNX via command line or python api.

SparseML is a libraries for applying sparsification recipes to neural networks with a few lines of code, enabling faster and smaller models

SparseML is a toolkit that includes APIs, CLIs, scripts and libraries that apply state-of-the-art sparsification algorithms such as pruning and quantization to any neural network. General, recipe-driven approaches built around these algorithms enable the simplification of creating faster and smaller models for the ML performance community at large.

All course materials for the Zero to Mastery Deep Learning with TensorFlow course.

All course materials for the Zero to Mastery Deep Learning with TensorFlow course.

Implements MLP-Mixer: An all-MLP Architecture for Vision.

MLP-Mixer-CIFAR10 This repository implements MLP-Mixer as proposed in MLP-Mixer: An all-MLP Architecture for Vision. The paper introduces an all MLP (

A tensorflow implementation of GCN-LPA

GCN-LPA This repository is the implementation of GCN-LPA (arXiv): Unifying Graph Convolutional Neural Networks and Label Propagation Hongwei Wang, Jur

Unofficial TensorFlow implementation of the Keyword Spotting Transformer model

Keyword Spotting Transformer This is the unofficial TensorFlow implementation of the Keyword Spotting Transformer model. This model is used to train o

FluxTraining.jl gives you an endlessly extensible training loop for deep learning

A flexible neural net training library inspired by fast.ai

(CVPR2021) Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Kaleido-BERT: Vision-Language Pre-training on Fashion Domain Mingchen Zhuge*, Dehong Gao*, Deng-Ping Fan#, Linbo Jin, Ben Chen, Haoming Zhou, Minghui

Deep functional residue identification

DeepFRI Deep functional residue identification Citing @article {Gligorijevic2019, author = {Gligorijevic, Vladimir and Renfrew, P. Douglas and Koscio

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

Bachelor's Thesis in Computer Science: Privacy-Preserving Federated Learning Applied to Decentralized Data

federated is the source code for the Bachelor's Thesis Privacy-Preserving Federated Learning Applied to Decentralized Data (Spring 2021, NTNU) Federat

ActNN: Reducing Training Memory Footprint via 2-Bit Activation Compressed Training

ActNN : Activation Compressed Training This is the official project repository for ActNN: Reducing Training Memory Footprint via 2-Bit Activation Comp

Minimal implementation of PAWS (https://arxiv.org/abs/2104.13963) in TensorFlow.

PAWS-TF 🐾 Implementation of Semi-Supervised Learning of Visual Features by Non-Parametrically Predicting View Assignments with Support Samples (PAWS)

![[CVPR'21 Oral] Seeing Out of tHe bOx: End-to-End Pre-training for Vision-Language Representation Learning](https://github.com/researchmm/soho/raw/master/resources/soho.png)

[CVPR'21 Oral] Seeing Out of tHe bOx: End-to-End Pre-training for Vision-Language Representation Learning

Seeing Out of tHe bOx: End-to-End Pre-training for Vision-Language Representation Learning [CVPR'21, Oral] By Zhicheng Huang*, Zhaoyang Zeng*, Yupan H

Tensorflow implementation for Self-supervised Graph Learning for Recommendation

If the compilation is successful, the evaluator of cpp implementation will be called automatically. Otherwise, the evaluator of python implementation will be called.

Unsupervised Pre-training for Person Re-identification (LUPerson)

LUPerson Unsupervised Pre-training for Person Re-identification (LUPerson). The repository is for our CVPR2021 paper Unsupervised Pre-training for Per

EfficientNetV2 implementation using PyTorch

EfficientNetV2-S implementation using PyTorch Train Steps Configure imagenet path by changing data_dir in train.py python main.py --benchmark for mode

An end-to-end machine learning library to directly optimize AUC loss

LibAUC An end-to-end machine learning library for AUC optimization. Why LibAUC? Deep AUC Maximization (DAM) is a paradigm for learning a deep neural n

Contains code for the paper "Vision Transformers are Robust Learners".

Vision Transformers are Robust Learners This repository contains the code for the paper Vision Transformers are Robust Learners by Sayak Paul* and Pin

Self-training with Weak Supervision (NAACL 2021)

This repo holds the code for our weak supervision framework, ASTRA, described in our NAACL 2021 paper: "Self-Training with Weak Supervision"

A collection of Korean Text Datasets ready to use using Tensorflow-Datasets.

tfds-korean A collection of Korean Text Datasets ready to use using Tensorflow-Datasets. TensorFlow-Datasets를 이용한 한국어/한글 데이터셋 모음입니다. Dataset Catalog |

Standalone pre-training recipe with JAX+Flax

Sabertooth Sabertooth is standalone pre-training recipe based on JAX+Flax, with data pipelines implemented in Rust. It runs on CPU, GPU, and/or TPU, b

A PyTorch Lightning solution to training OpenAI's CLIP from scratch.

train-CLIP 📎 A PyTorch Lightning solution to training CLIP from scratch. Goal ⚽ Our aim is to create an easy to use Lightning implementation of OpenA

OpenVisionAPI server

🚀 Quick start An instance of ova-server is free and publicly available here: https://api.openvisionapi.com Checkout ova-client for a quick demo. Inst

(SIGIR2020) “Asymmetric Tri-training for Debiasing Missing-Not-At-Random Explicit Feedback’’

Asymmetric Tri-training for Debiasing Missing-Not-At-Random Explicit Feedback About This repository accompanies the real-world experiments conducted i

A general-purpose multi-agent training framework.

MALib A general-purpose multi-agent training framework. Installation step1: build environment conda create -n malib python==3.7 -y conda activate mali

Unofficial Implementation of MLP-Mixer in TensorFlow

mlp-mixer-tf Unofficial Implementation of MLP-Mixer [abs, pdf] in TensorFlow. Note: This project may have some bugs in it. I'm still learning how to i

Identify the emotion of multiple speakers in an Audio Segment

MevonAI - Speech Emotion Recognition

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more than just a paraphrasing model.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.