1820 Repositories

Python transformer-models Libraries

《DeepViT: Towards Deeper Vision Transformer》(2021)

DeepViT This repo is the official implementation of "DeepViT: Towards Deeper Vision Transformer". The repo is based on the timm library (https://githu

Official PyTorch implementation of MX-Font (Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Experts)

Introduction Pytorch implementation of Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Expert. | paper Song Park1

A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models

wav2vec-toolkit A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models This repository accompanies the

Source code for the GPT-2 story generation models in the EMNLP 2020 paper "STORIUM: A Dataset and Evaluation Platform for Human-in-the-Loop Story Generation"

Storium GPT-2 Models This is the official repository for the GPT-2 models described in the EMNLP 2020 paper [STORIUM: A Dataset and Evaluation Platfor

Code for the Convolutional Vision Transformer (ConViT)

ConViT : Vision Transformers with Convolutional Inductive Biases This repository contains PyTorch code for ConViT. It builds on code from the Data-Eff

Code, Data and Demo for Paper: Controllable Generation from Pre-trained Language Models via Inverse Prompting

InversePrompting Paper: Controllable Generation from Pre-trained Language Models via Inverse Prompting Code: The code is provided in the "chinese_ip"

Official code for the CVPR 2021 paper "How Well Do Self-Supervised Models Transfer?"

How Well Do Self-Supervised Models Transfer? This repository hosts the code for the experiments in the CVPR 2021 paper How Well Do Self-Supervised Mod

Transformer related optimization, including BERT, GPT

This repository provides a script and recipe to run the highly optimized transformer-based encoder and decoder component, and it is tested and maintained by NVIDIA.

(ImageNet pretrained models) The official pytorch implemention of the TPAMI paper "Res2Net: A New Multi-scale Backbone Architecture"

Res2Net The official pytorch implemention of the paper "Res2Net: A New Multi-scale Backbone Architecture" Our paper is accepted by IEEE Transactions o

Top2Vec is an algorithm for topic modeling and semantic search.

Top2Vec is an algorithm for topic modeling and semantic search. It automatically detects topics present in text and generates jointly embedded topic, document and word vectors.

Code to reproduce the experiments in the paper "Transformer Based Multi-Source Domain Adaptation" (EMNLP 2020)

Transformer Based Multi-Source Domain Adaptation Dustin Wright and Isabelle Augenstein To appear in EMNLP 2020. Read the preprint: https://arxiv.org/a

Neural models of common sense. 🤖

Unicorn on Rainbow Neural models of common sense. This repository is for the paper: Unicorn on Rainbow: A Universal Commonsense Reasoning Model on a N

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''.

P-tuning A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''. How to use our code We have released the code

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Learning Spatio-Temporal Transformer for Visual Tracking

STARK The official implementation of the paper Learning Spatio-Temporal Transformer for Visual Tracking Hiring research interns for visual transformer

This is the official PyTorch implementation of the paper "TransFG: A Transformer Architecture for Fine-grained Recognition" (Ju He, Jie-Neng Chen, Shuai Liu, Adam Kortylewski, Cheng Yang, Yutong Bai, Changhu Wang, Alan Yuille).

TransFG: A Transformer Architecture for Fine-grained Recognition Official PyTorch code for the paper: TransFG: A Transformer Architecture for Fine-gra

Implementation of the Swin Transformer in PyTorch.

Swin Transformer - PyTorch Implementation of the Swin Transformer architecture. This paper presents a new vision Transformer, called Swin Transformer,

TransReID: Transformer-based Object Re-Identification

TransReID: Transformer-based Object Re-Identification [arxiv] The official repository for TransReID: Transformer-based Object Re-Identification achiev

Viewflow is an Airflow-based framework that allows data scientists to create data models without writing Airflow code.

Viewflow Viewflow is a framework built on the top of Airflow that enables data scientists to create materialized views. It allows data scientists to f

This is the official PyTorch implementation of the paper "TransFG: A Transformer Architecture for Fine-grained Recognition" (Ju He, Jie-Neng Chen, Shuai Liu, Adam Kortylewski, Cheng Yang, Yutong Bai, Changhu Wang, Alan Yuille).

TransFG: A Transformer Architecture for Fine-grained Recognition Official PyTorch code for the paper: TransFG: A Transformer Architecture for Fine-gra

Learning Spatio-Temporal Transformer for Visual Tracking

STARK The official implementation of the paper Learning Spatio-Temporal Transformer for Visual Tracking Highlights The strongest performances Tracker

Automated Machine Learning Pipeline with Feature Engineering and Hyper-Parameters Tuning

The mljar-supervised is an Automated Machine Learning Python package that works with tabular data. I

A probabilistic programming library for Bayesian deep learning, generative models, based on Tensorflow

ZhuSuan is a Python probabilistic programming library for Bayesian deep learning, which conjoins the complimentary advantages of Bayesian methods and

A probabilistic programming language in TensorFlow. Deep generative models, variational inference.

Edward is a Python library for probabilistic modeling, inference, and criticism. It is a testbed for fast experimentation and research with probabilis

Describing statistical models in Python using symbolic formulas

Patsy is a Python library for describing statistical models (especially linear models, or models that have a linear component) and building design mat

A Python package for Bayesian forecasting with object-oriented design and probabilistic models under the hood.

Disclaimer This project is stable and being incubated for long-term support. It may contain new experimental code, for which APIs are subject to chang

Python Library for learning (Structure and Parameter) and inference (Statistical and Causal) in Bayesian Networks.

pgmpy pgmpy is a python library for working with Probabilistic Graphical Models. Documentation and list of algorithms supported is at our official sit

Fast, flexible and easy to use probabilistic modelling in Python.

Please consider citing the JMLR-MLOSS Manuscript if you've used pomegranate in your academic work! pomegranate is a package for building probabilistic

Hidden Markov Models in Python, with scikit-learn like API

hmmlearn hmmlearn is a set of algorithms for unsupervised learning and inference of Hidden Markov Models. For supervised learning learning of HMMs and

Scikit-learn compatible estimation of general graphical models

skggm : Gaussian graphical models using the scikit-learn API In the last decade, learning networks that encode conditional independence relationships

Differentiable SDE solvers with GPU support and efficient sensitivity analysis.

PyTorch Implementation of Differentiable SDE Solvers This library provides stochastic differential equation (SDE) solvers with GPU support and efficie

An implementation of Performer, a linear attention-based transformer, in Pytorch

Performer - Pytorch An implementation of Performer, a linear attention-based transformer variant with a Fast Attention Via positive Orthogonal Random

Pretrained EfficientNet, EfficientNet-Lite, MixNet, MobileNetV3 / V2, MNASNet A1 and B1, FBNet, Single-Path NAS

(Generic) EfficientNets for PyTorch A 'generic' implementation of EfficientNet, MixNet, MobileNetV3, etc. that covers most of the compute/parameter ef

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

Rembg Video Virtual Green Screen Edition

Rembg Virtual Greenscreen Edition is a tool to create a green screen matte for videos

Decentralized deep learning in PyTorch. Built to train models on thousands of volunteers across the world.

Hivemind: decentralized deep learning in PyTorch Hivemind is a PyTorch library to train large neural networks across the Internet. Its intended usage

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

AtsPy: Automated Time Series Models in Python (by @firmai)

Automated Time Series Models in Python (AtsPy) SSRN Report Easily develop state of the art time series models to forecast univariate data series. Simp

Automatically build ARIMA, SARIMAX, VAR, FB Prophet and XGBoost Models on Time Series data sets with a Single Line of Code. Now updated with Dask to handle millions of rows.

Auto_TS: Auto_TimeSeries Automatically build multiple Time Series models using a Single Line of Code. Now updated with Dask. Auto_timeseries is a comp

A python library for easy manipulation and forecasting of time series.

Time Series Made Easy in Python darts is a python library for easy manipulation and forecasting of time series. It contains a variety of models, from

A statistical library designed to fill the void in Python's time series analysis capabilities, including the equivalent of R's auto.arima function.

pmdarima Pmdarima (originally pyramid-arima, for the anagram of 'py' + 'arima') is a statistical library designed to fill the void in Python's time se

ARCH models in Python

arch Autoregressive Conditional Heteroskedasticity (ARCH) and other tools for financial econometrics, written in Python (with Cython and/or Numba used

Qlib is an AI-oriented quantitative investment platform, which aims to realize the potential, empower the research, and create the value of AI technologies in quantitative investment. With Qlib, you can easily try your ideas to create better Quant investment strategies.

Qlib is an AI-oriented quantitative investment platform, which aims to realize the potential, empower the research, and create the value of AI technol

git《Self-Attention Attribution: Interpreting Information Interactions Inside Transformer》(AAAI 2021) GitHub:

Self-Attention Attribution This repository contains the implementation for AAAI-2021 paper Self-Attention Attribution: Interpreting Information Intera

Source code, datasets and trained models for the paper Learning Advanced Mathematical Computations from Examples (ICLR 2021), by François Charton, Amaury Hayat (ENPC-Rutgers) and Guillaume Lample

Maths from examples - Learning advanced mathematical computations from examples This is the source code and data sets relevant to the paper Learning a

Diverse Image Captioning with Context-Object Split Latent Spaces (NeurIPS 2020)

Diverse Image Captioning with Context-Object Split Latent Spaces This repository is the PyTorch implementation of the paper: Diverse Image Captioning

a general-purpose Transformer based vision backbone

Swin Transformer By Ze Liu*, Yutong Lin*, Yue Cao*, Han Hu*, Yixuan Wei, Zheng Zhang, Stephen Lin and Baining Guo. This repo is the official implement

CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent performances in several models such as Faster R-CNN, YOLOv4, RetinaNet and . There is a maximum AP improvement of 1.9% and an average AP of 0.8% improvement on MS COCO dataset, compared to traditional evaluation-feedback modules. Here we just use as an example to illustrate the code.

CDIoU-CDIoUloss CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent p

⚡ boost inference speed of T5 models by 5x & reduce the model size by 3x using fastT5.

Reduce T5 model size by 3X and increase the inference speed up to 5X. Install Usage Details Functionalities Benchmarks Onnx model Quantized onnx model

git git《Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking》(CVPR 2021) GitHub:git2] 《Masksembles for Uncertainty Estimation》(CVPR 2021) GitHub:git3]

Transformer Meets Tracker: Exploiting Temporal Context for Robust Visual Tracking Ning Wang, Wengang Zhou, Jie Wang, and Houqiang Li Accepted by CVPR

Monocular Depth Estimation - Weighted-average prediction from multiple pre-trained depth estimation models

merged_depth runs (1) AdaBins, (2) DiverseDepth, (3) MiDaS, (4) SGDepth, and (5) Monodepth2, and calculates a weighted-average per-pixel absolute dept

Repository providing a wide range of self-supervised pretrained models for computer vision tasks.

Hierarchical Pretraining: Research Repository This is a research repository for reproducing the results from the project "Self-supervised pretraining

Rotary Transformer

Rotary Transformer Rotary Transformer,简称RoFormer,是我们自研的语言模型之一,主要是为Transformer结构设计了新的旋转式位置编码(Rotary Position Embedding,RoPE)。RoPE具有良好的理论性质,且是目前唯一一种可以应用

This repository lets you train neural networks models for performing end-to-end full-page handwriting recognition using the Apache MXNet deep learning frameworks on the IAM Dataset.

Handwritten Text Recognition (OCR) with MXNet Gluon These notebooks have been created by Jonathan Chung, as part of his internship as Applied Scientis

Adaptive Attention Span for Reinforcement Learning

Adaptive Transformers in RL Official implementation of Adaptive Transformers in RL In this work we replicate several results from Stabilizing Transfor

Code for SentiBERT: A Transferable Transformer-Based Architecture for Compositional Sentiment Semantics (ACL'2020).

SentiBERT Code for SentiBERT: A Transferable Transformer-Based Architecture for Compositional Sentiment Semantics (ACL'2020). https://arxiv.org/abs/20

TensorFlow code for the neural network presented in the paper: "Structural Language Models of Code" (ICML'2020)

SLM: Structural Language Models of Code This is an official implementation of the model described in: "Structural Language Models of Code" [PDF] To ap

中文语音识别系列,读者可以借助它快速训练属于自己的中文语音识别模型,或直接使用预训练模型测试效果。

MASR中文语音识别(pytorch版) 开箱即用 自行训练 使用与训练分离(增量训练) 识别率高 说明:因为每个人电脑机器不同,而且有些安装包安装起来比较麻烦,强烈建议直接用我编译好的docker环境跑 目前docker基础环境为ubuntu-cuda10.1-cudnn7-pytorch1.6.

Transformer Tracking (CVPR2021)

TransT - Transformer Tracking [CVPR2021] Official implementation of the TransT (CVPR2021) , including training code and trained models. We are revisin

(under submission) Bayesian Integration of a Generative Prior for Image Restoration

BIGPrior: Towards Decoupling Learned Prior Hallucination and Data Fidelity in Image Restoration Authors: Majed El Helou, and Sabine Süsstrunk {Note: p

Open source code for Paper "A Co-Interactive Transformer for Joint Slot Filling and Intent Detection"

A Co-Interactive Transformer for Joint Slot Filling and Intent Detection This repository contains the PyTorch implementation of the paper: A Co-Intera

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

Deep generative modeling for time-stamped heterogeneous data, enabling high-fidelity models for a large variety of spatio-temporal domains.

Neural Spatio-Temporal Point Processes [arxiv] Ricky T. Q. Chen, Brandon Amos, Maximilian Nickel Abstract. We propose a new class of parameterizations

Model parallel transformers in Jax and Haiku

Mesh Transformer Jax A haiku library using the new(ly documented) xmap operator in Jax for model parallelism of transformers. See enwik8_example.py fo

🦉Data Version Control | Git for Data & Models

Website • Docs • Blog • Twitter • Chat (Community & Support) • Tutorial • Mailing List Data Version Control or DVC is an open-source tool for data sci

This is the code for HOI Transformer

HOI Transformer Code for CVPR 2021 accepted paper End-to-End Human Object Interaction Detection with HOI Transformer. Reproduction We recomend you to

CoTr: Efficiently Bridging CNN and Transformer for 3D Medical Image Segmentation

CoTr: Efficient 3D Medical Image Segmentation by bridging CNN and Transformer This is the official pytorch implementation of the CoTr: Paper: CoTr: Ef

Code for ICLR 2021 Paper, "Anytime Sampling for Autoregressive Models via Ordered Autoencoding"

Anytime Autoregressive Model Anytime Sampling for Autoregressive Models via Ordered Autoencoding , ICLR 21 Yilun Xu, Yang Song, Sahaj Gara, Linyuan Go

This repo provides the official code for TransBTS: Multimodal Brain Tumor Segmentation Using Transformer (https://arxiv.org/pdf/2103.04430.pdf).

TransBTS: Multimodal Brain Tumor Segmentation Using Transformer This repo is the official implementation for TransBTS: Multimodal Brain Tumor Segmenta

Beyond the Imitation Game collaborative benchmark for enormous language models

BIG-bench 🪑 The Beyond the Imitation Game Benchmark (BIG-bench) will be a collaborative benchmark intended to probe large language models, and extrap

本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。

【关于 NLP】那些你不知道的事 作者:杨夕、芙蕖、李玲、陈海顺、twilight、LeoLRH、JimmyDU、艾春辉、张永泰、金金金 介绍 本项目是作者们根据个人面试和经验总结出的自然语言处理(NLP)面试准备的学习笔记与资料,该资料目前包含 自然语言处理各领域的 面试题积累。 目录架构 一、【

Scripts of Machine Learning Algorithms from Scratch. Implementations of machine learning models and algorithms using nothing but NumPy with a focus on accessibility. Aims to cover everything from basic to advance.

Algo-ScriptML Python implementations of some of the fundamental Machine Learning models and algorithms from scratch. The goal of this project is not t

Django project starter on steroids: quickly create a Django app AND generate source code for data models + REST/GraphQL APIs (the generated code is auto-linted and has 100% test coverage).

Create Django App 💛 We're a Django project starter on steroids! One-line command to create a Django app with all the dependencies auto-installed AND

Code associated with the "Data Augmentation using Pre-trained Transformer Models" paper

Data Augmentation using Pre-trained Transformer Models Code associated with the Data Augmentation using Pre-trained Transformer Models paper Code cont

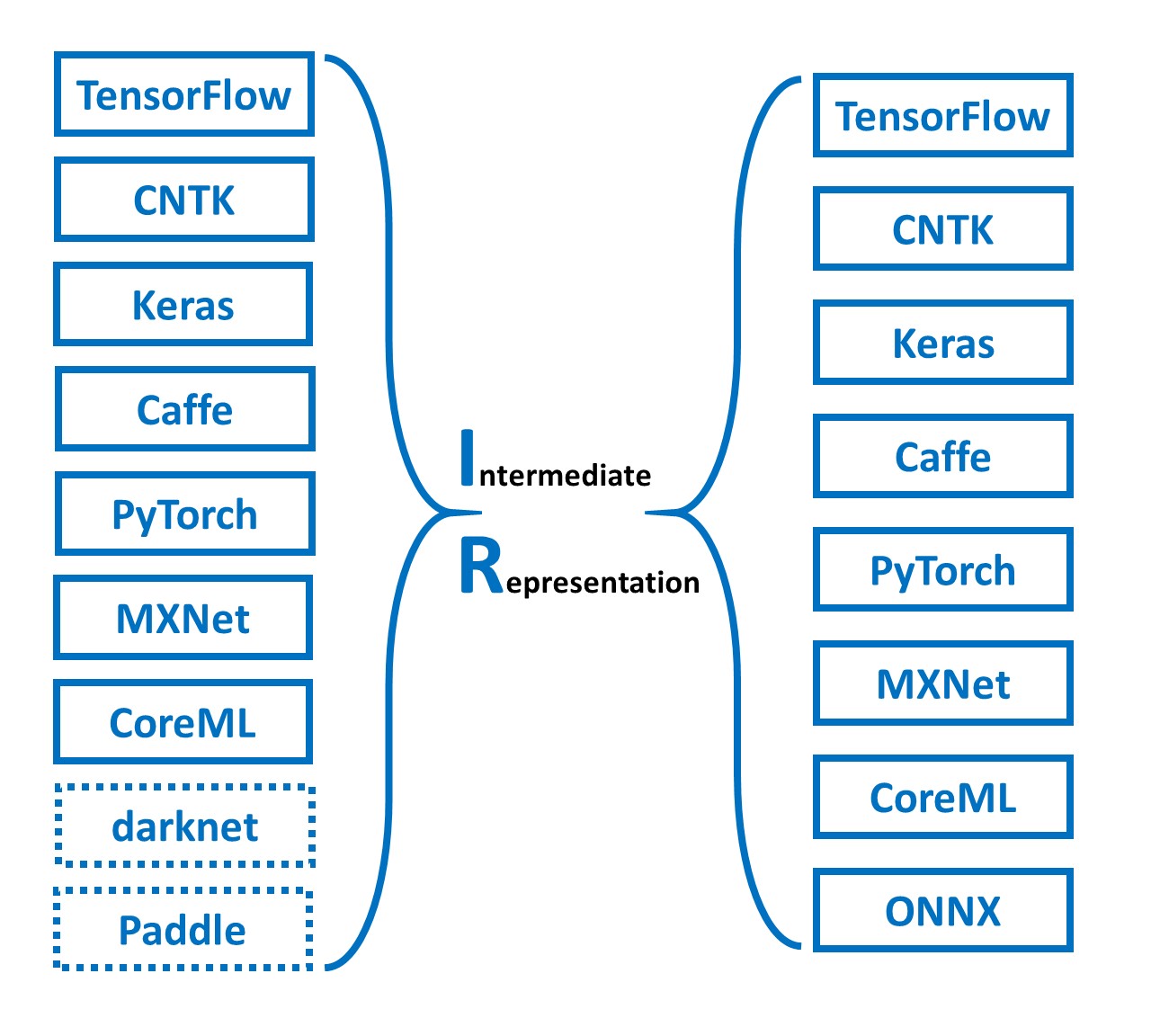

MMdnn is a set of tools to help users inter-operate among different deep learning frameworks. E.g. model conversion and visualization. Convert models between Caffe, Keras, MXNet, Tensorflow, CNTK, PyTorch Onnx and CoreML.

MMdnn MMdnn is a comprehensive and cross-framework tool to convert, visualize and diagnose deep learning (DL) models. The "MM" stands for model manage

A library for hidden semi-Markov models with explicit durations

hsmmlearn hsmmlearn is a library for unsupervised learning of hidden semi-Markov models with explicit durations. It is a port of the hsmm package for

Scikit-learn compatible estimation of general graphical models

skggm : Gaussian graphical models using the scikit-learn API In the last decade, learning networks that encode conditional independence relationships

Visualizer for neural network, deep learning, and machine learning models

Netron is a viewer for neural network, deep learning and machine learning models. Netron supports ONNX (.onnx, .pb, .pbtxt), Keras (.h5, .keras), Tens

Interpretability and explainability of data and machine learning models

AI Explainability 360 (v0.2.1) The AI Explainability 360 toolkit is an open-source library that supports interpretability and explainability of datase

FairML - is a python toolbox auditing the machine learning models for bias.

======== FairML: Auditing Black-Box Predictive Models FairML is a python toolbox auditing the machine learning models for bias. Description Predictive

Algorithms for monitoring and explaining machine learning models

Alibi is an open source Python library aimed at machine learning model inspection and interpretation. The focus of the library is to provide high-qual

Ludwig is a toolbox that allows to train and evaluate deep learning models without the need to write code.

Translated in 🇰🇷 Korean/ Ludwig is a toolbox that allows users to train and test deep learning models without the need to write code. It is built on

📝 Wrapper library for text generation / language models at char and word level with RNN in TensorFlow

tensorlm Generate Shakespeare poems with 4 lines of code. Installation tensorlm is written in / for Python 3.4+ and TensorFlow 1.1+ pip3 install tenso

Datasets, Transforms and Models specific to Computer Vision

torchvision The torchvision package consists of popular datasets, model architectures, and common image transformations for computer vision. Installat

Probabilistic programming framework that facilitates objective model selection for time-varying parameter models.

Time series analysis today is an important cornerstone of quantitative science in many disciplines, including natural and life sciences as well as eco

![[HELP REQUESTED] Generalized Additive Models in Python](https://github.com/dswah/pyGAM/raw/master/imgs/pygam_tensor.png)

[HELP REQUESTED] Generalized Additive Models in Python

pyGAM Generalized Additive Models in Python. Documentation Official pyGAM Documentation: Read the Docs Building interpretable models with Generalized

Highly interpretable classifiers for scikit learn, producing easily understood decision rules instead of black box models

Highly interpretable, sklearn-compatible classifier based on decision rules This is a scikit-learn compatible wrapper for the Bayesian Rule List class

50% faster, 50% less RAM Machine Learning. Numba rewritten Sklearn. SVD, NNMF, PCA, LinearReg, RidgeReg, Randomized, Truncated SVD/PCA, CSR Matrices all 50+% faster

[Due to the time taken @ uni, work + hell breaking loose in my life, since things have calmed down a bit, will continue commiting!!!] [By the way, I'm

OpenChat: Opensource chatting framework for generative models

OpenChat is opensource chatting framework for generative models.

Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image classification, in Pytorch

Transformer in Transformer Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image c

Implementation of E(n)-Transformer, which extends the ideas of Welling's E(n)-Equivariant Graph Neural Network to attention

E(n)-Equivariant Transformer (wip) Implementation of E(n)-Equivariant Transformer, which extends the ideas from Welling's E(n)-Equivariant G

A Python library that helps data scientists to infer causation rather than observing correlation.

A Python library that helps data scientists to infer causation rather than observing correlation.

A wiki system with complex functionality for simple integration and a superb interface. Store your knowledge with style: Use django models.

django-wiki Django support The below table explains which Django versions are supported. Release Django Upgrade from 0.7.x 2.2, 3.0, 3.1 0.5 or 0.6 0.

🦉Data Version Control | Git for Data & Models

Website • Docs • Blog • Twitter • Chat (Community & Support) • Tutorial • Mailing List Data Version Control or DVC is an open-source tool for data sci

Admin Panel for GinoORM - ready to up & run (just add your models)

Gino-Admin Docs (state: in process): Gino-Admin docs Play with Demo (current master 0.2.3) Gino-Admin demo (login: admin, pass: 1234) Admin

FastAPI Skeleton App to serve machine learning models production-ready.

FastAPI Model Server Skeleton Serving machine learning models production-ready, fast, easy and secure powered by the great FastAPI by Sebastián Ramíre

Pytorch Code for "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation"

Medical-Transformer Pytorch Code for the paper "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation" About this repo: This repo

Release for Improved Denoising Diffusion Probabilistic Models

improved-diffusion This is the codebase for Improved Denoising Diffusion Probabilistic Models. Usage This section of the README walks through how to t